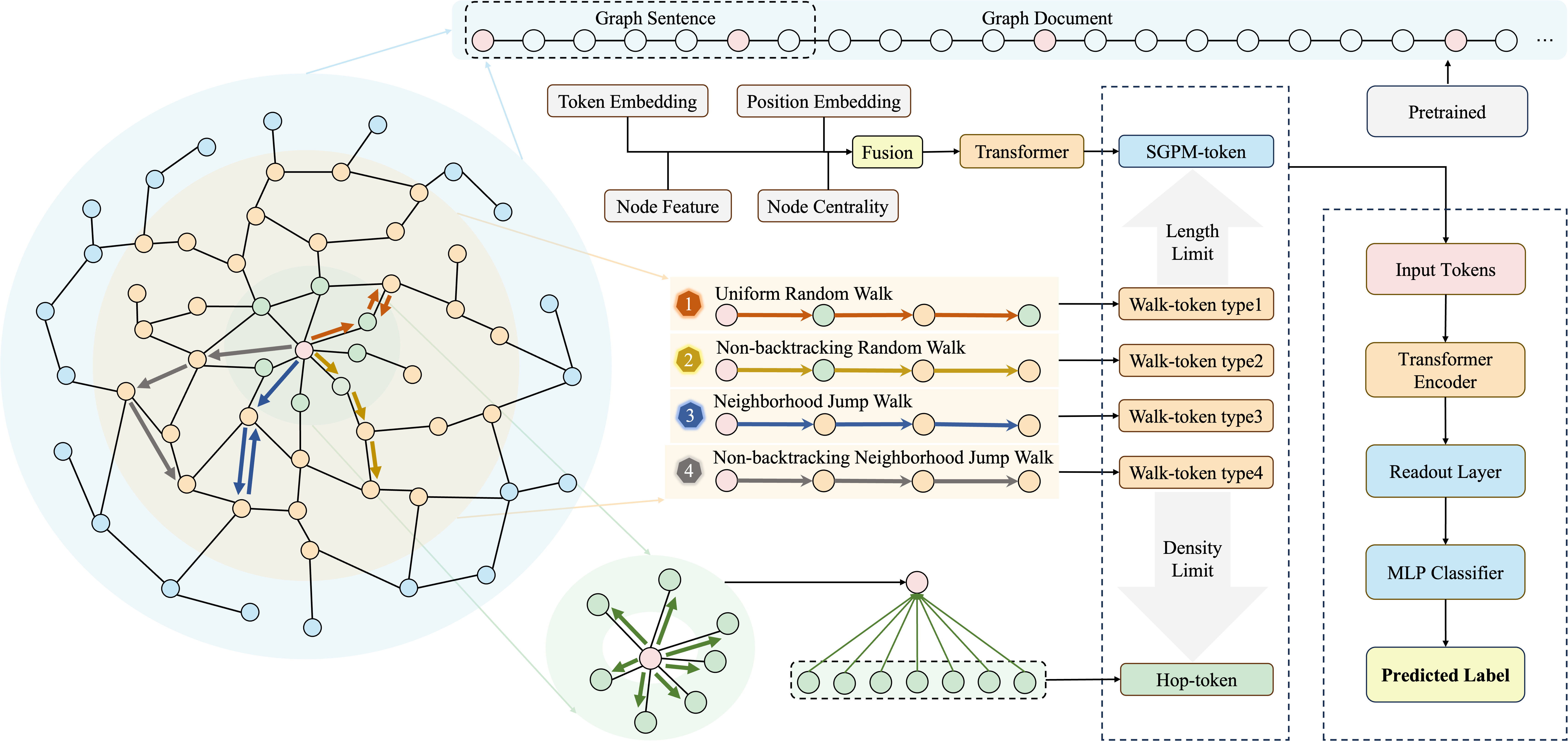

Tokenphormer is a novel graph transformer model designed to address limitations in traditional Graph Neural Networks (GNNs) and Graph Transformers by utilizing multi-token generation. This approach captures both local and global information while retaining graph structural context.

The full version (with appendix) is online now: Tokenphormer.

Graph Neural Networks (GNNs) are commonly used for graph data mining tasks, but they face issues like over-smoothing and over-squashing, limiting their receptive field. Graph Transformers, on the other hand, offer a global receptive field but suffer from noisy, irrelevant nodes and a loss of structural information.

Tokenphormer overcomes these challenges by generating multiple tokens at different levels of granularity, ensuring both local and global information are captured. The model is inspired by fine-grained token-based representation learning from Natural Language Processing (NLP).

Below is an illustration of the Tokenphormer framework:

To set up the environment for Tokenphormer and SGPM, follow these steps:

- Clone the repository: `git clone https://github.com/your-username/tokenphormer.git`, then `cd tokenphormer`.

- Install dependencies. The project provides environment files to help set up the required dependencies for both Tokenphormer and SGPM.
  - Go to the `Envs` folder and select the appropriate `.yaml` file for your environment.
  - Create a conda environment with the following command: `conda env create -f Envs/tokenphormer_env.yaml`. Adjust the environment file name as needed (for example, `sgpm_env.yaml` if you're setting up SGPM).
  - Then activate the environment: `conda activate tokenphormer_env`.

- Navigate to the SGPM directory: `cd SGPM`.

- Set the dataset you want to use by editing `pretraining_args.py`.

- Run SGPM: `sh run.sh`.

This will pre-train the graph model using SGPM. Once the pre-training is complete, you will need to move the generated files to the Tokenphormer directory.
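Before moving on, it can help to confirm that pre-training actually produced the files Tokenphormer needs. The following is a minimal sketch, not part of the repository: `check_sgpm_outputs` is a hypothetical helper, and it assumes that the `dataset` component in the output paths listed in this README stands for the dataset's directory name and that the paths are relative to the repository root.

```shell
# Sketch (hypothetical helper, not shipped with the repository):
# report which expected SGPM output files are missing.
#   $1 = dataset directory name (assumed to replace "dataset" in the paths)
#   $2 = repository root containing SGPM/ (default: current directory)
check_sgpm_outputs() {
    dataset="$1"
    root="${2:-.}"
    missing=0
    for f in \
        "SGPM/PRETRAINED_MODELS/$dataset/pytorch_model.bin" \
        "SGPM/TOKENIZED_GRAPH_DATESET/$dataset/bert_config.json" \
        "SGPM/TOKENIZED_GRAPH_DATESET/$dataset/vocab.txt"
    do
        if [ ! -f "$root/$f" ]; then
            echo "missing: $root/$f" >&2
            missing=1
        fi
    done
    # Exit status 0 if every file exists, 1 otherwise.
    return "$missing"
}
```

From the repository root, `check_sgpm_outputs <dataset>` prints any missing paths to standard error and returns a non-zero status if at least one file is absent.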

- Navigate to the Tokenphormer directory: `cd Tokenphormer`.

- Set the dataset you want to use by editing `run.sh`.

- Use SGPM-generated tokens. If you want to run Tokenphormer with SGPM tokens, move the relevant files generated by SGPM into the Tokenphormer directory:
  - From `SGPM/PRETRAINED_MODELS/dataset/pytorch_model.bin` to `Tokenphormer/GraphPretrainedModel/dataset/pytorch_model.bin`
  - From `SGPM/TOKENIZED_GRAPH_DATESET/dataset/bert_config.json` to `Tokenphormer/GraphPretrainedModel/dataset/config.json`
  - From `SGPM/TOKENIZED_GRAPH_DATESET/dataset/vocab.txt` to `Tokenphormer/GraphPretrainedModel/dataset/vocab.txt`

- Run Tokenphormer: `sh run.sh`.

This will train Tokenphormer using the SGPM pre-trained model and tokens.
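The three file transfers from SGPM into Tokenphormer can be scripted. The sketch below is an illustration under stated assumptions rather than a script from the repository: `copy_sgpm_files` is a hypothetical helper, the `dataset` path component is assumed to be the dataset's directory name, and the function copies instead of moving so the SGPM outputs stay in place. Note that `bert_config.json` is renamed to `config.json` at the destination, as the steps above require.

```shell
# Sketch (hypothetical helper, not shipped with the repository):
# copy SGPM outputs into the layout Tokenphormer expects.
#   $1 = dataset directory name (assumed to replace "dataset" in the paths)
#   $2 = repository root containing SGPM/ and Tokenphormer/ (default: .)
copy_sgpm_files() {
    dataset="$1"
    root="${2:-.}"
    src_model="$root/SGPM/PRETRAINED_MODELS/$dataset"
    src_tokens="$root/SGPM/TOKENIZED_GRAPH_DATESET/$dataset"
    dest="$root/Tokenphormer/GraphPretrainedModel/$dataset"

    # Create the destination directory if it does not exist yet.
    mkdir -p "$dest" || return 1

    # Pre-trained weights keep their file name.
    cp "$src_model/pytorch_model.bin" "$dest/pytorch_model.bin" || return 1
    # The BERT config is renamed to config.json at the destination.
    cp "$src_tokens/bert_config.json" "$dest/config.json" || return 1
    # The vocabulary keeps its file name.
    cp "$src_tokens/vocab.txt" "$dest/vocab.txt" || return 1
}
```

Run from the repository root, `copy_sgpm_files <dataset>` stops at the first file that cannot be copied and returns a non-zero status, so a failed pre-training run is noticed before `sh run.sh` is started in the Tokenphormer directory.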

If you find Tokenphormer useful for your research, please cite our paper.

This project is licensed under the MIT License.

We would like to thank the authors of the following works for their contributions to the field:

- SGPM: Modified from Meelfy/pytorch_pretrained_BERT.

- Tokenphormer: Modified from JHL-HUST/NAGphormer.