Arena is a general evaluation platform for multi-agent intelligence. As part of the Arena project, this repository provides implementations of state-of-the-art deep multi-agent reinforcement learning baselines. More resources can be found in Arena Home. If you use this repository to conduct research, we kindly ask that you cite the paper as a reference.

We welcome suggestions and pull requests from the community to make Arena a better platform. To contribute to the project, join us on Slack and check the To Do list on Trello.

Follow our short and simple tutorials in Tutorials: Baselines, which should get you ready in minutes.

To install the requirements, run:

# For users behind the Great Firewall of China

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/free/

conda config --add channels https://mirrors.tuna.tsinghua.edu.cn/anaconda/pkgs/main/

conda config --set show_channel_urls yes

pip install pip -U

pip config set global.index-url https://pypi.tuna.tsinghua.edu.cn/simple

# Create a virtual environment

conda create -n Arena python=3.6.7 -y

source activate Arena

# PyTorch

pip install --upgrade torch torchvision

# TensorFlow

pip install --upgrade tensorflow

# Baselines for Atari preprocessing

git clone https://github.com/openai/baselines.git

cd baselines

pip install -e .

cd ..

# ML-Agents

git clone https://github.com/Unity-Technologies/ml-agents.git

cd ml-agents

cd ml-agents

pip install -e .

cd ..

cd gym-unity

pip install -e .

cd ..

cd ..

# Clone code

mkdir Arena

cd Arena

git clone https://github.com/YuhangSong/Arena-4.git

cd Arena-4

# Other requirements

pip install -r requirements.txt

If you run into one of the following situations,

- you are running on a remote server without a GUI (X-Server).

- your machine has an X-Server, but it does not belong to (was not started by) your account, so you cannot access it.

- if neither of the above applies, i.e., you are running things on your own desktop, skip this section.

follow the guidelines below to set up a virtual display. Otherwise, skip ahead to running the code. Note that if you have an X-Server that belongs to your account, you can simply start multiple windows held by a TMUX session while you are on the machine's GUI desktop (this way, the windows have access to the X-Server). After that, connect to the machine remotely and attach to the session (windows) you started on the GUI desktop.

# Install Xorg

sudo apt-get update

sudo apt-get install -y xserver-xorg mesa-utils

sudo nvidia-xconfig -a --use-display-device=None --virtual=1280x1024

# Get the BusID information

nvidia-xconfig --query-gpu-info

# Add the BusID information to your /etc/X11/xorg.conf file

sudo sed -i 's/ BoardName "GeForce GTX TITAN X"/ BoardName "GeForce GTX TITAN X"\n BusID "0:30:0"/g' /etc/X11/xorg.conf

# Remove the Section "Files" from the /etc/X11/xorg.conf file

# And remove two lines that contain Section "Files" and EndSection

sudo vim /etc/X11/xorg.conf

# Download and install the latest Nvidia driver for Ubuntu

# Please refer to http://download.nvidia.com/XFree86/Linux-x86_64/latest.txt

wget http://download.nvidia.com/XFree86/Linux-x86_64/390.87/NVIDIA-Linux-x86_64-390.87.run

sudo /bin/bash ./NVIDIA-Linux-x86_64-390.87.run --accept-license --no-questions --ui=none

# Disable Nouveau as it will clash with the Nvidia driver

sudo echo 'blacklist nouveau' | sudo tee -a /etc/modprobe.d/blacklist.conf

sudo echo 'options nouveau modeset=0' | sudo tee -a /etc/modprobe.d/blacklist.conf

sudo echo options nouveau modeset=0 | sudo tee -a /etc/modprobe.d/nouveau-kms.conf

sudo update-initramfs -u

sudo reboot now

Kill Xorg using one of the following three ways (different ways may work on different Linux versions):

- Run

ps aux | grep -ie Xorg | awk '{print "sudo kill -9 " $2}'

and then run the output of this command.

- Run

sudo killall Xorg

- Run

sudo init 3

Start the virtual display with

sudo ls

sudo /usr/bin/X :0 &

You should see the virtual display start successfully, with output like:

X.Org X Server 1.19.5

Release Date: 2017-10-12

X Protocol Version 11, Revision 0

Build Operating System: Linux 4.4.0-97-generic x86_64 Ubuntu

Current Operating System: Linux W5 4.13.0-46-generic #51-Ubuntu SMP Tue Jun 12 12:36:29 UTC 2018 x86_64

Kernel command line: BOOT_IMAGE=/boot/vmlinuz-4.13.0-46-generic.efi.signed root=UUID=5fdb5e18-f8ee-4762-a53b-e58d2b663df1 ro quiet splash nomodeset acpi=noirq thermal.off=1 vt.handoff=7

Build Date: 15 October 2017 05:51:19PM

xorg-server 2:1.19.5-0ubuntu2 (For technical support please see http://www.ubuntu.com/support)

Current version of pixman: 0.34.0

Before reporting problems, check http://wiki.x.org

to make sure that you have the latest version.

Markers: (--) probed, (**) from config file, (==) default setting,

(++) from command line, (!!) notice, (II) informational,

(WW) warning, (EE) error, (NI) not implemented, (??) unknown.

(==) Log file: "/var/log/Xorg.0.log", Time: Fri Jun 14 01:18:40 2019

(==) Using config file: "/etc/X11/xorg.conf"

(==) Using system config directory "/usr/share/X11/xorg.conf.d"

If you see errors, go back to the step that kills Xorg and try another way.

Before running Arena-Baselines in a new window, run the following command to attach the virtual display to that window:

export DISPLAY=:0
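When training is launched from a script rather than an interactive shell, the same setting can be passed through the child process environment. The following is a minimal Python sketch, assuming the virtual display is `:0` as above. `env_with_display` is a hypothetical helper for illustration and is not part of Arena-Baselines.

```python
import os


def env_with_display(base_env=None, display=":0"):
    """Return a copy of the environment with DISPLAY set to the virtual display."""
    # Copy so that the caller's environment is left untouched.
    env = dict(os.environ if base_env is None else base_env)
    env["DISPLAY"] = display
    return env


if __name__ == "__main__":
    # The result can be passed as env=... to subprocess.run when launching training.
    print(env_with_display()["DISPLAY"])
```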

Create a TMUX session (if the machine is a server you connect to via SSH) and enter the virtual environment:

tmux new-session -s Arena

source activate Arena

Games:

- ArenaCrawler-Example-v2-Continuous

- ArenaCrawlerMove-2T1P-v1-Continuous

- ArenaCrawlerRush-2T1P-v1-Continuous

- ArenaCrawlerPush-2T1P-v1-Continuous

- ArenaWalkerMove-2T1P-v1-Continuous

- Crossroads-2T1P-v1-Continuous

- Crossroads-2T2P-v1-Continuous

- ArenaCrawlerPush-2T2P-v1-Continuous

- RunToGoal-2T1P-v1-Continuous

- Sumo-2T1P-v1-Continuous

- YouShallNotPass-Dense-2T1P-v1-Continuous

Commands (replace GAME_NAME with one of the games above):

CUDA_VISIBLE_DEVICES=0 python main.py --mode train --env-name GAME_NAME --obs-type visual --num-frame-stack 4 --recurrent-brain --normalize-obs --trainer ppo --use-gae --lr 3e-4 --value-loss-coef 0.5 --ppo-epoch 10 --num-processes 16 --num-steps 2048 --num-mini-batch 16 --use-linear-lr-decay --entropy-coef 0 --gamma 0.995 --tau 0.95 --num-env-steps 100000000 --reload-playing-agents-principle OpenAIFive --vis --vis-interval 1 --log-interval 1 --num-eval-episodes 10 --arena-start-index 31969 --aux 0

Games:

- Crossroads-2T1P-v1-Discrete

- FighterNoTurn-2T1P-v1-Discrete

- FighterFull-2T1P-v1-Discrete

- Soccer-2T1P-v1-Discrete

- BlowBlow-2T1P-v1-Discrete

- Boomer-2T1P-v1-Discrete

- Gunner-2T1P-v1-Discrete

- Maze2x2Gunner-2T1P-v1-Discrete

- Maze3x3Gunner-2T1P-v1-Discrete

- Maze3x3Gunner-PenalizeTie-2T1P-v1-Discrete

- Barrier4x4Gunner-2T1P-v1-Discrete

- Soccer-2T2P-v1-Discrete

- BlowBlow-2T2P-v1-Discrete

- BlowBlow-Dense-2T2P-v1-Discrete

- Tennis-2T1P-v1-Discrete

- Tank-FP-2T1P-v1-Discrete

- BlowBlow-Dense-2T1P-v1-Discrete

Commands (replace GAME_NAME with one of the games above):

CUDA_VISIBLE_DEVICES=0 python main.py --mode train --env-name GAME_NAME --obs-type visual --num-frame-stack 4 --recurrent-brain --normalize-obs --trainer ppo --use-gae --lr 2.5e-4 --value-loss-coef 0.5 --ppo-epoch 4 --num-processes 16 --num-steps 1024 --num-mini-batch 16 --use-linear-lr-decay --entropy-coef 0.01 --clip-param 0.1 --num-env-steps 100000000 --reload-playing-agents-principle OpenAIFive --vis --vis-interval 1 --log-interval 1 --num-eval-episodes 10 --arena-start-index 31569 --aux 0
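To get a feel for what the rollout flags in the two training commands imply, the sketch below works out the batch sizes. It assumes the usual PPO rollout layout, in which each update collects `num_processes * num_steps` transitions and splits them into `num_mini_batch` mini-batches. This is an assumption about the trainer, not something confirmed here, and `rollout_sizes` is a hypothetical helper.

```python
def rollout_sizes(num_processes, num_steps, num_mini_batch, num_env_steps):
    """Derive per-update sizes under the usual PPO rollout layout (an assumption)."""
    batch_size = num_processes * num_steps          # transitions collected per update
    mini_batch_size = batch_size // num_mini_batch  # transitions per gradient step
    num_updates = num_env_steps // batch_size       # whole updates within the step budget
    return batch_size, mini_batch_size, num_updates


if __name__ == "__main__":
    # Continuous-action settings: 16 processes, 2048 steps, 16 mini-batches
    print(rollout_sizes(16, 2048, 16, 100000000))  # (32768, 2048, 3051)
    # Discrete-action settings: 16 processes, 1024 steps, 16 mini-batches
    print(rollout_sizes(16, 1024, 16, 100000000))  # (16384, 1024, 6103)
```

Under this assumption, the discrete-action settings perform roughly twice as many updates for the same number of environment steps, each on half as much data.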

Curves: The code logs multiple curves to help analyze the training process. Run:

source activate Arena && tensorboard --logdir ../results/ --port 8888

and visit http://localhost:8888 for visualization with TensorBoard.

If your port is blocked, use natapp to forward a port:

./natapp --authtoken 237e94b5d173a7c3

./natapp --authtoken 09d9c46fedceda3f

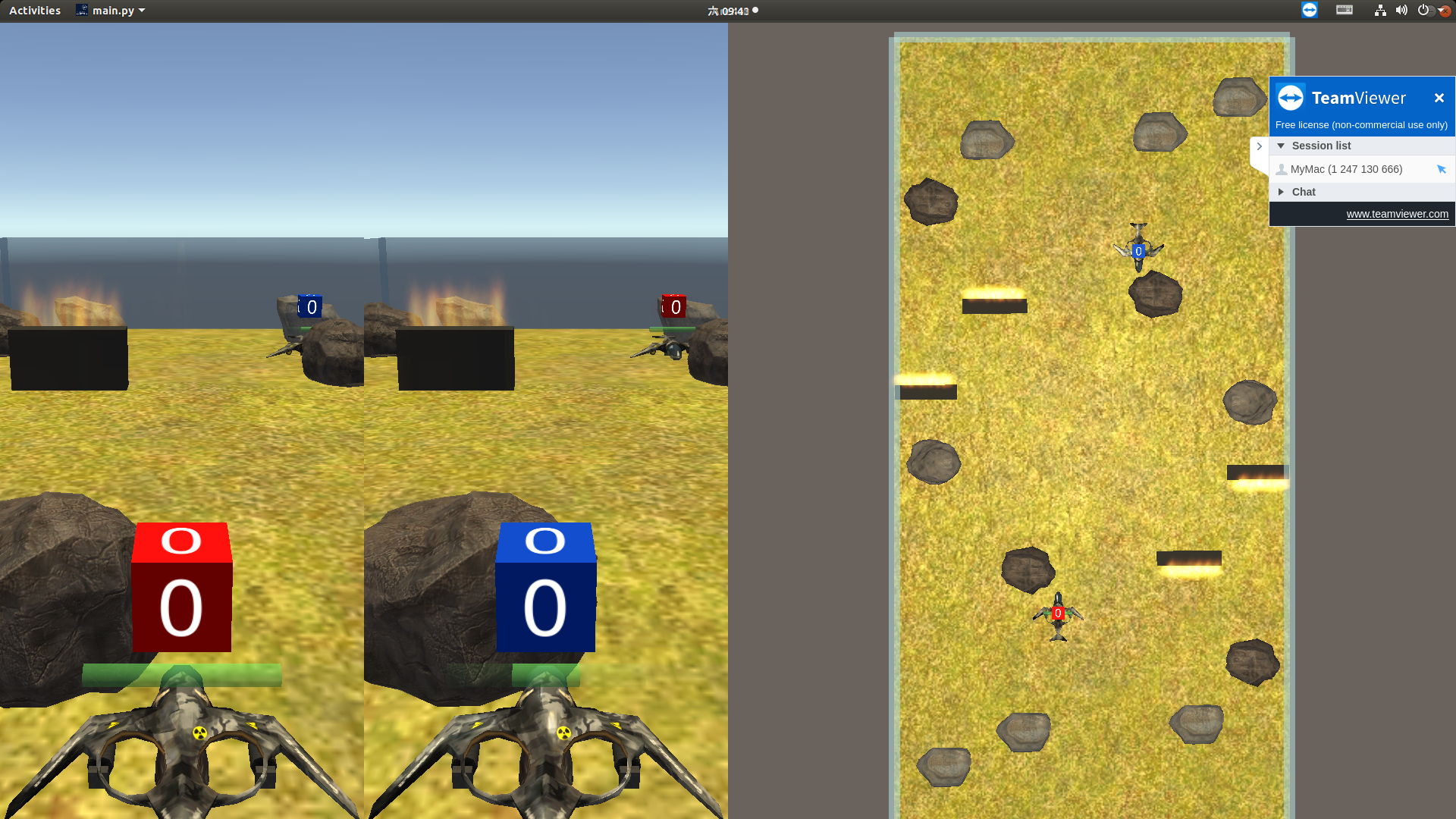

Behaviors:

Set --mode vis_train, so that

- The game engine runs at a real-time scale of 1 (during training, it runs at 100 times real time).

- The game runs in only one thread.

- The game renders at 1920*1080, where you can observe the agents' observations as well as the top-down view of the global state. You are therefore expected to do this on a desktop with an X-Server you can access, rather than on a remote server.

- All agents act deterministically without exploring.

- Two video files (.avi and .gif) of the episode will be saved, so that you can post them on your project website. The original resolution is the same as that of your screen, which is 1920*1080 in our case; click on the gif video to see the high-resolution original file. See here.

- A picture (.png) of the episode will be saved, so that you can use it as a visualization of your agents' behavior in your paper. The original resolution is the same as that of your screen, which is 1920*1080 in our case; click on the image to see the high-resolution original file. See here.

The example commands above run self-play with the following options and features:

- In different threads, the agent takes different roles, so that learning generalizes better.

- --reload-playing-agents-principle has the following options:

  - recent: playing agents are loaded with the most recent checkpoint.

  - earliest: playing agents are loaded with the oldest checkpoint. This option is mainly for debugging, since it removes the stochasticity of the playing agents.

  - uniform: playing agents are loaded with a random checkpoint sampled uniformly from all historical checkpoints.

  - OpenAIFive: to avoid strategy collapse, the agent trains 80% of its games against itself and the other 20% against its past selves. Thus, OpenAIFive selects recent with probability 0.8 and uniform with probability 0.2.
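The selection rules described above can be sketched in a few lines of Python. This is an illustration only, not the repository's implementation: `pick_checkpoint` is a hypothetical function, and the list of checkpoints is assumed to be ordered from oldest to newest.

```python
import random


def pick_checkpoint(checkpoints, principle, rng=random):
    """Pick a checkpoint for the playing agents.

    `checkpoints` is assumed to be ordered oldest to newest.
    """
    if not checkpoints:
        raise ValueError("no checkpoints available")
    if principle == "recent":
        return checkpoints[-1]
    if principle == "earliest":
        return checkpoints[0]
    if principle == "uniform":
        return rng.choice(checkpoints)
    if principle == "OpenAIFive":
        # With probability 0.8 behave like "recent", otherwise like "uniform".
        if rng.random() < 0.8:
            return checkpoints[-1]
        return rng.choice(checkpoints)
    raise ValueError("unknown principle: {}".format(principle))


if __name__ == "__main__":
    history = ["ckpt_1", "ckpt_2", "ckpt_3"]
    print(pick_checkpoint(history, "OpenAIFive"))
```

Note that in this sketch the uniform branch can also draw the latest checkpoint, so the latest one is selected slightly more often than 80% of the time.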

Population-based training is useful when the game is non-transitive, which means there is no best agent, but there could be a best population.

Population-based training will train a batch of agents instead of just one.

Add the argument --population-number 32 to the training command to enable population-based training. This will train a population of 32 agents.

Sometimes, the game threads do not exit properly after you kill the Python thread. Run the following command to print a batch of kill commands, then run the printed commands to kill all the game threads.

ps aux | grep -ie Linux.x86_64 | awk '{print "kill -9 " $2}'
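The same filtering can be done from Python, which avoids matching the `grep` process itself. The sketch below is a hedged equivalent rather than part of the repository: `kill_commands` is a hypothetical helper, and it matches the pattern as a literal, case-insensitive substring, whereas `grep` treats `.` as a wildcard.

```python
import subprocess


def kill_commands(ps_output, pattern="Linux.x86_64"):
    """Build one `kill -9 <pid>` line per `ps aux` row that mentions the pattern."""
    commands = []
    for line in ps_output.splitlines():
        fields = line.split()
        # In `ps aux` output the PID is the second column; the header row is skipped
        # because its second field ("PID") is not a number.
        if len(fields) > 1 and fields[1].isdigit() and pattern.lower() in line.lower():
            commands.append("kill -9 {}".format(fields[1]))
    return commands


if __name__ == "__main__":
    listing = subprocess.run(
        ["ps", "aux"], stdout=subprocess.PIPE, universal_newlines=True
    ).stdout
    # Print the commands for review instead of executing them directly.
    print("\n".join(kill_commands(listing)))
```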

It takes some time for the port to be released after you kill the Python thread.

Once you have made sure that your game threads have been killed, you can safely run Python with a different --arena-start-index, e.g. 33969.

Or just wait a while until the system releases the port.
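Instead of guessing how long to wait, you can probe whether a port has been released. The sketch below assumes that --arena-start-index corresponds to a local TCP port used by the game processes, which the text above suggests but does not state. `port_is_free` is a hypothetical helper.

```python
import socket


def port_is_free(port, host="127.0.0.1"):
    """Return True if a TCP socket can currently be bound to (host, port)."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((host, port))
        except OSError:
            # The port is still held by another process or not yet released.
            return False
    return True


if __name__ == "__main__":
    print(port_is_free(31969))
```

If several game processes are started, the ports following the start index may be in use as well, so it may be worth checking a small range rather than a single port.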

You may find it useful to copy models from a remote server to your desktop, so that you can watch the training visualization of the game. For example,

- The experiment you want to copy is:

/home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506

- The most recent agent id is:

P0_agent_F20152320

- You are copying from a remote server:

-P 30007 yuhangsong@fbafc1ae575e5123.natapp.cc

You can run the following commands to copy the necessary checkpoints:

/* Wx0 */

mkdir -p /home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/

scp -r -P 33007 yuhangsong@ca56526248261483.natapp.cc:/home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/\{P0_agent_F20152320.pt,eval,checkpoints_reward_record.npy,P0_update_i.npy,event*\} /home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/

/* Wx1 */

mkdir -p /home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/

scp -r -P 30007 yuhangsong@fbafc1ae575e5123.natapp.cc:/home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/\{P0_agent_F20152320.pt,eval,checkpoints_reward_record.npy,P0_update_i.npy,event*\} /home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/

/* W4n */

mkdir -p /home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/

scp -r -P 7334 yuhangsong@s1.natapp.cc:/home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/\{P0_agent_F20152320.pt,eval,checkpoints_reward_record.npy,P0_update_i.npy,event*\} /home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/

/* W2n */

mkdir -p /home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/

scp -r -P 7330 yuhangsong@s1.natapp.cc:/home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/\{P0_agent_F20152320.pt,eval,checkpoints_reward_record.npy,P0_update_i.npy,event*\} /home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/

/* W5n */

mkdir -p /home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/

scp -r -P 7333 yuhangsong@s1.natapp.cc:/home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/\{P0_agent_F20152320.pt,eval,checkpoints_reward_record.npy,P0_update_i.npy,event*\} /home/yuhangsong/Arena/results/__en-ArenaWalkerMove-2T1P-v1-Continuous__ot-visual__nfs-4__rb-True__no-True__ti-ppo__pn-1__rpap-OpenAIFive__a-2886aeaec20e4ffc53c06bef0c7e7380aed9e506/
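The commands above differ only in the port and host, so they can be generated from a small template. The sketch below is an illustration under two assumptions taken from the examples: the experiment directory has the same path on both machines, and the file list is the one used above. `scp_checkpoint_commands` is a hypothetical helper.

```python
def scp_checkpoint_commands(host, port, experiment_dir, agent_id):
    """Return the mkdir and scp command lines for copying one agent's checkpoint."""
    files = ",".join([
        agent_id + ".pt",
        "eval",
        "checkpoints_reward_record.npy",
        "P0_update_i.npy",
        "event*",
    ])
    # The braces are backslash-escaped so that the remote shell, not the local
    # one, expands the file list.
    remote = host + ":" + experiment_dir + "/\\{" + files + "\\}"
    return [
        "mkdir -p {}/".format(experiment_dir),
        "scp -r -P {} {} {}/".format(port, remote, experiment_dir),
    ]


if __name__ == "__main__":
    for line in scp_checkpoint_commands(
        "yuhangsong@s1.natapp.cc", 7334,
        "/home/yuhangsong/Arena/results/EXPERIMENT_NAME",  # placeholder path
        "P0_agent_F20152320",
    ):
        print(line)
```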

If you use Arena to conduct research, we ask that you cite the following paper as a reference:

@article{song2019arena,

title={Arena: A General Evaluation Platform and Building Toolkit for Multi-Agent Intelligence},

author={Song, Yuhang and Wang, Jianyi and Lukasiewicz, Thomas and Xu, Zhenghua and Xu, Mai and Ding, Zihan and Wu, Lianlong},

journal={arXiv preprint arXiv:1905.08085},

year={2019}

}

as well as the engine behind Arena, without which the platform would be impossible to create:

@article{juliani2018unity,

title={Unity: A general platform for intelligent agents},

author={Juliani, Arthur and Berges, Vincent-Pierre and Vckay, Esh and Gao, Yuan and Henry, Hunter and Mattar, Marwan and Lange, Danny},

journal={arXiv preprint arXiv:1809.02627},

year={2018}

}

We give special thanks to the Whiteson Research Lab and the Unity ML-Agents Team, whose discussions with us greatly shaped the vision of the project.