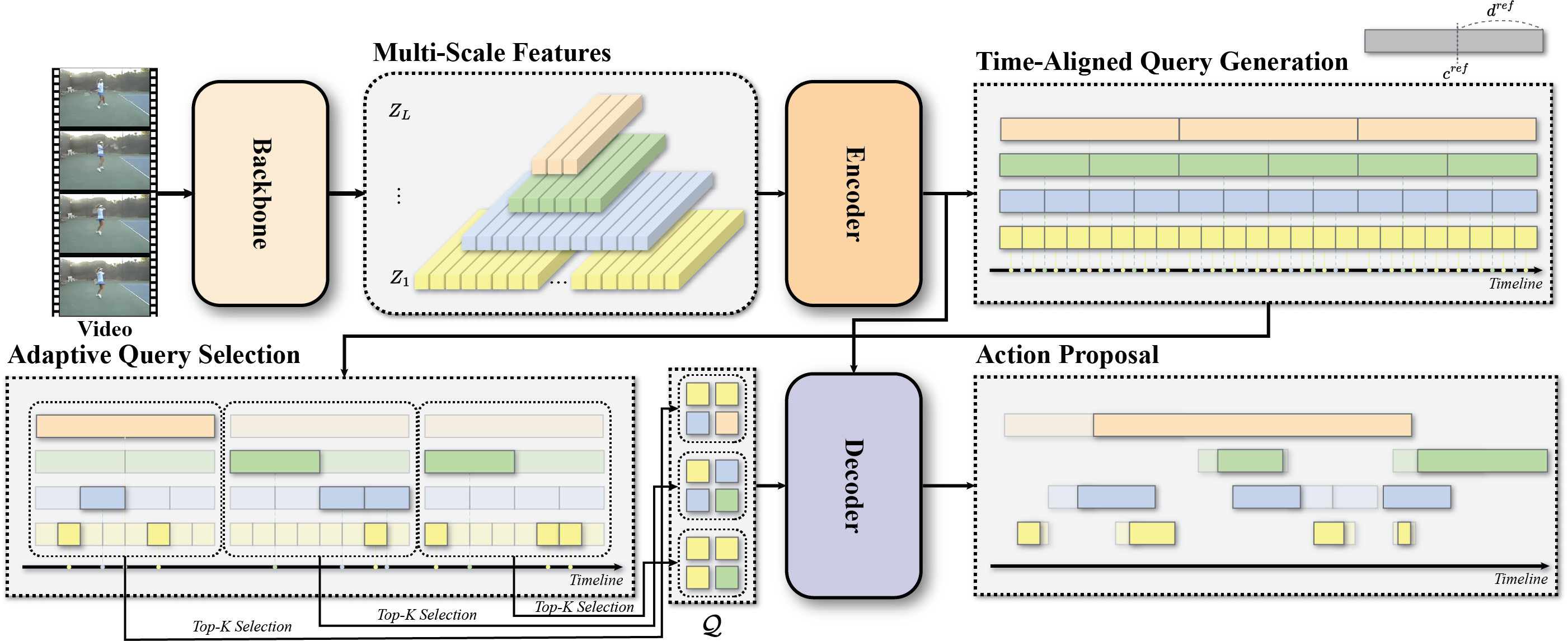

This repository contains the official implementation of the CVPR 2024 paper TE-TAD: Towards Full End-to-End Temporal Action Detection via Time-Aligned Coordinate Expression.

Several comments remain in the code.

To build the NMS extension, run:

cd util

python setup.py install --user # build NMS

cd ..

We follow the ActionFormer repository to prepare the datasets, including THUMOS14, ActivityNet v1.3, and EpicKitchens.

Use scripts/make_feature_info.py to generate feature information for each dataset.
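The exact output format of scripts/make_feature_info.py is not described here. As a hedged illustration of why per-video feature metadata matters for expressing detections on the actual timeline, the sketch below assumes clip-level features extracted with a sliding window and maps feature indices to timestamps in seconds. The `FeatureInfo` class, its fields (`fps`, `feat_stride`, `num_frames`, `num_feats`), and the window-center convention are all hypothetical assumptions, not the script's real output.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class FeatureInfo:
    """Hypothetical per-video feature metadata (field names are assumptions)."""
    fps: float        # frame rate at which features were extracted
    feat_stride: int  # frames between the starts of consecutive feature windows
    num_frames: int   # frames covered by a single feature window
    num_feats: int    # number of feature vectors for the video


def index_to_seconds(info: FeatureInfo, idx: float) -> float:
    """Map a (possibly fractional) feature index to the center time of its window.

    Assumed convention: window i spans frames [i * stride, i * stride + num_frames),
    so its center frame is i * stride + num_frames / 2.
    """
    center_frame = idx * info.feat_stride + 0.5 * info.num_frames
    return center_frame / info.fps


def feature_center_times(info: FeatureInfo) -> List[float]:
    """Center timestamp (seconds) of every feature window in the video."""
    return [index_to_seconds(info, i) for i in range(info.num_feats)]


def segment_to_seconds(info: FeatureInfo, start_idx: float, end_idx: float) -> Tuple[float, float]:
    """Convert a segment given in feature-index units into (start, end) seconds."""
    return index_to_seconds(info, start_idx), index_to_seconds(info, end_idx)


if __name__ == "__main__":
    # Illustrative values only; they are not taken from any TE-TAD config.
    info = FeatureInfo(fps=30.0, feat_stride=4, num_frames=16, num_feats=5)
    print(feature_center_times(info))
    print(segment_to_seconds(info, 1.0, 3.0))
```

Under this assumed convention each feature is anchored at the center of its window; a different extraction setup would only change the constant offset term in `index_to_seconds`.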

To train the TE-TAD model on the THUMOS14 dataset, execute the following command:

python main.py --c configs/thumos14.yaml --output_dir logs/thumos14

To evaluate the trained model and obtain performance metrics, use the following command structure:

python main.py --eval --c configs/thumos14.yaml --output_dir logs/thumos14

If you find our work helpful, please consider citing our paper:

@InProceedings{Kim_2024_CVPR,
  author    = {Kim, Ho-Joong and Hong, Jung-Ho and Kong, Heejo and Lee, Seong-Whan},
  title     = {TE-TAD: Towards Full End-to-End Temporal Action Detection via Time-Aligned Coordinate Expression},
  booktitle = {Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR)},
  month     = {June},
  year      = {2024},
  pages     = {18837-18846}
}