For a full explanation of the dataset, refer to the article *DEAM: MediaEval Database for Emotional Analysis in Music* (2016).

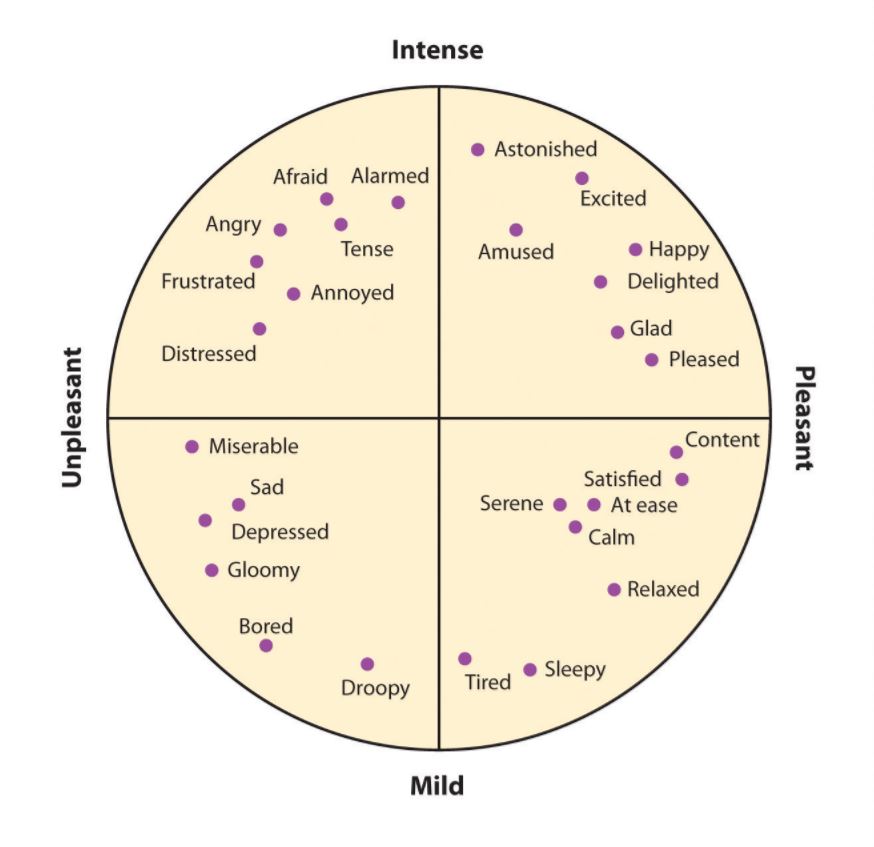

This database links songs to their dynamic (time-varying) emotion annotations, expressed in terms of valence and arousal.

Multiple teams have tried to model valence and arousal based on this dataset.

Aljanaki, Yang & Soleymani summarize the methodologies and results achieved up to 2016 in their article *Developing a benchmark for emotional analysis of music* (2016). In 2017, Malik, Adavanne, Drossos, Virtanen, Ticha and Jarina used ConvNets to model emotions and achieved the following scores:

- Arousal: RMSE = 0.202 ± 0.007
- Valence: RMSE = 0.267 ± 0.003

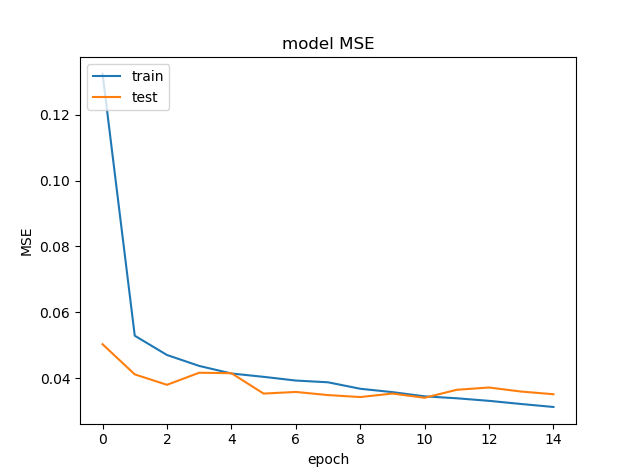

Current model scores:

- Arousal only: RMSE = 0.1876
- Valence only: RMSE = 0.2200

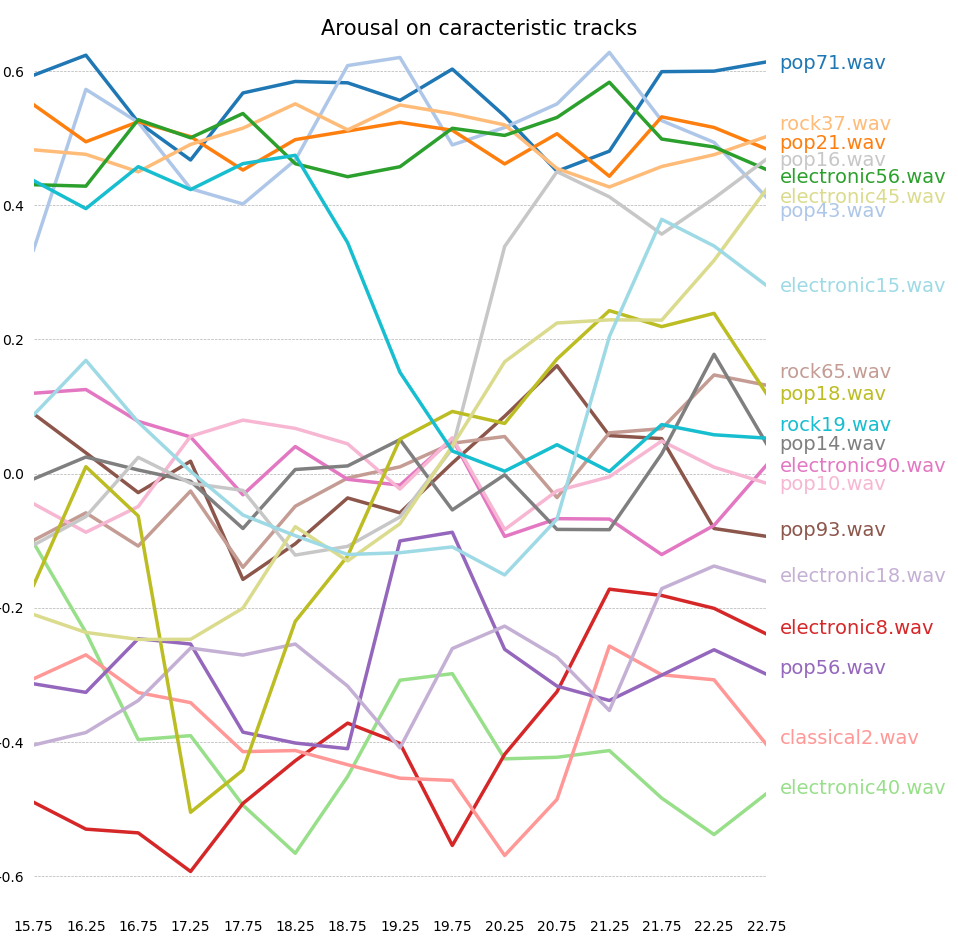
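All scores above are root-mean-square errors (RMSE) between annotated and predicted values. As a reminder of what the metric measures, here is a minimal sketch in plain Python; the function name `rmse` is illustrative and is not taken from this repository.

```python
import math


def rmse(y_true, y_pred):
    """Root-mean-square error between two equally long sequences of numbers."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean of the squared differences, then square root.
    squared_errors = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


# Example call with made-up annotation and prediction values.
example_score = rmse([0.10, 0.25, 0.40], [0.15, 0.20, 0.45])
```

A lower RMSE means the predicted valence or arousal curve stays closer to the annotations on average.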

The models' dynamic results can be seen on this web page.

From the original songs, compute the first 100 MFCCs with the following parameters:

- Sample rate = 12000 Hz
- Offset = 15 s
- Duration = 1.5 s
- Overlap = 2/3
- Nb FFT = 512
- Nb mel filters = 96
- Window size = 256

Since the annotations are updated every 500 ms, and each MFCC corresponds to a 1.5 s sample with 2/3 overlap, the target variable of each MFCC is the mean of the annotation values that fall within its 1.5 s window.
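The alignment between windows and annotations can be sketched as follows. With a 1.5 s window and 2/3 overlap, consecutive windows start 0.5 s apart, which matches the 500 ms annotation step, so each window covers three consecutive annotation values. This is an illustrative sketch, not the repository's code: the function name `window_targets` is hypothetical, and it assumes the first annotation lines up with the start of the first window.

```python
from statistics import mean


def window_targets(annotations, step_s=0.5, window_s=1.5, overlap=2 / 3):
    """Average the annotation values covered by each analysis window.

    annotations: per-song values sampled every `step_s` seconds
                 (assumed to start where the first window starts).
    Returns one target value per complete window.
    """
    hop_s = window_s * (1 - overlap)          # 0.5 s with the defaults
    per_window = round(window_s / step_s)     # annotation values per window (3)
    hop = round(hop_s / step_s)               # annotation values per hop (1)
    if per_window < 1 or hop < 1:
        raise ValueError("window and hop must span at least one annotation")
    last_start = len(annotations) - per_window
    return [
        mean(annotations[start:start + per_window])
        for start in range(0, last_start + 1, hop)
    ]


# Four annotation values yield two overlapping windows.
targets = window_targets([0.0, 0.3, 0.6, 0.9])
```

Songs with fewer annotation values than one full window yield an empty list in this sketch.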

The MFCCs and their corresponding target values are split into train and test sets, stratified by song, and then shuffled.

Each song is split into 500 ms samples, and a chromagram is computed for each sample. This lets the network model valence directly from musical notes. LSTMs are used.
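One possible reading of "stratified by song" is that every song contributes the same proportion of its windows to the train and test sets. The sketch below illustrates that reading in plain Python; `split_by_song`, `test_fraction` and `seed` are hypothetical names, and the 20% test fraction is an assumption, not a value from this project.

```python
import random
from collections import defaultdict


def split_by_song(song_ids, test_fraction=0.2, seed=0):
    """Return (train_indices, test_indices), stratified by song.

    song_ids: one song identifier per MFCC window.
    Each song sends about `test_fraction` of its windows to the test set.
    """
    rng = random.Random(seed)
    indices_by_song = defaultdict(list)
    for index, song in enumerate(song_ids):
        indices_by_song[song].append(index)

    train, test = [], []
    for indices in indices_by_song.values():
        rng.shuffle(indices)                       # pick test windows at random
        n_test = round(len(indices) * test_fraction)
        test.extend(indices[:n_test])
        train.extend(indices[n_test:])

    # Final shuffle so windows from one song are not contiguous.
    rng.shuffle(train)
    rng.shuffle(test)
    return train, test


# 10 windows from song "a" and 20 from song "b".
train_idx, test_idx = split_by_song(["a"] * 10 + ["b"] * 20)
```

Because windows overlap by 2/3, neighbouring windows of the same song can land on both sides of such a split; holding out whole songs instead would be the alternative if that matters for the evaluation.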

    ├── README.md
    ├── data
    │   ├── annotations
    │   │   ├── arousal.csv
    │   │   └── valence.csv
    │   ├── DEAM_mfccs
    │   │   └── <DEAM_ID>.npy
    │   └── test_songs
    │       └── <SONG_ID>.wav
    ├── readme_files
    │   ├── valence_arousal_emotions.png
    │   ├── valence_arousal_emotions.jpg
    │   └── valence_arousal_emotions2.jpg
    ├── models
    │   ├── <trained models architectures>.json
    │   └── <trained models weights>.h5
    ├── MAIN.py
    ├── MAIN_arousal.py
    └── MAIN_valence.py

Model summary:

    _________________________________________________________________
    Layer (type)                 Output Shape              Param #
    =================================================================
    =================================================================
    Total params:
    Trainable params:
    Non-trainable params:

- Train set: ?? MFCCs

- Early stop: patience =
- 24 epochs of training achieved in ??h??
- Test set: ?? MFCCs
- Test RMSE = 0.1878
- Test accuracy:
- Sparsity:

Predictions are made on another dataset, Emotivity_genre_dataset.7z, which can be found at gs://audio_databases/, using the segment from second 15 to second 23.5.