This repository is for the source code for the paper NeROIC: Neural Object Capture and Rendering from Online Image Collections by Zhengfei Kuang, Kyle Olszewski, Menglei Chai, Zeng Huang, Panos Achlioptas, and Sergey Tulyakov.

The code is coming soon. For more information, please check out the project website.

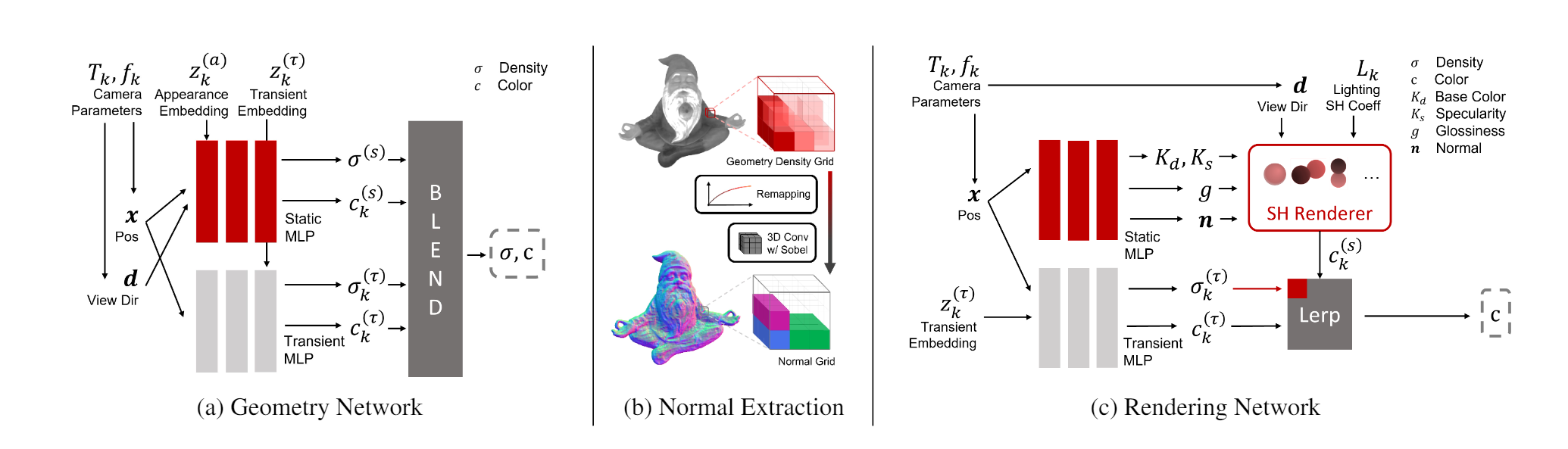

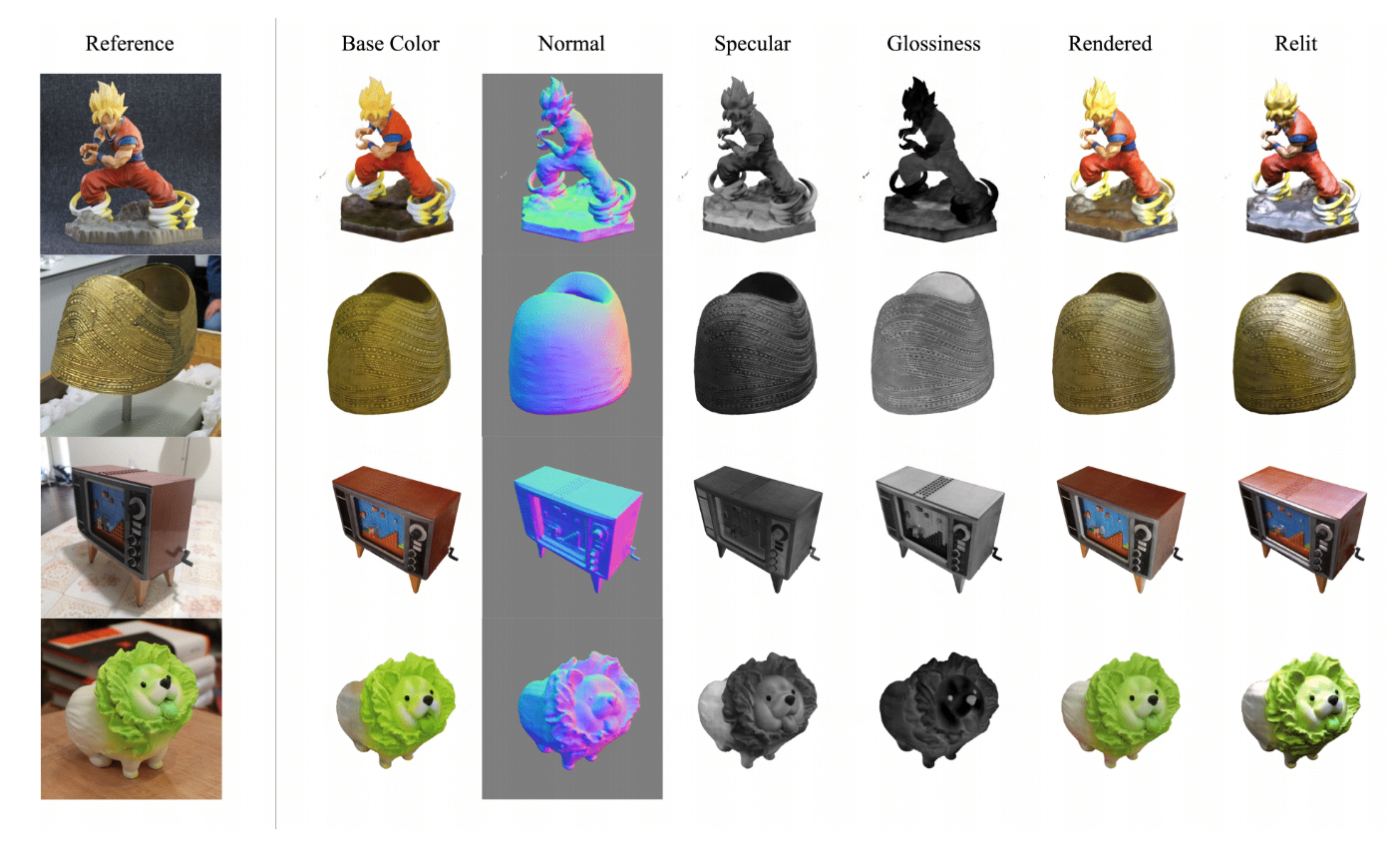

Our two-stage model takes images of an object from different conditions as input. With the camera poses of images and object foreground masks acquired by other state-of-the-art methods, We first optimize the geometry of scanned object and refine camera poses by training a NeRF-based network; We then compute the surface normal from the geometry (represented by density function) using our normal extraction layer; Finally, our second stage model decomposes the material properties of the object and solves for the lighting conditions for each image.

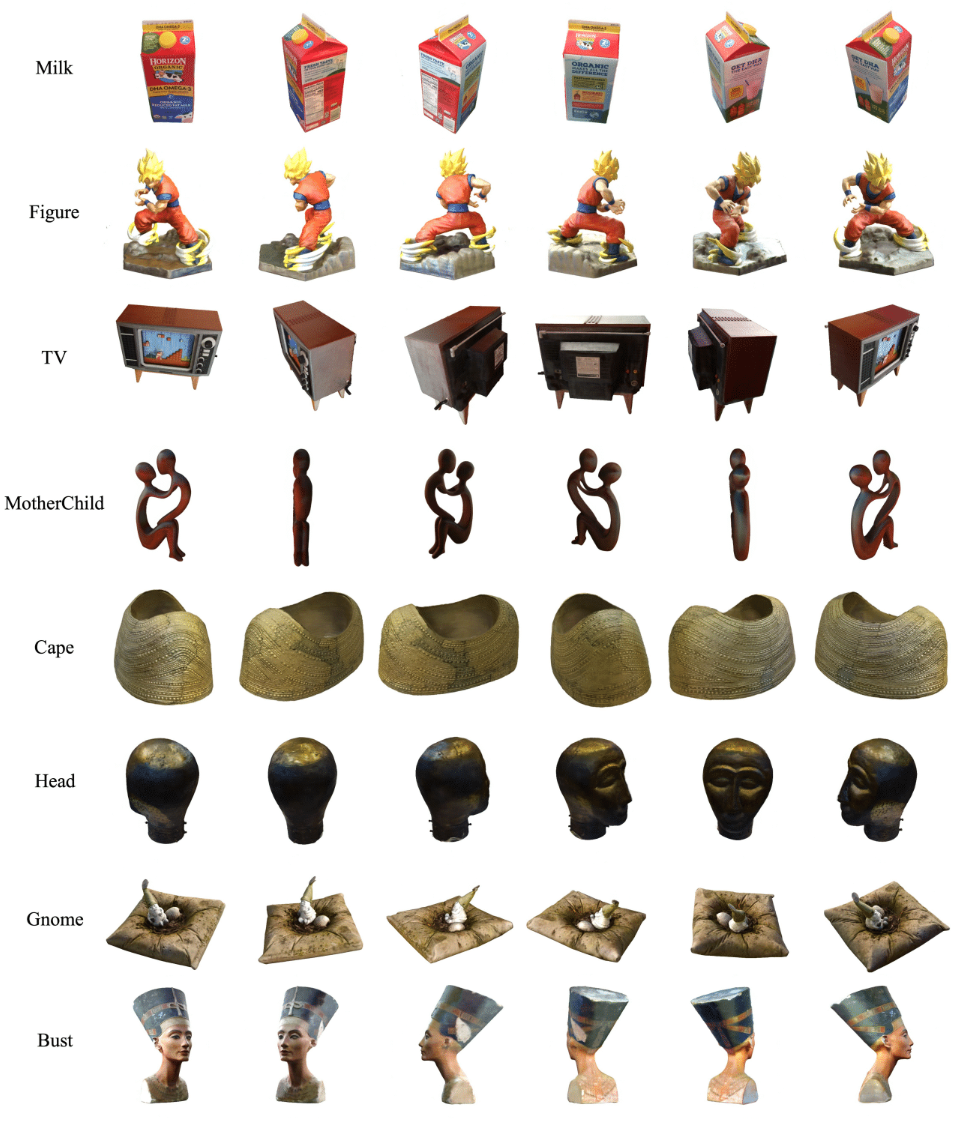

Given online images from a common object, our model can synthesize novel views of the object with the lighting conditions from the training images.

nvs.mp4

material.mp4

relighting.mp4

If you find this useful, please cite the following:

@article{kuang2021neroic,

author = {Kuang, Zhengfei and Olszewski, Kyle and Chai, Menglei and Huang, Zeng and Achlioptas, Panos and Tulyakov, Sergey},

title = {{NeROIC}: Neural Object Capture and Rendering from Online Image Collections},

journal = Computing Research Repository (CoRR),

volume = {abs/2201.02533},

year = {2022}

}