PI-Fed: Continual Federated Learning with Parameter-Level Importance Aggregation

This repo contains the source code of our proposed PI-Fed, a federated learning framework that supports task-incremental learning on private datasets through iterative server-client communications. Compared with three classic federated learning methods (FedAvg, FedNova and SCAFFOLD), PI-Fed demonstrates significantly better performance on continual FL benchmarks.

Because networks trained with vanilla optimizers suffer from catastrophic forgetting, prior federated learning approaches are restricted to single-task learning and typically assume that data from all nodes are simultaneously available during training. Both restrictions are impractical in most real-world scenarios.

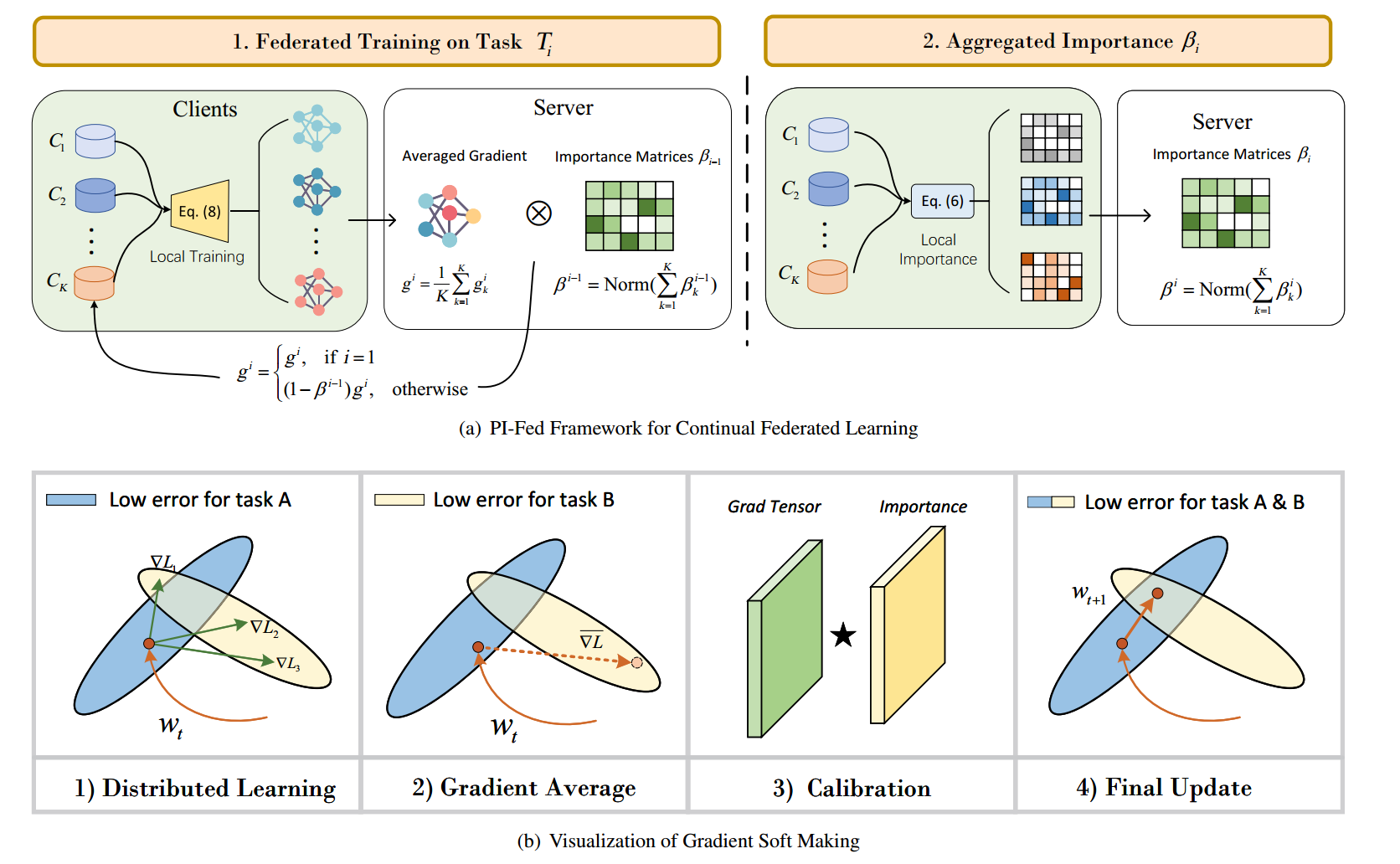

To overcome these limitations, we propose PI-Fed, a continual federated learning framework with parameter-level importance aggregation, which supports task-incremental learning on private datasets through iterative server-client communications.
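To make the phrase "parameter-level importance aggregation" more concrete, the toy sketch below contrasts a FedAvg-style average (one scalar weight per client) with an average that uses one weight per client and per parameter. It is not the PI-Fed algorithm: the function names, the importance scores and the per-parameter normalisation are all illustrative assumptions, and the actual aggregation rule is defined in the paper and the source code.

```python
from typing import List


def fedavg(client_params: List[List[float]], client_sizes: List[int]) -> List[float]:
    """FedAvg-style aggregation: one scalar weight per client (its sample count)."""
    total = sum(client_sizes)
    n_params = len(client_params[0])
    return [
        sum(p[i] * n for p, n in zip(client_params, client_sizes)) / total
        for i in range(n_params)
    ]


def importance_weighted(
    client_params: List[List[float]],
    client_importance: List[List[float]],
    eps: float = 1e-12,
) -> List[float]:
    """Toy parameter-level aggregation: one weight per client AND per parameter.

    ASSUMPTION: weights are non-negative importance scores, normalised per
    parameter. This is illustrative only, not PI-Fed's actual rule.
    """
    n_params = len(client_params[0])
    merged = []
    for i in range(n_params):
        weights = [imp[i] for imp in client_importance]
        norm = sum(weights)
        if norm < eps:
            # No client marks this parameter as important: use a plain mean.
            merged.append(sum(p[i] for p in client_params) / len(client_params))
        else:
            merged.append(sum(p[i] * w for p, w in zip(client_params, weights)) / norm)
    return merged


params = [[1.0, 0.0], [3.0, 4.0]]  # two clients, two parameters each
print(fedavg(params, client_sizes=[10, 30]))                   # [2.5, 3.0]
print(importance_weighted(params, [[1.0, 0.0], [1.0, 1.0]]))   # [2.0, 4.0]
```

In the second call, the first client assigns zero importance to the second parameter, so the merged value for that parameter comes entirely from the second client.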

Different FL methods (PI-Fed, FedAvg, FedNova and SCAFFOLD) can be selected in the config file:

    # PI-Fed/conf/config.yaml
    fed:
      alg: PI_Fed # PI_Fed, FedAvg, FedNova, SCAFFOLD

Experiments can be reproduced by running

    python3 main.py appr=default seq=<seq>

where `<seq>` specifies the dataset you want to run.

For `<seq>`, you can choose one of the following datasets.

- `cifar100_10` for C-10 (CIFAR100 with 10 tasks)
- `cifar100_20` for C-20
- `tinyimagenet_10` for T-10 (TinyImageNet with 10 tasks)
- `tinyimagenet_20` for T-20
- `fceleba_10` for FC-10 (Federated CelebA with 10 tasks)
- `fceleba_20` for FC-20
- `femnist_10` for FE-10 (Federated EMNIST with 10 tasks)
- `femnist_20` for FE-20
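If you want to run every sequence in turn, a small driver script can build the documented command for each `<seq>` value. The sketch below is one possible way to do this; `build_command`, `run_all` and the `dry_run` flag are hypothetical helpers, not part of this repo, and the script assumes it is launched from the directory containing `main.py`.

```python
import subprocess
from typing import List

# Sequence names exactly as listed in this README.
SEQUENCES = [
    "cifar100_10", "cifar100_20",
    "tinyimagenet_10", "tinyimagenet_20",
    "fceleba_10", "fceleba_20",
    "femnist_10", "femnist_20",
]


def build_command(seq: str) -> List[str]:
    """Return the documented command line for one sequence."""
    if seq not in SEQUENCES:
        raise ValueError(f"unknown sequence: {seq!r}")
    return ["python3", "main.py", "appr=default", f"seq={seq}"]


def run_all(dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for seq in SEQUENCES:
        cmd = build_command(seq)
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops the loop at the first failing run.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_all(dry_run=True)
```

With `dry_run=True` the script only prints the eight commands, which is a cheap way to check them before starting long training runs.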

We use 4 datasets in the paper. To reproduce the results, some of these datasets need to be prepared manually.

You do not need to do anything for CIFAR100, as it will be downloaded automatically.

For Tiny ImageNet, you can download the dataset from the official site.

- Download the Tiny ImageNet file.

- Extract the file, and place its contents as follows.

      data/tiny-imagenet-200/
      |- train/
      |  |- n01443537/
      |  |- n01629819/
      |  +- ...
      +- val/
         |- val_annotations.txt
         +- images/
            |- val_0.JPEG
            |- val_1.JPEG
            +- ...

- Run `prep_tinyimagenet.py` to reorganise files so that `torchvision.datasets.ImageFolder` can read them.
- Make sure you see the structure as follows.

      data/tiny-imagenet-200/
      |- test/
      |  |- n01443537/
      |  |- n01629819/
      |  +- ...
      |- train/
      |  |- n01443537/
      |  |- n01629819/
      |  +- ...
      +- val/
         +- # These files are not used any more.
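For readers curious what such a reorganisation involves, the sketch below copies each validation image into a `test/<class id>/` folder, which is the one-folder-per-class layout that `ImageFolder` expects. It is not the repo's `prep_tinyimagenet.py`; the function name `reorganise_val` is hypothetical, and the code assumes that each line of `val_annotations.txt` is tab-separated and begins with the image file name followed by the class id.

```python
import shutil
from pathlib import Path


def reorganise_val(root: Path) -> int:
    """Copy val images into test/<wnid>/ using val/val_annotations.txt.

    ASSUMPTION: each annotation line is tab-separated and starts with
    "<image file name>\t<wnid>"; any remaining columns are ignored.
    Returns the number of images copied.
    """
    val_dir = root / "val"
    copied = 0
    with open(val_dir / "val_annotations.txt") as f:
        for line in f:
            fields = line.rstrip("\n").split("\t")
            if len(fields) < 2:
                continue  # skip blank or malformed lines
            file_name, wnid = fields[0], fields[1]
            target_dir = root / "test" / wnid
            target_dir.mkdir(parents=True, exist_ok=True)
            # Copy rather than move, so the original val/ folder stays intact.
            shutil.copy2(val_dir / "images" / file_name, target_dir / file_name)
            copied += 1
    return copied
```

A call such as `reorganise_val(Path("data/tiny-imagenet-200"))` would populate `test/` next to the existing `train/` folder.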

- For Federated CelebA, follow the instruction to create data.
- Place the raw images under `data/fceleba/raw/img_align_celeba/`.
- Make sure you see the structure as follows.

      data/fceleba/
      |- iid/
      |  |- test/
      |  |  +- all_data_iid_01_0_keep_5_test_9.json
      |  +- train/
      |     +- all_data_iid_01_0_keep_5_train_9.json
      +- raw/
         +- img_align_celeba/
            |- 000001.jpg
            |- 000002.jpg
            +- ...

./preprocess.sh -s iid --sf 1.0 -k 5 -t sample --iu 0.01

- For Federated EMNIST, follow the instruction to create data.
- Place the raw images under `data/femnist/raw/train/` and `data/femnist/raw/test/`.
- Make sure you see the structure as follows.

      data/femnist/
      +- raw/
         |- test
         |  |- all_data_0_iid_01_0_keep_0_test_9.json
         |  |- ...
         |  +- all_data_34_iid_01_0_keep_0_test_9.json
         +- train
            |- all_data_0_iid_01_0_keep_0_train_9.json
            |- ...
            +- all_data_34_iid_01_0_keep_0_train_9.json

./preprocess.sh -s iid --sf 1.0 -k 0 -t sample
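Before launching a run, it can help to check that the manually prepared folders are where this README says they should be. The sketch below is one possible checker; the dictionary keys and the `missing_dirs` helper are hypothetical names, and only the directories named in the trees above are checked, not the individual files.

```python
from pathlib import Path
from typing import Dict, List

# Directories taken from the trees in this README, relative to data/.
EXPECTED_DIRS: Dict[str, List[str]] = {
    "tinyimagenet": ["tiny-imagenet-200/train", "tiny-imagenet-200/test"],
    "fceleba": ["fceleba/iid/train", "fceleba/iid/test", "fceleba/raw/img_align_celeba"],
    "femnist": ["femnist/raw/train", "femnist/raw/test"],
}


def missing_dirs(data_root: Path, dataset: str) -> List[str]:
    """Return the expected sub-directories of `dataset` that are absent."""
    return [rel for rel in EXPECTED_DIRS[dataset] if not (data_root / rel).is_dir()]


if __name__ == "__main__":
    for name in EXPECTED_DIRS:
        missing = missing_dirs(Path("data"), name)
        status = "ok" if not missing else "missing " + ", ".join(missing)
        print(f"{name}: {status}")
```

Run from the repo root, the script prints one status line per dataset and lists any folder that still needs to be created.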