Make sure your Docker daemon is running before you run the pipeline, or it will fail

JakeLehle opened this issue · 10 comments

Describe the bug

Hello, I'm trying to bring the ENCODE pipelines to my school, and I'm working my way through learning how to use them. I'm getting the hang of what to do with .wdl and input JSON files.

I've been playing around with cloning the wgbs-pipeline and running the sample code as described in the README, which was working last week. This week, however, I started getting an error message when I tried running the pipeline from scratch.

Here are the commands I ran:

$ git clone https://github.com/ENCODE-DCC/wgbs-pipeline.git

$ cd wgbs-pipeline

$ pip3 install caper

$ caper run wgbs-pipeline.wdl -i tests/functional/json/test_wgbs.json --docker

The Caper workflow ID of the failed run is 0b655180-c147-4524-bed5-92d854487050

I tried running caper debug on that workflow ID but had an issue connecting to the Caper server.

OS/Platform

- OS/Platform: Ubuntu 20.04.2 LTS

- Conda version: 4.10.3

- Pipeline version: v1.1.7

- Caper version: 2.1.1

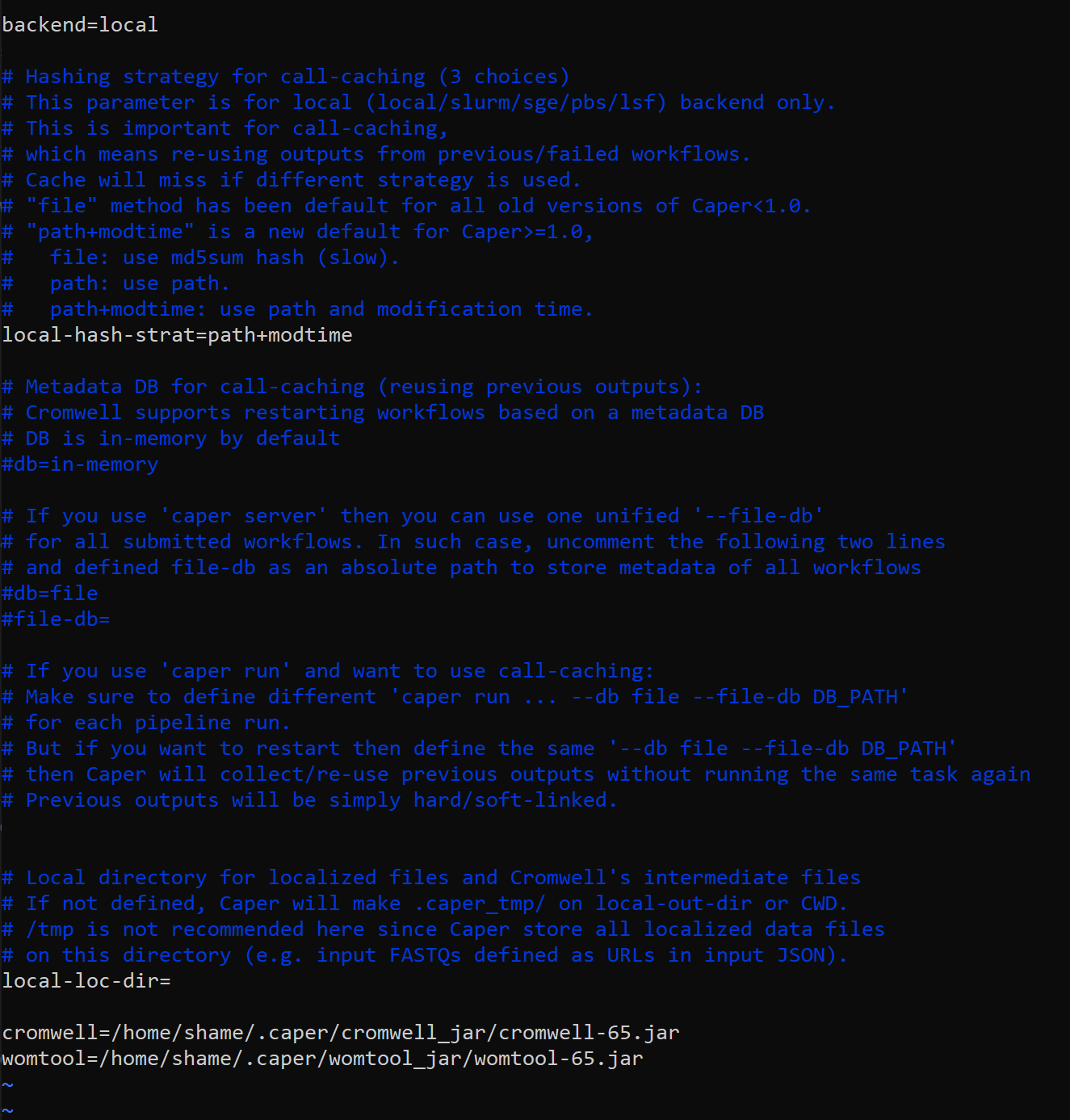

Caper configuration file

Input JSON file

```yaml
- name: test_wgbs
  tags:
    - functional
  command: >-
    tests/caper_run.sh
    wgbs-pipeline.wdl
    tests/functional/json/test_wgbs.json
  files:
    - path: test-output/gembs.conf
      md5sum: 1ad8f25544fa7dcb56383bc233407b54
    - path: test-output/gembs_metadata.csv
      md5sum: 9dd5d3bee6e37ae7dbbf4a29edd0ed3f
    - path: test-output/indexes.tar.gz
      md5sum: bde2c7f6984c318423cb7354c4c37d44
    - path: test-output/gemBS.json
      md5sum: d6ef6f4d2ee7e4c3d2e8c439bb2cb618
    - path: test-output/glob-e97d885c83d966247d485dc62b6ae799/sacCer3.contig.sizes
      md5sum: 0497066e3880c6932cf6bde815c42c40
    - path: test-output/glob-c8599c0b9048b55a8d5cfaad52995a94/sample_sample5.bam
      md5sum: fc1d87ed4f9f7dab78f58147c02d06c9
    - path: test-output/glob-e42c489c9c1355a3e5eca0071600f795/sample_sample5.bam.csi
      md5sum: b37be1c10623f32bbe73c364325754b0
    - path: test-output/glob-13824b1e03fdcf315fe2424593870e56/sample_sample5.bam.md5
      md5sum: 56f31539029eab274ff0ac03e84e214a
    - path: test-output/glob-c0e92e4e9fb050e7e70bb645748b45dd/0.json
      md5sum: cbf4ba8d84384779c626b298a9a60b96
    - path: test-output/glob-1e6c456aecc092f75370b54a5806588f/sample_sample5.bcf
      md5sum: 7cafb436b89898e852f971f1f3b20fc6
    - path: test-output/glob-804650e4b0c9cc57f1bbc0b3919d1f73/sample_sample5.bcf.csi
      md5sum: 156c39bd2bcc0bc83052eb4171f83507
    - path: test-output/glob-95d24e89d025dc63935acc9ded9f8810/sample_sample5.bcf.md5
      md5sum: 8ebe942fe48b07e1a3455f572aadf57b
    - path: test-output/glob-0b0236659b9524643e6454061959b28c/sample_sample5_pos.bw
      md5sum: c30bc10a258ca4f1fe67f115c4c2db10
    - path: test-output/glob-041e1709c7dd1f320426281eb4649f9b/sample_sample5_neg.bw
      md5sum: f49ab06a51d9c4a8e663f0472e70eb06
    - path: test-output/glob-708835e6a0042d33b00b6937266734f5/sample_sample5_chg.bb
      md5sum: cc123bff807e0637864d387628d410fa
    - path: test-output/glob-f70a6609728d4fb1448dba1f41361a30/sample_sample5_cpg.bb
      md5sum: f67f273c68577197ecf19a8bb92c925c
    - path: test-output/glob-52f916d7cc14a5bcfb168d6910e04b56/sample_sample5_chg.bed.gz
      md5sum: 6994fafbf9eab44ff6e7fafa421fffbc
    - path: test-output/glob-2b5148d6967b43eee33eb370fd36b70e/sample_sample5_chh.bed.gz
      md5sum: 31a69396b7084520c04bd80f2cabfd59
    - path: test-output/glob-f31cca1fcab505e10c2fe5ff003b211a/sample_sample5_cpg.bed.gz
      md5sum: 67193e21cc34b76d12efba1a19df6644
    - path: test-output/glob-b76cddd256e1197e0b726acc7184afc4/sample_sample5_cpg.txt.gz
      md5sum: fccd9c9c5b4fea80890abd536fd76a35
    - path: test-output/glob-24ceb385eea2ca53f1e6c4a1438ccd21/sample_sample5_cpg.txt.gz.tbi
      md5sum: a1e08686f568af353e9026c1de00c25d
    - path: test-output/glob-40c90aa4516b00209d682b819b1d021f/sample_sample5_non_cpg.txt.gz
      md5sum: 6809ee8479439454aa502ae11f48d91c
    - path: test-output/glob-664ff83c3881df2363da923f006b098b/sample_sample5_non_cpg.txt.gz.tbi
      md5sum: 6cdebb4ad2ea184ca4783acb350ae038
    - path: test-output/gembs_map_qc.json
      md5sum: 26b5238ab7bb5b195d1cf8127767261c
    - path: test-output/gembs_map_qc.json
      md5sum: 26b5238ab7bb5b195d1cf8127767261c
    - path: test-output/glob-65c481a690a62b639d918bb70927f25e/sample_sample5.isize.png
      md5sum: f0277a185298dee7156ec927b02466c7
    - path: test-output/mapping_reports/mapping/sample_sample5.mapq.png
      md5sum: 49837be15f24f23c59c50241cf504614
    - path: test-output/glob-1aeed469ae5d1e8d7cbca51e8758b781/ENCODE.html
      md5sum: 163401e0bf6a377c2a35dc4bf9064574
    - path: test-output/glob-1aeed469ae5d1e8d7cbca51e8758b781/0.html
      md5sum: 604b6f1a4c641b7d308a4766d97cadb7
    - path: test-output/glob-1aeed469ae5d1e8d7cbca51e8758b781/sample_sample5.html
      md5sum: b86ce9e15ab8e1e9c45f467120c22649
    - path: test-output/glob-1aeed469ae5d1e8d7cbca51e8758b781/0.isize.png
      md5sum: 82a262d0bf2dadb02239272da490bba1
    - path: test-output/glob-1aeed469ae5d1e8d7cbca51e8758b781/0.mapq.png
      md5sum: d8c3af2eae5af12f1eb5dd9ec4e225bb
    - path: test-output/glob-1aeed469ae5d1e8d7cbca51e8758b781/style.css
      md5sum: a09ae01f70fa6d2461e37d5814ceb579
    - path: test-output/coverage.bw
      md5sum: afa224c2037829dccacea4a67b6fa84a
    - path: test-output/average_coverage_qc.json
      md5sum: 82ce31e21d361d52a7f19dce1988b827
    - path: test-output/bed_pearson_correlation_qc.json
      should_exist: false
```


$ caper debug [WORKFLOW_ID_OR_METADATA_JSON_FILE]

Thank you so much!

Best,

Jake Lehle

caper debug only works when you have a running server. For debugging you probably want to look at the contents of the workflow metadata JSON file. You can see the path in the error log you linked in the line containing "Wrote metadata file".

For debugging you typically want to look at the failed task's stdout/stderr; the metadata file contains their paths. If you could provide the contents of those files here, I'll be able to help more. The logs you've sent are fairly generic, so unfortunately I can't tell what the real issue is.
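Without a running Caper server, the task log paths can still be pulled straight out of the metadata JSON. The sketch below assumes, as in Cromwell's metadata format, that each task's log path is stored under a "stderr" key; it lists every such path (failed or not) using only grep and sed. The function name list_stderr_paths is hypothetical.

```shell
#!/bin/sh
# list_stderr_paths METADATA_JSON
# Hypothetical helper: print every task stderr path recorded in a workflow
# metadata JSON file, assuming paths appear as "stderr": "<path>".
# Pattern matching is used instead of a JSON parser to avoid extra tools.
list_stderr_paths() {
  metadata=$1
  # -o prints each match on its own line, even if the JSON is one long line.
  grep -o '"stderr": *"[^"]*"' "$metadata" |
    # Keep only the quoted path after the key.
    sed 's/^"stderr": *"\(.*\)"$/\1/' |
    # Drop duplicates (e.g. retried tasks pointing at the same file).
    sort -u
}
```

For example, run list_stderr_paths on the file named in the "Wrote metadata file" log line. A JSON-aware tool such as jq would be more robust if paths contain escaped quotes.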

Hi Paul,

Thanks for getting back to me so quickly and letting me know what that documentation means. I'll look there for troubleshooting this stuff in the future if the pipeline fails.

Here is that file. I'm going to go start a qRT-PCR run in our core and then come back and look over the file in more depth. I re-saved it as a .txt since that's the format I can upload here.

Thanks again,

Jake

Could you share the following files as well?

/home/shame/wgbs/encode/wgbs-pipeline/wgbs/0b655180-c147-4524-bed5-92d854487050/call-make_conf/execution/stdout

/home/shame/wgbs/encode/wgbs-pipeline/wgbs/0b655180-c147-4524-bed5-92d854487050/call-make_conf/execution/stderr

/home/shame/wgbs/encode/wgbs-pipeline/wgbs/0b655180-c147-4524-bed5-92d854487050/call-make_metadata_csv/execution/stdout

/home/shame/wgbs/encode/wgbs-pipeline/wgbs/0b655180-c147-4524-bed5-92d854487050/call-make_metadata_csv/execution/stderr

Those are some interesting files. Is the stdout the specific error code, and the stderr the error message associated with that code?

stderr_make_metadata_csv.txt

stderr_make_conf.txt

stdout_make_metadata_csv.txt

stdout_make_conf.txt

These two files capture the task's stdout and stderr. In this case the stdout contains just a single number (perhaps the PID), but it often contains useful logs.
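Since each task writes its logs to call-<task>/execution/stdout and call-<task>/execution/stderr under the workflow directory, as in the paths listed above, a quick way to surface errors is to print the tail of every non-empty stderr file. This is a minimal sketch assuming that local directory layout; show_task_errors is a hypothetical helper name. Scattered tasks add a shard-N level, which the */execution/stderr pattern still matches.

```shell
#!/bin/sh
# show_task_errors WORKFLOW_DIR [LINES]
# Hypothetical helper: for each non-empty stderr file found in an
# */execution/ directory under WORKFLOW_DIR, print its path followed by
# its last LINES lines (default 20).
show_task_errors() {
  wf_dir=$1
  lines=${2:-20}
  # In find, -path wildcards also match "/", so shard-N subdirectories match too.
  find "$wf_dir" -type f -path '*/execution/stderr' ! -empty | sort |
    while IFS= read -r f; do
      printf '== %s\n' "$f"
      tail -n "$lines" "$f"
    done
}
```

For the run in this issue that would be show_task_errors /home/shame/wgbs/encode/wgbs-pipeline/wgbs/0b655180-c147-4524-bed5-92d854487050.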

I'm seeing this in your stderr:

docker: Cannot connect to the Docker daemon at unix:///var/run/docker.sock. Is the docker daemon running?.

So it looks like Docker is not running. You can double-check by running sudo systemctl status docker. If it's not running, sudo systemctl start docker should bring it up.
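A check that does not depend on the init system is to ask the Docker CLI itself, since docker info exits non-zero when it cannot reach the daemon. A minimal pre-flight sketch (check_docker is a hypothetical name):

```shell
#!/bin/sh
# check_docker: succeed only if the Docker CLI can reach the daemon.
# `docker info` exits non-zero when the daemon socket is unreachable
# (and also when the current user lacks permission on the socket).
check_docker() {
  if docker info >/dev/null 2>&1; then
    echo "Docker daemon is running."
    return 0
  fi
  echo "Cannot reach the Docker daemon; start it before running the pipeline." >&2
  return 1
}
```

Used as a guard, check_docker && caper run wgbs-pipeline.wdl -i tests/functional/json/test_wgbs.json --docker only launches the run when the daemon answers. If the check fails, running docker info directly shows whether the cause is a stopped daemon or a socket permission problem.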

Didn't Caper automatically show the contents of stderr of the failed task?

Can you upload Caper's full log? It should contain something like:

* Recursively finding failures ...

...

Haha, well, now I'm embarrassed. That was an easy fix. @leepc12 yes, it did, but I'm still new to Caper, so I was having trouble reading the outputs and figuring out where things broke down. I should pick it up more quickly now. Knowing which directories to look in for the stderr will also be helpful in the future.

I'm actually running Linux on my Windows machine using WSL.

Those two commands should have worked, but they don't under WSL because, I believe, WSL doesn't actually run systemd.

What does work is:

$ sudo service docker start

The pipeline is working now, and I learned a good lesson: check that the Docker daemon is running before trying to run a pipeline with it.
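To choose between the systemctl and service commands automatically, a common heuristic is that the directory /run/systemd/system exists only when systemd is the running init system. The sketch below assumes that heuristic holds on the machine in question. It prints the command rather than running it; docker_start_cmd and the SYSTEMD_RUN_DIR override are hypothetical names added for illustration and testing.

```shell
#!/bin/sh
# docker_start_cmd: print the command likely to start the Docker daemon here.
# Assumption: /run/systemd/system exists only when systemd is the init system;
# otherwise (e.g. WSL without systemd) fall back to the `service` wrapper.
# SYSTEMD_RUN_DIR is a hypothetical override used only for testing.
docker_start_cmd() {
  if [ -d "${SYSTEMD_RUN_DIR:-/run/systemd/system}" ]; then
    echo "sudo systemctl start docker"
  else
    echo "sudo service docker start"
  fi
}
```

After reviewing the printed command, it can be executed with sh -c "$(docker_start_cmd)".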

You guys can close this issue in record time.

But while I have you: the next steps are to use croo to output the results as an HTML page plus other TSV and QC files, and then, it looks like, to use qc2tsv to merge the QC files into a single TSV I can poke through. Do you have any recommendations on the best way to view those files, either the HTML or the TSV? I've recently started using Shiny servers to work with files like these in an interactive web application. Is that what these outputs were intended for? Most of the documentation seems to end after generating the HTML files with croo, so I'm curious what you do to view them easily. I can dig into everything else and try to set something up.

For this pipeline, the qc_report task actually emits HTML already, see html_assets here. I typically just open the HTML files for inspection in Chrome.

Croo is more useful for presenting pipeline outputs in a tabular format. qc2tsv will be useful if you want to merge the JSON QC from multiple pipeline runs into a single flat file.
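As a sketch of the multi-run case, the helper below gathers QC JSON files with the same name from several output directories and passes them all to qc2tsv. It assumes qc2tsv is installed and, as its README describes, takes QC JSON paths as arguments and writes the merged TSV to stdout; whether a particular WGBS QC file (for example gembs_map_qc.json) is accepted is untested here. merge_qc_jsons and its argument order are hypothetical.

```shell
#!/bin/sh
# merge_qc_jsons NAME OUT_TSV RUN_DIR...
# Hypothetical helper: find every file called NAME under the given pipeline
# output directories and merge them with qc2tsv into OUT_TSV.
merge_qc_jsons() {
  name=$1
  out=$2
  shift 2
  # `-exec ... {} +` passes many paths per qc2tsv call; with a very large
  # number of files, find may split them over several calls (one header each).
  find "$@" -type f -name "$name" -exec qc2tsv {} + > "$out"
}
```

For example: merge_qc_jsons gembs_map_qc.json merged_map_qc.tsv RUN_DIR_1 RUN_DIR_2, where the RUN_DIR arguments are placeholders for each run's output directory.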

Oh cool, okay, I'll keep that in mind for merging multiple pipeline runs.

Okay I can open the html file and I get something like this.

I was just curious about ways to make the croo outputs more portable. Because I'm running the pipeline in a virtual machine with WSL, I haven't figured out a way to forward output from the terminal to an X11 server on my Windows computer so I can run Chrome with a GUI. I usually move files from the Linux side into a shared volume and open them from Windows, but that breaks all the file paths. I have a Linux desktop at home, so I'll rerun the pipeline there and look at all of the outputs.
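When moving outputs from WSL to the Windows side (Windows drives are mounted under /mnt, e.g. /mnt/c), one likely cause of broken files is copying symbolic links rather than the files they point to. A minimal sketch, assuming the report's HTML, CSS and PNG files sit together in one directory and reference each other by relative paths: copy that directory with -L so links are dereferenced. export_report and the destination below are hypothetical.

```shell
#!/bin/sh
# export_report SRC_DIR DEST_DIR
# Hypothetical helper: copy the contents of SRC_DIR (e.g. the directory holding
# ENCODE.html, style.css and the PNG plots) into DEST_DIR. -L follows symlinks,
# so the copy contains real files instead of links back into the Linux side;
# relative references between files in SRC_DIR keep working.
export_report() {
  src=$1
  dest=$2
  mkdir -p "$dest"
  # "$src"/. copies the directory's contents rather than the directory itself.
  cp -RL "$src"/. "$dest"/
}
```

For example: export_report REPORT_DIR /mnt/c/Users/YOUR_NAME/wgbs_report, with both arguments as placeholders, and then open the copied HTML from Windows.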

I'll also look into the soft linking of output files mentioned in the README, in case research groups are using AWS or Google Cloud Platform. That would probably be a better setup for running this on our school's HPC.

Anyway, thanks so much for helping me out with this.

Best,

Jake Lehle