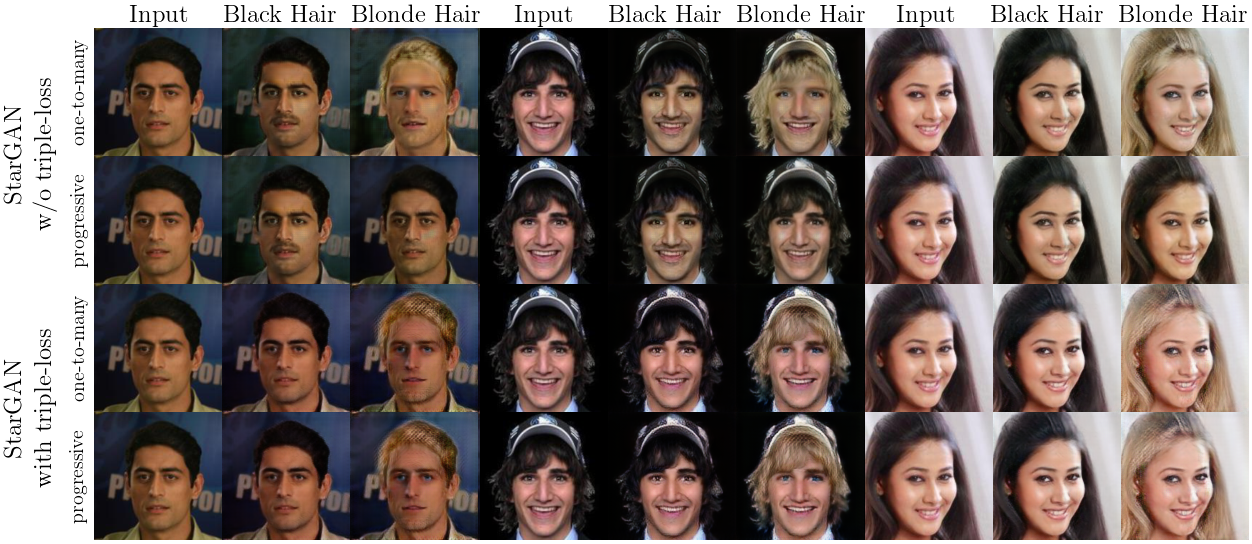

This repository contains a modified version of StarGAN, trained with the triple consistency loss presented in our preprint.
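As a rough illustration, the snippet below sketches the triple consistency loss under an assumed form: translating an image to an intermediate target `a` and then to target `b` should give the same result as translating the original image directly to `b`, i.e. a penalty on |G(G(x, a), b) - G(x, b)|. The L1 norm, the mean reduction, and the use of NumPy are assumptions made to keep the example self-contained; please refer to the preprint for the exact formulation and its weighting in the full objective. The toy generators and scalar targets are hypothetical stand-ins (in StarGAN the target domain is a label vector fed to the generator alongside the image).

```python
import numpy as np


def triple_consistency_loss(generator, x, target_a, target_b):
    """Assumed form: mean L1 distance between G(G(x, a), b) and G(x, b)."""
    # Path 1: go through an intermediate generated image first.
    via_intermediate = generator(generator(x, target_a), target_b)
    # Path 2: translate the original input directly to the final target.
    direct = generator(x, target_b)
    return float(np.mean(np.abs(via_intermediate - direct)))


# Hypothetical toy generators, for illustration only.
def ideal_generator(img, target):
    # Output depends only on the target, so both paths agree.
    return np.full_like(img, target, dtype=float)


def additive_generator(img, target):
    # Output keeps a trace of the intermediate target, so the paths differ.
    return img + target


x = np.zeros((2, 3, 4, 4))  # batch of 2 RGB 4x4 "images"
print(triple_consistency_loss(ideal_generator, x, 0.3, 0.7))
print(triple_consistency_loss(additive_generator, x, 0.3, 0.7))
```

A generator that leaves a trace of the intermediate target in its output is penalised, which is the property that makes feeding generated images back into the network (as in progressive generation) better behaved.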

If you use this code, please cite the original StarGAN paper (of course!) as well as our preprint. For further details on how to use StarGAN, please refer to the original repository.

To try progressive image generation, set `config = 'progressive'` (see `main.py` for further details). This mode has only been implemented for the single-dataset case.

```bibtex
@article{Sanchez2018Gannotation,
  title={Triple consistency loss for pairing distributions in GAN-based face synthesis},
  author={Enrique Sanchez and Michel Valstar},
  journal={arXiv preprint arXiv:1811.03492},
  year={2018}
}

@InProceedings{StarGAN2018,
  author = {Choi, Yunjey and Choi, Minje and Kim, Munyoung and Ha, Jung-Woo and Kim, Sunghun and Choo, Jaegul},
  title = {StarGAN: Unified Generative Adversarial Networks for Multi-Domain Image-to-Image Translation},
  booktitle = {The IEEE Conference on Computer Vision and Pattern Recognition (CVPR)},
  month = {June},
  year = {2018}
}
```