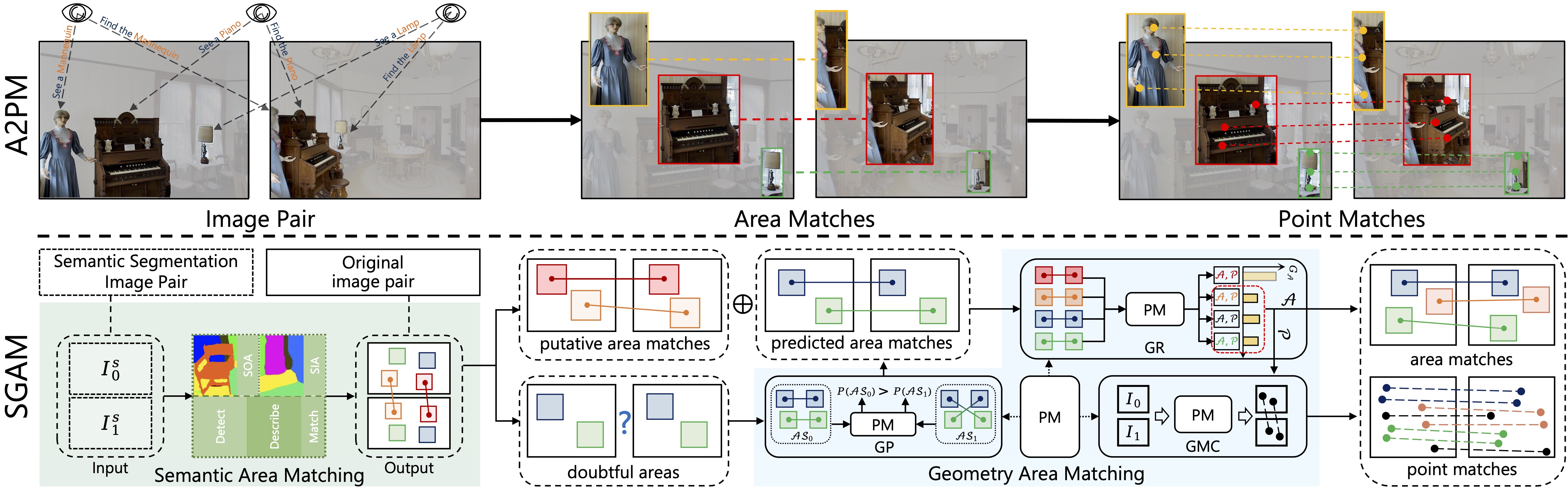

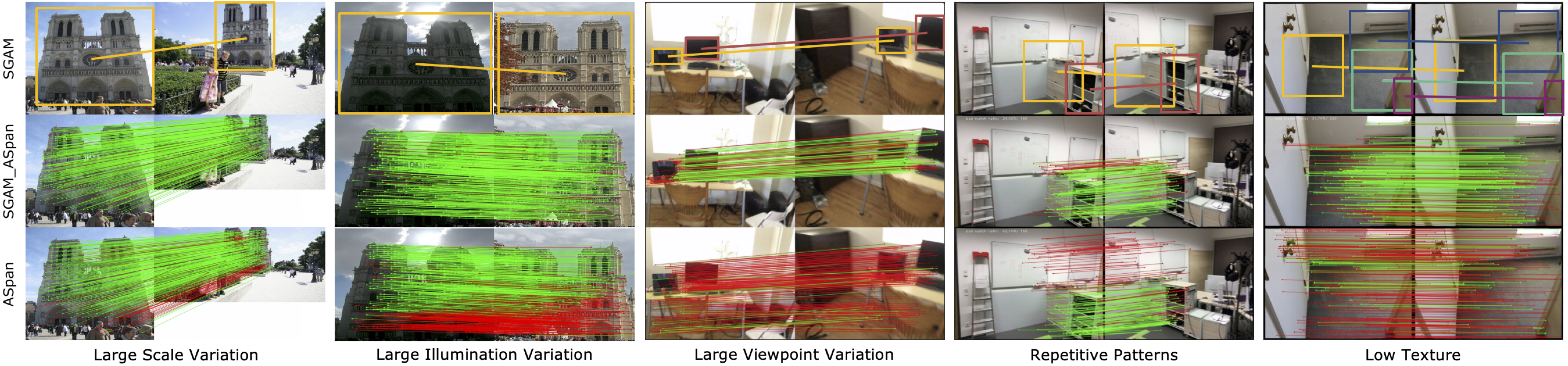

This is the official code release for the paper "Searching from Area to Point: A Semantic Guided Framework with Geometric Consistency for Accurate Feature Matching" by Yesheng Zhang, Xu Zhao and Dahong Qian.

See also a refactored version here.

- Main Code release
  - SAM
  - GAM
- Demo Code construction
  - SAM demo @ 2024-06-05
  - SGAM demo @ 2024-06-06
- ReadMe Complete

You can install torch as follows:

    conda create -n sgam_aspan python==3.8
    conda activate sgam_aspan
    pip install torch==2.0.0+cu118 torchvision==0.15.1+cu118 torchaudio==2.0.1 --index-url https://download.pytorch.org/whl/cu118
    pip install -r requirements.txt

Point matchers including SuperPoint, SuperGlue, ASpanFormer, LoFTR, QuadTree and DKM can share the same conda environment.

Please follow the instructions in ASpanFormer.

Note that ASpanFormer can share the SGAM environment. There is no need to create a new conda environment for ASpanFormer; just install its required packages in the existing `sgam_aspan` environment.

Similarly, the other point matchers mentioned above can be installed in the same `sgam_aspan` environment.
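Because several matchers share one environment, it can help to confirm that a later install has not replaced the pinned torch packages. The following is a minimal sketch, not part of this repository: the helper names (`base_version`, `find_mismatches`, `installed_versions`) are hypothetical, and the expected versions are copied from the pip command above.

```python
from importlib import metadata

# Versions copied from the pip command in this README.
EXPECTED = {
    "torch": "2.0.0+cu118",
    "torchvision": "0.15.1+cu118",
    "torchaudio": "2.0.1",
}


def base_version(version):
    """Drop a local build tag such as '+cu118' before comparing."""
    return version.split("+", 1)[0]


def find_mismatches(installed, expected=EXPECTED):
    """Return {package: (expected, found)} for missing or differing packages."""
    mismatches = {}
    for name, want in expected.items():
        have = installed.get(name)
        if have is None or base_version(have) != base_version(want):
            mismatches[name] = (want, have)
    return mismatches


def installed_versions(names):
    """Look up installed versions; a package that is not installed maps to None."""
    found = {}
    for name in names:
        try:
            found[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    problems = find_mismatches(installed_versions(EXPECTED))
    for name, (want, have) in problems.items():
        print(f"{name}: expected {want}, found {have}")
    if not problems:
        print("torch packages match the pinned versions")
```

Run it inside the activated `sgam_aspan` environment after installing each additional matcher. The comparison ignores the `+cu118` build tag, so it checks release numbers only, not the CUDA variant.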

The demo for semantic area matching is provided in ./demo/semantic_area_match_demo.py.

You can directly run it as:

    cd demo
    python semantic_area_match_demo.py

This will match the image 0.jpg with 5.jpg, which are provided in /opt/data/private/SGAM/demo/demo_data, using the ground truth semantic labels.

The image selection can be adjusted in the script:

    SAMer_cfg = assemble_config_with_scene_pair(SAMer_cfg, scene="scene0002_00", pair0="0", pair1="5", out_path="")

After running, the results can be found in demo/demo_sam_res, including area matches, doubtful area pairs, semantic object areas and semantic intersection areas, like the ones we provide.
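To try more than one image pair, the keyword arguments passed to `assemble_config_with_scene_pair` could be generated in a loop. This is only a sketch: `pair_kwargs` is a hypothetical helper that is not part of the repository, and only the images `0` and `5` are confirmed to exist in the demo data.

```python
from itertools import combinations


def pair_kwargs(scene, image_names, out_path=""):
    """Build one keyword-argument dict per unordered pair of image names."""
    return [
        {"scene": scene, "pair0": first, "pair1": second, "out_path": out_path}
        for first, second in combinations(image_names, 2)
    ]


for kwargs in pair_kwargs("scene0002_00", ["0", "5"]):
    # Inside the demo script, this would stand in for the hard-coded call:
    # SAMer_cfg = assemble_config_with_scene_pair(SAMer_cfg, **kwargs)
    print(kwargs)
```

Image names are passed as strings without the `.jpg` extension, mirroring the `pair0="0", pair1="5"` call in the demo script.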

The semantic area matching results are shown below:

The doubtful area pairs are shown below:

The code for the combination with SEEM will be released soon.

We are still working on the SGAM demo, including refactoring the code due to the complexity of the configuration of SGAM. We will release the refactored code soon.

You can run the demo for SGAM as:

    cd demo
    python sgam_demo.py

After that, the results will be saved in demo/demo_sgam_res, including the predicted doubtful area pairs (results of GP) and the rejected area pairs (results of GR).

The results of GP are shown below:

We also report the pose errors of the baseline (ASpanFormer) and of our SGAM. You should see:

    | SUCCESS | __main__:pose_eval:93 - calc pose with 1000 corrs
    2024-06-06 23:19:32.632 | SUCCESS | utils.geo:compute_pose_error_simp:1292 - use len=1000 corrs to eval pose err = t-14.6511, R-0.3452
    2024-06-06 23:19:32.632 | SUCCESS | __main__:<module>:207 - ori pose error: [0.3452374520071945, 14.651053301930869]
    2024-06-06 23:19:32.633 | SUCCESS | __main__:pose_eval:93 - calc pose with 1000 corrs
    2024-06-06 23:19:32.690 | SUCCESS | utils.geo:compute_pose_error_simp:1292 - use len=1000 corrs to eval pose err = t-10.8309, R-0.2458
    2024-06-06 23:19:32.691 | SUCCESS | __main__:<module>:213 - SGAM-5.0 pose error: [0.24582250341773104, 10.830935884665799]

In this case, the pose error is reduced from 0.3452 to 0.2458 for rotation and from 14.6511 to 10.8309 for translation.

Note that the images in this case come from the training set of ASpanFormer, and SGAM can still further improve its performance.
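The reduction quoted above can be recomputed from the demo log. The sketch below is not part of the repository: `parse_pose_errors` and `relative_reduction` are hypothetical helpers, and the regular expression assumes the log keeps the `<label> pose error: [R, t]` layout shown in the sample output.

```python
import re

# Matches e.g. "... - ori pose error: [0.3452, 14.6511]" (rotation first, then translation).
POSE_LINE = re.compile(
    r" - (?P<label>\S+) pose error: \[(?P<rot>[^,\]]+),\s*(?P<trans>[^,\]]+)\]"
)

# The two summary lines from the sample output in this README.
LOG = """\
2024-06-06 23:19:32.632 | SUCCESS | __main__:<module>:207 - ori pose error: [0.3452374520071945, 14.651053301930869]
2024-06-06 23:19:32.691 | SUCCESS | __main__:<module>:213 - SGAM-5.0 pose error: [0.24582250341773104, 10.830935884665799]
"""


def parse_pose_errors(log_text):
    """Return {label: (rotation_error, translation_error)} found in the log."""
    errors = {}
    for match in POSE_LINE.finditer(log_text):
        errors[match["label"]] = (float(match["rot"]), float(match["trans"]))
    return errors


def relative_reduction(before, after):
    """Percentage by which `after` is lower than `before`."""
    return 100.0 * (before - after) / before


errors = parse_pose_errors(LOG)
baseline_rot, baseline_trans = errors["ori"]
sgam_rot, sgam_trans = errors["SGAM-5.0"]
print(f"rotation error reduced by {relative_reduction(baseline_rot, sgam_rot):.1f}%")
print(f"translation error reduced by {relative_reduction(baseline_trans, sgam_trans):.1f}%")
```

For the sample log, this gives a relative reduction of roughly 28.8% in rotation error and 26.1% in translation error.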

If you find this code helpful, please cite:

    @article{zhang2023searching,
      title={Searching from Area to Point: A Hierarchical Framework for Semantic-Geometric Combined Feature Matching},
      author={Zhang, Yesheng and Zhao, Xu and Qian, Dahong},
      journal={arXiv preprint arXiv:2305.00194},
      year={2023}
    }

We thank the authors of SuperPoint, SuperGlue, ASpanFormer, LoFTR, QuadTree and DKM for their great work.

We also thank the authors of SEEM for their great work.