This is a side project initiated by Jasmine (Jingyi) Sun and Edward (Haoran) Li.

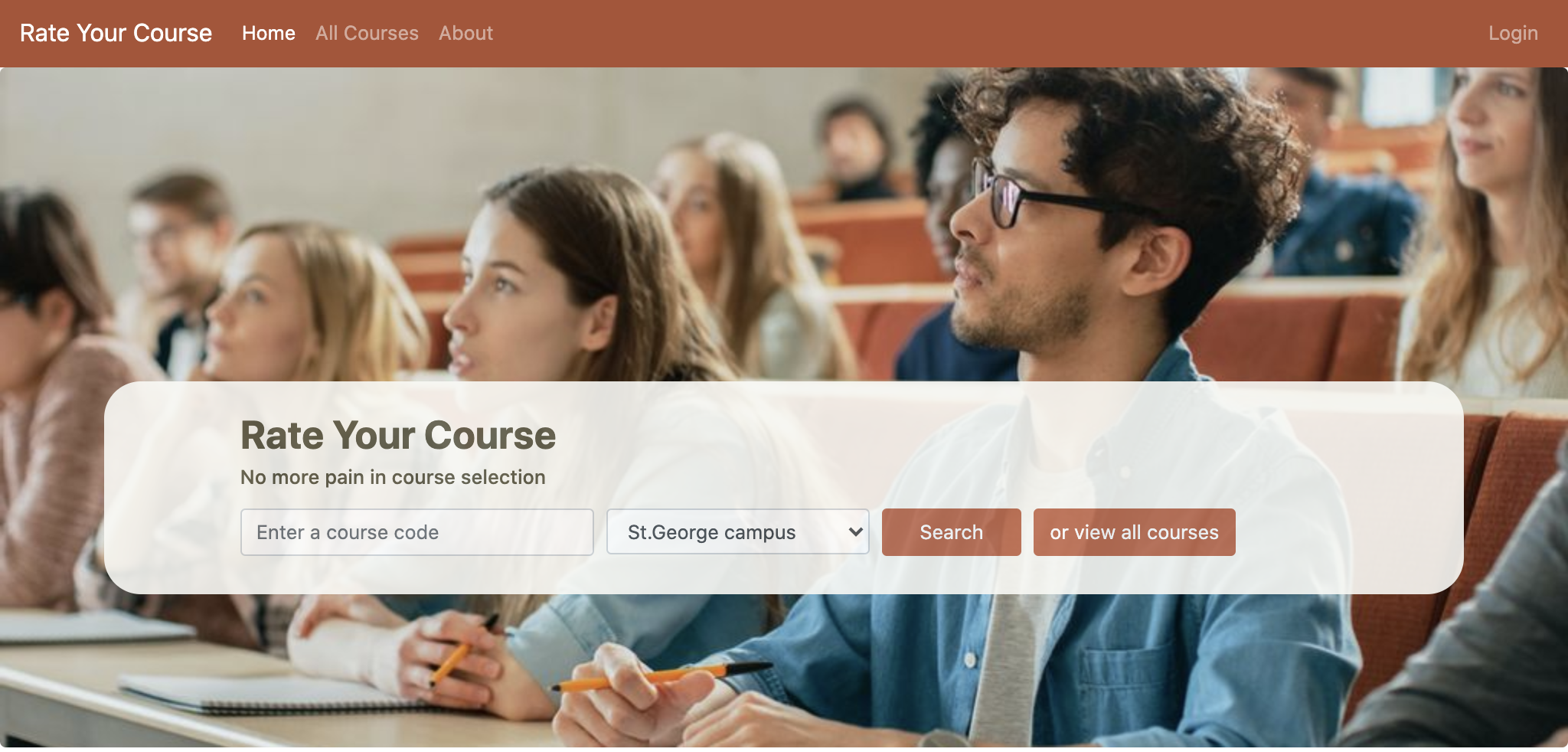

This project was started with the mission of helping undergraduate students at the University of Toronto make better decisions when enrolling in courses each semester. It does so by organizing and analyzing public data from reddit.com/r/uoft, the subreddit for U of T. The project is presented as a website and consists of three major parts: web front-end (by Jasmine), web back-end (by Jasmine), and algorithms (by Edward).

This repository contains:

- The algorithm folder, which contains all the Python code that processes the raw data from Reddit.

- All the remaining files, which are components of the web implementation.

As students at the University of Toronto (and we bet it is the same at many other universities), choosing courses at the beginning of every semester is always a torturous process for us. As two "senior" users of reddit.com, we found that many students share their thoughts and all aspects of their experience on this social platform. With all this in mind, a brilliant idea came to us:

Can we extract those students' thoughts about each course from Reddit, process them, and then present the results to our community in a visual form?

And so this side project was born.

In this section, we introduce how we extract data from Reddit and generate the JSON file used by the web page.

The first step is crawling all the course codes and their corresponding information into a file. Luckily, the easy-to-use U of T academic calendar let us download an HTML file with all the information we need. By applying regular expressions to that file, we are able to locate the specific strings we need.
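As a rough illustration of this step, the sketch below pulls course codes out of an HTML string with a regular expression. The pattern (three letters, three digits, `H` or `Y`, one digit) and the sample HTML are assumptions made for this example, not the exact expression or calendar markup used in the project.

```python
import re

# Assumed shape of a course code, e.g. "CSC108H1" or "MAT137Y1":
# three letters, three digits, H or Y, then one digit.
COURSE_CODE = re.compile(r"\b[A-Z]{3}\d{3}[HY]\d\b")


def extract_course_codes(html: str) -> list[str]:
    """Return the unique course codes found in the HTML, in first-seen order."""
    # dict.fromkeys removes duplicates while preserving order.
    return list(dict.fromkeys(COURSE_CODE.findall(html)))


# Hypothetical snippet standing in for the downloaded calendar page.
sample_html = """
<h3>CSC108H1 - Introduction to Computer Programming</h3>
<p>Exclusion: CSC148H1</p>
<h3>MAT137Y1 - Calculus with Proofs</h3>
<h3>CSC108H1 - Introduction to Computer Programming</h3>
"""

print(extract_course_codes(sample_html))
# ['CSC108H1', 'CSC148H1', 'MAT137Y1']
```

A pattern this loose will also match codes mentioned in prerequisites or exclusions, so a real extraction would likely need to anchor on the surrounding markup.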

The next step is downloading the raw data from Reddit. The Python library we used is praw (documentation), a Python wrapper for Reddit's official API. We searched for each course code in the U of T subreddit via praw, downloaded the posts from the search results, and converted each course into a "course complex".
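A minimal sketch of this step might look like the following. The function names and the dictionary layout of the "course complex" are hypothetical, chosen only for illustration; `reddit` is assumed to be an already configured `praw.Reddit` instance, and the demo uses stand-in post objects so it runs without network access or credentials.

```python
from types import SimpleNamespace


def search_course_posts(reddit, course_code, limit=50):
    """Search r/uoft for a course code.

    `reddit` is assumed to be a configured praw.Reddit instance.
    """
    return reddit.subreddit("uoft").search(course_code, limit=limit)


def build_course_complex(course_code, posts):
    """Gather the title and body text of each post under one course.

    The returned layout is a hypothetical stand-in for the project's
    "course complex".
    """
    texts = []
    for post in posts:
        texts.append(post.title)
        if post.selftext:  # link posts have an empty body
            texts.append(post.selftext)
    return {"code": course_code, "texts": texts}


# Offline demo: objects with the same attributes as praw submissions.
fake_posts = [
    SimpleNamespace(title="CSC108H1 thoughts?", selftext="Great intro course."),
    SimpleNamespace(title="Is CSC108H1 hard", selftext=""),
]
course_complex = build_course_complex("CSC108H1", fake_posts)
print(course_complex["texts"])
```

With real credentials, the output of `search_course_posts(reddit, "CSC108H1")` could be passed directly as `posts`.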

After gathering all the related information from Reddit, we combine the strings in the course complex into one long string. We pass this string to nltk, a natural language toolkit, to filter out unimportant words and keep only nouns and adjectives. Then we count the frequency of these words and pass the frequencies to the wordcloud library to generate a word cloud image.
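The filtering and counting can be sketched as below. This is an illustrative outline under stated assumptions, not the project's actual code: the function names are hypothetical, `tag_words` needs nltk and its tokenizer and tagger data to be installed, and the demo uses a hand-tagged sample (in the Penn Treebank tag format that `nltk.pos_tag` returns) so that the counting step runs on its own.

```python
from collections import Counter


def tag_words(text):
    """Tokenize and part-of-speech tag text with nltk."""
    import nltk  # imported lazily so the counting step runs without nltk

    return nltk.pos_tag(nltk.word_tokenize(text))


def keyword_frequencies(tagged_words):
    """Count nouns (tags NN*) and adjectives (tags JJ*) from (word, tag) pairs."""
    kept = [
        word.lower()
        for word, tag in tagged_words
        if tag.startswith(("NN", "JJ"))
    ]
    return Counter(kept)


# Hand-tagged sample standing in for tag_words(...) output.
tagged = [
    ("The", "DT"), ("lectures", "NNS"), ("were", "VBD"), ("great", "JJ"),
    ("but", "CC"), ("the", "DT"), ("assignments", "NNS"), ("were", "VBD"),
    ("long", "JJ"), ("Great", "JJ"), ("lectures", "NNS"),
]
freq = keyword_frequencies(tagged)
print(freq)
```

A frequency mapping like `freq` is the kind of input that the wordcloud library's `WordCloud().generate_from_frequencies(...)` accepts when rendering the image.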

To quantitatively reflect how students feel about a course, we measure the sentiment of each sentence in the course complex, where a negative value means negative feeling and a positive value means positive feeling. We do so using another natural language processing library, textblob. We then average the sentiment values and convert the result into a percentage, so that our beloved users can compare how the public feels about different courses.
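The averaging step can be sketched as follows. The text above does not specify how the average becomes a percentage, so the linear mapping from textblob's polarity range of [-1, 1] onto [0, 100] is an assumption, and both function names are hypothetical. `sentence_polarities` needs textblob and its corpora installed; the demo calls only the pure arithmetic function.

```python
def sentence_polarities(text):
    """Polarity of each sentence, in [-1.0, 1.0], computed with textblob."""
    from textblob import TextBlob  # imported lazily; not needed for the demo

    return [sentence.sentiment.polarity for sentence in TextBlob(text).sentences]


def sentiment_percentage(polarities):
    """Average the polarities and map [-1, 1] linearly onto [0, 100].

    The linear mapping is an assumption made for this sketch.
    Returns None when there are no sentences to average.
    """
    if not polarities:
        return None
    mean = sum(polarities) / len(polarities)
    return round((mean + 1) / 2 * 100, 1)


print(sentiment_percentage([0.5, -0.25, 0.5]))  # mean 0.25 -> 62.5
```

Under this mapping, 50% corresponds to a neutral average, and values above or below it lean positive or negative.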

- Frontend: EJS, Bootstrap, CSS, jQuery, Ajax

- Backend: Node.js, Express.js, MongoDB, Mongoose

- Other technologies: Heroku, Google API

Two authentication strategies are implemented: ID/password login and Google login. For security, the JavaScript library Passport.js is used so that a user's password is encrypted before it is saved to the database.

The server is implemented with Express.js to handle requests/responses and web page routing. Users can browse course reviews:

- by entering the course code in the landing page

- by visiting the all-courses page, with options to sort by alphabetical order, popularity, sentiment score, or monthly visit count
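The ordering options on the all-courses page can be illustrated with a small sketch. It is written in Python only to match the algorithm code in this repository; the site itself runs on Express.js and Mongoose, and the field names, option names, and sample records below are all hypothetical.

```python
# Each option maps to (sort key, descending?). Field names are assumptions.
SORT_OPTIONS = {
    "alphabet": (lambda c: c["code"], False),
    "popularity": (lambda c: c["post_count"], True),
    "sentiment": (lambda c: c["sentiment"], True),
    "visits": (lambda c: c["monthly_visits"], True),
}


def sort_courses(courses, by="alphabet"):
    """Return a new list of course records ordered by the chosen option."""
    key, descending = SORT_OPTIONS[by]
    return sorted(courses, key=key, reverse=descending)


# Made-up records for demonstration only.
courses = [
    {"code": "MAT137Y1", "post_count": 40, "sentiment": 48.0, "monthly_visits": 310},
    {"code": "CSC108H1", "post_count": 55, "sentiment": 71.5, "monthly_visits": 120},
    {"code": "PSY100H1", "post_count": 12, "sentiment": 66.0, "monthly_visits": 500},
]

print([c["code"] for c in sort_courses(courses, by="sentiment")])
# ['CSC108H1', 'PSY100H1', 'MAT137Y1']
```

Keeping the options in one table means adding a new ordering only requires a new entry rather than another branch.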

After logging in, users may post reviews or upvote/downvote content scraped from Reddit.