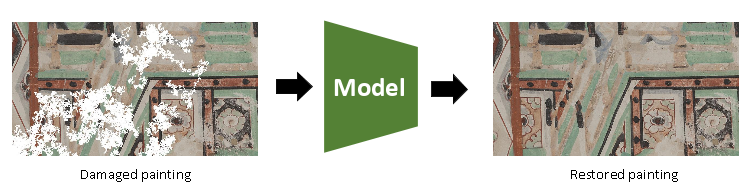

In this challenge, you are asked to restore damaged paintings, applying the knowledge and skills learned in the DLCV lessons. This is an image inpainting task, and you are encouraged to search for related resources on your own.

The original challenge website is provided here.

To get the dataset for this challenge, please use the following command:

bash download.sh

All the data is under ./Data_Challenge2, and the dataset is arranged as follows:

- train/ : 400 damaged images (`*_masked.jpg`) and corresponding masks (`*_mask.jpg`) for training.

- train_gt/ : 400 ground truth images (`*.jpg`) for training.

- test/ : 100 damaged images (`*_masked.jpg`) and corresponding masks (`*_mask.jpg`) for testing.

- test_gt/ : 100 ground truth images (`*.jpg`) for testing.
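The layout above can be walked with a small helper. This is a minimal sketch, not part of the provided code: the function name `list_samples` is hypothetical, and it assumes that a damaged image, its mask, and its ground truth share the same file-name stem (for example `1_masked.jpg`, `1_mask.jpg`, and `1.jpg`), which you should verify against the downloaded data.

```python
import os


def list_samples(data_root, split="train"):
    """Return (damaged, mask, ground_truth) path triples for one split.

    Assumption: the three files of a sample share one stem, e.g.
    <stem>_masked.jpg, <stem>_mask.jpg and <stem>.jpg.
    """
    image_dir = os.path.join(data_root, split)
    gt_dir = os.path.join(data_root, split + "_gt")
    suffix = "_masked.jpg"
    samples = []
    for name in sorted(os.listdir(image_dir)):
        if not name.endswith(suffix):
            continue  # skip the *_mask.jpg files and anything else
        stem = name[: -len(suffix)]
        samples.append((
            os.path.join(image_dir, name),
            os.path.join(image_dir, stem + "_mask.jpg"),
            os.path.join(gt_dir, stem + ".jpg"),
        ))
    return samples
```

Calling `list_samples("./Data_Challenge2", "train")` would then be expected to yield 400 triples if the assumed naming holds.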

Please note that you cannot use the testing data (i.e. test/ and test_gt/) to train your model.

Deadline: 2020/1/13 (Mon.) 23:59 GMT+8. Late submissions will not be accepted.

- Taking any unfair advantage over other class members (or letting anyone do so) is strictly prohibited. Violating university policy will result in an F grade for this course (NOT negotiable).

- If you refer to some parts of the public code, you are required to specify the references in your report (e.g. URL to GitHub repositories).

- You are encouraged to discuss homework assignments with your fellow class members, but you must complete the assignment by yourself. TAs will compare the similarity of everyone’s submission. Any form of cheating or plagiarism will not be tolerated and will also result in an F grade for students with such misconduct.

Aside from your own Python scripts and model files, you should make sure that your submission includes at least the following files in the root directory of this repository:

- final_poster.pdf

  The poster used in your final presentation. You need to make sure all the experimental results are in this poster.

- final.sh

  You need to provide a Linux shell script to reproduce your results.

We will execute the shell script in the following manner:

CUDA_VISIBLE_DEVICES=GPU_NUMBER bash final.sh $1 $2

- `$1` is the source folder of testing images (e.g. `Data_Challenge2/test/`; the contents are the same as `Data_Challenge2/test/`).

- `$2` is the folder in which to save the restored (predicted) images (e.g. `Data_Challenge2/pred/`). If the input image is named xxx.jpg, the output image should also be named xxx.jpg. (Please do not create this folder in your code or shell script.)

⚠️ IMPORTANT NOTE⚠️

- For the sake of conformity, please use the `python3` command to call your `.py` files in all your shell scripts. Do not use `python` or other aliases, otherwise your commands may fail in our autograding scripts.

- You must not use commands such as rm, sudo, CUDA_VISIBLE_DEVICES, cp, mv, mkdir, cd, pip or other commands to change the Linux environment.

- We will execute your code on a Linux system, so please make sure your code runs on Linux before submitting your homework.

- DO NOT hard code any path in your file or script except for the path of your trained model.

- The execution time of your testing code should not exceed an allowed maximum of 10 minutes.

- Use the wget command in your script to download your model files. Do not use the curl command.

- Do not delete your trained model before the TAs disclose your homework score and before you make sure that your score is correct.

- If you use matplotlib in your code, please add `matplotlib.use("Agg")` to your code, or we will not be able to execute it.

- Do not use imshow() or show() in your code or your code will crash.

- Use os.path.join to deal with path issues as often as possible.

- Please do not upload the training information generated by TensorBoard to GitHub.
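The calling convention and the path rules above could be followed by an inference script along these lines. It is only a sketch under stated assumptions: the script name `predict.py` and the functions `plan_outputs` and `main` are hypothetical, the model call is left as a placeholder, and the mask is assumed to share its stem with the damaged image. All paths come from the two arguments and are built with `os.path.join`, and the output folder is not created by the script.

```python
import os
import sys


def plan_outputs(input_dir, output_dir):
    """Map each damaged image in input_dir to (damaged, mask, output) paths."""
    suffix = "_masked.jpg"
    jobs = []
    for name in sorted(os.listdir(input_dir)):
        if not name.endswith(suffix):
            continue  # masks are looked up from the damaged image's stem
        stem = name[: -len(suffix)]
        damaged_path = os.path.join(input_dir, name)
        # Assumption: the mask shares the stem of the damaged image.
        mask_path = os.path.join(input_dir, stem + "_mask.jpg")
        # The output keeps the input file name, per the naming rule stated above.
        output_path = os.path.join(output_dir, name)
        jobs.append((damaged_path, mask_path, output_path))
    return jobs


def main(argv):
    """argv mirrors sys.argv: [script, input_dir ($1), output_dir ($2)]."""
    if len(argv) != 3:
        print("usage: python3 predict.py <input_dir> <output_dir>")
        return 1
    input_dir, output_dir = argv[1], argv[2]
    for damaged_path, mask_path, output_path in plan_outputs(input_dir, output_dir):
        # Placeholder: load the two images, run your model, save to output_path.
        print(damaged_path, "->", output_path)
    return 0
```

In this sketch, `final.sh` would contain a line such as `python3 predict.py $1 $2`, and the script would end by calling `sys.exit(main(sys.argv))`. Check the output file names against what evaluate.py expects before submitting.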

The final project should be done using Python 3.6, and you can use all of the Python 3.6 standard libraries. For the list of third-party packages allowed in this assignment, please refer to requirements.txt. You can run the following command to install all the packages listed in requirements.txt:

pip3 install -r requirements.txt

Note that using packages with different versions will very likely lead to compatibility issues, so make sure that you install the correct version if one is specified above. E-mail or ask the TAs first if you want to import other packages.

We evaluate the quality of your restored images with Mean Square Error (MSE) and Structural Similarity Index (SSIM). We provide the script (evaluate.py) to evaluate the performance of your model for you. You can use the following command to evaluate your model.

python3 evaluate.py -g $1 -p $2

- `$1`: folder of ground truth images (e.g. `./Data_Challenge2/test_gt/`).

- `$2`: folder of your predicted images (e.g. `Data_Challenge2/pred/`).

The baseline scores are:

| | MSE | SSIM |
|---|---|---|
| Baseline score | 92 | 0.79 |

To get the baseline points (5%), your MSE must be LOWER than the baseline MSE and your SSIM must be HIGHER than the baseline SSIM simultaneously. As two scores are used to evaluate the performance of your algorithm, we will use the following equation to calculate your final score. We will use this final score to rank your team among the class.

Final score = 1 - MSE/100 + SSIM
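As a worked example of the formula and the baseline rule, the following sketch computes both from an MSE and SSIM pair. The function names are hypothetical and this is not the grading code; the constants are the baseline values from the table above.

```python
BASELINE_MSE = 92.0
BASELINE_SSIM = 0.79


def final_score(mse, ssim):
    """Final score = 1 - MSE/100 + SSIM (higher is better)."""
    return 1.0 - mse / 100.0 + ssim


def beats_baseline(mse, ssim):
    """Both conditions must hold at once: lower MSE and higher SSIM."""
    return mse < BASELINE_MSE and ssim > BASELINE_SSIM
```

With the baseline values themselves, the final score is 1 - 0.92 + 0.79, which is about 0.87, and `beats_baseline(92, 0.79)` is false because the comparisons are strict.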