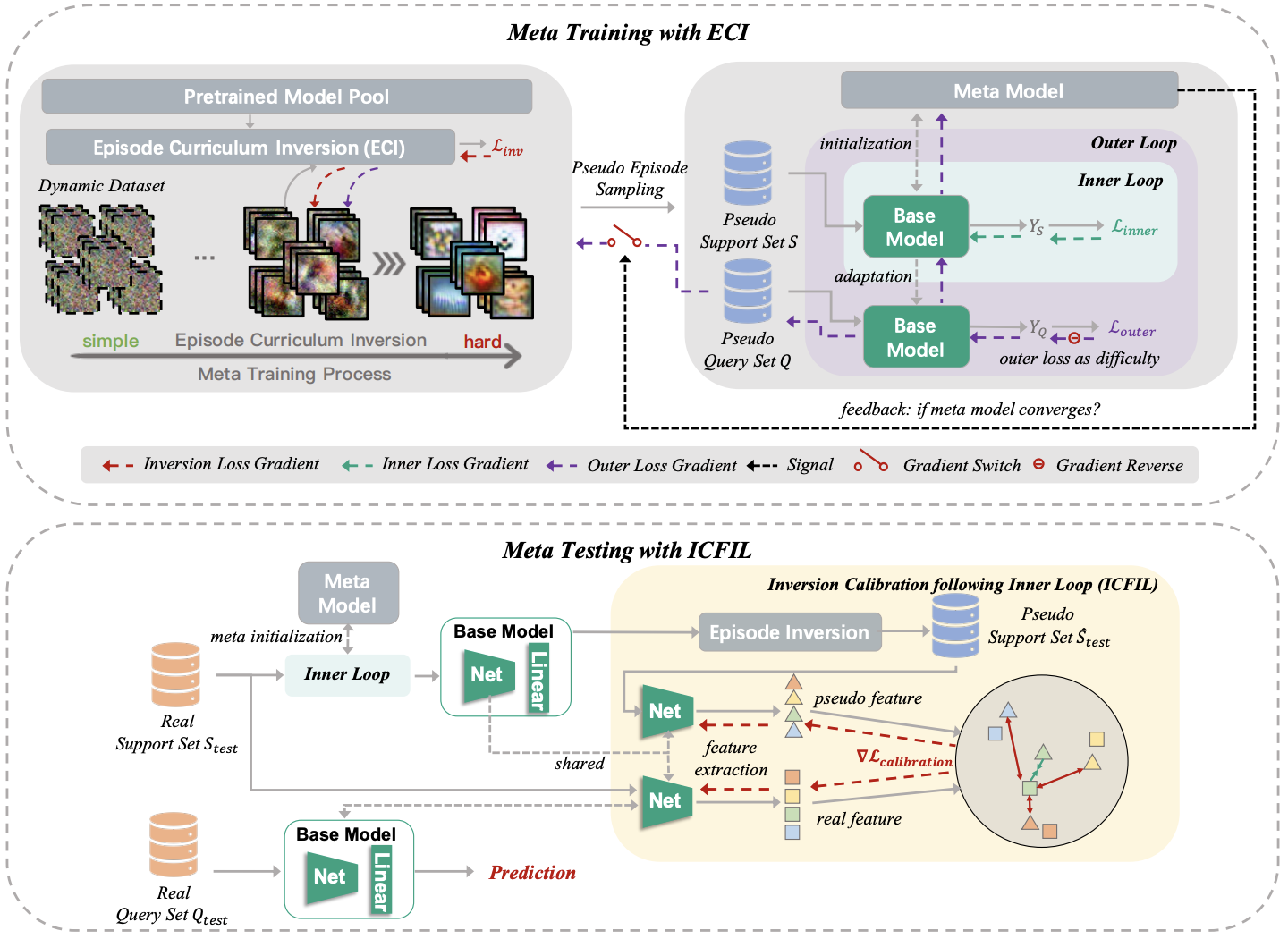

PURER-Plus can:

- work well with ProtoNet (metric-based) and ANIL/MAML (optimization-based);

- work well with a larger number of pre-trained models with overlapping label spaces.

For our paper accepted at CVPR 2023, please refer to [camera-ready], [arxiv], [poster], [slide], [video].

Requirements:

- python == 3.8.15
- torch == 1.13.1
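A quick way to confirm that your environment matches the pinned versions is sketched below. `check_environment` is a hypothetical helper, not part of this repository. It compares the running interpreter and the installed `torch` distribution against the versions listed above, ignoring a local build suffix (the part after `+`).

```python
import sys
from importlib.metadata import PackageNotFoundError, version

# Hypothetical helper: compares the environment with the pinned versions above.
REQUIRED = {"python": "3.8.15", "torch": "1.13.1"}


def check_environment():
    """Return {name: (found_version, matches_pin)} for Python and torch."""
    found_python = ".".join(str(part) for part in sys.version_info[:3])
    report = {"python": (found_python, found_python == REQUIRED["python"])}
    try:
        found_torch = version("torch")
    except PackageNotFoundError:
        found_torch = None  # torch is not installed
    # Drop a local build suffix such as "+cu117" before comparing.
    matches = found_torch is not None and found_torch.split("+")[0] == REQUIRED["torch"]
    report["torch"] = (found_torch, matches)
    return report


if __name__ == "__main__":
    for name, (found, ok) in check_environment().items():
        status = "OK" if ok else "MISMATCH"
        print(f"{name}: found {found}, expected {REQUIRED[name]} ({status})")
```

A mismatch is not necessarily fatal, but the versions above are the ones the code was tested with.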

Datasets:

- CIFAR-FS:
  - Please manually download the CIFAR-FS dataset from here to obtain "cifar100.zip".
  - Unzip "cifar100.zip". The directory structure is as follows:
    - `cifar100`
      - `mete_train`
        - `apple` (label directory)
          - `***.png` (image files)
        - ...
      - `mete_val`
        - ...
      - `mete_test`
        - ...
  - Place it in "./DFL2Ldata/".
- Mini-ImageNet: Please manually download it here. Unzip it and then place it in "./DFL2Ldata/".
- CUB: Please manually download it here. Unzip it and then place it in "./DFL2Ldata/".
- Flower: Please manually download it here. Unzip it and then place it in "./DFL2Ldata/".
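Before preparing the dataset files, it can help to check that the datasets landed where the scripts expect them. The sketch below uses hypothetical helpers (`missing_paths`, `count_classes`) that are not part of the repository. It assumes each dataset sits directly under "./DFL2Ldata/" with the directory names `cifar100`, `Miniimagenet`, `CUB_200_2011`, and `flower`, which are inferred from the split paths used when writing the file lists. It also assumes CIFAR-FS contains the `mete_train`, `mete_val`, and `mete_test` splits shown in the tree above. Adjust the names if your copies differ.

```python
from pathlib import Path

# Hypothetical layout check. Directory names come from this README (the CIFAR-FS
# tree and the split paths of the file lists); adjust them if your copies differ.
DATA_ROOT = Path("./DFL2Ldata")
DATASET_DIRS = ["cifar100", "Miniimagenet", "CUB_200_2011", "flower"]
CIFAR_SPLITS = ["mete_train", "mete_val", "mete_test"]


def missing_paths(root=DATA_ROOT):
    """Return the expected dataset directories that do not exist under root."""
    root = Path(root)
    expected = [root / name for name in DATASET_DIRS]
    expected += [root / "cifar100" / split for split in CIFAR_SPLITS]
    return [str(path) for path in expected if not path.is_dir()]


def count_classes(split_dir):
    """Count label directories (one per class) inside a split such as mete_train."""
    return sum(1 for path in Path(split_dir).iterdir() if path.is_dir())


if __name__ == "__main__":
    missing = missing_paths()
    print("All expected directories found." if not missing else f"Missing: {missing}")
```

Counting label directories per split is a cheap sanity check that an archive was extracted completely.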

Pre-trained models:

- You can pre-train the models by following the instructions below (Step 3).

1. Make sure that the current working directory is the repository root "./PURER-Plus".

2. Prepare the dataset files.

   - For CIFAR-FS, run `python ./write_cifar100_filelist.py`. After running, you will obtain "meta_train.csv", "meta_val.csv", and "meta_test.csv" under "./DFL2Ldata/cifar100/split/".
   - For MiniImageNet, run `python ./write_miniimagenet_filelist.py`. After running, you will obtain "meta_train.csv", "meta_val.csv", and "meta_test.csv" under "./DFL2Ldata/Miniimagenet/split/".
   - For CUB, run `python ./write_CUB_filelist.py`. After running, you will obtain "meta_train.csv", "meta_val.csv", and "meta_test.csv" under "./DFL2Ldata/CUB_200_2011/split/".
   - For Flower, run `python ./write_flower_filelist.py`. After running, you will obtain "meta_train.csv", "meta_val.csv", and "meta_test.csv" under "./DFL2Ldata/flower/split/".

3. Prepare the pre-trained models by running `bash ./scripts/pretrain.sh`.

   Some options you may change:

   | Option | Help |
   | --- | --- |
   | `--dataset` | cifar100/miniimagenet/cub/flower |
   | `--pre_backbone` | conv4/resnet10/resnet18 |

4. Run meta training with `bash ./scripts/metatrain.sh`.

   Some options you may change:

   | Option | Help |
   | --- | --- |
   | `--dataset` & `--testdataset` | cifar100/miniimagenet/cub/flower |
   | `--num_sup_train` | 1 for 1-shot, 5 for 5-shot |
   | `--backbone` | conv4, the architecture of the meta model |
   | `--Glr` | learning rate of pseudo data generation |
   | `--teacherMethod` | protonet/anil/maml |
   | `--APInum` | number of pre-trained models |
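To make the meta-training options concrete, here is a hypothetical `argparse` sketch that mirrors them. Only the flag names and value lists come from the options listed for `metatrain.sh`. `build_parser` is not the repository's parser, and the sketch makes three assumptions. It restricts `--num_sup_train` to 1 or 5. It assumes `protonet` is the lowercase spelling used for ProtoNet. It sets no defaults, because the actual defaults are not documented here.

```python
import argparse

DATASETS = ["cifar100", "miniimagenet", "cub", "flower"]


def build_parser():
    """Hypothetical parser mirroring the documented meta-training options.

    Flag names and value lists follow the README; no defaults are set
    because the repository's actual defaults are not documented there.
    """
    parser = argparse.ArgumentParser(description="Sketch of the metatrain.sh options")
    parser.add_argument("--dataset", choices=DATASETS)
    parser.add_argument("--testdataset", choices=DATASETS)
    # Assumption: only the two settings named in the README are accepted.
    parser.add_argument("--num_sup_train", type=int, choices=[1, 5],
                        help="1 for 1-shot, 5 for 5-shot")
    parser.add_argument("--backbone", help="architecture of the meta model, e.g. conv4")
    parser.add_argument("--Glr", type=float, help="learning rate of pseudo data generation")
    # Assumption: 'protonet' is the lowercase spelling used for ProtoNet.
    parser.add_argument("--teacherMethod", choices=["protonet", "anil", "maml"])
    parser.add_argument("--APInum", type=int, help="number of pre-trained models")
    return parser


if __name__ == "__main__":
    example = build_parser().parse_args(
        ["--dataset", "cifar100", "--testdataset", "cifar100",
         "--num_sup_train", "1", "--backbone", "conv4", "--teacherMethod", "maml"])
    print(vars(example))
```

Using `choices` means an unsupported setting, such as `--num_sup_train 3`, makes argparse exit with an error before any training starts rather than failing later.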

If you find this code useful for your research, please consider citing our paper.

    @inproceedings{hu2023architecture,
      title={Architecture, Dataset and Model-Scale Agnostic Data-free Meta-Learning},
      author={Hu, Zixuan and Shen, Li and Wang, Zhenyi and Liu, Tongliang and Yuan, Chun and Tao, Dacheng},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      pages={7736--7745},
      year={2023}
    }

Some of the code is inspired by DeepInversion and CloserLookFewShot.

- Zixuan Hu: huzixuan21@mails.tsinghua.edu.cn