Simple GitHub Action to scrape the GitHub Advanced Security API and shove it into a CSV.

Note: You need to set and store a PAT because the built-in GITHUB_TOKEN doesn't have the appropriate permissions for this Action to get all of the alerts.

GitHub Advanced Security can compile a ton of information on the vulnerabilities in your project's code, supply chain, and any secrets (like API keys or other sensitive info) that might have been accidentally exposed. That information is surfaced in the repository, organization, or enterprise security overview and the API. The overview has all sorts of neat filters and such you can play with. The API is great and powers all manner of partner integrations, but there's no direct CSV export.
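To make the "no direct CSV export" gap concrete, here's a minimal sketch of turning alert objects (already decoded from the API's JSON) into CSV text. The column list in `FIELDS` and the function name `alerts_to_csv` are assumptions for illustration, not necessarily what this Action writes.

```python
import csv
import io

# Hypothetical column selection; the Action's real CSV columns may differ.
FIELDS = ["number", "created_at", "state", "html_url"]


def alerts_to_csv(alerts):
    """Serialize a list of alert dicts to CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=FIELDS, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    for alert in alerts:
        # Missing keys become empty cells instead of raising KeyError.
        writer.writerow({field: alert.get(field, "") for field in FIELDS})
    return buffer.getvalue()
```

Keys that aren't listed in `FIELDS` are dropped, so nested parts of the API response never reach the CSV unless you flatten them first.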

The API changes a bit based on the version of GitHub you're using. This Action gathers the GitHub API endpoint to use from the runner's environment variables, so as long as you have a license for Advanced Security, this should work as expected in GitHub Enterprise Server and GitHub AE too.
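As a rough sketch of that idea: the Actions runner sets `GITHUB_API_URL`, so the API root can be read from the environment instead of being hard-coded. The helper names below are made up for this example and aren't necessarily how the Action is structured.

```python
import os


def api_base_url(environ=None):
    """Return the REST API root for the current runner, without a trailing slash."""
    environ = os.environ if environ is None else environ
    # GITHUB_API_URL is set by the Actions runner; the fallback is only
    # an assumption for running this sketch outside of a workflow.
    return environ.get("GITHUB_API_URL", "https://api.github.com").rstrip("/")


def code_scanning_alerts_url(repo, environ=None):
    """Build the repository-level code scanning alerts URL for an OWNER/REPO string."""
    return f"{api_base_url(environ)}/repos/{repo}/code-scanning/alerts"
```

Passing `environ` explicitly keeps the functions easy to try out locally without touching real environment variables.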

Because I really want to see this data as a time-series to understand it, and Flat Data doesn't support paginated APIs (yet?) ... so ... it's really an experiment and I wanted to play around with the shiny new toy.
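For context on the pagination part, here's a small sketch of following a paginated API by reading the `Link` response header until there's no `rel="next"` entry left. `get_page` is a hypothetical callable standing in for whatever HTTP client you use; it isn't part of this project.

```python
import re


def next_page_url(link_header):
    """Return the rel="next" URL from a Link header, or None on the last page."""
    if not link_header:
        return None
    # Naive split on commas; fine as long as the URLs themselves contain none.
    for part in link_header.split(","):
        match = re.match(r'\s*<([^>]+)>\s*;\s*rel="([^"]+)"', part)
        if match and match.group(2) == "next":
            return match.group(1)
    return None


def fetch_all(first_url, get_page):
    """Collect items from every page.

    `get_page(url)` is a hypothetical callable returning (items, link_header).
    """
    results, url = [], first_url
    while url:
        items, link_header = get_page(url)
        results.extend(items)
        url = next_page_url(link_header)
    return results
```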

Also ... CSV files are the dead-simple ingest point for a ton of other software services you might want, or have, to use in business. And some people just like CSV files and want to do things in spreadsheets, and I'm not here to judge that. Shine on, you spreadsheet gurus! ✨

This got a little more complicated than I'd like, but the tl;dr of what I'm trying to figure out is below:

    graph TD
      A(GitHub API) -->|this Action| B(fa:fa-file-csv CSV files)
      B -->|actions/upload-artifact| C(fa:fa-github GitHub)
      C -->|download| D(fa:fa-file-csv CSV files)
      C -->|flat-data| E(fa:fa-chart-line data awesomeness)

Obviously if you're only wanting the CSV file, run this thing, then download the artifact. It's a zip file with the CSV file(s). You're ready to rock and roll. :)
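If you'd rather poke at the downloaded artifact from a script than unzip it by hand, something like this sketch reads every CSV inside the zip into a list of rows. The function name is an assumption, and nothing here depends on which columns the CSV files contain.

```python
import csv
import io
import zipfile


def read_csvs_from_artifact(zip_source):
    """Map each CSV name in the artifact zip to its rows as a list of dicts.

    `zip_source` can be a path to the downloaded zip or a binary file object.
    """
    tables = {}
    with zipfile.ZipFile(zip_source) as archive:
        for name in archive.namelist():
            if not name.endswith(".csv"):
                continue
            with archive.open(name) as raw:
                # Decode the compressed bytes as text so csv can parse them.
                text = io.TextIOWrapper(raw, encoding="utf-8", newline="")
                tables[name] = list(csv.DictReader(text))
    return tables
```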

An example of use is below. Note that the custom inputs, for example when you want data on a different repo and need additional scopes for that, are set as environment variables:

    - name: CSV export
      uses: some-natalie/ghas-to-csv@v2
      env:
        GITHUB_PAT: ${{ secrets.PAT }} # you need to set a PAT
    - name: Upload CSV
      uses: actions/upload-artifact@v3
      with:
        name: ghas-data
        path: ${{ github.workspace }}/*.csv
        if-no-files-found: error

To run this targeting an organization, here's an example:

    - name: CSV export
      uses: some-natalie/ghas-to-csv@v2
      env:
        GITHUB_PAT: ${{ secrets.PAT }}
        GITHUB_REPORT_SCOPE: "organization"
        SCOPE_NAME: "org-name-goes-here"

Or for an enterprise:

    - name: CSV export
      uses: some-natalie/ghas-to-csv@v2
      env:
        GITHUB_PAT: ${{ secrets.PAT }}
        GITHUB_REPORT_SCOPE: "enterprise"
        SCOPE_NAME: "enterprise-slug-goes-here"

| | GitHub Enterprise Cloud | GitHub Enterprise Server (3.5+) | GitHub AE (M2) | Notes |
|---|---|---|---|---|
| Secret scanning | ✅ Repo ✅ Org ✅ Enterprise | ✅ Repo ✅ Org ✅ Enterprise | ✅ Repo ❌ Org ❌ Enterprise | API docs |
| Code scanning | ✅ Repo ✅ Org ✅ Enterprise | ✅ Repo ✅ Org ➰ Enterprise | ✅ Repo ❌ Org ➰ Enterprise | API docs |
| Dependabot | ✅ Repo ✅ Org ✅ Enterprise | ❌ | ❌ | API docs |

ℹ️ All of this reporting requires either public repositories or a GitHub Advanced Security license.

ℹ️ Any item with a ➰ needs some looping logic, since the API supports repositories there but not higher-level ownership (like orgs or enterprises). How this looks won't differ much between GHAE and GHES. In both cases, you'll need an enterprise admin PAT to access the all_organizations.csv or all_repositories.csv report from stafftools/reports, then loop over it in the appropriate scope. That will tell you about the existence of everything, but not give you permission to access it. To do that, you'll need to use ghe-org-admin-promote in GHES (link) to own all organizations within the server.
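The looping logic for ➰ items could look roughly like the sketch below: read names out of one of the stafftools reports, then call a per-name fetcher and merge the results. I'm not asserting what the report's columns are called, so the column name is a parameter, and `fetch_alerts` is a hypothetical callable you'd supply yourself.

```python
import csv
import io


def names_from_report(report_text, column):
    """Yield non-empty values of `column` from a stafftools CSV report.

    `column` is whatever header the report uses for the org or repo name;
    check the actual file, since this sketch does not assume one.
    """
    for row in csv.DictReader(io.StringIO(report_text)):
        value = (row.get(column) or "").strip()
        if value:
            yield value


def collect_alerts(report_text, column, fetch_alerts):
    """Call `fetch_alerts(name)` (hypothetical) for each name and tag results with their owner."""
    combined = []
    for name in names_from_report(report_text, column):
        for alert in fetch_alerts(name):
            combined.append({"owner": name, **alert})
    return combined
```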

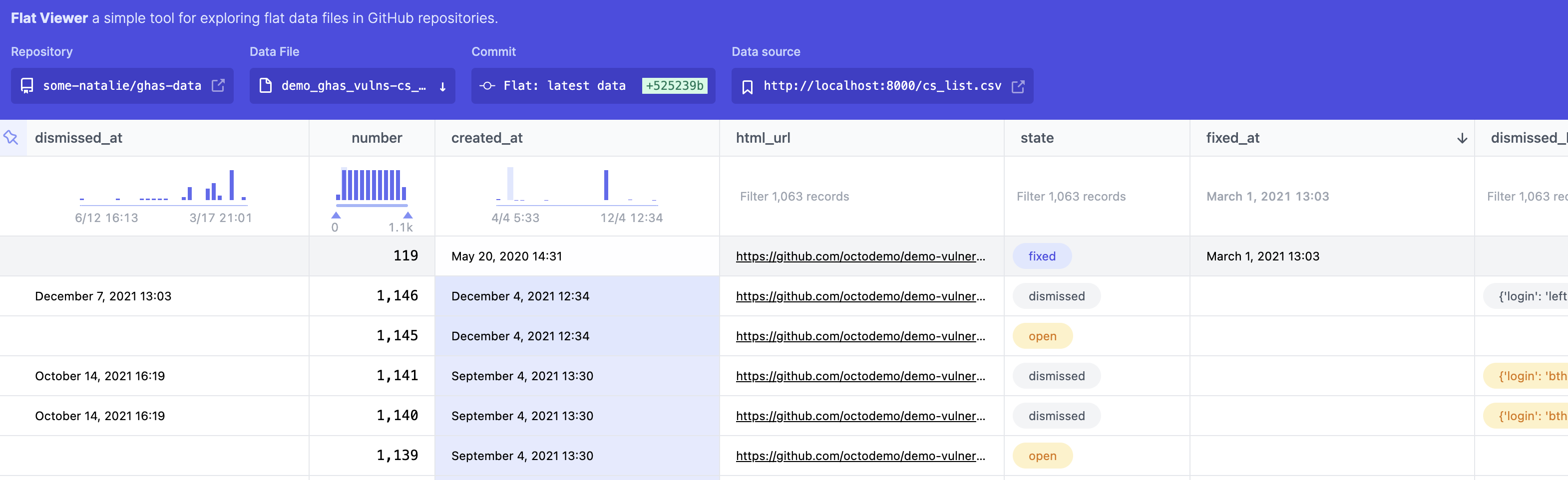

Why? Because look at this beautiful viewer. It's so nice to have a working time-series data set without a ton of drama.

This gets a little tricky because Flat doesn't support scraping paginated APIs or importing from a local file, so here's an example workflow that loads the data through a GitHub Actions runner.

    name: Gather data for Flat Data

    on:
      schedule:
        - cron: '30 22 * * 1' # Weekly at 22:30 UTC on Mondays

    jobs:
      data_gathering:
        runs-on: ubuntu-latest
        steps:
          - name: CSV export
            uses: some-natalie/ghas-to-csv@v2
            env:
              GITHUB_PAT: ${{ secrets.PAT }} # needed if not running against the current repository
              SCOPE_NAME: "OWNER-NAME/REPO-NAME" # repository name, needed only if not running against the current repository
          - name: Upload CSV
            uses: actions/upload-artifact@v3
            with:
              name: ghas-data
              path: ${{ github.workspace }}/*.csv
              if-no-files-found: error

      flat_data:
        runs-on: ubuntu-latest
        needs: [data_gathering]
        steps:
          - name: Check out repo
            uses: actions/checkout@v3
          - name: Download CSVs
            uses: actions/download-artifact@v3
            with:
              name: ghas-data
          - name: Tiny http server moment # Flat can only use HTTP or SQL, so ... yeah.
            run: |
              docker run -d -p 8000:80 --read-only -v $(pwd)/nginx-cache:/var/cache/nginx -v $(pwd)/nginx-pid:/var/run -v $(pwd):/usr/share/nginx/html:ro nginx
              sleep 10
          - name: Flat the code scanning alerts
            uses: githubocto/flat@v3
            with:
              http_url: http://localhost:8000/cs_list.csv
              downloaded_filename: cs_list.csv
          - name: Flat the secret scanning alerts
            uses: githubocto/flat@v3
            with:
              http_url: http://localhost:8000/secrets_list.csv
              downloaded_filename: secrets_list.csv

ℹ️ You may want to append what's below to the repository's .gitignore file to ignore the pid directory created by nginx.

    nginx-pid/

The API docs are here and pull requests are welcome! ❤️

GitHub Copilot wrote most of the Python code in this project. I mostly just structured the files/functions, wrote some docstrings, accounted for the differences in API versions across the products, and edited what it gave me. ❤️