- Introduction and environment

- Why we need LayerNorm

- What is LayerNorm in GRU

- How does it improve our model

- References

- Implements a layer-normalized GRU in PyTorch, following the paper "Layer normalization".

- Code modified from this repository.

- Our research has applied this technique to predicting kinematic variables from an invasive brain-computer interface (BCI) dataset, "Nonhuman Primate Reaching with Multichannel Sensorimotor Cortex Electrophysiology". For more information about this dataset, please see the article "Superior arm-movement decoding from cortex with a new, unsupervised-learning algorithm".

- Environment: official PyTorch Docker image from Docker Hub, `pytorch/pytorch:1.4-cuda10.1-cudnn7-runtime`
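A minimal sketch of what a layer-normalized GRU cell can look like, assuming PyTorch 1.4 or later. The class name `LayerNormGRUCell`, the per-gate placement of LayerNorm (input and recurrent pre-activations normalized separately) and the bias-free projections are illustrative assumptions, not necessarily this repository's exact implementation:

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


class LayerNormGRUCell(nn.Module):
    """Single-step GRU cell with LayerNorm on each gate's input and recurrent
    pre-activations (hypothetical sketch, not this repository's exact code)."""

    def __init__(self, input_size: int, hidden_size: int, eps: float = 1e-5):
        super().__init__()
        self.hidden_size = hidden_size
        self.eps = eps
        # Bias-free projections for the three gates (reset, update, candidate);
        # the LayerNorm shift parameters below act as per-unit biases.
        self.x2h = nn.Linear(input_size, 3 * hidden_size, bias=False)
        self.h2h = nn.Linear(hidden_size, 3 * hidden_size, bias=False)
        # Separate gain (init 1) and shift (init 0) per gate, for both sides.
        self.gain_x = nn.Parameter(torch.ones(3, hidden_size))
        self.bias_x = nn.Parameter(torch.zeros(3, hidden_size))
        self.gain_h = nn.Parameter(torch.ones(3, hidden_size))
        self.bias_h = nn.Parameter(torch.zeros(3, hidden_size))

    def _ln(self, a, gain, bias):
        # (batch, 3*H) -> (batch, 3, H), then normalize each gate over its H units.
        a = a.view(-1, 3, self.hidden_size)
        a = F.layer_norm(a, (self.hidden_size,), eps=self.eps)
        return a * gain + bias

    def forward(self, x: torch.Tensor, h: torch.Tensor) -> torch.Tensor:
        gx = self._ln(self.x2h(x), self.gain_x, self.bias_x)
        gh = self._ln(self.h2h(h), self.gain_h, self.bias_h)
        r = torch.sigmoid(gx[:, 0] + gh[:, 0])   # reset gate
        z = torch.sigmoid(gx[:, 1] + gh[:, 1])   # update gate
        n = torch.tanh(gx[:, 2] + r * gh[:, 2])  # candidate hidden state
        return (1 - z) * n + z * h               # new hidden state


# Usage: run the cell over a short random sequence.
torch.manual_seed(0)
cell = LayerNormGRUCell(input_size=4, hidden_size=8)
x = torch.randn(5, 2, 4)  # (seq_len, batch, input_size)
h = torch.zeros(2, 8)     # initial hidden state
for x_t in x:
    h = cell(x_t, h)
print(h.shape)            # torch.Size([2, 8])
```

Keeping the projections bias-free is a design choice of this sketch: each LayerNorm already carries a learnable per-unit shift, so a separate linear bias adds little.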

Activation functions such as tanh and sigmoid have saturation regions, as their first derivatives show.

*Figure: sigmoid and hyperbolic tangent (tanh) (left) and their first derivatives (right).*

For inputs outside (-4, +4), these derivatives are very close to zero, so gradients passing through the activations can vanish, causing the vanishing-gradient problem.
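To make the saturation concrete, the first derivatives can be evaluated at a few points. This sketch uses only the Python standard library; the sample points are arbitrary choices for illustration:

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def d_sigmoid(x: float) -> float:
    # sigma'(x) = sigma(x) * (1 - sigma(x)); its maximum is 0.25 at x = 0
    s = sigmoid(x)
    return s * (1.0 - s)


def d_tanh(x: float) -> float:
    # tanh'(x) = 1 - tanh(x)^2; its maximum is 1.0 at x = 0
    return 1.0 - math.tanh(x) ** 2


for x in (0.0, 2.0, 4.0, 8.0):
    print(f"x={x:4.1f}  sigmoid'={d_sigmoid(x):.5f}  tanh'={d_tanh(x):.5f}")
```

At x = 4 the sigmoid derivative is already below 0.02 and the tanh derivative below 0.002, compared with their maxima of 0.25 and 1 at x = 0. Backpropagation through time multiplies such factors across steps, which is how the gradient vanishes.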

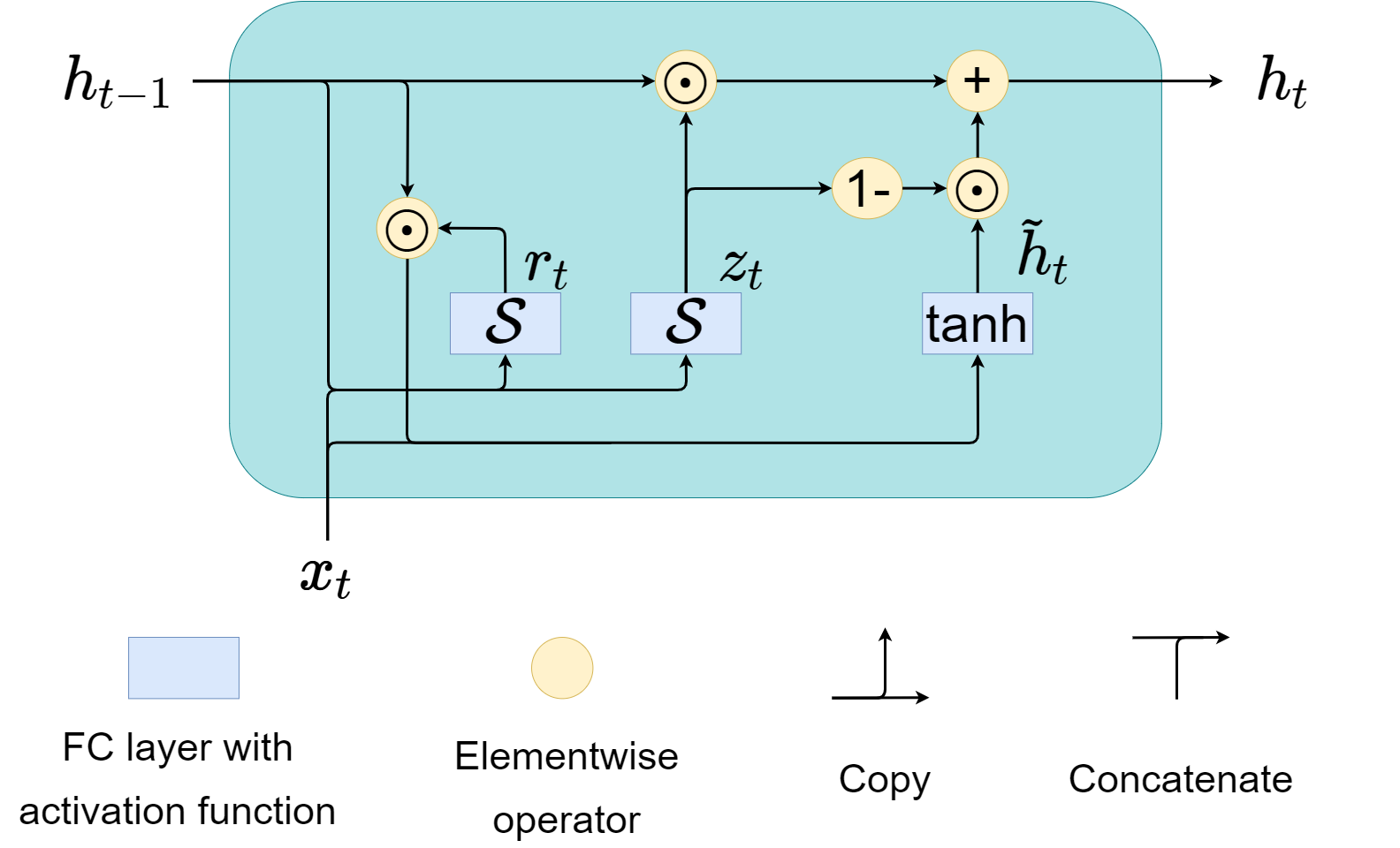

The structure of a GRU cell contains two sigmoid gates (reset and update) and one tanh (for the candidate hidden state). The following shows the mathematical equations for the original GRU and the LayerNorm GRU.

*Figure: equations of the original GRU (left) and the LayerNorm GRU (right).*
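For reference, the standard GRU (written in the convention of PyTorch's `nn.GRU`) and one common way to add LayerNorm to it are sketched below. The LayerNorm variant, which normalizes the input and recurrent pre-activations of each gate separately and lets each LN's learnable shift replace the additive biases, is an assumption for illustration; the exact placement in this repository may differ. Every $\mathrm{LN}$ has its own gain $g$ and bias $b$.

```latex
% Original GRU (PyTorch nn.GRU convention)
\begin{aligned}
r_t &= \sigma\left(W_{ir} x_t + b_{ir} + W_{hr} h_{t-1} + b_{hr}\right) \\
z_t &= \sigma\left(W_{iz} x_t + b_{iz} + W_{hz} h_{t-1} + b_{hz}\right) \\
n_t &= \tanh\left(W_{in} x_t + b_{in} + r_t \odot \left(W_{hn} h_{t-1} + b_{hn}\right)\right) \\
h_t &= (1 - z_t) \odot n_t + z_t \odot h_{t-1}
\end{aligned}

% LayerNorm GRU (sketch): LN shifts replace the additive biases
\begin{aligned}
r_t &= \sigma\left(\mathrm{LN}(W_{ir} x_t) + \mathrm{LN}(W_{hr} h_{t-1})\right) \\
z_t &= \sigma\left(\mathrm{LN}(W_{iz} x_t) + \mathrm{LN}(W_{hz} h_{t-1})\right) \\
n_t &= \tanh\left(\mathrm{LN}(W_{in} x_t) + r_t \odot \mathrm{LN}(W_{hn} h_{t-1})\right) \\
h_t &= (1 - z_t) \odot n_t + z_t \odot h_{t-1}
\end{aligned}

% Layer normalization of a pre-activation vector a with H units
\mathrm{LN}(a) = g \odot \frac{a - \mu}{\sqrt{\sigma^2 + \epsilon}} + b, \quad
\mu = \frac{1}{H} \sum_{i=1}^{H} a_i, \quad
\sigma^2 = \frac{1}{H} \sum_{i=1}^{H} (a_i - \mu)^2
```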

For more insight, we simulate two extreme distributions of data and compare them before and after LayerNorm.

*Figure: the two simulated distributions before LayerNorm (left) and after LayerNorm (right).*

After LayerNorm, the new distributions lie inside (-4, +4), the working range in which the activation functions are not saturated.
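The effect can be reproduced numerically. In this standard-library sketch, the two "extreme" distributions (a narrow one shifted to mean 50, and a wide one with standard deviation 20) and the hidden size of 256 are illustrative assumptions, not the data behind the figure; the gain and bias are fixed at 1 and 0:

```python
import random
import statistics


def layer_norm(a, gain=1.0, bias=0.0, eps=1e-5):
    """Normalize one vector of pre-activations to zero mean, unit variance."""
    mu = statistics.mean(a)
    var = statistics.mean([(v - mu) ** 2 for v in a])  # biased variance, as in LayerNorm
    return [gain * (v - mu) / (var + eps) ** 0.5 + bias for v in a]


random.seed(0)
H = 256  # hypothetical hidden size
shifted = [random.gauss(50.0, 1.0) for _ in range(H)]  # deep in the saturated region
spread = [random.gauss(0.0, 20.0) for _ in range(H)]   # mostly outside (-4, +4)

for name, a in (("shifted", shifted), ("spread", spread)):
    out = layer_norm(a)
    inside = sum(-4 < v < 4 for v in out) / H
    print(f"{name:8s} before: min={min(a):7.2f} max={max(a):7.2f} | "
          f"after: mean={statistics.mean(out):+.3f} "
          f"std={statistics.pstdev(out):.3f} inside(-4,4)={inside:.0%}")
```

Because each vector is rescaled by its own mean and standard deviation, the output does not depend on how far the input was shifted or stretched. The learnable gain and bias then allow the network to move away from this range if training favors it.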

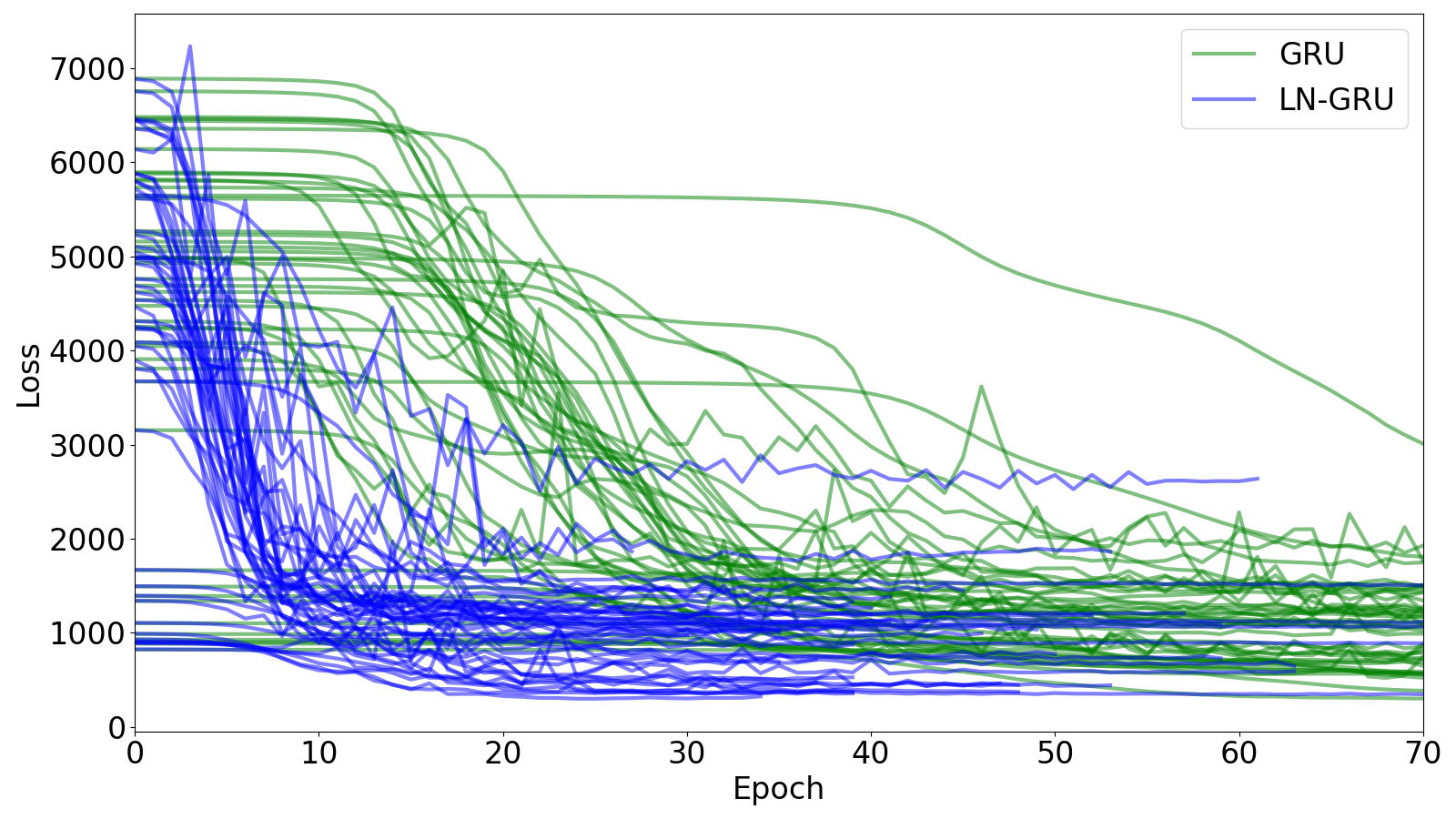

The result from one of my GRU models on the BCI dataset.

Ba, Jimmy Lei, Jamie Ryan Kiros, and Geoffrey E. Hinton. "Layer normalization." arXiv preprint arXiv:1607.06450 (2016).