Introduction

An IDC study states that 40% of enterprises in 2019 will be working to include AI/ML as part of their transformative strategy. Today, AI/ML is beyond the hype cycle, and there are use cases that provide real business value. Customers starting their AI/ML journey understand that AI/ML is hard and are looking to partner with cloud providers for support. They are not choosing a partner based on very specific capabilities or solutions: they understand that AI/ML projects are exploratory, require multiple iterations to get right, and call for broad capabilities from partners. More than 10,000 customers use AWS today for AI/ML services because they understand that AWS provides the deepest and broadest set of services for AI/ML workloads.

In today's workshop, we discuss the capabilities of Amazon SageMaker, a machine learning platform for developers and data scientists. We will define a problem statement and solve it by applying machine learning with Amazon SageMaker. Amazon SageMaker takes on the undifferentiated heavy lifting involved in the machine learning process and lets developers and data scientists focus on solving the business problem by following a build, train, and deploy pattern.

Let's work on a healthcare fraud identification use case and apply machine learning to identify anomalous claims that require further investigation. You may find the concepts used in the workshop a bit mathematical. However, I would ask you to develop an intuition for the potential applications of the techniques rather than focusing on the maths involved. The technique used in the workshop is broadly applicable to many outlier detection problems on multivariate data.

Learning Objectives

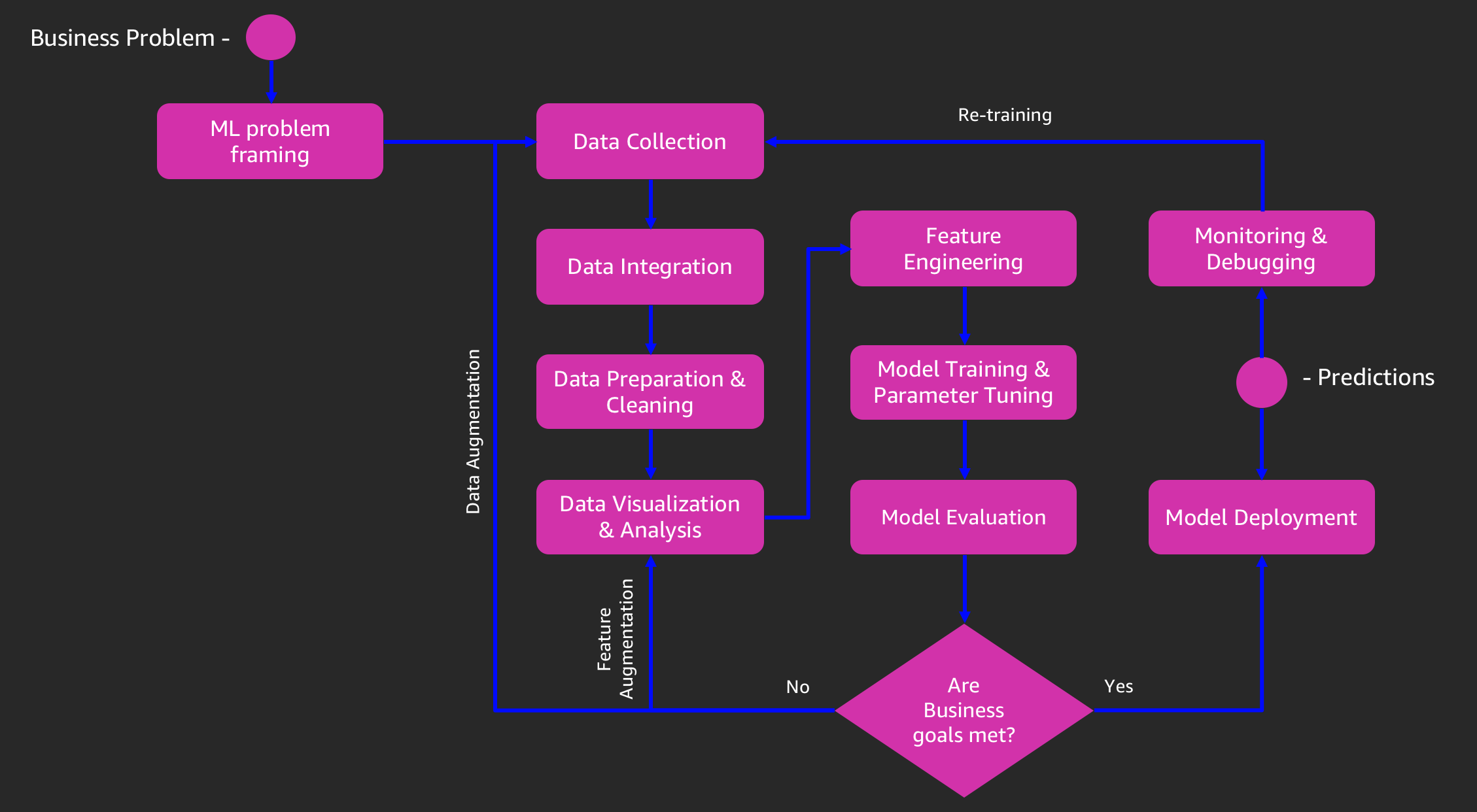

- Develop an intuition for the steps involved in the machine learning process.

- Understand and implement end-to-end machine learning on Amazon SageMaker to build, train, and deploy a model.

- Clone a public Git repository automatically into an Amazon SageMaker notebook instance at launch.

- Perform feature engineering on categorical data using word embeddings with the Continuous Bag of Words (CBOW) technique.

- Train and use the PCA algorithm for feature extraction.

- Understand how to calculate an anomaly score from the principal components of a PCA model.

- Perform visualization to understand anomalous claims.
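To build some intuition for the PCA-based anomaly score before opening the notebook, here is a minimal sketch using plain NumPy. It is not the notebook's code and does not use the SageMaker PCA algorithm. It assumes one common formulation of the score: the sum of squared projections onto the top principal components, each divided by that component's variance. The function name `pca_anomaly_scores` and its parameters are hypothetical, and the formula used in the workshop notebook may differ.

```python
import numpy as np


def pca_anomaly_scores(X, n_components):
    """Return one anomaly score per row of X (hypothetical helper).

    Assumed score: sum over the top `n_components` principal components
    of (projection ** 2 / variance of that component).
    """
    X = np.asarray(X, dtype=float)
    centered = X - X.mean(axis=0)

    # SVD of the centered data: rows of `vt` are the principal directions,
    # ordered from largest to smallest singular value.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    components = vt[:n_components]

    # Variance captured by each retained component.
    variances = singular_values[:n_components] ** 2 / (X.shape[0] - 1)
    variances = np.maximum(variances, 1e-12)  # guard against division by zero

    # Project every row onto the retained components and normalize.
    projected = centered @ components.T
    return np.sum(projected ** 2 / variances, axis=1)
```

Rows with the largest scores lie farthest from the bulk of the data along the directions of greatest variance, which is why they are candidates for further investigation. In practice you would pick a threshold (for example, a high percentile of the scores) to flag claims.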

Machine Learning process

Lab 0 - Launch an Amazon SageMaker Jupyter Notebook

Prerequisites and assumptions

- To complete this lab, you need a personal laptop and an AWS account that provides access to AWS services.

Steps

- Sign In to the AWS Console

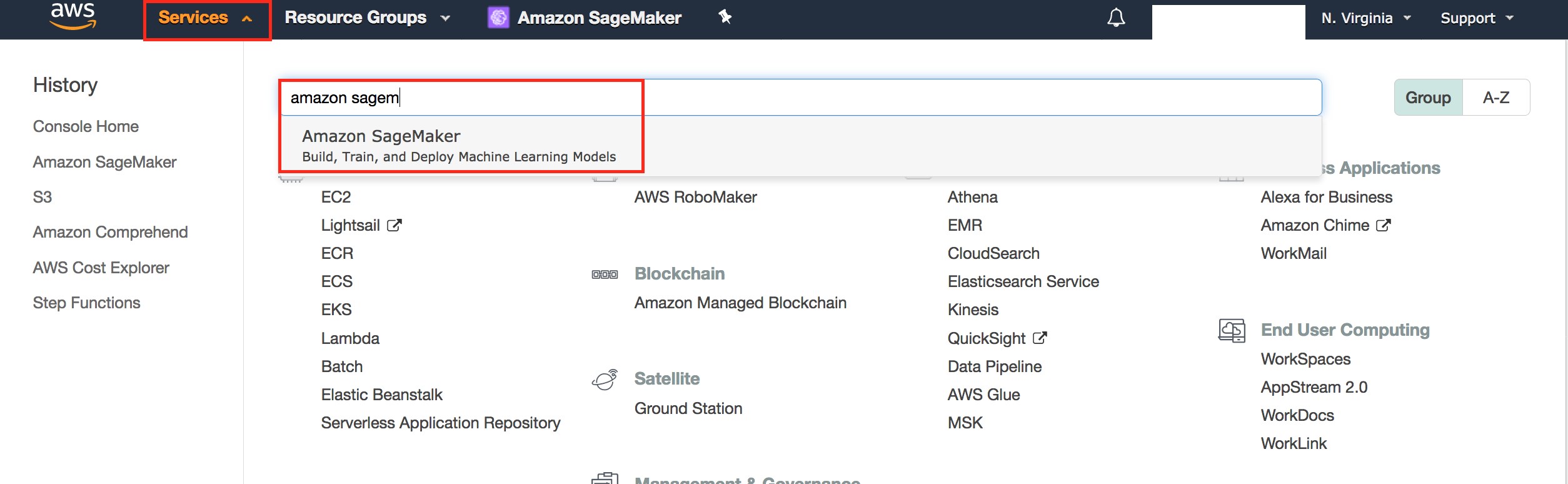

- Click Services, search for Amazon SageMaker, and click Amazon SageMaker in the dropdown.

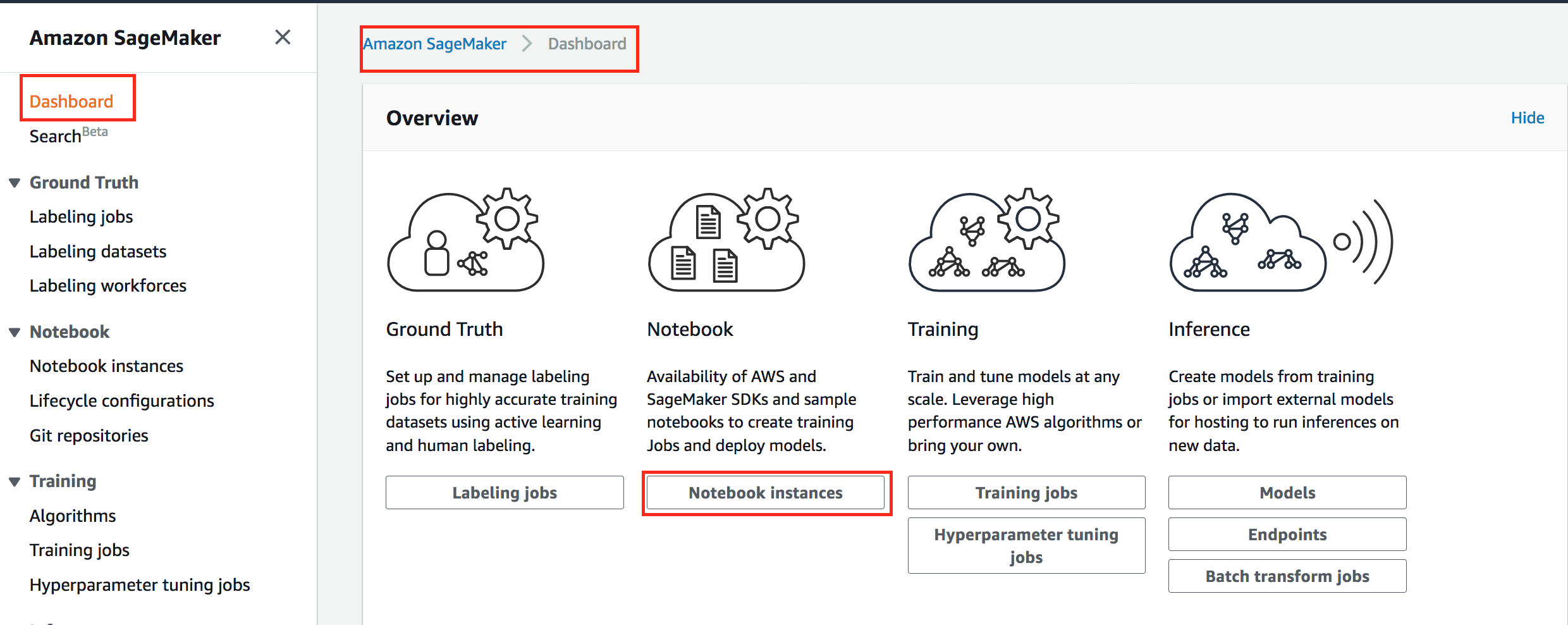

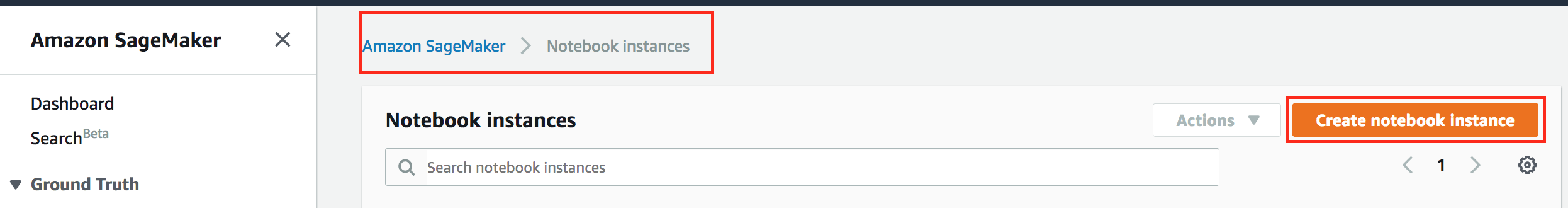

- After you land on the Amazon SageMaker console, click Notebook Instances.

- Click Create Notebook

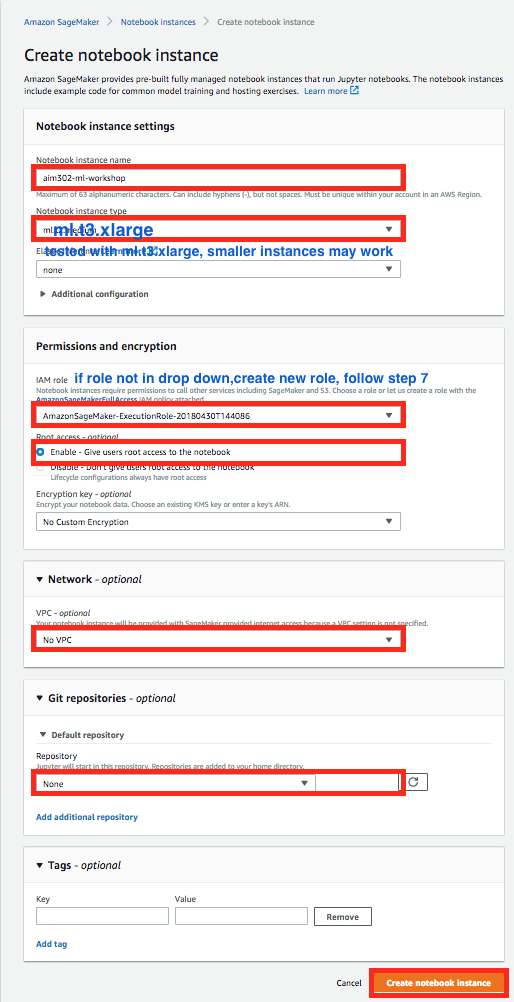

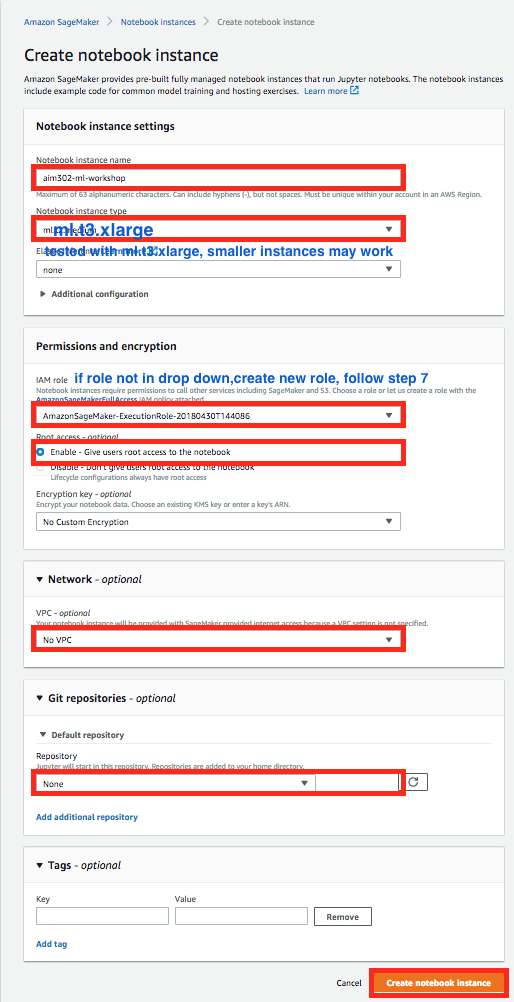

- Give the notebook a name you can remember and fill out the configuration details as suggested in the screenshots below.

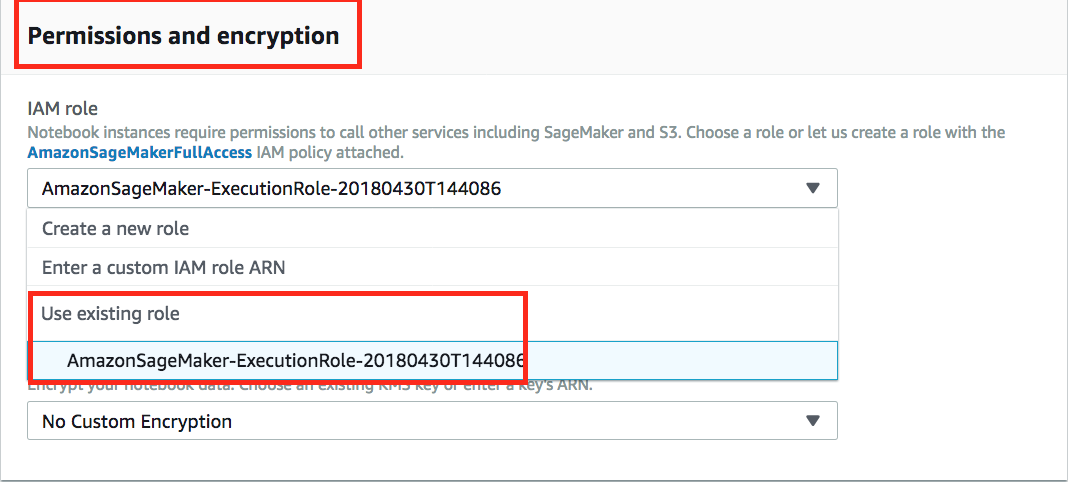

- Select IAM Role

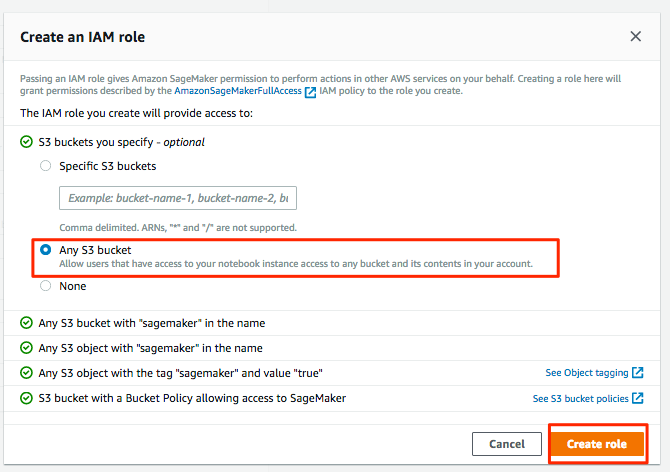

- Create a new role if one doesn't exist.

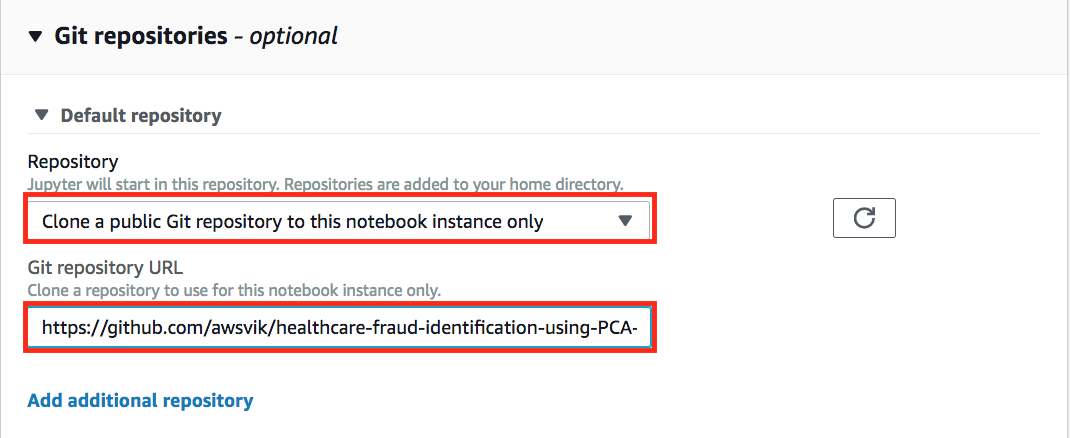

- Provide the path of the public Git repository that we will use in today's workshop. It is cloned at launch and contains the data dictionary and the Jupyter notebook.

- Click Create Notebook Instance

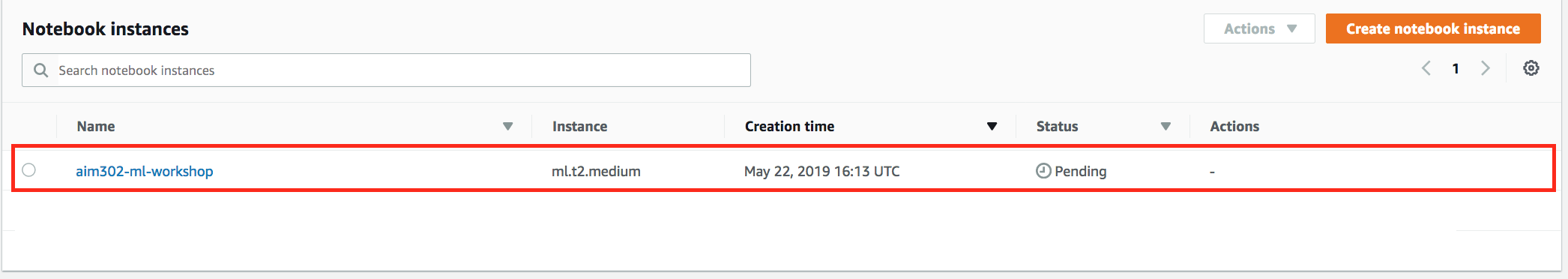

- In the Amazon SageMaker console, under Notebook Instances, wait for your notebook instance to start. Watch the status change from Pending to InService.

- Note the name of your notebook instance and click Open Jupyter for your notebook.

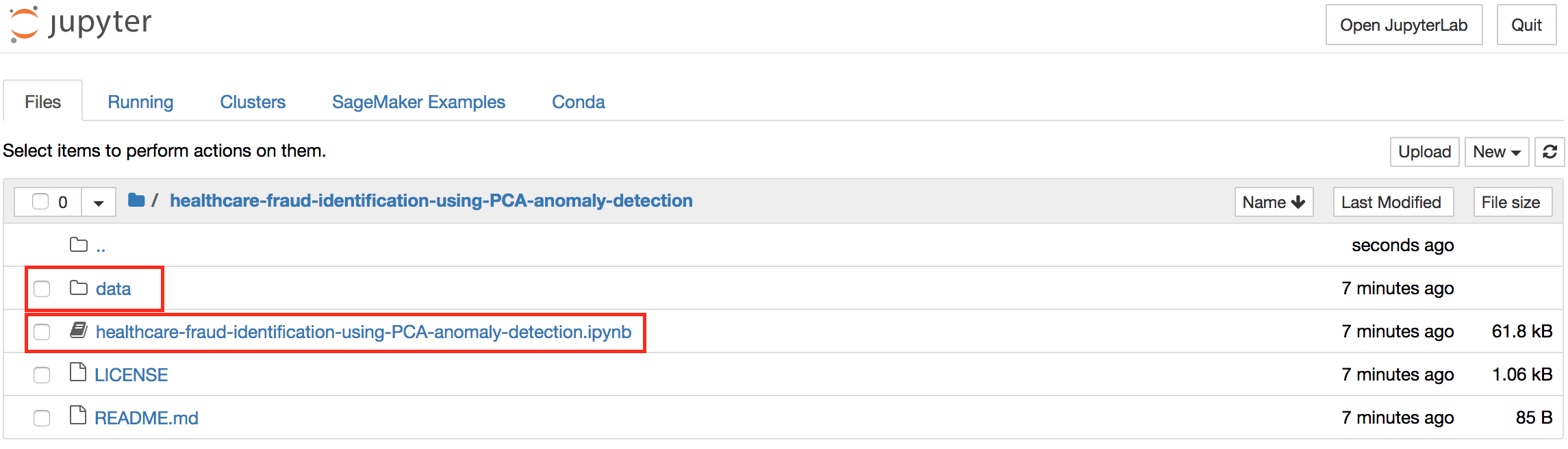

- Verify that the data and the notebook were cloned from the Git repository.
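If you prefer scripting to clicking through the console, the steps above can be approximated with boto3. This is a minimal sketch, not part of the workshop material. The helper names (`build_notebook_request`, `launch_notebook`) are hypothetical, and the default instance type `ml.t3.medium` is an assumption, since the workshop's configuration is only shown in the screenshots. The role ARN and repository URL are values you must supply yourself.

```python
def build_notebook_request(name, role_arn, repo_url, instance_type="ml.t3.medium"):
    """Build the parameters for SageMaker's CreateNotebookInstance call.

    `instance_type` defaults to an assumed value; use whatever the
    workshop screenshots suggest.
    """
    return {
        "NotebookInstanceName": name,
        "InstanceType": instance_type,
        "RoleArn": role_arn,
        # Git repository cloned into the instance when it launches.
        "DefaultCodeRepository": repo_url,
    }


def launch_notebook(sm_client, request):
    """Create the notebook instance and block until it is InService."""
    sm_client.create_notebook_instance(**request)
    waiter = sm_client.get_waiter("notebook_instance_in_service")
    waiter.wait(NotebookInstanceName=request["NotebookInstanceName"])


# Example usage (requires boto3 and AWS credentials; all values are placeholders):
#   import boto3
#   request = build_notebook_request(
#       "my-workshop-notebook", "<your-role-arn>", "<public-git-repo-url>")
#   launch_notebook(boto3.client("sagemaker"), request)
```

Passing the client in as an argument keeps the helpers easy to try out with a stub before you run them against a real account.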

Lab 1 - Finish your Lab in Jupyter Notebook

- Click healthcare-fraud-identification-using-PCA-anomaly-detection.ipynb and start working on your lab. From here onwards, all the instructions are in the Jupyter notebook. After you have completed all the steps in the notebook, come back and finish the remaining steps below.

Finish the Lab

- Congratulations! You have finished all the labs. Please make sure to delete all resources as described in the section below.

Cleanup Resources

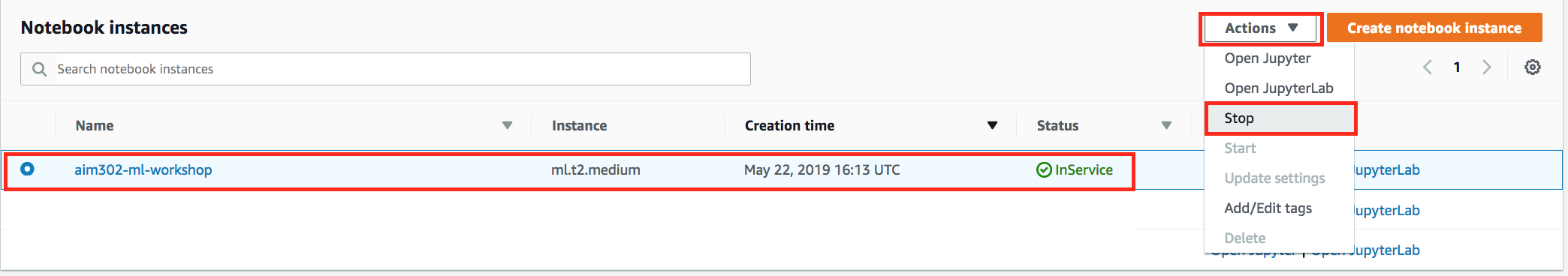

- Go to the Amazon SageMaker console to shut down your notebook instance. Select your instance from the list, then select Stop from the Actions drop-down menu.

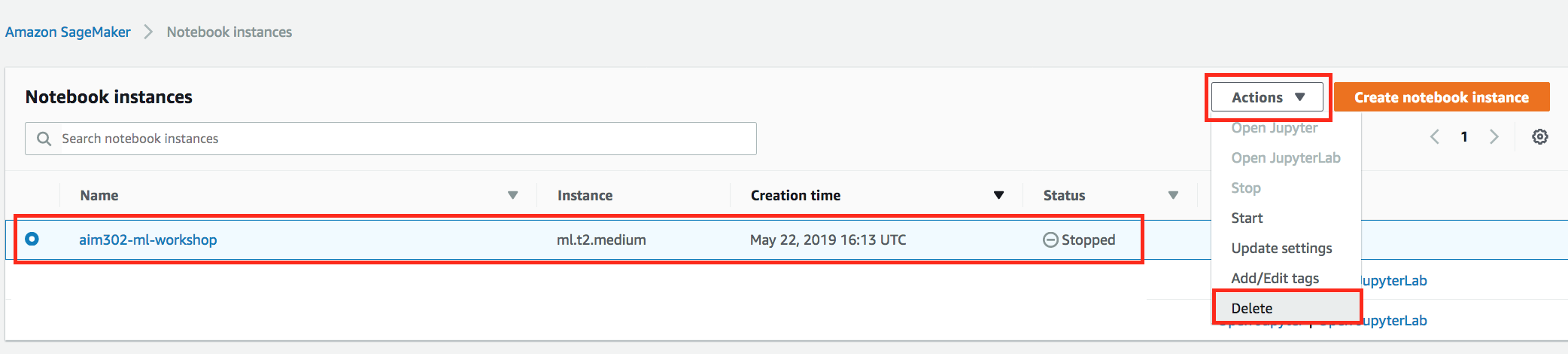

- After your notebook instance is completely stopped, select Delete from the Actions drop-down menu to delete your notebook instance.

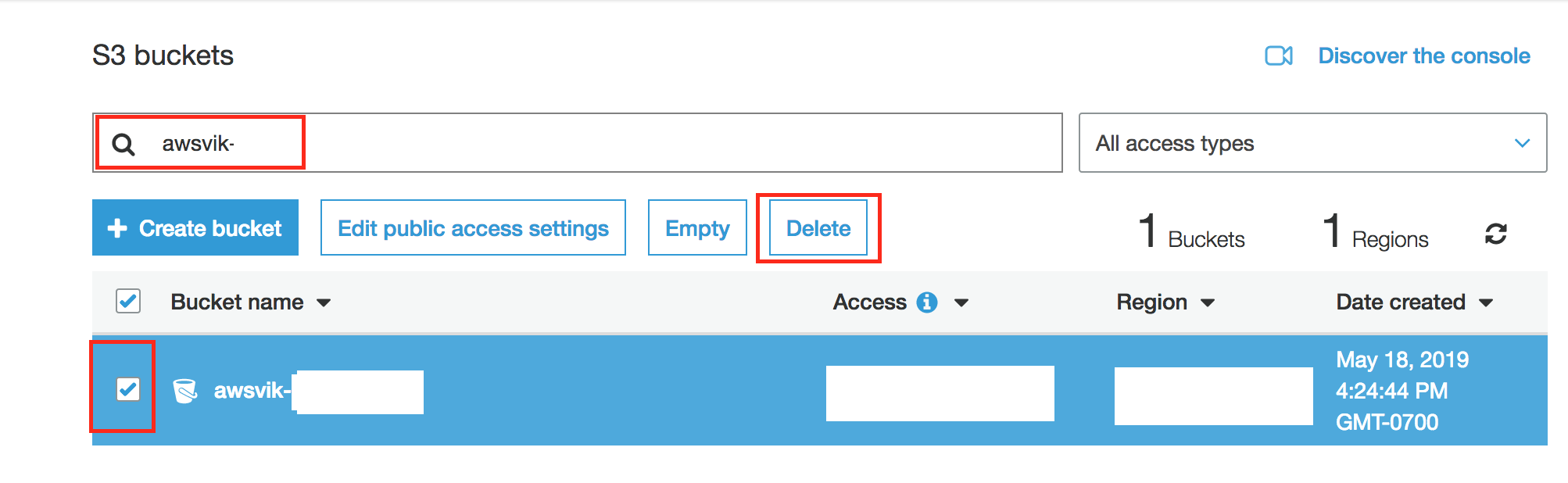

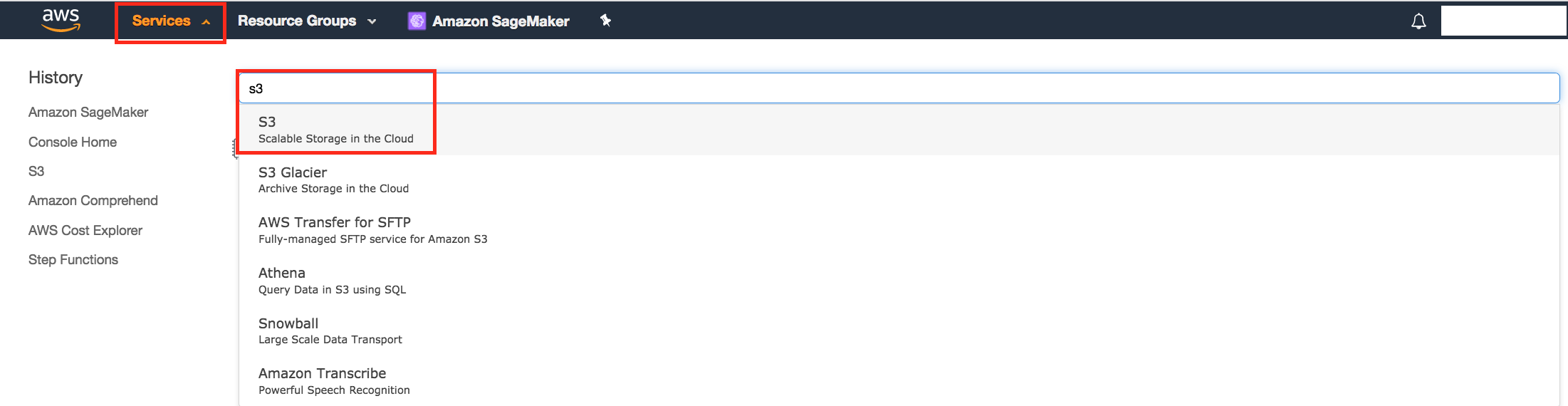

- Navigate to the S3 console.

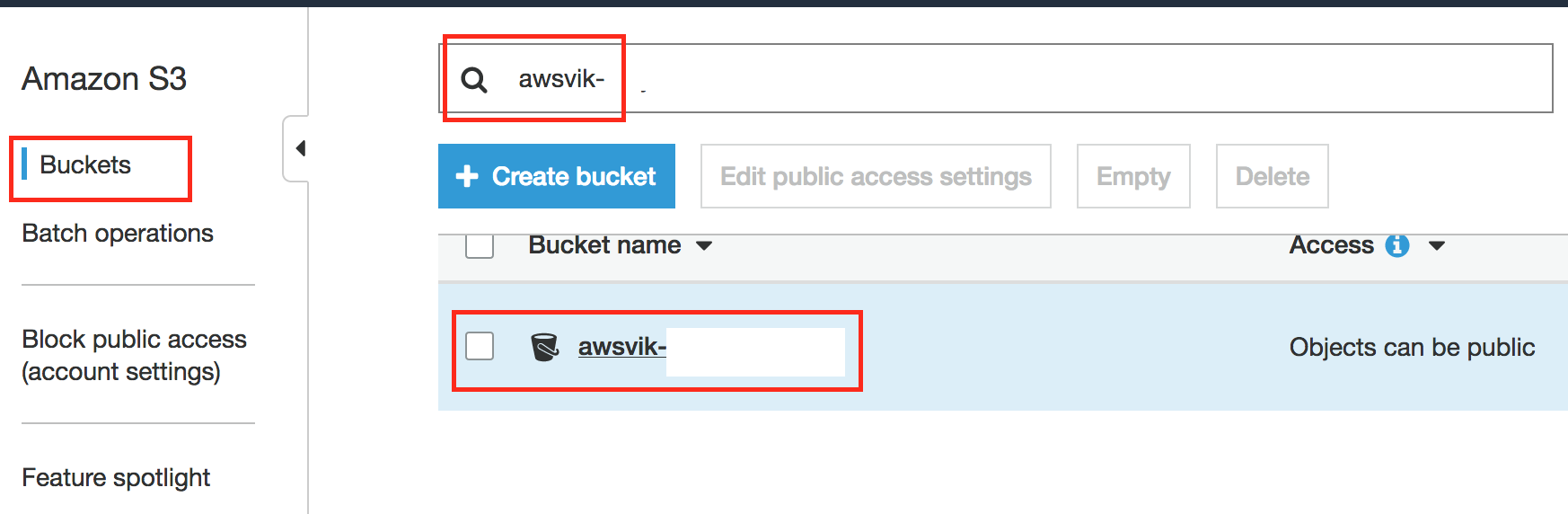

- Find the bucket created in Lab 1 and click it to list the objects in the bucket.

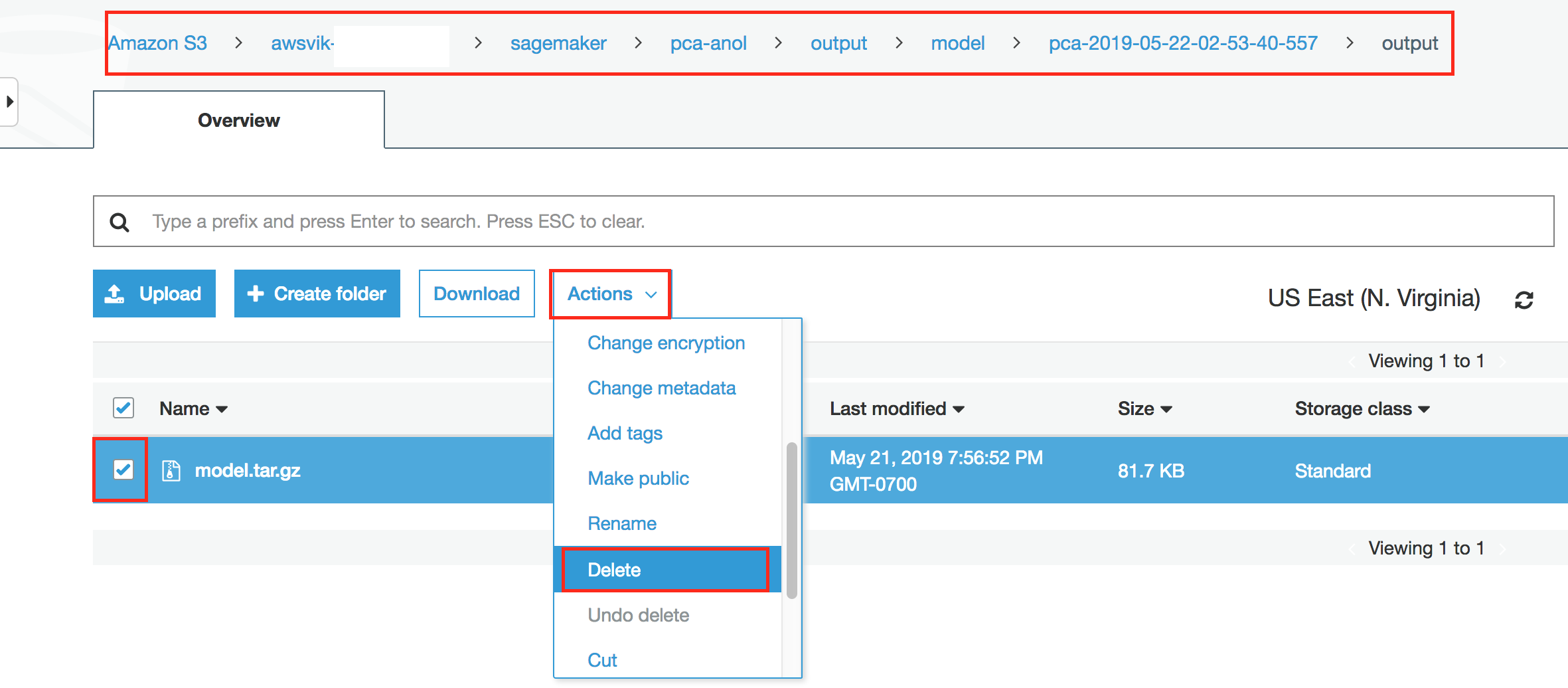

- Navigate to model-tar.gz and delete it using the Actions menu.

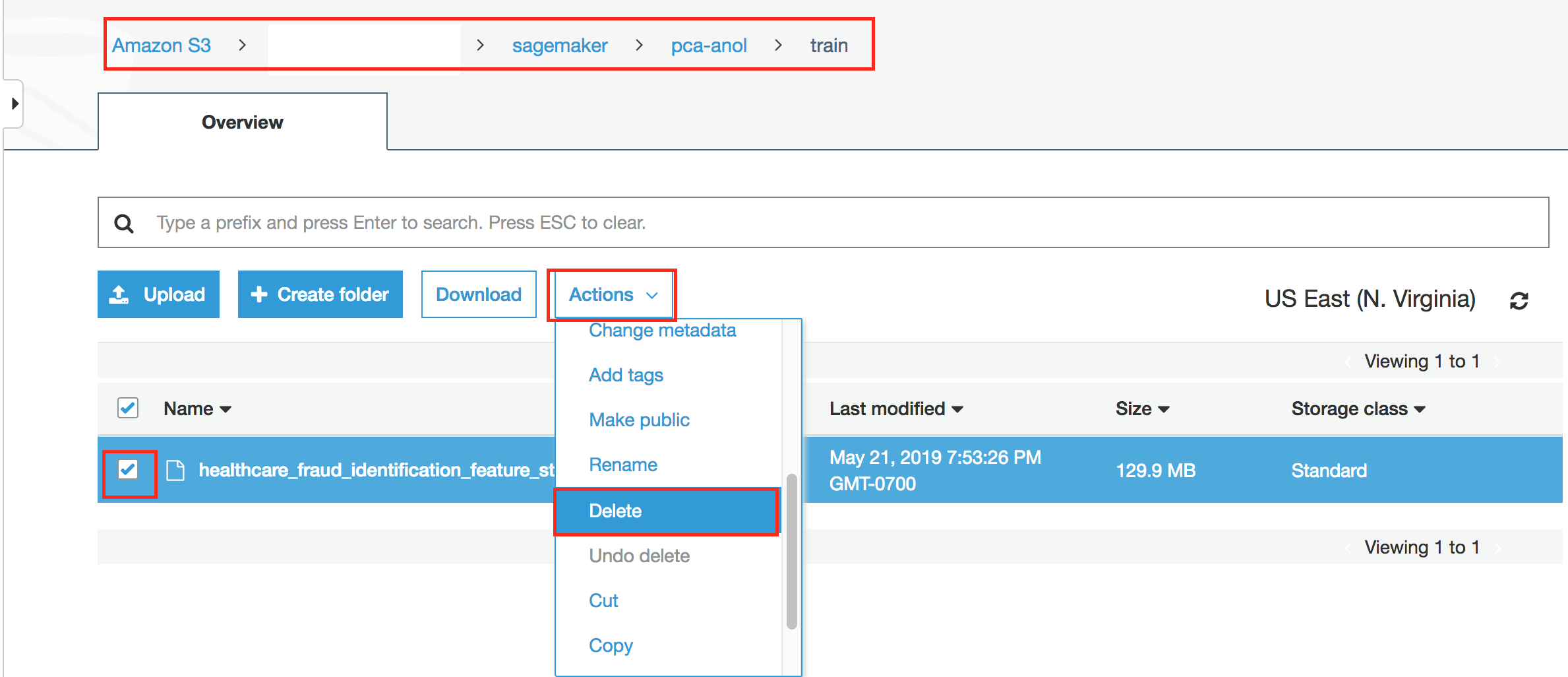

- Navigate to the training data file healthcare_fraud_identification_feature_store and delete it using the Actions menu.

- After all the objects in the bucket are deleted, delete the bucket using the Actions menu.
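The cleanup steps above can also be scripted with boto3. The sketch below is an illustration under stated assumptions, not part of the workshop material. The helper names are hypothetical, the bucket is assumed to be unversioned, and the second helper deletes every object in the bucket rather than only the two files named above. Double-check the notebook and bucket names before running anything like this against a real account.

```python
def stop_and_delete_notebook(sm_client, name):
    """Stop a notebook instance, wait until it is stopped, then delete it."""
    sm_client.stop_notebook_instance(NotebookInstanceName=name)
    # An instance can only be deleted once it has fully stopped.
    waiter = sm_client.get_waiter("notebook_instance_stopped")
    waiter.wait(NotebookInstanceName=name)
    sm_client.delete_notebook_instance(NotebookInstanceName=name)


def empty_and_delete_bucket(s3_client, bucket):
    """Delete every object in an unversioned bucket, then the bucket itself."""
    paginator = s3_client.get_paginator("list_objects_v2")
    for page in paginator.paginate(Bucket=bucket):
        keys = [{"Key": obj["Key"]} for obj in page.get("Contents", [])]
        if keys:
            s3_client.delete_objects(Bucket=bucket, Delete={"Objects": keys})
    # S3 refuses to delete a bucket that still contains objects.
    s3_client.delete_bucket(Bucket=bucket)


# Example usage (requires boto3 and AWS credentials; names are placeholders):
#   import boto3
#   stop_and_delete_notebook(boto3.client("sagemaker"), "<your-notebook-name>")
#   empty_and_delete_bucket(boto3.client("s3"), "<your-bucket-name>")
```

The order mirrors the console steps: the notebook instance must be stopped before it can be deleted, and the bucket must be empty before it can be removed.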