Denoising Diffusion Step-aware Models

Shuai Yang, Yukang Chen, Luozhou Wang, Shu Liu, Yingcong Chen

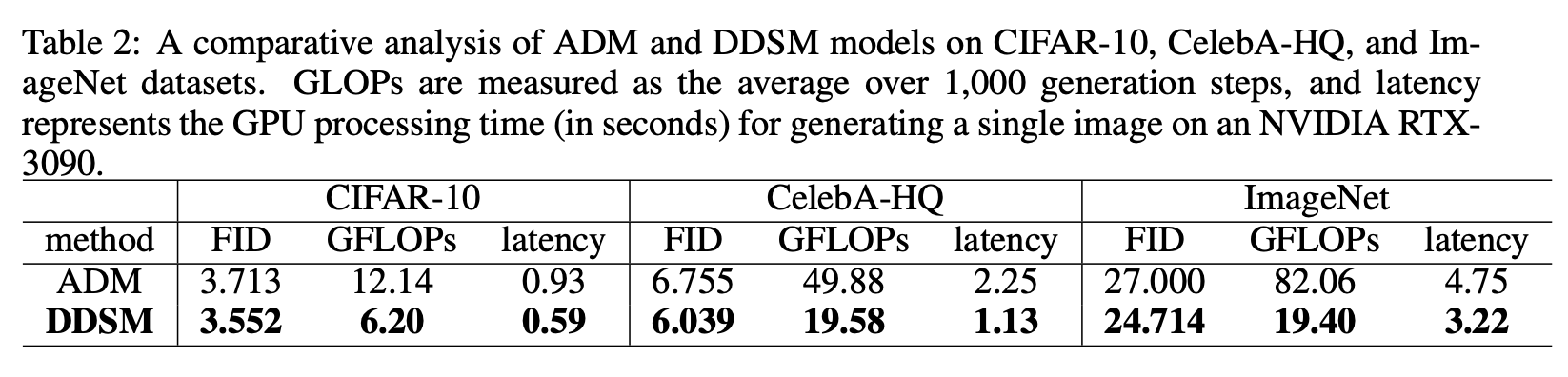

- 🚀 Achieve up to 76% reduction in computational costs for diffusion models without compromising on quality

- 🚀 Compatible with latent diffusion

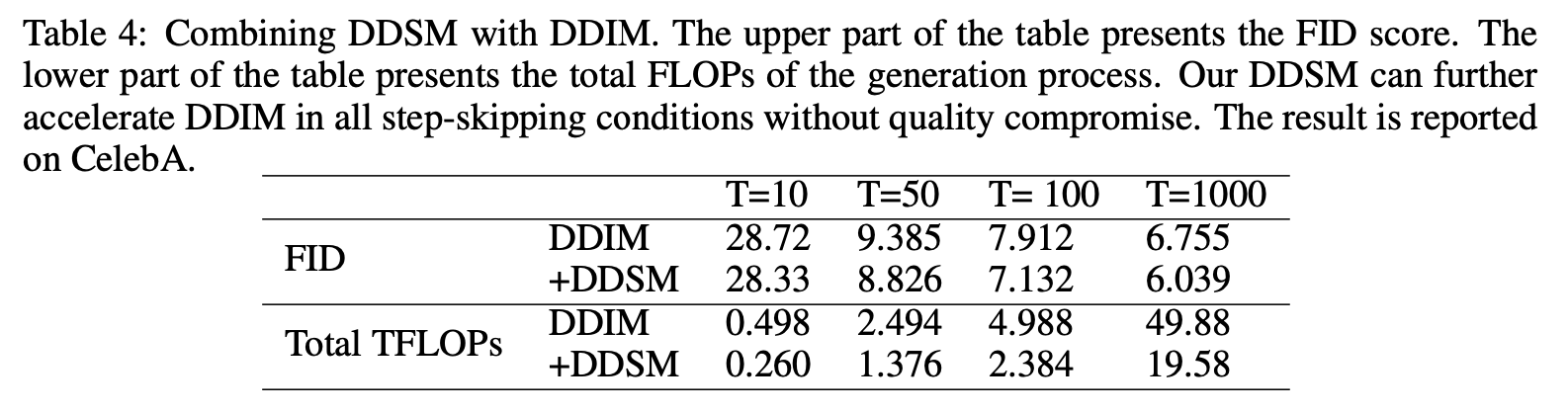

- 🚀 Compatible with sampling schedulers like DDIM and EDM

We introduce DDSM, a novel framework that makes diffusion models more efficient by adjusting the size of the neural network at each generative step, with the per-step choice of network size found by evolutionary search. This significantly reduces computational load, with savings of up to 76% on tasks such as ImageNet generation, without sacrificing generation quality.
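The step-aware idea can be illustrated with a small sketch. A strategy assigns one sub-network width to each denoising step. Its cost relative to always running the full network is the mean per-step cost, and an evolutionary search trades that cost against generation quality. This is a minimal illustration under stated assumptions, not the repository's implementation. The width set, the width-squared cost model, `toy_quality_penalty` (a hypothetical stand-in for FID) and all function names are ours. The genetic-algorithm operators (truncation selection, one-point crossover, per-step mutation) are a generic choice. The parameters `pop_size`, `num_generations`, `mutation_prob` and `quality_weight` play roles analogous to the search script's `--pop_size`, `--num_generation`, `--mutation_prob` and `--fid_weight` flags, but that mapping is an assumption.

```python
import random

# HYPOTHETICAL width options of a slimmable supernet. We ASSUME cost scales with
# width**2 (as for convolutions whose input and output channels shrink together);
# real per-width costs depend on the actual model.
WIDTHS = (0.25, 0.5, 0.75, 1.0)
WIDTH_COST = {w: w * w for w in WIDTHS}


def relative_cost(strategy):
    """Mean per-step cost of a strategy; 1.0 = full network at every step."""
    return sum(WIDTH_COST[w] for w in strategy) / len(strategy)


def savings(strategy):
    """Fraction of compute saved versus always running the full network."""
    return 1.0 - relative_cost(strategy)


def toy_quality_penalty(strategy):
    """HYPOTHETICAL stand-in for FID: shrinking the network costs quality and,
    by assumption only, more so at later steps. Index 0 = first denoising step."""
    n = len(strategy)
    return sum((1.0 - w) * (i + 1) / n for i, w in enumerate(strategy)) / n


def fitness(strategy, quality_weight):
    """Lower is better: compute cost plus weighted quality penalty."""
    return relative_cost(strategy) + quality_weight * toy_quality_penalty(strategy)


def mutate(strategy, prob, rng):
    """Resample each step's width independently with probability `prob`."""
    return [rng.choice(WIDTHS) if rng.random() < prob else w for w in strategy]


def crossover(a, b, rng):
    """One-point crossover between two parent strategies."""
    cut = rng.randrange(1, len(a))
    return a[:cut] + b[cut:]


def evolve(num_steps, pop_size, num_generations, mutation_prob, quality_weight, seed=0):
    """Plain genetic algorithm over per-step width strategies."""
    rng = random.Random(seed)
    population = [[rng.choice(WIDTHS) for _ in range(num_steps)] for _ in range(pop_size)]
    population[0] = [1.0] * num_steps  # seed with the full-network baseline
    for _ in range(num_generations):
        population.sort(key=lambda s: fitness(s, quality_weight))
        parents = population[: max(2, pop_size // 2)]  # elitist truncation selection
        children = []
        while len(parents) + len(children) < pop_size:
            a, b = rng.sample(parents, 2)
            children.append(mutate(crossover(a, b, rng), mutation_prob, rng))
        population = parents + children
    return min(population, key=lambda s: fitness(s, quality_weight))


best = evolve(num_steps=100, pop_size=20, num_generations=30,
              mutation_prob=0.05, quality_weight=1.0)
print(f"relative cost {relative_cost(best):.3f}, savings {savings(best):.1%}")
```

Seeding the population with the full-width strategy and keeping the top half of each generation makes this search elitist, so under this toy fitness the returned strategy is never worse than running the full network at every step.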

Install the dependencies:

pip install -r requirements.txt

To evaluate on CIFAR-10:

- prepare the pretrained supernet and flagfile (Download supernet checkpoint)

- prepare the stats of CIFAR-10 for computing FID (Download stats file)

- run the following script

python main.py --flagfile eval/flagfile_eval.txt --notrain --eval_stepaware --parallel --batch_size 1024 --ckpt_name ckpt_450000

To run the step-aware evolutionary search:

- prepare the pretrained supernet and flagfile (Download supernet checkpoint)

- run the following script

python main.py --search --flagfile work_dir/flagfile.txt --parallel --batch_size 2048 --ckpt_name ckpt_450000 \
    --num_generation 10 --pop_size 50 --num_images 4096 --fid_weight 1.0 --mutation_prob 0.001

To train the slimmable supernet on CIFAR-10:

python main.py --train --flagfile ./config/slimmable_CIFAR10.txt --parallel --logdir=./work_dir

If you find this project useful in your research, please consider citing:

@misc{yang2024denoising,
  title={Denoising Diffusion Step-aware Models},
  author={Shuai Yang and Yukang Chen and Luozhou Wang and Shu Liu and Yingcong Chen},
  year={2024},
  eprint={2310.03337},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

TODO:

- release DDSM training, search and inference code on CIFAR-10.

- release checkpoints.

- make DDSM compatible with diffusers.