This is the official PyTorch implementation of Win. See the ICLR paper and its extension. If you find Win helpful or inspiring for your projects, please cite the paper and star this repository. Thanks!

```bibtex
@inproceedings{zhou2022win,
  title={Win: Weight-Decay-Integrated Nesterov Acceleration for Adaptive Gradient Algorithms},
  author={Zhou, Pan and Xie, Xingyu and Yan, Shuicheng},
  booktitle={International Conference on Learning Representations},
  year={2022}
}
```

To make Win easy to use, we first give some brief, intuitive instructions, then some general experimental tips, and finally more details (e.g., specific commands and hyper-parameters) for the main experiments in the paper.

Win can be integrated with most optimizers, including the SoTA Adan as well as Adam, AdamW, LAMB, and SGD, using the following steps.

Step 1. Add the Win- and Win2-dependent hyper-parameters to the config:

```python
import argparse

# In your training script, `parser` is usually already defined; it is created here
# only so the snippet runs on its own.
parser = argparse.ArgumentParser()

parser.add_argument('--acceleration-mode', type=str, default="win", choices=['win', 'win2', 'none'],
                    help='whether to use win, win2, or vanilla (no acceleration) to accelerate the optimizer; choices: win, win2, none')
parser.add_argument('--reckless-steps', default=(2.0, 8.0), type=float, nargs='+',
                    help='two coefficients used as the multiples of the reckless stepsizes over the conservative stepsize in Win and Win2 (default: (2.0, 8.0))')
parser.add_argument('--max-grad-norm', type=float, default=0.0,
                    help='max grad norm (same as clip gradient norm; default: 0.0, i.e., no clipping)')
```

`reckless-steps`: two coefficients used as the multiples of the reckless stepsizes over the conservative stepsize in Win and Win2, namely
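As a quick check of how these flags parse, the sketch below rebuilds the three Step 1 arguments (plus a hypothetical `--lr`, added only so it runs on its own) and computes the reckless stepsizes implied by the description above. It assumes each coefficient simply multiplies the base (conservative) learning rate; the real optimizer may additionally apply a schedule or warmup. `build_parser` and `reckless_stepsizes` are illustrative helpers, not part of this repository.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Mirror of the Step 1 arguments; `--lr` is a hypothetical stand-in for your own LR flag."""
    parser = argparse.ArgumentParser(description="Win/Win2 optimizer options (sketch)")
    parser.add_argument('--lr', type=float, default=1e-3)
    parser.add_argument('--acceleration-mode', type=str, default='win',
                        choices=['win', 'win2', 'none'])
    parser.add_argument('--reckless-steps', default=(2.0, 8.0), type=float, nargs='+')
    parser.add_argument('--max-grad-norm', type=float, default=0.0)
    return parser


def reckless_stepsizes(args: argparse.Namespace) -> tuple:
    """Multiply each reckless-step coefficient by the base (conservative) lr.

    Assumption: the coefficients scale the base lr directly; any schedule or
    warmup applied inside the real optimizer is ignored here.
    """
    coeffs = tuple(args.reckless_steps)  # nargs='+' yields a list; the default is a tuple
    if len(coeffs) != 2:
        raise ValueError(f"expected two reckless-step coefficients, got {len(coeffs)}")
    return tuple(c * args.lr for c in coeffs)


if __name__ == "__main__":
    args = build_parser().parse_args(
        ['--acceleration-mode', 'win2', '--reckless-steps', '2.0', '8.0', '--lr', '1e-3'])
    print(args.acceleration_mode, reckless_stepsizes(args))
```

Note that on the command line the coefficients are passed space-separated (`--reckless-steps 2.0 8.0`), and `choices` makes argparse reject a misspelled acceleration mode instead of silently accepting it.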

Step 2. Create the corresponding vanilla, Win-, or Win2-accelerated optimizer. In this step, you can directly replace the vanilla optimizer as follows:

```python
# Pick ONE of the following, depending on the base optimizer you want to accelerate.
# `params` are the model parameters (or parameter groups); `args` is the parsed config,
# which is assumed to already define `lr` and `weight_decay` besides the Step 1 arguments.

# AdamW
from adamw_win import AdamW_Win
optimizer = AdamW_Win(params, lr=args.lr, betas=(0.9, 0.999), reckless_steps=args.reckless_steps, weight_decay=args.weight_decay, max_grad_norm=args.max_grad_norm, acceleration_mode=args.acceleration_mode)

# Adam
from adam_win import Adam_Win
optimizer = Adam_Win(params, lr=args.lr, betas=(0.9, 0.999), reckless_steps=args.reckless_steps, weight_decay=args.weight_decay, max_grad_norm=args.max_grad_norm, acceleration_mode=args.acceleration_mode)

# LAMB
from lamb_win import LAMB_Win
optimizer = LAMB_Win(params, lr=args.lr, betas=(0.9, 0.999), reckless_steps=args.reckless_steps, weight_decay=args.weight_decay, max_grad_norm=args.max_grad_norm, acceleration_mode=args.acceleration_mode)

# SGD (with Nesterov momentum)
from sgd_win import SGD_Win
optimizer = SGD_Win(params, lr=args.lr, momentum=0.9, reckless_steps=args.reckless_steps, weight_decay=args.weight_decay, max_grad_norm=args.max_grad_norm, nesterov=True, acceleration_mode=args.acceleration_mode)
```

- For hyper-parameters: to keep Win and Win2 simple, in all experiments we tune the vanilla hyper-parameters (e.g., stepsize, weight decay, and warmup) around the values used by the vanilla optimizer.

- For robustness: in most cases, Win- and Win2-accelerated optimizers are much more robust to hyper-parameters (e.g., stepsize, weight decay, and warmup) than vanilla optimizers, especially on large models. For example, on ViT-base, the official AdamW fails and collapses when the default stepsize is made slightly larger (e.g., 2× larger), while the Win- and Win2-accelerated optimizers tolerate this larger stepsize and still achieve very good performance.

- For GPU memory and computational overhead: as shown in the paper, Win- and Win2-accelerated optimizers add little extra GPU memory or computation. This is because, for most models, backpropagation dominates GPU memory usage (e.g., storing the features of all layers to compute the gradient), which is much larger than the memory taken by the model parameters themselves. For huge models, however, they may incur noticeable extra memory, since they maintain one or two extra sequences of the same size as the model parameters. This can be mitigated with ZeroRedundancyOptimizer, which shards optimizer states across distributed data-parallel processes to reduce the per-process memory footprint.
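To judge whether the extra sequences matter for a given model, a rough estimate is the parameter memory multiplied by the number of extra sequences. The sketch below assumes each extra sequence has exactly the same shape and dtype as the parameters, as described above, and ignores allocator overhead; `param_bytes` and `extra_state_bytes` are hypothetical helpers, not part of this repository.

```python
import torch.nn as nn


def param_bytes(model: nn.Module) -> int:
    """Bytes occupied by the model's parameters (element count x bytes per element)."""
    return sum(p.numel() * p.element_size() for p in model.parameters())


def extra_state_bytes(model: nn.Module, num_extra_sequences: int) -> int:
    """Rough extra memory for Win/Win2's additional parameter-sized sequences.

    Assumption: each extra sequence matches the parameters in shape and dtype.
    """
    return num_extra_sequences * param_bytes(model)


if __name__ == "__main__":
    model = nn.Sequential(nn.Linear(1024, 4096), nn.GELU(), nn.Linear(4096, 1024))
    for k in (1, 2):
        print(f"{k} extra sequence(s): {extra_state_bytes(model, k) / 2**20:.1f} MiB")
```

For instance, for a model with roughly 86M fp32 parameters (about the size of ViT-base), one extra sequence takes about 86e6 × 4 B ≈ 344 MB and two take about 688 MB, which is usually small next to the activation memory of a training batch.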
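A minimal sketch of wrapping an optimizer with PyTorch's `torch.distributed.optim.ZeroRedundancyOptimizer`, which takes an optimizer class plus its keyword arguments and keeps only a shard of the optimizer state on each rank. It assumes the Win classes from Step 2 are ordinary `torch.optim.Optimizer` subclasses that can be passed as `optimizer_class` (not verified here), so `torch.optim.AdamW` stands in for `AdamW_Win`. The single-process CPU `gloo` group exists only so the example runs without a cluster; `build_zero_optimizer` and `demo_single_process` are hypothetical names.

```python
import os
import tempfile

import torch
import torch.distributed as dist
import torch.nn as nn
from torch.distributed.optim import ZeroRedundancyOptimizer


def build_zero_optimizer(model: nn.Module, optimizer_class, **optimizer_kwargs):
    # Each rank keeps only its shard of the optimizer state; ZeRO re-synchronizes
    # the parameters across ranks after every step().
    return ZeroRedundancyOptimizer(
        model.parameters(), optimizer_class=optimizer_class, **optimizer_kwargs)


def demo_single_process() -> float:
    # One-process "gloo" group on CPU, initialized through a fresh file; in real
    # training, the launcher (e.g. torchrun) and DDP set up the process group.
    init_file = os.path.join(tempfile.mkdtemp(), "dist_init")
    dist.init_process_group("gloo", init_method=f"file://{init_file}", rank=0, world_size=1)
    try:
        model = nn.Linear(8, 2)
        # torch.optim.AdamW is a stand-in for AdamW_Win (assumption: AdamW_Win
        # can be constructed as optimizer_class(params, **kwargs)).
        opt = build_zero_optimizer(model, torch.optim.AdamW, lr=1e-3, weight_decay=0.05)
        x, y = torch.randn(16, 8), torch.randn(16, 2)
        loss = nn.functional.mse_loss(model(x), y)
        opt.zero_grad()
        loss.backward()
        opt.step()
        return loss.item()
    finally:
        dist.destroy_process_group()


if __name__ == "__main__":
    print(f"loss before the step: {demo_single_process():.4f}")
```

With more than one process, the memory saved per rank grows with the world size, since each rank stores roughly 1/world_size of the optimizer state.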

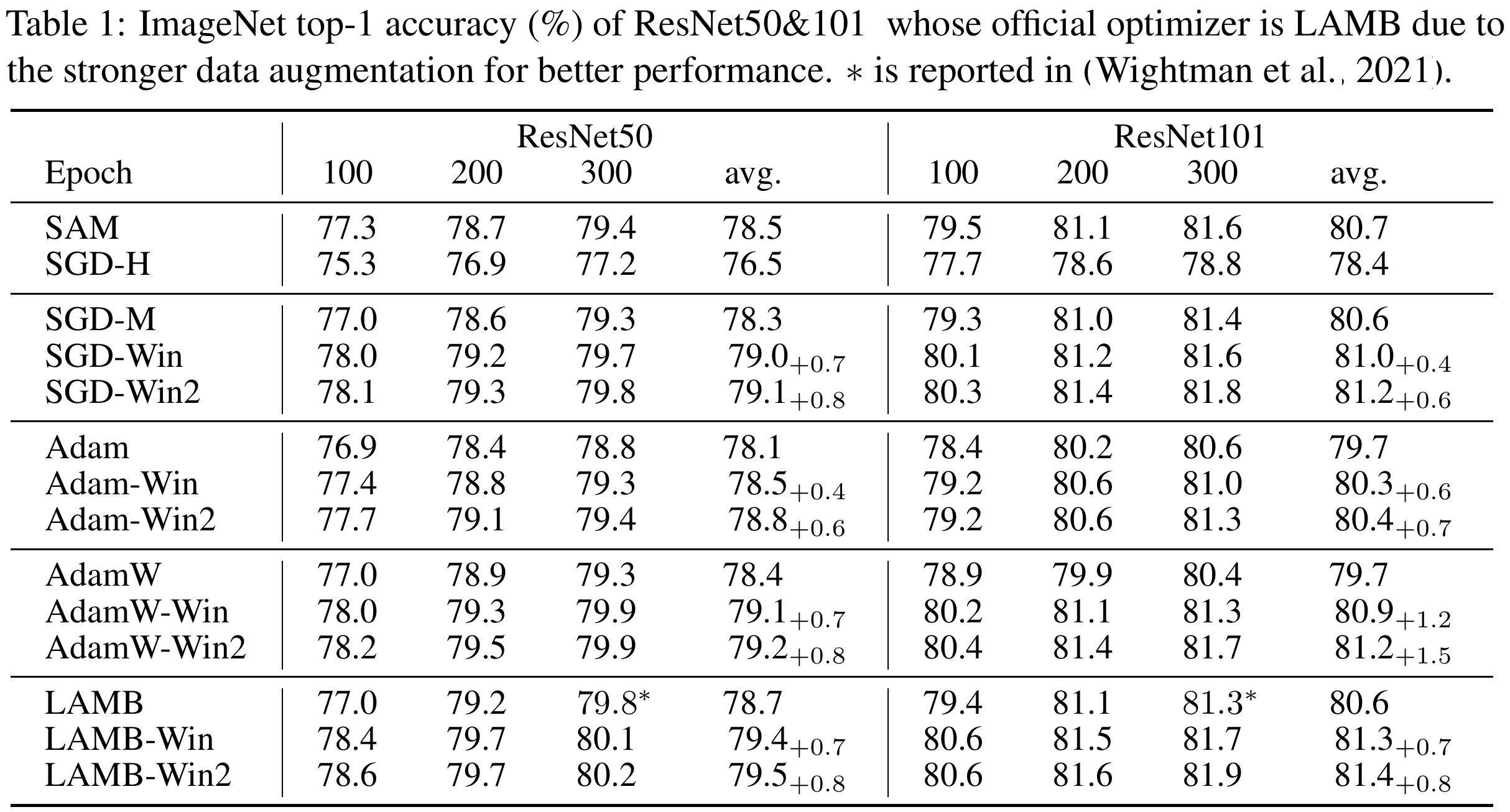

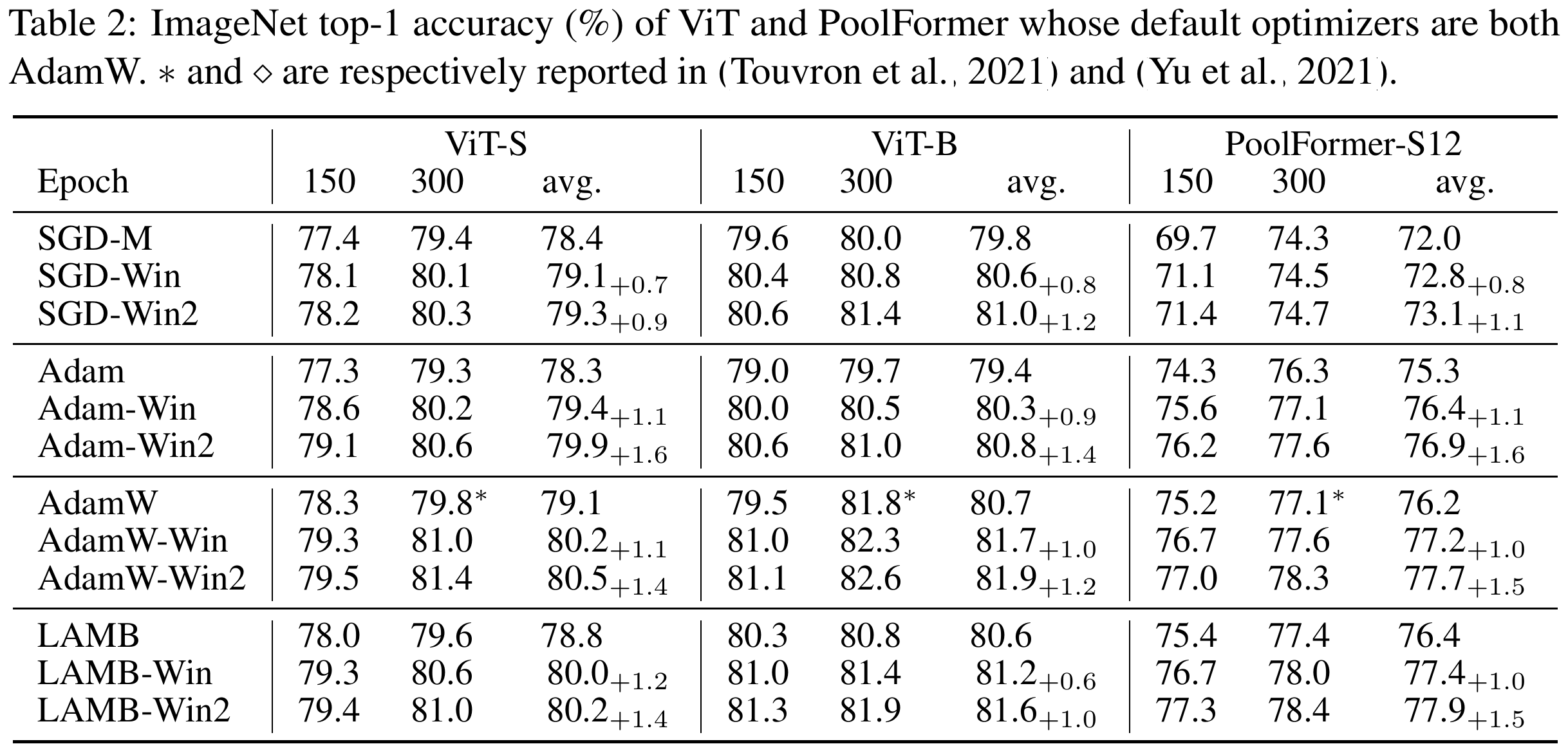

To make Win and Win2 easier to use, we provide the configs and log files for the ImageNet-1k experiments.

Here we provide the training logs and configs for 300 training epochs:

| Optimizer | ResNet50 | ResNet101 | ViT-small | ViT-base |
|---|---|---|---|---|
| SGD-Win | (config, log) | (config, log) | (config, log) | (config, log) |
| SGD-Win2 | (config, log) | (config, log) | (config, log) | (config, log) |
| Adam-Win | (config, log) | (config, log) | (config, log) | (config, log) |
| Adam-Win2 | (config, log) | (config, log) | (config, log) | (config, log) |
| AdamW-Win | (config, log) | (config, log) | (config, log) | (config, log) |
| AdamW-Win2 | (config, log) | (config, log) | (config, log) | (config, log) |
| LAMB-Win | (config, log) | (config, log) | (config, log) | (config, log) |
| LAMB-Win2 | (config, log) | (config, log) | (config, log) | (config, log) |

We will release them soon.