[Paper] [Blog] [Colab] [Spaces]

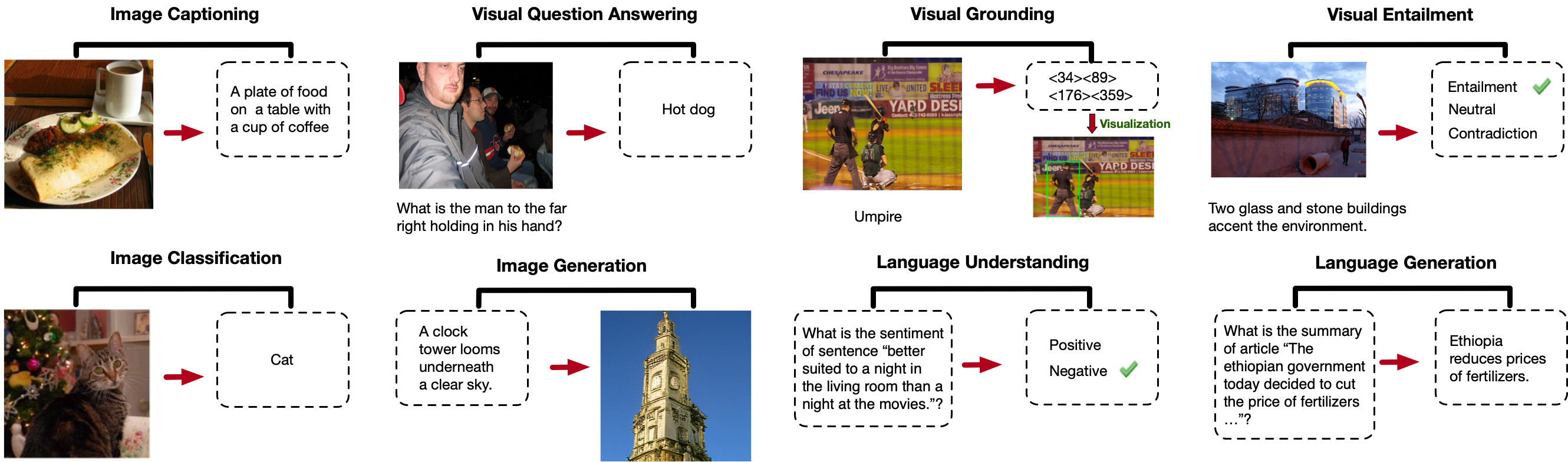

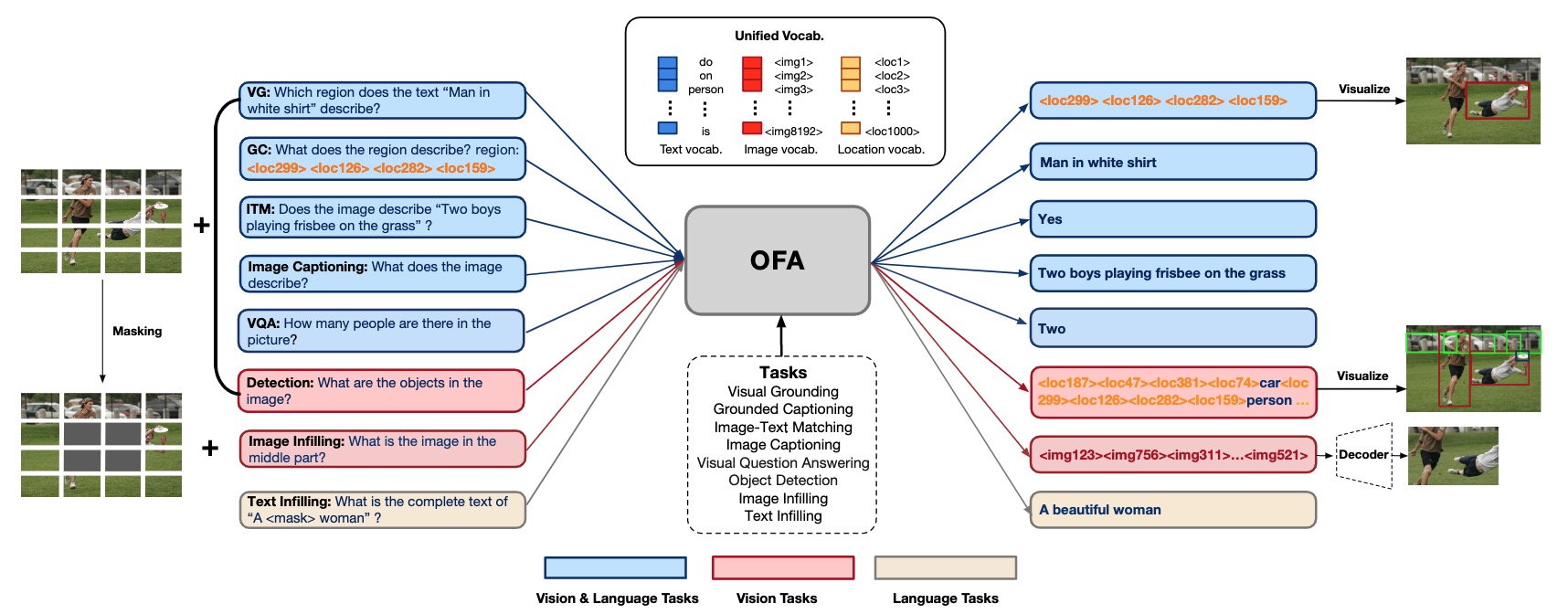

OFA is a unified multimodal pretrained model that unifies modalities (i.e., cross-modality, vision, and language) and tasks (e.g., image generation, visual grounding, image captioning, image classification, and text generation) in a simple sequence-to-sequence learning framework. For more information, please refer to our paper: Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework.

- 2022.2.13: Released the demo of image captioning. Have fun!

- 2022.2.11: Released the Colab notebook for image captioning. Enjoy!

- 2022.2.11: Released the pretrained checkpoint of OFA-Large and the complete (two-stage) finetuning code for image captioning.

- 2022.2.10: Released the inference code and the finetuned checkpoint for image captioning, which reproduce the results on the COCO Karpathy test split (149.6 CIDEr).

- To be released soon: finetuning and inference code for multimodal downstream tasks, including image captioning, VQA, text-to-image generation, SNLI-VE, and referring expression comprehension.

- To be released soon: pretraining code.

- Python 3.7.4

- PyTorch 1.8.1

- Java 1.8 (for COCO evaluation)
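The versions above are the ones the authors list as tested. As a minimal sketch (not part of the OFA repository), the Python snippet below compares a local setup against them. The constant names and warning texts are illustrative assumptions; it treats only an exact PyTorch 1.8.1 match as "tested", and it only checks that some `java` executable is on `PATH`, not that it is Java 1.8.

```python
import shutil
import sys
from typing import List, Tuple

# Versions from the requirements list; other versions may also work.
TESTED_PYTHON = (3, 7)
TESTED_TORCH = (1, 8, 1)


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '1.8.1+cu111' into (1, 8, 1); non-numeric parts such as '0a0' are dropped."""
    core = version.split("+")[0]
    return tuple(int(part) for part in core.split(".") if part.isdigit())


def check_environment() -> List[str]:
    """Return human-readable warnings; an empty list means everything matched."""
    warnings = []
    if sys.version_info[:2] != TESTED_PYTHON:
        warnings.append("Python %d.%d differs from tested 3.7" % sys.version_info[:2])
    try:
        import torch  # imported lazily so the check still runs without PyTorch
    except ImportError:
        warnings.append("PyTorch is not installed")
    else:
        if parse_version(torch.__version__) != TESTED_TORCH:
            warnings.append("PyTorch %s differs from tested 1.8.1" % torch.__version__)
    # Assumption: COCO evaluation invokes `java` from PATH; the Java version is not checked.
    if shutil.which("java") is None:
        warnings.append("java not found on PATH (needed for COCO evaluation)")
    return warnings


if __name__ == "__main__":
    for warning in check_environment():
        print("WARNING:", warning)
```

The check reports mismatches instead of failing, since newer versions may well work; the list above only records what was tested.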

git clone https://github.com/OFA-Sys/OFA

cd OFA

pip install -r requirements.txt

For the datasets and checkpoints, see datasets.md and checkpoints.md.

Pretraining: to be released soon.

Below we provide instructions for finetuning and inference on different downstream tasks, starting with image captioning.

- Download the data and files (see datasets.md and checkpoints.md) and put them in the correct directories.

- Train

cd run_scripts/caption

nohup sh train_caption_stage1.sh & # stage1, train with cross-entropy loss

nohup sh train_caption_stage2.sh & # stage2, load the best ckpt of stage1 and train with CIDEr optimization - Inference

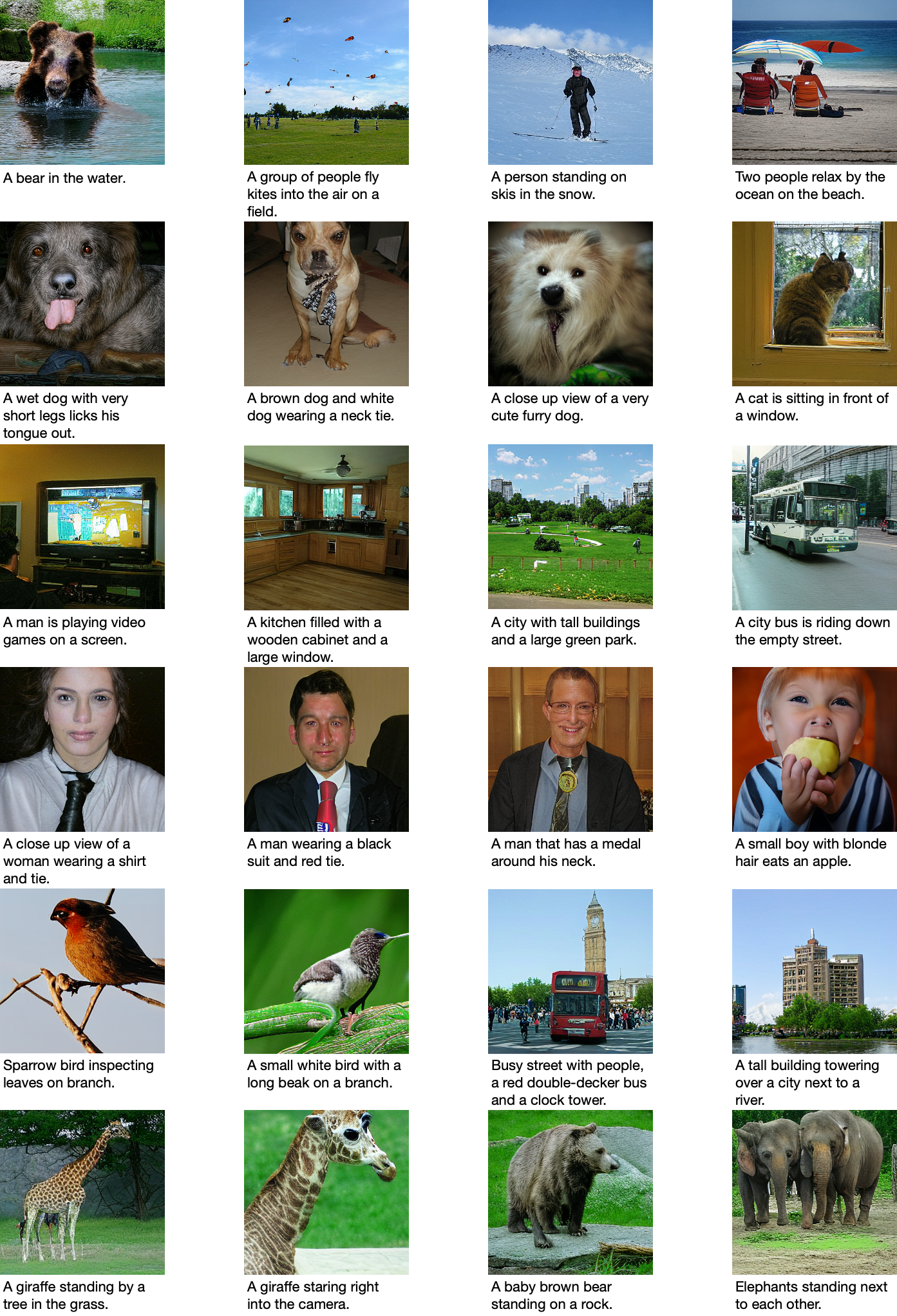

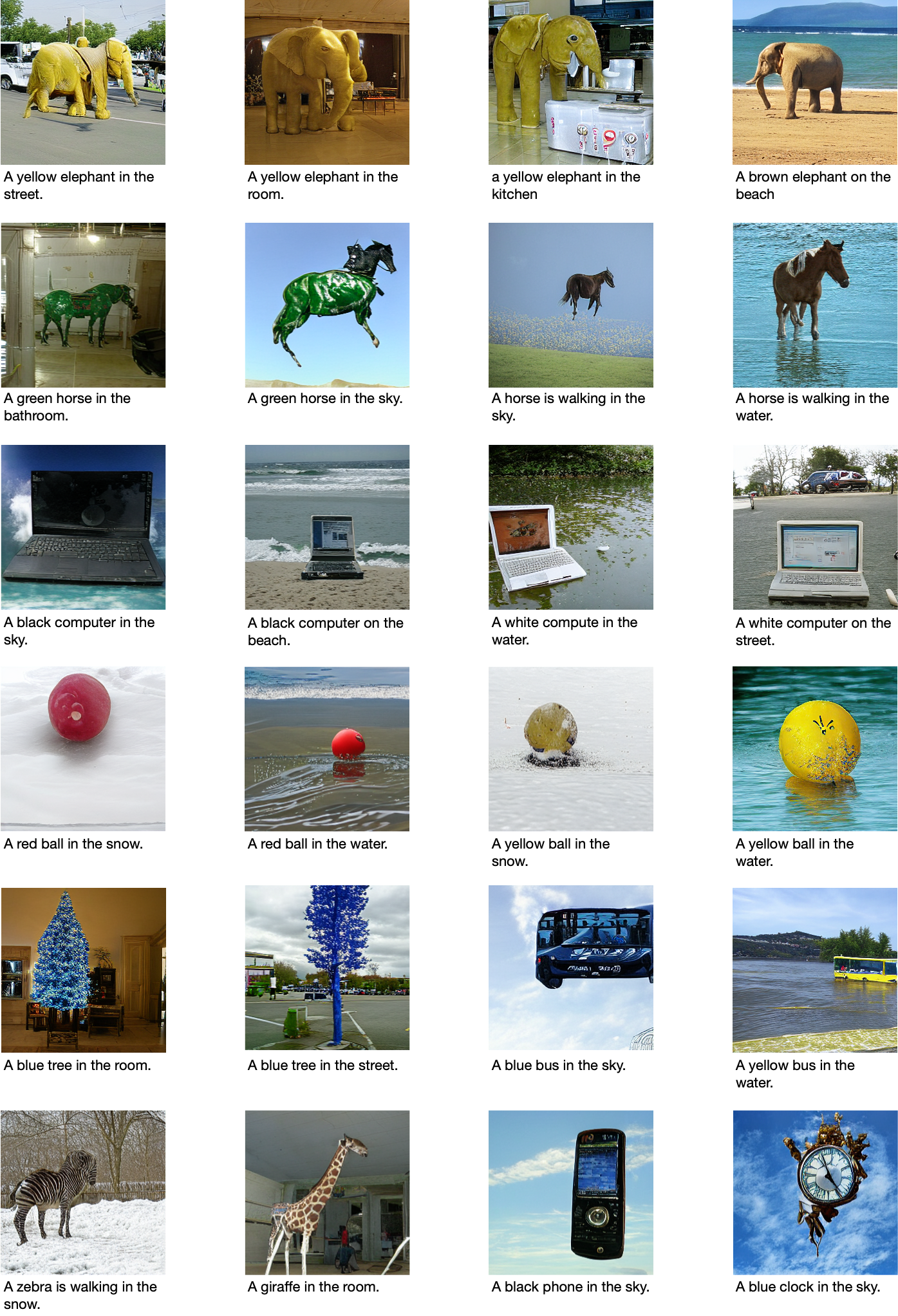

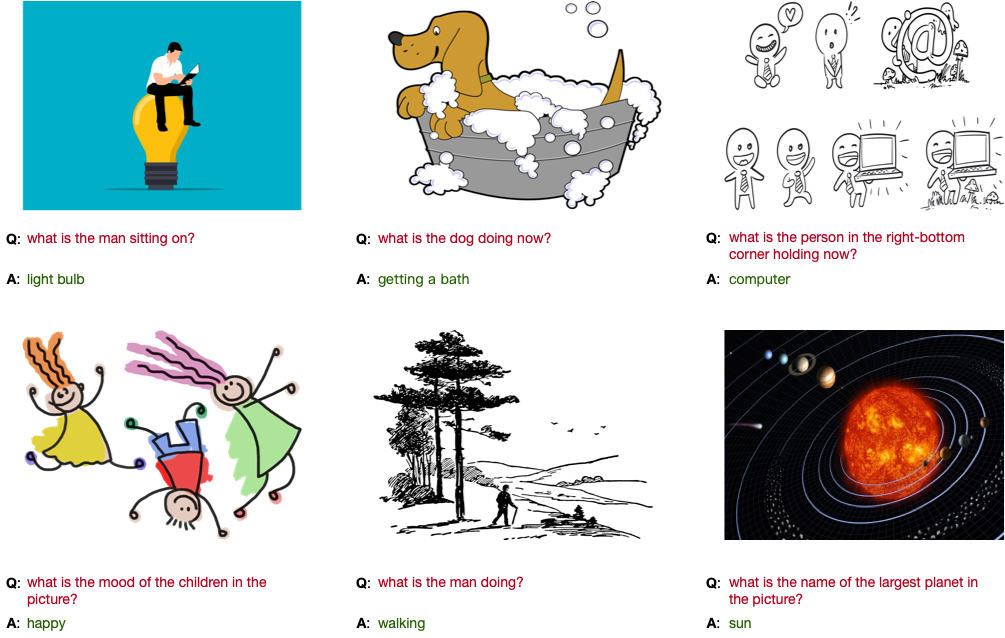

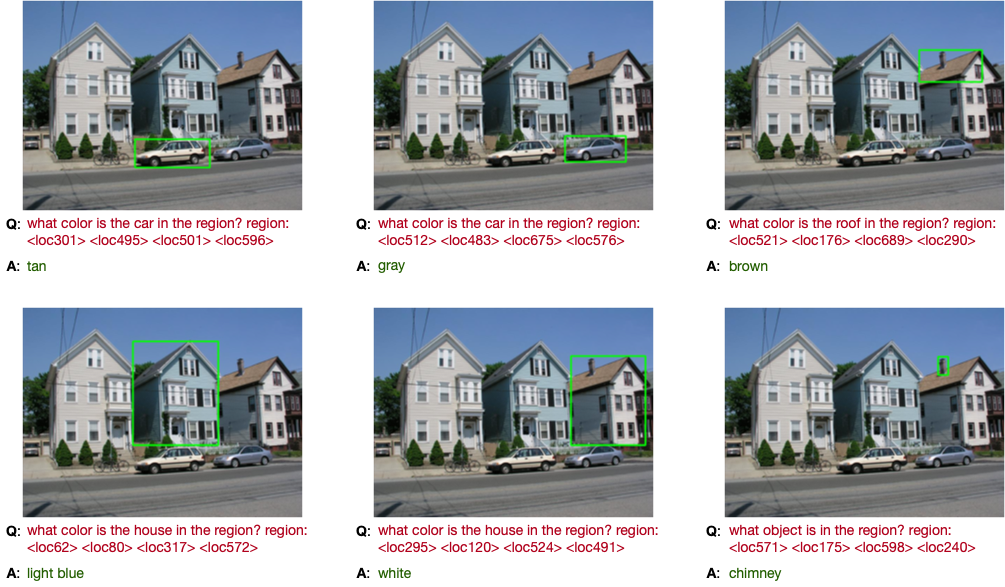

cd run_scripts/caption ; sh evaluate_caption.sh # inference & evaluateBelow we provide examples of OFA in text-to-image generation and open-ended VQA. Also, we demonstrate its performance in unseen task (Grounded QA) as well as unseen domain (Visual Grounding on images from unseen domains).
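Stage 2 of the image-captioning recipe (`train_caption_stage2.sh`) is described only as training "with CIDEr optimization". A common way to optimize a non-differentiable metric such as CIDEr is self-critical sequence training (SCST): sample a caption, score it, subtract the score of a baseline caption for the same image, and scale the sample's log-probability by that difference. The sketch below shows only that reward arithmetic in plain Python, with floats standing in for tensors. Whether OFA's script uses exactly this baseline is an assumption, and the function names are hypothetical, not part of the OFA codebase.

```python
from typing import List, Sequence


def self_critical_advantages(
    sample_rewards: Sequence[float], baseline_rewards: Sequence[float]
) -> List[float]:
    """Advantage of each sampled caption over its baseline caption.

    In the original self-critical formulation the baseline is the reward
    (e.g. CIDEr) of the greedily decoded caption for the same image.
    """
    if len(sample_rewards) != len(baseline_rewards):
        raise ValueError("need one baseline reward per sampled caption")
    return [s - b for s, b in zip(sample_rewards, baseline_rewards)]


def scst_loss(sample_log_probs: Sequence[float], advantages: Sequence[float]) -> float:
    """REINFORCE-style loss averaged over the batch.

    sample_log_probs[i] is the summed token log-probability of sampled
    caption i. Minimizing this loss raises the probability of captions that
    beat their baseline (positive advantage) and lowers it otherwise. In a
    real trainer these values would be tensors that carry gradients.
    """
    if len(sample_log_probs) != len(advantages) or not advantages:
        raise ValueError("need a non-empty batch with matching lengths")
    total = sum(a * lp for a, lp in zip(advantages, sample_log_probs))
    return -total / len(advantages)


if __name__ == "__main__":
    # Illustrative numbers only, not OFA results.
    adv = self_critical_advantages([1.5, 0.5], [1.0, 1.0])
    print(adv)  # [0.5, -0.5]
    print(scst_loss([-2.0, -4.0], adv))  # -0.5
```

Using a baseline from the model itself means only captions that score better than the model's own default output are reinforced, which is why stage 2 starts from the best stage-1 checkpoint rather than from scratch.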

Please cite our paper if you find it helpful :)

@article{wang2022OFA,
  title={Unifying Architectures, Tasks, and Modalities Through a Simple Sequence-to-Sequence Learning Framework},
  author={Wang, Peng and Yang, An and Men, Rui and Lin, Junyang and Bai, Shuai and Li, Zhikang and Ma, Jianxin and Zhou, Chang and Zhou, Jingren and Yang, Hongxia},
  journal={arXiv e-prints},
  pages={arXiv--2202},
  year={2022}
}

License: Apache-2.0