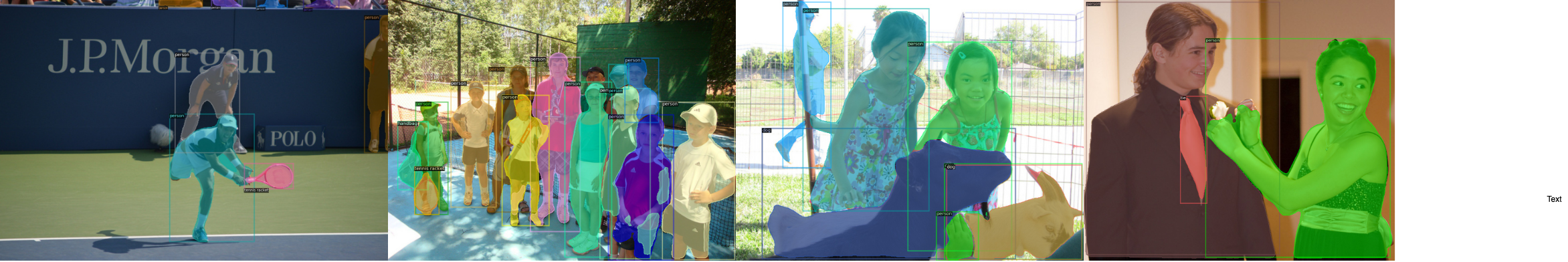

This repository implements YOLACT: Real-time Instance Segmentation on top of the FCOS: Fully Convolutional One-Stage Object Detection detector. The model with a ResNet-101 backbone achieves 35.2 mAP on the COCO val2017 set.

The code is based on detectron2. Please check Install.md for installation instructions.

Training follows the same procedure as detectron2.

Single GPU:

python train_net.py --config-file configs/Yolact/MS_R_101_3x.yaml

Multiple GPUs (for example, 8):

python train_net.py --num-gpus 8 --config-file configs/Yolact/MS_R_101_3x.yaml

Please adjust IMS_PER_BATCH in the config file according to the available GPU memory.
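When IMS_PER_BATCH changes, the learning rate and schedule usually need to change with it. The sketch below applies the commonly used linear scaling rule; this rule is an assumption here, not something this repository prescribes, and the reference values (16 images per batch, learning rate 0.01, 270000 iterations, decay steps at 210000 and 250000) are hypothetical placeholders. Read the real values from your config file. The keys are standard detectron2 solver options.

```python
def scale_solver(ref_batch, ref_lr, ref_max_iter, ref_steps, new_batch):
    """Rescale solver settings for a new total batch size (linear scaling rule)."""
    if ref_batch <= 0 or new_batch <= 0:
        raise ValueError("batch sizes must be positive")
    factor = new_batch / ref_batch
    return {
        "SOLVER.IMS_PER_BATCH": new_batch,
        # The learning rate scales in proportion to the batch size.
        "SOLVER.BASE_LR": ref_lr * factor,
        # Iteration counts scale inversely, so the number of epochs is unchanged.
        "SOLVER.MAX_ITER": round(ref_max_iter / factor),
        "SOLVER.STEPS": tuple(round(step / factor) for step in ref_steps),
    }


# Hypothetical reference schedule; replace with the values from your config.
settings = scale_solver(16, 0.01, 270000, (210000, 250000), new_batch=8)
for key, value in settings.items():
    print(key, value)
```

The printed values can be copied into the config file. Treat them as a starting point and check the training curves, since the linear rule is only a heuristic.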

Unlike the original YOLACT, this repository performs instance segmentation without ROI operations or any box cropping; it predicts the masks directly at full image size.
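To illustrate what a mask without cropping looks like, here is a minimal NumPy sketch of YOLACT-style mask assembly as described in the YOLACT paper: each instance mask is a sigmoid of a linear combination of shared prototype masks. The function name, array shapes, and threshold are assumptions chosen for illustration; the actual implementation in this repository may differ.

```python
import numpy as np


def assemble_masks(prototypes, coefficients, threshold=0.5):
    """Combine prototype masks into per-instance masks, with no box cropping.

    prototypes:   float array of shape (H, W, K), K prototype masks.
    coefficients: float array of shape (N, K), one row per detected instance.
    Returns a boolean array of shape (N, H, W).
    """
    # Linear combination of the prototypes for every instance.
    logits = np.einsum("hwk,nk->nhw", prototypes, coefficients)
    probs = 1.0 / (1.0 + np.exp(-logits))
    # The original YOLACT would zero out pixels outside the predicted box here;
    # this sketch keeps the mask over the whole prototype grid instead.
    return probs > threshold


# Toy inputs: 2 prototypes on a 4x6 grid, 3 instances.
rng = np.random.default_rng(0)
masks = assemble_masks(rng.normal(size=(4, 6, 2)), rng.normal(size=(3, 2)))
print(masks.shape)
```

Because nothing is cropped, each mask covers the full prototype grid and only needs resizing to the image resolution afterwards.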

First, replace the postprocessing.py in your detectron2 installation with the file in this repository, because the original file only handles masks obtained from ROIs. The path should look like /miniconda3/envs/py37/lib/python3.7/site-packages/detectron2/modeling/postprocessing.py
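The installed path varies between environments, so it can help to look it up rather than guess. The sketch below uses the standard library to find where a module is installed and to swap in a replacement while keeping a backup. Both helper names are hypothetical, and the location of this repository's postprocessing.py (assumed here to be the current directory) should be adjusted to your checkout.

```python
import importlib.util
import shutil
from pathlib import Path


def installed_module_path(module_name):
    """Return the source file of an installed module, e.g. a detectron2 submodule."""
    try:
        spec = importlib.util.find_spec(module_name)
    except ModuleNotFoundError:
        spec = None
    if spec is None or spec.origin is None:
        raise ModuleNotFoundError(f"cannot locate module {module_name!r}")
    return Path(spec.origin)


def replace_with_backup(src, dst):
    """Copy src over dst, saving the original as '<name>.bak' on the first call."""
    src, dst = Path(src), Path(dst)
    backup = dst.with_name(dst.name + ".bak")
    if not backup.exists():
        shutil.copy2(dst, backup)
    shutil.copy2(src, dst)
    return backup


# Example usage (requires detectron2; the source path is an assumption):
# target = installed_module_path("detectron2.modeling.postprocessing")
# replace_with_backup("postprocessing.py", target)
```

Keeping the backup makes it easy to restore the stock detectron2 behavior for other projects in the same environment.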

Single GPU:

python train_net.py --config-file configs/Yolact/MS_R_101_3x.yaml --eval-only MODEL.WEIGHTS /path/to/checkpoint_file

Multiple GPUs (for example, 8):

python train_net.py --num-gpus 8 --config-file configs/Yolact/MS_R_101_3x.yaml --eval-only MODEL.WEIGHTS /path/to/checkpoint_file

The trained model can be downloaded from https://drive.google.com/file/d/1TtkMFtZhacsWVaMQvNtYHhcVxY8T2o8A/view?usp=sharing

After training for 36 epochs on the COCO dataset with the ResNet-101 backbone, the mAP is 0.352 on the COCO val2017 dataset: