Official implementation of the paper in AAAI 2024:

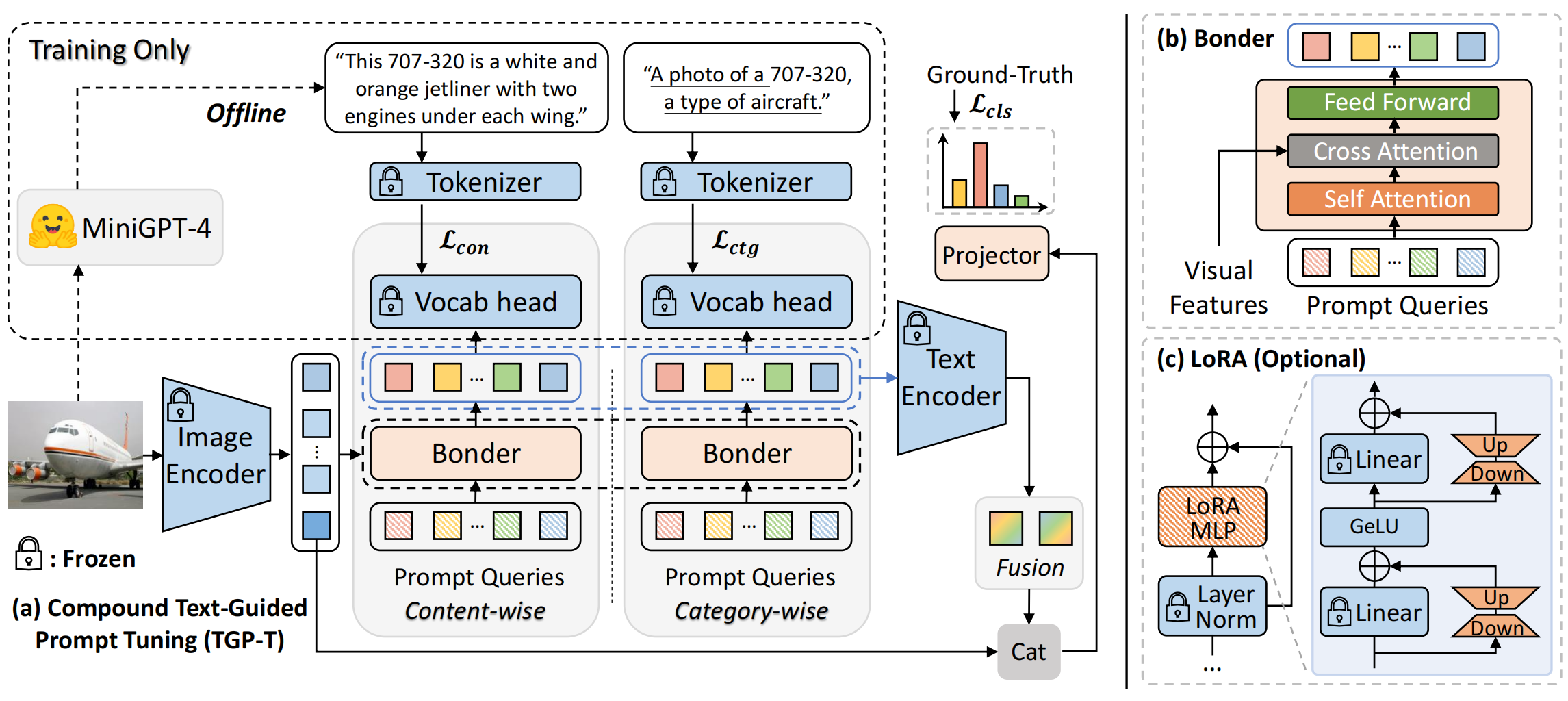

Compound Text-Guided Prompt Tuning via Image-Adaptive Cues

Hao Tan, Jun Li, Yizhuang Zhou, Jun Wan, Zhen Lei, Xiangyu Zhang

[PDF]

TGP-T is an efficient prompt tuning framework for adapting VLMs with significantly lower resource demand. We introduce compound text supervision, i.e., category-wise and content-wise text supervision, to guide the optimization of prompts. Through a Bonder structure, we align the generated prompts with visual features. As a result, we only need two prompt inputs to the text encoder to achieve state-of-the-art performance on 11 datasets for few-shot classification.

We recommend installing the environment through conda and pip.

conda create -n tgpt python=3.8

conda activate tgpt

# Install the dependencies

pip install -r requirements.txt

Follow these steps to prepare the datasets:

- Please follow data/DATASETS.md to download the 11 datasets along with ImageNetV2 and ImageNet-Sketch.

- Download all the content descriptions and preprocessed data files from Google Drive or Baidu Netdisk (password: ky6d).

- We provide the content descriptions generated by MiniGPT-4 for all 11 datasets. The statistics are shown in the following table.

- Each line in descriptions.txt contains three elements separated by \t, i.e., image name, content description, and category of the image.

- Put them in the same directory. For example, the directory structure should look like:

imagenet/

|-- descriptions.txt

|-- images/

| |-- train/ # contains 1,000 folders like n01440764, n01443537, etc.

| |-- val/

|-- split_fewshot/

| |-- shot_16-seed_1.pkl # shot_16-seed_2.pkl, shot_8-seed_1.pkl, etc.
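Given the three-field, tab-separated layout of descriptions.txt described above, a minimal Python sketch for reading the file might look like the following. The names `Description`, `parse_descriptions` and `load_descriptions` are hypothetical helpers for illustration and are not part of this repo; the sketch also assumes the file is UTF-8 encoded and that no field contains a tab character.

```python
from typing import Iterable, List, NamedTuple


class Description(NamedTuple):
    """One line of descriptions.txt (field names are illustrative)."""
    image_name: str
    description: str
    category: str


def parse_descriptions(lines: Iterable[str]) -> List[Description]:
    """Parse tab-separated lines into (image name, description, category) records."""
    records = []
    for line_no, raw in enumerate(lines, start=1):
        line = raw.rstrip("\r\n")
        if not line.strip():
            continue  # skip blank lines
        parts = line.split("\t")
        if len(parts) != 3:
            raise ValueError(
                f"line {line_no}: expected 3 tab-separated fields, got {len(parts)}"
            )
        records.append(Description(*(p.strip() for p in parts)))
    return records


def load_descriptions(path: str) -> List[Description]:
    """Read a descriptions.txt file (assumed to be UTF-8) from disk."""
    with open(path, encoding="utf-8") as f:
        return parse_descriptions(f)
```

For the layout above, this could be called as `load_descriptions("imagenet/descriptions.txt")`. Raising on malformed lines instead of skipping them makes a truncated download easier to notice.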

Before training, make sure you change the image paths in the split_fewshot/shot_{x}-seed_{x}.pkl.
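To make concrete what changing the image paths involves, here is a minimal sketch that swaps a root prefix in every string stored in a pickle file. It is not the logic of the repo's own conversion script. It assumes the split file holds only nested dicts, lists, plain tuples and strings, which may not match the real file layout, and the names `replace_prefix` and `convert_split_file` are hypothetical.

```python
import pickle
from typing import Any


def replace_prefix(obj: Any, old_root: str, new_root: str) -> Any:
    """Return a copy of obj where strings starting with old_root start with new_root."""
    if isinstance(obj, str):
        if obj.startswith(old_root):
            return new_root + obj[len(old_root):]
        return obj
    if isinstance(obj, dict):
        return {k: replace_prefix(v, old_root, new_root) for k, v in obj.items()}
    if isinstance(obj, list):
        return [replace_prefix(v, old_root, new_root) for v in obj]
    if isinstance(obj, tuple):
        return tuple(replace_prefix(v, old_root, new_root) for v in obj)
    return obj  # numbers, None and any other objects are left untouched


def convert_split_file(pkl_path: str, old_root: str, new_root: str) -> None:
    """Rewrite one split file in place (assumption: it is a plain pickle)."""
    with open(pkl_path, "rb") as f:
        data = pickle.load(f)
    with open(pkl_path, "wb") as f:
        pickle.dump(replace_prefix(data, old_root, new_root), f)
```

Because the file is overwritten in place, keeping a backup copy of the original split files before running anything like this is a sensible precaution.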

We provide tools/convert_path.py to get this done. To trigger conversion for all datasets, you can run this simple command:

sh convert_path.sh [/root/path/to/your/data]

# For example

# sh convert_path.sh ./recognition/data

The running configurations can be modified in configs/configs/dataset.yaml, including the number of shots, visual encoders, and hyperparameters.

For simplicity, we provide the hyperparameters that achieve the best overall performance with 16 shots on each dataset, which accord with the scores in our paper. If tuned separately for different shot numbers, the 1~16-shot performance can be further improved. You can edit MAX_ITER and LR for fine-grained tuning.

Training. To train on a specific dataset, all you need is train.py:

- Specify the dataset configs by --config-file

- Specify your path to the dataset by --root

- Specify the path where you want to save the results (including training logs and weights) by --output-dir

- You can turn on logging to wandb by enabling --use-wandb. To specify the wandb project name, you can use --wandb-proj [your-proj-name]
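When launching several runs from a script, the flags listed above can be assembled programmatically. The sketch below is one way to do that and uses only the flags named in this README. The helper `build_train_command` and the output directory `./output/imagenet` are hypothetical, and treating `--root` and `--output-dir` as optional is an assumption based on the example command in this README omitting them.

```python
import shlex
from typing import List, Optional


def build_train_command(
    config_file: str,
    root: Optional[str] = None,
    output_dir: Optional[str] = None,
    use_wandb: bool = False,
    wandb_proj: Optional[str] = None,
) -> List[str]:
    """Assemble an argument list for train.py from the documented flags."""
    cmd = ["python", "train.py", "--config-file", config_file]
    if root is not None:
        cmd += ["--root", root]
    if output_dir is not None:
        cmd += ["--output-dir", output_dir]
    if use_wandb:
        cmd.append("--use-wandb")
        # The project name is only passed when wandb logging is enabled.
        if wandb_proj is not None:
            cmd += ["--wandb-proj", wandb_proj]
    return cmd


if __name__ == "__main__":
    cmd = build_train_command(
        "configs/configs/imagenet.yaml",
        root="./recognition/data",
        output_dir="./output/imagenet",  # hypothetical output location
        use_wandb=True,
    )
    # Print a shell-safe version of the command for inspection.
    print(shlex.join(cmd))
```

The returned list can be passed directly to `subprocess.run(cmd, check=True)`; building a list instead of a single string avoids quoting problems with paths that contain spaces.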

For example, to run on ImageNet:

python train.py --config-file configs/configs/imagenet.yaml --use-wandb

Reproduction. We also provide an easy way, main.sh, to reproduce the results in our paper. This will trigger 16-shot training on all 11 datasets, including different seeds. To speed up training, you can run them in parallel on multiple GPUs.

sh main.sh

To perform distribution shift experiments, all you need is train_xdomain.py. We take ImageNet as the "Source" dataset, and ImageNet-V2 and ImageNet-Sketch as the "Target" datasets.

Running the following command will automatically load the weights you have trained on 16-shot ImageNet. If you haven't trained on ImageNet before, it will first run training and then evaluation.

python train_xdomain.py --use-wandb

To inject rich semantic knowledge into prompts, we take advantage of MiniGPT-4 to generate the content descriptions. Here are some related discussions:

- In order to reduce noise from MiniGPT-4, i.e., focus on the target object rather than background information, we adjust the input prompts for MiniGPT-4 and keep the model from generating overly long sentences. After testing, we choose "Describe the {classname} in this image in one sentence." as the final input prompt.

- MiniGPT-4 is introduced only during the training phase and does not cause information leakage in the test phase.

The enhancement that the general knowledge of large models brings to visual tasks is highly interesting. We also hope this work can inspire more resource-efficient approaches in this direction.
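The input prompt quoted in the discussion above contains a {classname} placeholder. A small sketch of filling it in per class is shown below. Only the template string comes from this README; the helper `build_prompt` is hypothetical, and replacing underscores with spaces is an assumption about how class names are stored, not something the repo is known to do.

```python
PROMPT_TEMPLATE = "Describe the {classname} in this image in one sentence."


def build_prompt(classname: str) -> str:
    """Fill the MiniGPT-4 input prompt for one class name."""
    # Assumption: dataset class names may use underscores, so show them as spaces.
    readable = classname.replace("_", " ").strip()
    return PROMPT_TEMPLATE.format(classname=readable)


if __name__ == "__main__":
    print(build_prompt("golden_retriever"))
```

Keeping the template in a single constant makes it easy to try other wordings while holding the rest of the description pipeline fixed.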

@inproceedings{tan2024compound,

title={Compound text-guided prompt tuning via image-adaptive cues},

author={Tan, Hao and Li, Jun and Zhou, Yizhuang and Wan, Jun and Lei, Zhen and Zhang, Xiangyu},

booktitle={Proceedings of the AAAI Conference on Artificial Intelligence},

volume={38},

number={5},

pages={5061--5069},

year={2024}

}

This repo benefits from CLIP, CoOp and Cross-Modal Adaptation. Thanks for their wonderful work.