Yixuan Zhu*, Wenliang Zhao*$\dagger$, Ao Li, Yansong Tang, Jie Zhou, Jiwen Lu$\ddagger$

*Equal contribution. $\dagger$Project leader. $\ddagger$Corresponding author.

This repository contains the official implementation of the paper "FlowIE: Efficient Image Enhancement via Rectified Flow" (CVPR 2024, oral presentation).

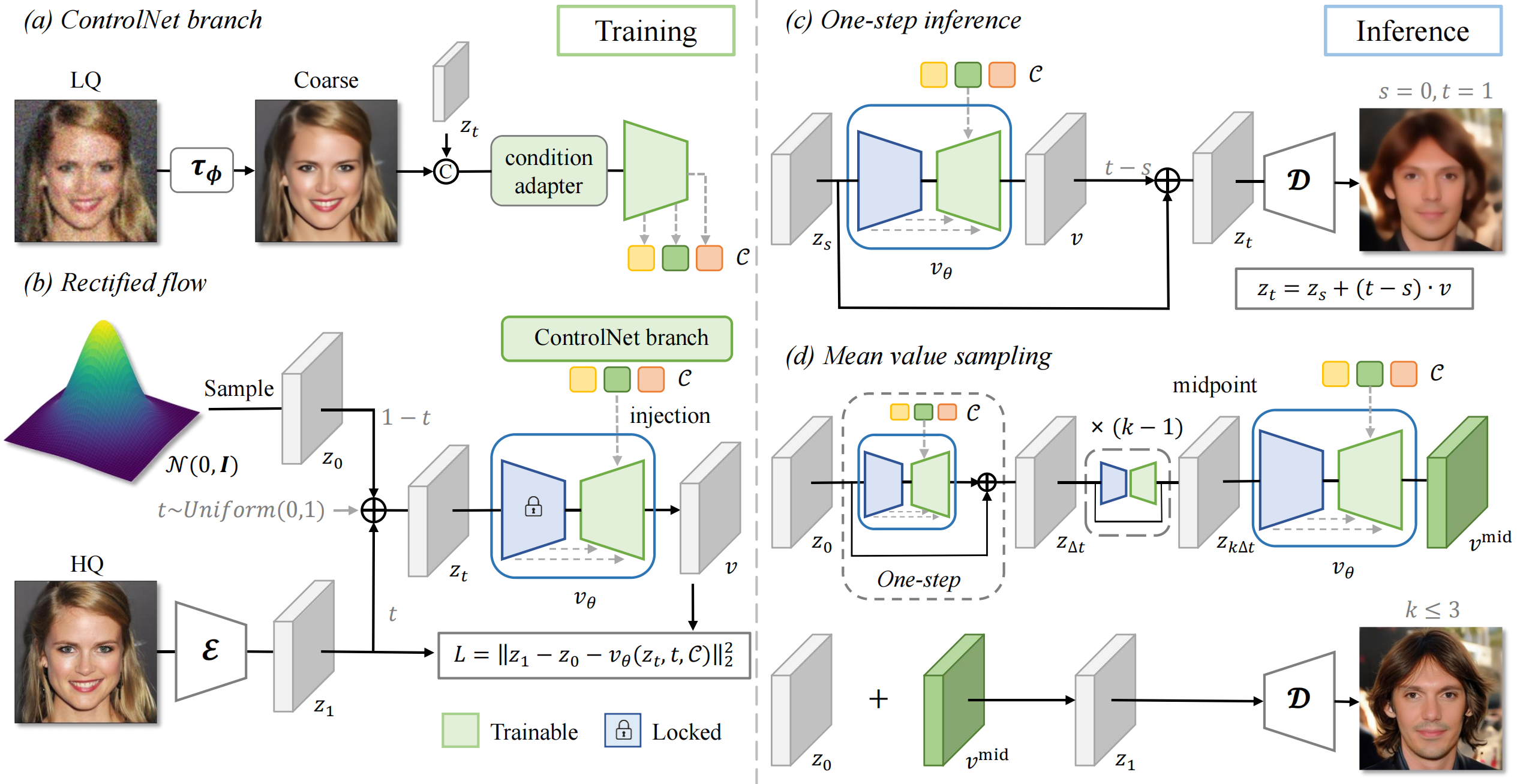

FlowIE is a simple yet highly effective Flow-based Image Enhancement framework that estimates straight-line paths from an elementary distribution to high-quality images.
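To make the "straight-line path" idea concrete, here is a minimal toy sketch in plain Python. It is not the FlowIE implementation: the real path estimator is a network, whereas `ideal_velocity` below is a hypothetical stand-in that returns the exact constant velocity of a straight path. The function names and the 4-dimensional toy vectors are assumptions made for illustration only.

```python
import random


def straight_path(x0, x1, t):
    """Point at time t on the straight line from x0 (t=0) to x1 (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def euler_sample(x0, velocity, num_steps):
    """Integrate dx/dt = velocity(x, t) from t=0 to t=1 with Euler steps."""
    x = list(x0)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        t = i * dt
        v = velocity(x, t)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


# Toy data: a random start point and a fixed "high-quality" target.
random.seed(0)
x0 = [random.gauss(0.0, 1.0) for _ in range(4)]
x1 = [0.25, -1.0, 0.5, 2.0]


def ideal_velocity(x, t):
    """Stand-in for a learned estimator: on a straight path the
    velocity is the constant x1 - x0, independent of x and t."""
    return [b - a for a, b in zip(x0, x1)]


one_step = euler_sample(x0, ideal_velocity, num_steps=1)
ten_steps = euler_sample(x0, ideal_velocity, num_steps=10)
midpoint = straight_path(x0, x1, 0.5)
```

Because the velocity along a straight path is constant, one Euler step and ten Euler steps reach the same endpoint in this toy setting. This is the intuition behind why straight paths allow sampling with few steps.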

- Release model and inference code.

- Release code for training dataloader.

We recommend using an Anaconda virtual environment. If you have Anaconda installed, run the following commands to create and activate the environment.

conda env create -f requirements.txt

conda activate FlowIE

We prepare the data in a similar way to GFPGAN and DiffBIR. The datasets for blind face restoration (BFR) and blind image super-resolution (BSR) are listed below:

For BFR evaluation, please refer to here for the BFR-test datasets, which include CelebA-Test, CelebChild-Test and LFW-Test. WIDER-Test can be found here. For BFR training, please download the FFHQ dataset.

For BSR, we utilize ImageNet for training. For evaluation, you can refer to BSRGAN for RealSRSet.

To prepare the training file lists, simply run the script once per dataset:

python ./scripts/make_file_list.py --img_folder /data/ILSVRC2012 --save_folder ./dataset/list/imagenet

python ./scripts/make_file_list.py --img_folder /data/FFHQ --save_folder ./dataset/list/ffhq

The file list looks like this:

/path/to/image_1.png

/path/to/image_2.png

/path/to/image_3.png

...

Please download our pretrained checkpoints from this link and put them under ./weights. The file directory should be:

|-- weights

|--|-- FlowIE_bfr_v1.ckpt

|--|-- FlowIE_bsr_v1.ckpt

...
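Before running inference or training, a quick sanity check of the prepared files can catch path mistakes early. The sketch below is a hypothetical helper, not part of the repository. It assumes the file-list format shown above (one image path per line) and the two checkpoint names listed above. The list file path and the weights directory are whatever you used on your machine.

```python
from pathlib import Path

# Checkpoint names as listed in this README.
EXPECTED_CHECKPOINTS = ("FlowIE_bfr_v1.ckpt", "FlowIE_bsr_v1.ckpt")


def read_file_list(list_path):
    """Return the non-empty lines of a file list as Path objects."""
    with open(list_path, "r", encoding="utf-8") as f:
        return [Path(line.strip()) for line in f if line.strip()]


def missing_paths(paths):
    """Return the entries that do not point to an existing file."""
    return [p for p in paths if not p.is_file()]


def missing_checkpoints(weights_dir, names=EXPECTED_CHECKPOINTS):
    """Return the expected checkpoint names not found in weights_dir."""
    weights_dir = Path(weights_dir)
    return [n for n in names if not (weights_dir / n).is_file()]
```

For example, `missing_checkpoints("./weights")` returns an empty list once both checkpoints are in place, and `missing_paths(read_file_list(path_to_list))` reports any listed image that cannot be found.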

You can test FlowIE with the following commands:

- Evaluation for BFR

python inference_bfr.py --ckpt ./weights/FlowIE_bfr_v1.ckpt --has_aligned --input /data/celeba_512_validation_lq/ --output ./outputs/bfr_exp

- Evaluation for BSR

python inference_bsr.py --ckpt ./weights/FlowIE_bsr_v1.ckpt --has_aligned --input /data/testdata/ --output ./outputs/bsr_exp

- Quick Test

For a quick test, we collect some test samples in ./assets. You can run the demo for BFR:

python inference_bfr.py --ckpt ./weights/FlowIE_bfr_v1.ckpt --input ./assets/faces --output ./outputs/demo

And for BSR:

python inference_bsr.py --ckpt ./weights/FlowIE_bsr_v1.ckpt --has_aligned --input /data/testdata/ --output ./outputs/bsr_exp --tiled

You can use --tiled for patch-based inference and --sr_scale to set the super-resolution scale, e.g. 1, 2 or 4. Set the CUDA_VISIBLE_DEVICES environment variable (e.g. CUDA_VISIBLE_DEVICES=1) to choose which GPU to use.
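As a rough illustration of what patch-based inference involves, the sketch below computes overlapping tile boxes that cover an image. It is a generic tiling scheme written for this README, not the code behind --tiled. The tile size of 512 and stride of 256 are hypothetical defaults, and blending the overlapping outputs back together is not shown.

```python
def tile_starts(length, tile, stride):
    """Start offsets so that tiles of size `tile` cover [0, length)."""
    if stride <= 0 or stride > tile:
        raise ValueError("stride must be in (0, tile] to avoid gaps")
    if length <= tile:
        return [0]
    starts = list(range(0, length - tile, stride))
    starts.append(length - tile)  # last tile sits flush with the border
    return starts


def tile_boxes(height, width, tile=512, stride=256):
    """Return (top, left, bottom, right) boxes covering the image."""
    return [
        (top, left, min(top + tile, height), min(left + tile, width))
        for top in tile_starts(height, tile, stride)
        for left in tile_starts(width, tile, stride)
    ]


boxes = tile_boxes(1024, 600)
```

Each box can be enhanced independently, which bounds peak memory by the tile size rather than by the full image size.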

Evaluation can be run on a single NVIDIA GeForce RTX 3090 GPU (24 GB VRAM). You can use more GPUs by specifying the GPU ids.
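If you prefer to launch runs from Python, the sketch below assembles an inference command from the flags mentioned in this README (--ckpt, --input, --output, --has_aligned, --tiled, --sr_scale) and an environment with CUDA_VISIBLE_DEVICES set. The helper names are hypothetical and not part of the repository. Note that CUDA_VISIBLE_DEVICES only controls which GPUs the process can see.

```python
import os
import shlex


def build_inference_command(script, ckpt, input_dir, output_dir,
                            has_aligned=False, tiled=False, sr_scale=None):
    """Assemble the argument list for one of the inference scripts."""
    cmd = ["python", script, "--ckpt", ckpt,
           "--input", input_dir, "--output", output_dir]
    if has_aligned:
        cmd.append("--has_aligned")
    if tiled:
        cmd.append("--tiled")
    if sr_scale is not None:
        cmd += ["--sr_scale", str(sr_scale)]
    return cmd


def gpu_env(gpu_ids, base_env=None):
    """Copy the environment and restrict the visible GPUs to gpu_ids."""
    env = dict(os.environ if base_env is None else base_env)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    return env


cmd = build_inference_command(
    "inference_bsr.py", "./weights/FlowIE_bsr_v1.ckpt",
    "/data/testdata/", "./outputs/bsr_exp", tiled=True, sr_scale=4,
)
env = gpu_env([0, 1])
print(shlex.join(cmd))
# To actually launch it: subprocess.run(cmd, env=env, check=True)
```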

The key component of FlowIE is a path estimator fine-tuned from Stable Diffusion v2.1 base. Please download the Stable Diffusion v2.1 base weights to ./weights. The other component is the initial module, which can be found in checkpoints.

Before training, you also need to configure training-related information in ./configs/train_cldm.yaml. Then run this command to start training:

python train.py --config ./configs/train_cldm.yaml

We would like to express our sincere thanks to the authors of DiffBIR for the clear code base and quick responses to our issues.

We also thank the authors of CodeFormer, Real-ESRGAN and LoRA, from which parts of our code are borrowed.

A new version of FlowIE based on the Diffusion Transformer (DiT) architecture will be released soon! Thanks to the latest DiT works, including PixART and Stable Diffusion 3.

Please cite us if our work is useful for your research.

@misc{zhu2024flowie,

title={FlowIE: Efficient Image Enhancement via Rectified Flow},

author={Yixuan Zhu and Wenliang Zhao and Ao Li and Yansong Tang and Jie Zhou and Jiwen Lu},

year={2024},

eprint={2406.00508},

archivePrefix={arXiv},

primaryClass={cs.CV}

}

This code is distributed under the MIT License.