ILLIXR (Illinois Extended Reality testbed, pronounced like "elixir") is the first fully open-source Extended Reality (XR) system and testbed. The modular, extensible, and OpenXR-compatible ILLIXR runtime integrates state-of-the-art XR components into a complete XR system. The testbed is part of the broader ILLIXR consortium, an industry-supported community effort to democratize XR systems research, development, and benchmarking.

You can find the complete ILLIXR system here.

ILLIXR also provides its components in standalone configurations to enable architects and system designers to research each component in isolation. The standalone components are packaged together in the v1-latest release of ILLIXR.

ILLIXR's modular and extensible runtime allows adding new components and swapping different implementations of a given component. ILLIXR currently contains the following components:

- Perception
  - Eye Tracking
    - RITNet **
  - Scene Reconstruction
    - ElasticFusion **
    - KinectFusion **
  - Simultaneous Localization and Mapping
    - OpenVINS **
    - Kimera-VIO **
  - Cameras and IMUs
- Visual
- Aural
  - Audio encoding **
  - Audio playback **

(** Source is hosted in an external repository under the ILLIXR project.)

We continue to add more components, both new components and new implementations of existing ones.
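The idea of adding components and swapping implementations can be pictured as a registry that maps each component slot to its interchangeable implementations, with a configuration choosing one implementation per slot. This is a hypothetical Python sketch, not ILLIXR's actual plugin API: `Component`, `Registry`, `run`, the slot names (`"slam"`, `"eye_tracking"`), and the stub classes named after OpenVINS, Kimera-VIO, and RITNet are all illustrative assumptions.

```python
from abc import ABC, abstractmethod
from typing import Callable, Dict, Type


class Component(ABC):
    """Hypothetical interface shared by every implementation of one component slot."""

    @abstractmethod
    def process(self, frame: Dict[str, object]) -> Dict[str, object]:
        """Consume upstream data and return it augmented with this component's output."""


class Registry:
    """Maps a slot (e.g. "slam") to the implementations that can fill it."""

    def __init__(self) -> None:
        self._impls: Dict[str, Dict[str, Type[Component]]] = {}

    def register(self, slot: str, name: str) -> Callable[[Type[Component]], Type[Component]]:
        # Decorator: record the decorated class as implementation `name` of `slot`.
        def decorator(cls: Type[Component]) -> Type[Component]:
            self._impls.setdefault(slot, {})[name] = cls
            return cls
        return decorator

    def build(self, config: Dict[str, str]) -> Dict[str, Component]:
        # Instantiate exactly one implementation per slot, as chosen by `config`.
        pipeline: Dict[str, Component] = {}
        for slot, name in config.items():
            impls = self._impls.get(slot, {})
            if name not in impls:
                raise ValueError(f"no implementation {name!r} registered for slot {slot!r}")
            pipeline[slot] = impls[name]()
        return pipeline


def run(pipeline: Dict[str, Component], frame: Dict[str, object]) -> Dict[str, object]:
    # Pass the frame through each component in configuration order.
    for component in pipeline.values():
        frame = component.process(frame)
    return frame


registry = Registry()


@registry.register("slam", "openvins")
class OpenVinsStub(Component):  # hypothetical stand-in, not the real OpenVINS
    def process(self, frame):
        return {**frame, "pose_source": "openvins"}


@registry.register("slam", "kimera_vio")
class KimeraVioStub(Component):  # hypothetical stand-in, not the real Kimera-VIO
    def process(self, frame):
        return {**frame, "pose_source": "kimera_vio"}


@registry.register("eye_tracking", "ritnet")
class RitNetStub(Component):  # hypothetical stand-in, not the real RITNet
    def process(self, frame):
        return {**frame, "gaze_source": "ritnet"}


if __name__ == "__main__":
    # Swapping SLAM implementations changes only the configuration, not the pipeline code.
    for slam in ("openvins", "kimera_vio"):
        print(run(registry.build({"slam": slam, "eye_tracking": "ritnet"}), {"t": 0}))
```

Keeping one fixed interface per slot is what lets a new implementation replace an existing one without changes to the rest of the pipeline; adding a component amounts to registering a class under a new slot.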

Many of the current components of ILLIXR were developed by domain experts and obtained from publicly available repositories. They were modified for one or more of the following reasons: fixing compilation, adding features, or removing extraneous code or dependencies. Each component not developed by us is available as a forked GitHub repository for proper attribution to its authors.

Our paper describes ILLIXR in detail, including its components, runtime, and telemetry support, and presents a comprehensive analysis of performance, power, and quality on desktop and embedded systems.

A talk presented at NVIDIA GTC'21 describing ILLIXR and announcing the ILLIXR consortium: Video. Slides.

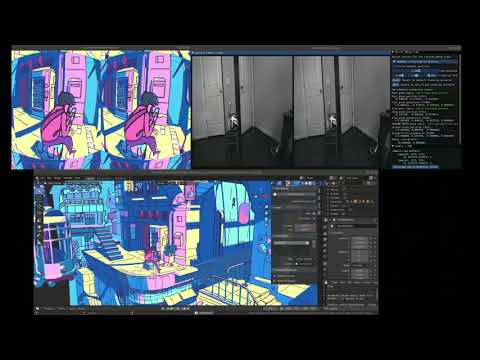

A demo of an OpenXR application running with ILLIXR.

The ILLIXR consortium is an industry-supported community effort to democratize XR systems research, development, and benchmarking. Visit our website for more information.

We request that you cite the following paper (a new version is coming soon) when you use ILLIXR in a publication. We would also appreciate it if you send us a citation once your work has been published.

@misc{HuzaifaDesai2020,
  title={Exploring Extended Reality with ILLIXR: A new Playground for Architecture Research},
  author={Muhammad Huzaifa and Rishi Desai and Samuel Grayson and Xutao Jiang and Ying Jing and Jae Lee and Fang Lu and Yihan Pang and Joseph Ravichandran and Finn Sinclair and Boyuan Tian and Hengzhi Yuan and Jeffrey Zhang and Sarita V. Adve},
  year={2021},
  eprint={2004.04643},
  primaryClass={cs.DC}
}

For more information, see our Getting Started page.

The ILLIXR project started in Sarita Adve’s research group, co-led by PhD candidate Muhammad Huzaifa, at the University of Illinois at Urbana-Champaign. Other major contributors include Rishi Desai, Samuel Grayson, Xutao Jiang, Ying Jing, Jae Lee, Fang Lu, Yihan Pang, Joseph Ravichandran, Giordano Salvador, Finn Sinclair, Boyuan Tian, Hengzhi Yuan, and Jeffrey Zhang.

ILLIXR came together after many consultations with researchers and practitioners in many domains: audio, graphics, optics, robotics, signal processing, and extended reality systems. We are deeply grateful for all of these discussions and specifically to the following: Wei Cu, Aleksandra Faust, Liang Gao, Matt Horsnell, Amit Jindal, Steve LaValle, Steve Lovegrove, Andrew Maimone, Vegard Øye, Martin Persson, Archontis Politis, Eric Shaffer, Paris Smaragdis, Sachin Talathi, and Chris Widdowson.

Our OpenXR implementation is derived from Monado. We are particularly thankful to Jakob Bornecrantz and Ryan Pavlik.

The development of ILLIXR was supported by the Applications Driving Architectures (ADA) Research Center (a JUMP Center co-sponsored by SRC and DARPA), the Center for Future Architectures Research (C-FAR, a STARnet research center), a Semiconductor Research Corporation program sponsored by MARCO and DARPA, and by a Google Faculty Research Award. The development of ILLIXR was also aided by generous hardware and software donations from ARM and NVIDIA. Facebook Reality Labs provided the OpenEDS Semantic Segmentation Dataset.

Wesley Darvin came up with the name for ILLIXR.

ILLIXR is available as open-source software under the permissive University of Illinois/NCSA Open Source License. As mentioned above, ILLIXR largely consists of components developed by domain experts and modified for inclusion in ILLIXR. However, ILLIXR does contain software developed solely by us, and the NCSA license applies only to this software. The external libraries and software included in ILLIXR each have their own licenses and must be used according to those licenses:

Note that ILLIXR's extensibility allows the source to be configured and compiled using only permissively licensed software.

Whether you are a computer architect, a compiler writer, or a systems person, work on XR-related algorithms or applications, or are simply interested in XR research, development, or products, we would love to hear from you and hope you will contribute! You can join the ILLIXR consortium, Discord, or mailing list, send us an email, or just send us a pull request!