A small collection of results from checking the speed of GGUF models on different combinations of GPUs and CPUs.

Inspired by a conversation in a Telegram chat.

https://huggingface.co/spaces/evilfreelancer/msnp-leaderboard

First, install Ollama on the server where you will run the tests.

Download models:

ollama pull llama3.1:8b-instruct-q4_0

ollama pull llama3.1:70b-instruct-q4_0

Please use models with the quantization in the name.
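The request to use models with the quantization in the name can be checked before running anything. The sketch below is a hypothetical helper, not part of the repository: `has_quantization` is an invented name, and the pattern assumes the quantization level is the last dash-separated part of the tag (for example `q4_0`, `q4_K_M`, or `fp16`), which may not cover every naming scheme.

```python
import re

# Assumed convention: the quantization level is the last dash-separated
# part of the tag, e.g. "q4_0", "q4_K_M", "q8_0" or "fp16".
_QUANT_SUFFIX = re.compile(r"(?:q\d+(?:_[a-z0-9]+)*|fp16|f16|bf16)", re.IGNORECASE)


def has_quantization(model: str) -> bool:
    """Return True if the model tag ends with a quantization suffix."""
    # Split "name:tag"; a bare name such as "llama3.1" has no tag at all.
    _, sep, tag = model.partition(":")
    if not sep:
        return False
    # Only the last dash-separated part of the tag is inspected.
    last_part = tag.rsplit("-", 1)[-1]
    return _QUANT_SUFFIX.fullmatch(last_part) is not None
```

With this helper, `llama3.1:8b-instruct-q4_0` passes, while a tag without a quantization suffix such as `llama3.1:8b` is rejected.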

Then create a Python virtual environment and activate it:

mkdir msnp-tests

cd msnp-tests

python3 -m venv venv

source venv/bin/activate

Then download the requirements.txt and test.py files:

wget https://raw.githubusercontent.com/EvilFreelancer/llm-msnp-tests/refs/heads/main/requirements.txt

wget https://raw.githubusercontent.com/EvilFreelancer/llm-msnp-tests/refs/heads/main/test.py

Install dependencies:

pip install -r requirements.txt

And run the test:

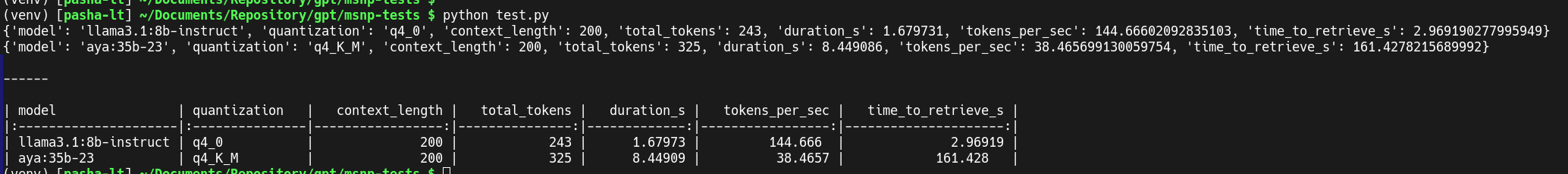

python3 test.py In result will be something like this:
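For readers curious how a speed figure like this can be obtained, here is a minimal sketch of measuring generation speed through Ollama's HTTP API. It is not the repository's `test.py`, whose internals are not shown here. The function names `tokens_per_second` and `measure` are hypothetical, and the URL assumes Ollama is listening on its default local port. The calculation relies on the `eval_count` (generated tokens) and `eval_duration` (nanoseconds) fields that `/api/generate` returns.

```python
import json
import urllib.request

# Assumption: Ollama runs locally on its default port.
OLLAMA_URL = "http://localhost:11434/api/generate"


def tokens_per_second(response: dict) -> float:
    """Compute generation speed from an Ollama /api/generate response."""
    # eval_count is the number of generated tokens,
    # eval_duration is the generation time in nanoseconds.
    return response["eval_count"] / (response["eval_duration"] / 1e9)


def measure(model: str, prompt: str) -> float:
    """Send one non-streaming request and return tokens per second."""
    payload = json.dumps(
        {"model": model, "prompt": prompt, "stream": False}
    ).encode()
    request = urllib.request.Request(
        OLLAMA_URL, data=payload, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request) as reply:
        return tokens_per_second(json.load(reply))
```

With Ollama running, calling `measure("llama3.1:8b-instruct-q4_0", "Why is the sky blue?")` would return the generation speed for a single prompt. A real benchmark would likely average several runs and prompts.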