SLIM: Explicit Slot-Intent Mapping with BERT for Joint Multi-Intent Detection and Slot Filling

PyTorch implementation of SLIM: Explicit Slot-Intent Mapping with BERT for Joint Multi-Intent Detection and Slot Filling

Update 24 May:

Thanks to Feifan Song, who pointed out that all the baseline methods were evaluated on the MixSNIPS and MixATIS datasets, while our method was evaluated on MixATIS_clean and MixSNIPS_clean. We have decided to re-evaluate our method on MixSNIPS and MixATIS and will update the results soon.

Insight

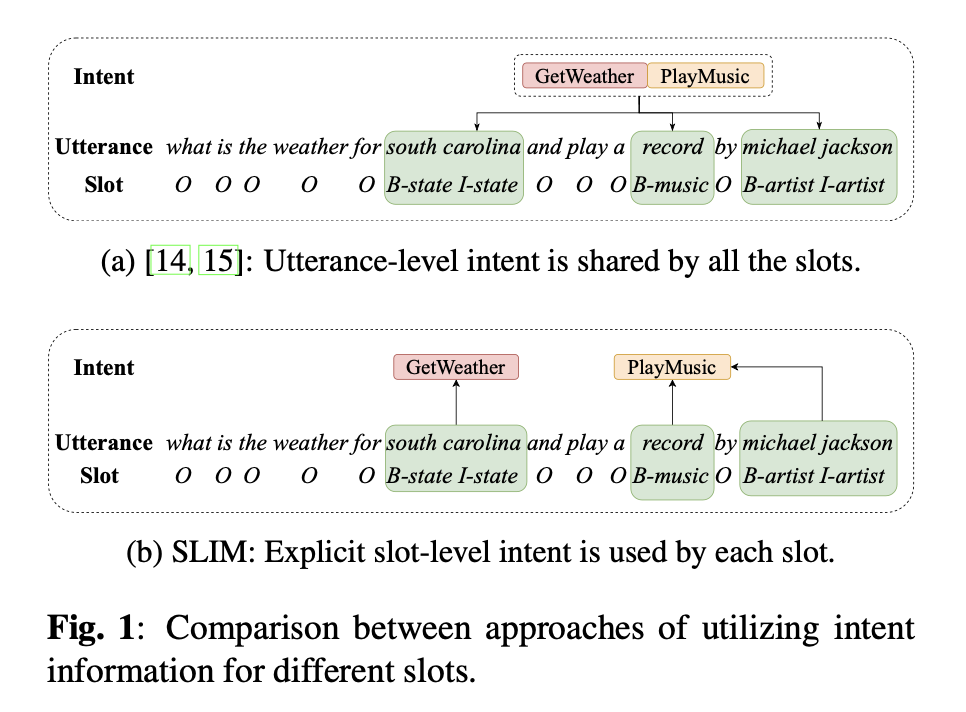

Previous multi-intent works predict intents and slots jointly, but they feed the same coarse-grained intent information to every slot to assist slot prediction. However, in the multi-intent setting, different slots are mapped to different intents. We therefore take advantage of the explicit slot-intent mapping to guide both intent detection and slot filling.
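To make the slot-intent mapping concrete, the sketch below groups BIO slot spans by the intent attached to their tokens. The example utterance, label names, and the per-token intent list are hypothetical illustrations, not data or code from this repository; the sketch only shows the kind of mapping SLIM exploits, not how the model learns it.

```python
from collections import defaultdict


def extract_spans(slot_tags):
    """Return (slot_type, start, end) spans from BIO tags; end is exclusive."""
    spans, start, label = [], None, None
    for i, tag in enumerate(slot_tags + ["O"]):  # sentinel "O" closes a trailing span
        # An I- tag continues the open span only if it carries the same slot type.
        continues = tag.startswith("I-") and label == tag[2:]
        if start is not None and not continues:
            spans.append((label, start, i))
            start, label = None, None
        # B- opens a span; a stray I- (no matching open span) is treated like B-.
        if tag.startswith("B-") or (tag.startswith("I-") and not continues):
            start, label = i, tag[2:]
    return spans


def group_slots_by_intent(tokens, slot_tags, token_intents):
    """Map each intent to the (slot_type, text) spans whose tokens carry it."""
    grouped = defaultdict(list)
    for label, start, end in extract_spans(slot_tags):
        intent = token_intents[start]  # slot-level intent of the span's first token
        grouped[intent].append((label, " ".join(tokens[start:end])))
    return dict(grouped)


# Hypothetical two-intent utterance in the style of MixSNIPS.
tokens = "play songs by adele and book a table for two at nobu".split()
slot_tags = ["O", "O", "O", "B-artist", "O",
             "O", "O", "O", "O", "B-party_size_number", "O", "B-restaurant_name"]
token_intents = ["PlayMusic"] * 4 + ["O"] + ["BookRestaurant"] * 7

print(group_slots_by_intent(tokens, slot_tags, token_intents))
```

Each intent ends up with only the slots that belong to it, which is exactly the information a shared, utterance-level intent signal cannot provide.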

Model Architecture

- Predict intents and slots at the same time from one BERT model (joint model)
- total_loss = intent_loss + slot_coef * slot_loss + slot_intent_coef * slot_intent_loss
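The loss line above can be read as the following minimal pure-Python sketch. Several parts are assumptions not confirmed by this README: the multi-intent head is trained with binary cross-entropy over multi-hot intent labels, the slot and slot-level intent heads use token-level cross-entropy that skips padded positions (ignore_index=-100, mirroring the default of PyTorch's CrossEntropyLoss), and slot_intent_coef defaults to 1.0. The default slot_coef=2.0 matches --slot_loss_coef 2 in this README's training commands.

```python
import math


def bce_with_logits(logits, targets):
    """Mean binary cross-entropy over intent labels (multi-hot targets).

    Uses the numerically stable form max(z, 0) - z*y + log(1 + exp(-|z|)).
    """
    losses = [max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
              for z, y in zip(logits, targets)]
    return sum(losses) / len(losses)


def cross_entropy(logit_rows, gold_ids, ignore_index=-100):
    """Mean token-level cross-entropy, skipping positions labeled ignore_index."""
    losses = []
    for row, gold in zip(logit_rows, gold_ids):
        if gold == ignore_index:
            continue  # e.g. padding positions carry no label
        m = max(row)
        log_sum_exp = m + math.log(sum(math.exp(v - m) for v in row))
        losses.append(log_sum_exp - row[gold])
    if not losses:
        return 0.0  # no labeled positions in this batch
    return sum(losses) / len(losses)


def joint_loss(intent_loss, slot_loss, slot_intent_loss,
               slot_coef=2.0, slot_intent_coef=1.0):
    """total_loss = intent_loss + slot_coef * slot_loss + slot_intent_coef * slot_intent_loss"""
    return intent_loss + slot_coef * slot_loss + slot_intent_coef * slot_intent_loss


# Toy batch: 3 intent labels; 2 real tokens plus 1 padded position.
intent_loss = bce_with_logits([2.0, -1.0, 0.5], [1, 0, 1])
slot_loss = cross_entropy([[2.0, 0.1, -1.0], [0.2, 1.5, 0.3], [0.0, 0.0, 0.0]],
                          [0, 1, -100])
slot_intent_loss = cross_entropy([[1.0, -1.0], [-0.5, 0.5], [0.0, 0.0]],
                                 [0, 1, -100])
print(joint_loss(intent_loss, slot_loss, slot_intent_loss))
```

Weighting the three terms separately lets the slot and slot-intent objectives be emphasized without changing the intent head.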

Dependencies

Please refer to requirements.txt

Dataset

- The following table lists the train/dev/test splits of MixSNIPS and MixATIS, together with the number of intent labels and slot labels in the training set. Based on how MixSNIPS and MixATIS were constructed, we also label the slot-level intent.

| Dataset | Train | Dev | Test | Intent Labels | Slot Labels |
|---|---|---|---|---|---|
| MixATIS | 13,161 | 759 | 828 | 21 | 118 |
| MixSNIPS | 39,776 | 2,198 | 2,199 | 7 | 71 |
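Slot-level intent labels follow from how the mixed datasets are built. A minimal sketch, assuming each multi-intent utterance is a concatenation of single-intent utterances joined by a conjunction such as "and": every token inherits the intent of the utterance it came from. The function name, the example utterances, and the placeholder "O" intent given to the conjunction token are hypothetical choices for illustration.

```python
def mix_utterances(parts, conjunction="and", conj_intent="O"):
    """Concatenate single-intent utterances into one multi-intent example.

    parts: list of (tokens, slot_tags, intent) triples.
    Returns (tokens, slot_tags, token_intents, intents), where token_intents
    holds the slot-level intent of every token.
    """
    tokens, slot_tags, token_intents, intents = [], [], [], []
    for i, (toks, tags, intent) in enumerate(parts):
        if len(toks) != len(tags):
            raise ValueError("each token needs exactly one slot tag")
        if i > 0:
            # The conjunction carries no slot; its intent label is a placeholder.
            tokens.append(conjunction)
            slot_tags.append("O")
            token_intents.append(conj_intent)
        tokens.extend(toks)
        slot_tags.extend(tags)
        token_intents.extend([intent] * len(toks))  # every token inherits its source intent
        if intent not in intents:
            intents.append(intent)
    return tokens, slot_tags, token_intents, intents


example = mix_utterances([
    ("play adele".split(), ["O", "B-artist"], "PlayMusic"),
    ("rate this book".split(), ["O", "O", "B-object_type"], "RateBook"),
])
for name, value in zip(("tokens", "slot_tags", "token_intents", "intents"), example):
    print(f"{name}: {value}")
```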

- We also use DSTC4. For the DSTC4 data, the contact person is Teo Poh Heng.

- The numbers of labels are based on the training set.

- Add UNK for labels (for intent and slot labels that appear only in the dev and test sets)
- Add PAD for slot labels
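A minimal sketch of the label handling described above, assuming label vocabularies are built from the training set only, special labels take the first ids, and any dev/test label unseen in training is mapped to UNK. The function names and the id ordering are hypothetical, not the repository's code.

```python
def build_label_vocab(train_labels, specials):
    """Assign ids to special labels first, then to labels seen in training."""
    vocab = {}
    for label in list(specials) + sorted(set(train_labels)):
        vocab.setdefault(label, len(vocab))  # keep the first id if a label repeats
    return vocab


def encode_labels(labels, vocab, unk="UNK"):
    """Encode labels as ids; labels only seen in dev/test fall back to UNK."""
    return [vocab.get(label, vocab[unk]) for label in labels]


# Intents get UNK; slots get PAD (for positions without a real slot label) and UNK.
intent_vocab = build_label_vocab(["PlayMusic", "RateBook", "PlayMusic"], specials=["UNK"])
slot_vocab = build_label_vocab(["O", "B-artist", "O"], specials=["PAD", "UNK"])

print(intent_vocab)
print(encode_labels(["O", "B-city"], slot_vocab))
```

Reserving fixed ids for the special labels keeps UNK and PAD stable regardless of which labels the training split happens to contain.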

Training & Evaluation

All experiments are conducted using a single GeForce GTX TITAN X GPU.

$ python3 main.py --task {task_name} \
                  --model_type {model_type} \
                  --model_dir {model_dir_name} \
                  --do_train --do_eval
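The dataset-specific commands in this section differ only in --task, --model_dir and --seed. If you script several runs, a small hypothetical helper like the one below can assemble the argument list. It uses only the flags and values shown in this README and does not validate them against main.py.

```python
import shlex

# Flags shared by the MixSNIPS and MixATIS commands in this README.
COMMON_FLAGS = {
    "model_type": "multibert",
    "multi_intent": 1,
    "intent_seq": 0,
    "tag_intent": 1,
    "BI_tag": 1,
    "intent_attn": 1,
    "cls_token_cat": 1,
    "num_mask": 6,
    "slot_loss_coef": 2,
    "patience": 0,
}

# Per-dataset values: only the model directory and seed differ.
DATASETS = {
    "mixsnips": {"model_dir": "mixsnips_model", "seed": 25},
    "mixatis": {"model_dir": "mixatis_model", "seed": 12},
}


def build_command(task, mode="--do_train"):
    """Return the argv list for main.py; mode is "--do_train" or "--do_eval"."""
    flags = {"task": task, **COMMON_FLAGS, **DATASETS[task]}
    argv = ["python3", "main.py"]
    for name, value in flags.items():
        argv += [f"--{name}", str(value)]
    argv.append(mode)
    return argv


print(shlex.join(build_command("mixsnips")))
```

To launch a run instead of printing it, pass the list to `subprocess.run(build_command("mixatis"), check=True)`; passing a list avoids shell quoting issues.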

# For MixSNIPS
$ python3 main.py --task mixsnips \
                  --model_type multibert \
                  --model_dir mixsnips_model \
                  --multi_intent 1 \
                  --intent_seq 0 \
                  --tag_intent 1 \
                  --BI_tag 1 \
                  --intent_attn 1 \
                  --cls_token_cat 1 \
                  --num_mask 6 \
                  --slot_loss_coef 2 \
                  --patience 0 \
                  --seed 25 \
                  --do_train

# For MixATIS
$ python3 main.py --task mixatis \
                  --model_type multibert \
                  --model_dir mixatis_model \
                  --multi_intent 1 \
                  --intent_seq 0 \
                  --tag_intent 1 \
                  --BI_tag 1 \
                  --intent_attn 1 \
                  --cls_token_cat 1 \
                  --num_mask 6 \
                  --slot_loss_coef 2 \
                  --patience 0 \
                  --seed 12 \
                  --do_train

Prediction

$ python3 main.py --task {task_name} \
                  --model_type {model_type} \
                  --model_dir {model_dir_name} \
                  --do_eval

# For MixSNIPS
$ python3 main.py --task mixsnips \
                  --model_type multibert \
                  --model_dir mixsnips_model \
                  --multi_intent 1 \
                  --intent_seq 0 \
                  --tag_intent 1 \
                  --BI_tag 1 \
                  --intent_attn 1 \
                  --cls_token_cat 1 \
                  --num_mask 6 \
                  --slot_loss_coef 2 \
                  --patience 0 \
                  --seed 25 \
                  --do_eval

# For MixATIS
$ python3 main.py --task mixatis \
                  --model_type multibert \
                  --model_dir mixatis_model \
                  --multi_intent 1 \
                  --intent_seq 0 \
                  --tag_intent 1 \
                  --BI_tag 1 \
                  --intent_attn 1 \
                  --cls_token_cat 1 \
                  --num_mask 6 \
                  --slot_loss_coef 2 \
                  --patience 0 \
                  --seed 12 \
                  --do_eval

Results

- We will provide the detailed hyperparameter settings later.
- Only tested with the uncased model.