Frames Across Multiple Sources (FAMuS)

This repository contains the data for the sub-tasks of FAMuS along with the data preprocessing scripts that can be used to run various models.

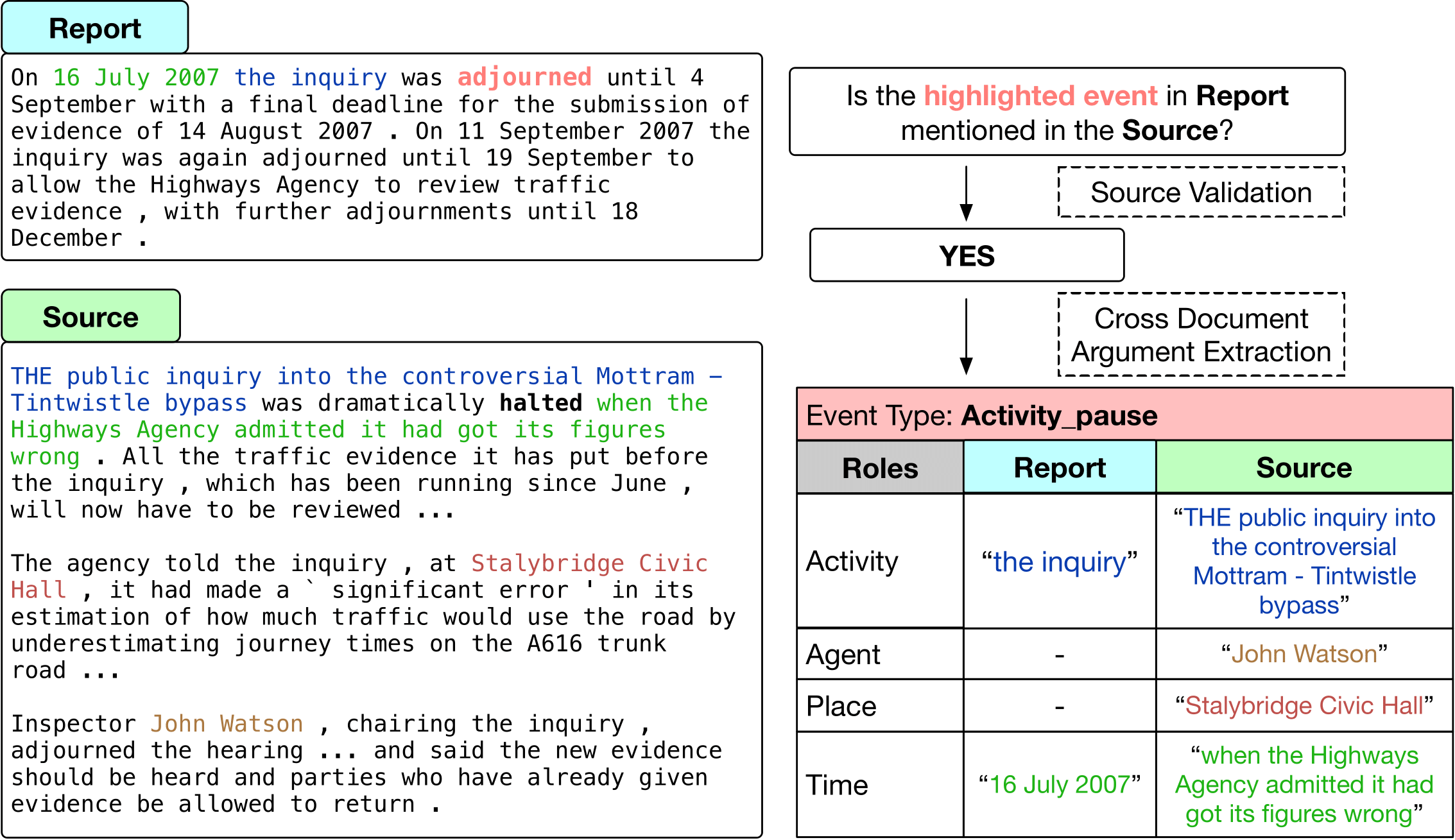

FAMuS introduces two sub-tasks aimed at Cross-Document Event Extraction, as shown in the figure above.

The tasks are:

- Source Validation (SV): Given a report text $R$, a target event trigger (mention) $e$ occurring in $R$, and a candidate source text $S$, determine whether $S$ contains a description of the same event as the one denoted by $e$. `data/source_validation/` contains the `train`/`dev`/`test` splits for this task and a description of all the fields in the data.

- Cross Document Argument Extraction (CDAE): Given a report text $R$, a target event trigger $e$ in $R$, and a correct source text $S$, extract all arguments of $e$ in both $R$ and $S$. We assume $e$ is assigned an event type from some underlying ontology of event types $E_1, \ldots, E_N$, where each $E_i$ has roles $R_1^{(i)}, \ldots, R_M^{(i)}$, and where $e$'s arguments must each be assigned one of these roles. `data/cross_doc_role_extraction/` contains the `train`/`dev`/`test` splits for this task and a description of all the fields in the data.
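To make the two task formulations concrete, here is a minimal Python sketch of what an instance of each task contains. The class and field names are hypothetical and do not mirror the released data files, whose actual fields are documented in `data/source_validation/` and `data/cross_doc_role_extraction/`. Spans are assumed to be character offsets. The `invalid_roles` helper illustrates the constraint that every argument of $e$ must carry one of the roles defined for its event type.

```python
from dataclasses import dataclass, field


@dataclass
class Span:
    """A character-offset span (end exclusive). Offsets are an assumption."""
    start: int
    end: int

    def text(self, doc: str) -> str:
        return doc[self.start:self.end]


@dataclass
class SVInstance:
    """Source Validation (hypothetical fields): does `source` describe the event of `trigger`?"""
    report: str    # report text R
    trigger: Span  # event trigger e, located in R
    source: str    # candidate source text S
    label: bool    # True if S describes the same event as e


@dataclass
class CDAEInstance:
    """Cross-Document Argument Extraction (hypothetical fields)."""
    report: str        # report text R
    trigger: Span      # event trigger e in R
    source: str        # a correct source text S
    event_type: str    # E_i from the ontology
    # role -> argument spans, kept separately for R and for S
    report_args: dict[str, list[Span]] = field(default_factory=dict)
    source_args: dict[str, list[Span]] = field(default_factory=dict)


def invalid_roles(inst: CDAEInstance, ontology: dict[str, list[str]]) -> set[str]:
    """Return roles used by `inst` that the ontology does not define for its event type."""
    allowed = set(ontology.get(inst.event_type, []))
    used = set(inst.report_args) | set(inst.source_args)
    return used - allowed
```

For example, with a toy ontology `{"Attack": ["Attacker", "Target"]}`, an instance that labels a source argument as `Weapon` would be flagged by `invalid_roles`.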

For more details on the tasks, data collection, and models, please read our paper.

We recommend using a fresh Python environment to train and evaluate our models on the dataset. We tested our codebase on Python 3.11.

You can create and activate a new conda environment with the following commands:

conda create -n famus python=3.11

conda activate famus

Before installing the requirements, we recommend installing PyTorch for your GPU/CPU configuration by following the official PyTorch installation instructions. Then, from the root directory of this repo, run:

pip install -r requirements.txt
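Since PyTorch is installed separately and the codebase was tested on Python 3.11, a quick sanity check can confirm the environment before running the scripts. The sketch below is not part of the repo. The 3.11 threshold reflects the tested version, not necessarily a hard requirement.

```python
import importlib.util
import sys


def check_environment(min_python: tuple[int, int] = (3, 11)) -> dict[str, object]:
    """Report the Python version and whether PyTorch (and CUDA) is usable."""
    report: dict[str, object] = {
        "python": ".".join(map(str, sys.version_info[:3])),
        "python_ok": sys.version_info[:2] >= min_python,
        "torch_installed": importlib.util.find_spec("torch") is not None,
        "cuda_available": False,
    }
    if report["torch_installed"]:
        import torch  # imported lazily so the check still runs without PyTorch
        report["torch_version"] = torch.__version__
        report["cuda_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print(f"{key}: {value}")
```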


Source Validation: To download the best-performing Longformer model from the paper, run the following command from the root of the repo:

bash models/source_validation/download_longformer_model.sh

(or download the model directly from this Google Drive link)

To reproduce the evaluation metrics in the paper, run the following:

bash src/job_runs/run_source_val_evaluation.sh
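Source Validation can be framed as text-pair classification over $(R, S)$ with the trigger $e$ highlighted in $R$. The sketch below shows one way to build such a pair. It assumes hypothetical marker strings `<t>` and `</t>` around the trigger and character-offset spans; it is not necessarily how the released Longformer model formats its inputs.

```python
def mark_trigger(report: str, start: int, end: int,
                 open_tag: str = "<t>", close_tag: str = "</t>") -> str:
    """Wrap the trigger span report[start:end] in (hypothetical) marker strings."""
    if not 0 <= start < end <= len(report):
        raise ValueError(f"invalid trigger span ({start}, {end})")
    return report[:start] + open_tag + report[start:end] + close_tag + report[end:]


def build_sv_pair(report: str, start: int, end: int, source: str) -> tuple[str, str]:
    """Return (first_segment, second_segment) for a text-pair classifier."""
    return mark_trigger(report, start, end), source
```

With Hugging Face Transformers, such a pair could be encoded as `tokenizer(first, second, truncation=True)`. If marker strings are used, they would typically also be registered with `tokenizer.add_tokens` and the model's embeddings resized with `model.resize_token_embeddings(len(tokenizer))`.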

Cross Document Argument Extraction:

To download the best-performing Longformer QA models for both the report and the source from the paper, run the following command from the root of the repo:

bash models/cdae/download_longformer_model.sh

(or download the models directly from this Google Drive link)

To evaluate the models on the dev or test split, run the following:

bash src/job_runs/run_cross_doc_roles_qa_evaluation.sh

You can find the output metrics in `src/metrics/`.
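Because CDAE is handled with extractive QA models for both the report and the source, one plausible framing is to ask one question per role of the event type, separately against $R$ and against $S$. The sketch below builds those queries. The question template and the `QAQuery` structure are hypothetical illustrations, not the prompts used by the released models.

```python
from dataclasses import dataclass


@dataclass
class QAQuery:
    role: str
    question: str
    context: str
    side: str  # "report" or "source": which QA model the query would go to


def build_role_queries(trigger: str, event_type: str, roles: list[str],
                       report: str, source: str) -> list[QAQuery]:
    """Build one extractive-QA query per (role, document) pair.

    The question template below is a hypothetical illustration.
    """
    queries = []
    for role in roles:
        question = f"What is the {role} in the {event_type} event '{trigger}'?"
        queries.append(QAQuery(role, question, report, "report"))
        queries.append(QAQuery(role, question, source, "source"))
    return queries
```

Each query could then be sent to the matching model, for example through a Hugging Face `question-answering` pipeline called as `qa(question=q.question, context=q.context)`.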

The CEAF_RME(a) metric, which uses normalized edit distance (see the paper for details), is implemented in the Iter-X repo. We provide an example notebook that shows how to compute the metric on a sample set of gold and predicted templates from a document.
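For intuition about what an edit-distance-based CEAF score rewards, here is a simplified, self-contained sketch for the fillers of a single role. It assumes string fillers, similarity defined as 1 minus the Levenshtein distance divided by the longer string's length, and an optimal one-to-one alignment found by brute force. This is not the Iter-X implementation: the exact normalization and how scores are aggregated across roles and templates for CEAF_RME(a) are defined in the paper and that repo.

```python
from itertools import permutations


def levenshtein(a: str, b: str) -> int:
    """Dynamic-programming edit distance (insert/delete/substitute, cost 1 each)."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            curr.append(min(prev[j] + 1,                # delete ca
                            curr[j - 1] + 1,            # insert cb
                            prev[j - 1] + (ca != cb)))  # substitute (free if equal)
        prev = curr
    return prev[-1]


def similarity(a: str, b: str) -> float:
    """1 - edit distance / length of the longer string (assumed normalization)."""
    longest = max(len(a), len(b))
    return 1.0 if longest == 0 else 1.0 - levenshtein(a, b) / longest


def ceaf_soft(gold: list[str], pred: list[str]) -> tuple[float, float, float]:
    """CEAF-style precision/recall/F1 for one role via the best one-to-one alignment.

    Brute force over alignments, so only suitable for small filler sets.
    Empty gold or predicted sets score 0 here; real implementations may differ.
    """
    if not gold or not pred:
        return 0.0, 0.0, 0.0
    small, large = (gold, pred) if len(gold) <= len(pred) else (pred, gold)
    best = max(
        sum(similarity(small[i], large[k]) for i, k in enumerate(perm))
        for perm in permutations(range(len(large)), len(small))
    )
    p, r = best / len(pred), best / len(gold)
    f1 = 0.0 if p + r == 0 else 2 * p * r / (p + r)
    return p, r, f1
```

Partial matches earn partial credit: `ceaf_soft(["kitten"], ["sitting"])` scores 4/7 for both precision and recall rather than 0.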