Comments on source code serve as critical documentation for enabling developers to understand the code's functionality and use it properly. However, it is challenging to ensure that comments accurately reflect the corresponding code, particularly as the software evolves over time. Although increasing interest has been taken in developing automated methods for identifying and fixing inconsistencies between code and comments, the existing methods have primarily relied on heuristic rules.

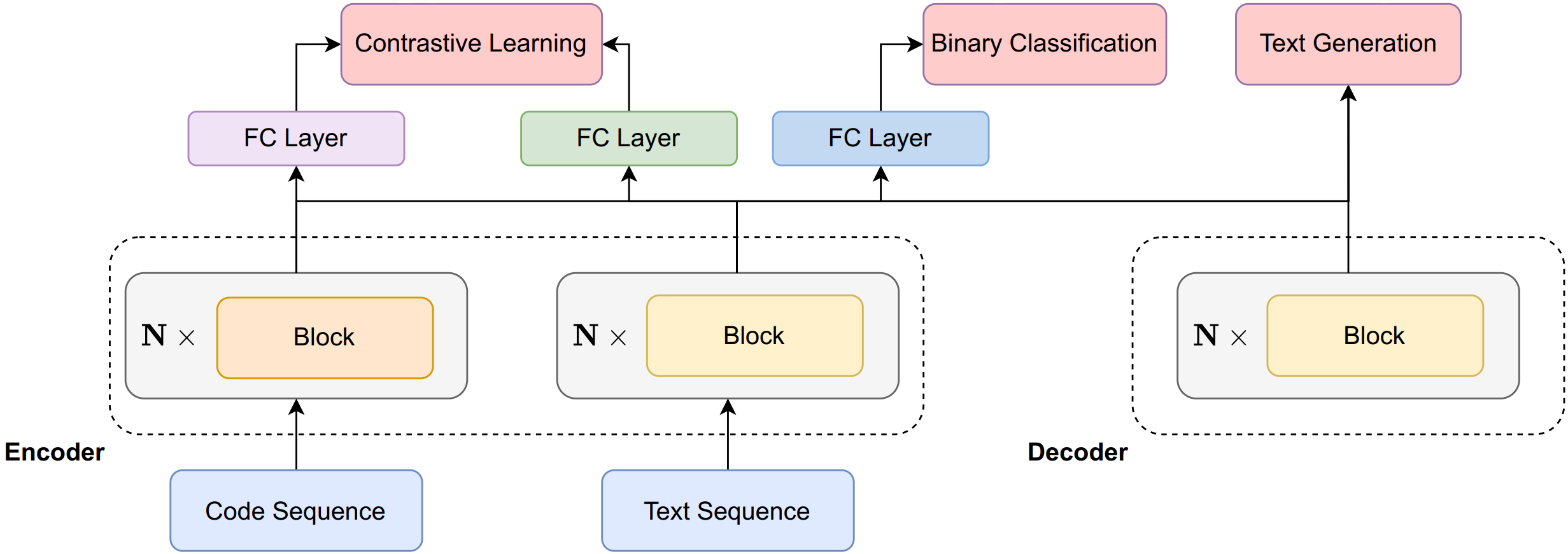

DocChecker is trained on top of an encoder-decoder model to learn from code-text pairs. It is jointly pre-trained with three objectives: code-text contrastive learning, binary classification, and text generation. DocChecker can detect noisy code-comment pairs and generate synthetic comments, which lets it identify comments that do not match their associated code snippets and correct them. Its effectiveness is demonstrated on the Just-In-Time dataset in comparison with other state-of-the-art methods.
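To make the idea of joint pre-training concrete, here is a minimal, generic sketch of how three such objectives could be summed into one loss. It is not DocChecker's implementation: the function names, the InfoNCE-style form of the contrastive term, the temperature value, and the equal weighting of the three terms are all assumptions made for illustration, and the inputs are toy numbers rather than model outputs.

```python
import math


def softmax_cross_entropy(logits, target_index):
    """Cross-entropy of a softmax over `logits` against one target index."""
    max_logit = max(logits)
    log_sum_exp = max_logit + math.log(sum(math.exp(x - max_logit) for x in logits))
    return log_sum_exp - logits[target_index]


def contrastive_loss(similarity, temperature=0.07):
    """InfoNCE-style loss: row i (a code snippet) should match column i (its text)."""
    n = len(similarity)
    code_to_text = sum(
        softmax_cross_entropy([s / temperature for s in similarity[i]], i)
        for i in range(n)
    )
    text_to_code = sum(
        softmax_cross_entropy([similarity[j][i] / temperature for j in range(n)], i)
        for i in range(n)
    )
    return (code_to_text + text_to_code) / (2 * n)


def binary_classification_loss(match_probability, is_match):
    """Binary cross-entropy for one code-text pair (match vs. mismatch)."""
    p = min(max(match_probability, 1e-12), 1 - 1e-12)
    return -math.log(p) if is_match else -math.log(1 - p)


def generation_loss(token_logits, target_tokens):
    """Mean token-level cross-entropy for generating the comment text."""
    losses = [softmax_cross_entropy(l, t) for l, t in zip(token_logits, target_tokens)]
    return sum(losses) / len(losses)


def joint_loss(similarity, match_probability, is_match, token_logits, target_tokens):
    # Equal weighting of the three terms is an assumption of this sketch.
    return (
        contrastive_loss(similarity)
        + binary_classification_loss(match_probability, is_match)
        + generation_loss(token_logits, target_tokens)
    )


# Toy inputs: a 2x2 code-text similarity matrix, one pair's match probability,
# and logits over a 3-token vocabulary for a 2-token target comment.
toy_similarity = [[0.9, 0.1], [0.2, 0.8]]
toy_token_logits = [[2.0, 0.5, 0.1], [0.3, 1.5, 0.2]]
print(joint_loss(toy_similarity, 0.9, True, toy_token_logits, [0, 1]))
```

The contrastive term rewards high similarity on the diagonal (matching pairs), the classification term scores a single pair as matching or not, and the generation term scores the predicted comment tokens.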

- (Optional) Creating conda environment

conda create -n docchecker python=3.8

conda activate docchecker

- Install from PyPI:

pip install docchecker

- Alternatively, build DocChecker from source:

git clone https://github.com/FSoft-AI4Code/DocChecker.git

cd DocChecker

pip install -r requirements.txt .

Getting started with DocChecker is simple and quick: use the inference() function.

from DocChecker.utils import inference

There are a few notable arguments to consider:

Parameters:

- input_file_path (str): the path of a file containing source code, if you want to check all the functions in it.
- raw_code (str): a string of source code, used if input_file_path is not given.
- language (str, required): the programming language of your raw_code. We support 10 popular programming languages: Java, JavaScript, Python, Ruby, Rust, Golang, C#, C++, C, and PHP.
- output_file_path (str): if output_file_path is given, the results are written to that file; otherwise, they are printed on the screen.

Returns:

- list of dictionaries, including:

  - function_name: the name of each function in the raw code
  - code: the code snippet
  - docstring: the docstring corresponding to the code snippet
  - predict: the prediction of DocChecker, either “Inconsistent!” or “Consistent!”, indicating whether the docstring is inconsistent or consistent with the code in the code-text pair
  - recommend_docstring: if a code-text pair is judged “Inconsistent!”, DocChecker replaces its docstring with a more comprehensive one; otherwise, it keeps the original version
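Because the return value is a plain list of dictionaries, it can be post-processed with ordinary Python. The sketch below relies only on the keys described above and runs on a hand-written sample instead of calling DocChecker. The helper names `split_by_prediction` and `suggested_docstring` are hypothetical and not part of the package. The suggestion key is written as recommend_docstring in the list above and as recommended_docstring in the example output later in this document, so the sketch accepts either spelling.

```python
def split_by_prediction(results):
    """Split DocChecker-style result dictionaries by their 'predict' value."""
    inconsistent = [r for r in results if r["predict"] == "Inconsistent!"]
    consistent = [r for r in results if r["predict"] == "Consistent!"]
    return inconsistent, consistent


def suggested_docstring(result):
    """Return the suggested docstring, falling back to the original one."""
    # Accept either spelling of the suggestion key (an assumption of this sketch).
    for key in ("recommended_docstring", "recommend_docstring"):
        if key in result:
            return result[key]
    return result["docstring"]


# Hand-written sample in the documented shape; this is not real DocChecker output.
sample_results = [
    {
        "function_name": "load",
        "code": "def load(path):\n    return open(path).read()",
        "docstring": "This is quite simple",
        "predict": "Inconsistent!",
        "recommended_docstring": "Read a file and return its contents.",
    },
    {
        "function_name": "add",
        "code": "def add(a, b):\n    return a + b",
        "docstring": "Add two numbers.",
        "predict": "Consistent!",
        "recommended_docstring": "Add two numbers.",
    },
]

inconsistent, consistent = split_by_prediction(sample_results)
for result in inconsistent:
    print(f"{result['function_name']}: {result['docstring']!r} -> {suggested_docstring(result)!r}")
```

Filtering on the "predict" field keeps the review focused on functions whose comments DocChecker flagged, while consistent entries can be left untouched.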

Here's an example showing how to load the DocChecker model and run inference for the inconsistency detection task:

from DocChecker.utils import inference

code = """
def inject_func_as_unbound_method(class_, func, method_name=None):
    # This is actually quite simple
    if method_name is None:
        method_name = get_funcname(func)
    setattr(class_, method_name, func)

def e(message, exit_code=None):
    # Print an error log message.
    print_log(message, YELLOW, BOLD)
    if exit_code is not None:
        sys.exit(exit_code)
"""

inference(raw_code=code, language='python')

>>[
    {
        "function_name": "inject_func_as_unbound_method",
        "code": "def inject_func_as_unbound_method(class_, func, method_name=None):\n \n if method_name is None:\n method_name = get_funcname(func)\n setattr(class_, method_name, func)",
        "docstring": " This is actually quite simple",
        "predict": "Inconsistent!",
        "recommended_docstring": "Inject a function as an unbound method."
    },
    {
        "function_name": "e",
        "code": "def e(message, exit_code=None):\n \n print_log(message, YELLOW, BOLD)\n if exit_code is not None:\n sys.exit(exit_code)",
        "docstring": "Print an error log message.",
        "predict": "Consistent!",
        "recommended_docstring": "Print an error log message."
    }
]

We also provide our source code so that you can re-pretrain DocChecker.

Set up the environment and install dependencies for pre-training.

cd ./DocChecker

pip install -r requirements.txt

The dataset we used comes from CodeXGLUE. It can be downloaded with the following commands:

wget https://github.com/microsoft/CodeXGLUE/raw/main/Code-Text/code-to-text/dataset.zip

unzip dataset.zip

rm dataset.zip

cd dataset

wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/python.zip

wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/java.zip

wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/ruby.zip

wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/javascript.zip

wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/go.zip

wget https://s3.amazonaws.com/code-search-net/CodeSearchNet/v2/php.zip

unzip python.zip

unzip java.zip

unzip ruby.zip

unzip javascript.zip

unzip go.zip

unzip php.zip

rm *.zip

rm *.pkl

python preprocess.py

rm -r */final

cd ..

To re-pretrain, run the following command:

python -m torch.distributed.run --nproc_per_node=2 run.py \

--do_train \

--do_eval \

--task pretrain \

--data_folder dataset/pretrain_dataset \

--num_train_epochs 10

To demonstrate the performance of our approach, we fine-tune DocChecker on the Just-In-Time task. The purpose of this task is to determine whether a comment is semantically out of sync with the corresponding code function.

Download data for the Just-In-Time task from here.

We also provide the fine-tuning settings for DocChecker; the corresponding results are reported in the paper.

# Training

python -m torch.distributed.run --nproc_per_node=2 run.py \

--do_train \

--do_eval \

--post_hoc \

--task just_in_time \

--load_model \

--data_folder dataset/just_in_time \

--num_train_epochs 30

# Testing

python -m torch.distributed.run --nproc_per_node=2 run.py \

--do_test \

--post_hoc \

--task just_in_time \

--data_folder dataset/just_in_time

We provide an interface for DocChecker at the link. The demonstration can be found on YouTube.

More details can be found in our paper. If you use this code or our package, please consider citing us:

@article{DocChecker,

title={Bootstrapping Code-Text Pretrained Language Model to Detect Inconsistency Between Code and Comment},

author={Anh T. V. Dau and Jin L.C. Guo and Nghi D. Q. Bui},

journal={EACL 2024 - Demonstration track},

pages={},

year={2024}

}

If you have any questions, comments, or suggestions, please do not hesitate to contact us.

- Website: fpt-aicenter

- Email: support.ailab@fpt.com