A TensorFlow implementation of VNect: Real-time 3D Human Pose Estimation with a Single RGB Camera.

For the Caffe model and weights required by this repository, please contact the author of the paper.

- Python 3.x

- tensorflow-gpu 1.x

- pycaffe

Fedora 29

pip3 install -r requirements.txt --user

sudo dnf install protobuf-devel leveldb-devel snappy-devel opencv-devel boost-devel hdf5-devel glog-devel gflags-devel lmdb-devel atlas-devel python-lxml boost-python3-devel

git clone https://github.com/BVLC/caffe.git

cd caffe

sudo make all

sudo make runtest

sudo make pycaffe

sudo make distribute

sudo cp -a .build_release/lib/. /usr/lib64/

sudo cp -a distribute/python/caffe/ /usr/lib/python3.7/site-packages/
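After the steps above, it can help to confirm that the Python interpreter actually sees the two heavy dependencies. The following is a minimal sketch, not part of the repository: the helper name `find_missing` is hypothetical, and it assumes pycaffe is importable as `caffe` and TensorFlow as `tensorflow`.

```python
import importlib.util

# Import names assumed from the requirements above ("caffe" is pycaffe's module name).
REQUIRED_MODULES = ("tensorflow", "caffe")


def find_missing(modules=REQUIRED_MODULES):
    """Return the top-level module names in `modules` that cannot be located."""
    missing = []
    for name in modules:
        # find_spec looks a module up on the import path without importing it.
        if importlib.util.find_spec(name) is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    missing = find_missing()
    print("missing modules:", ", ".join(missing) if missing else "none")
```

If `caffe` is reported missing, the copy into `site-packages` most likely targeted a different Python version than the one running the check.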

- Drop the pretrained Caffe model into `models/caffe_model`.

- Run `init_weights.py` to generate the TensorFlow model.

- `run_estimator.py` is a script for video streams.

- (Recommended) `run_estimator_ps.py` is a multiprocessing version of the script. If the 3D plotting function shuts down in `run_estimator.py`, try this one.

- `run_pic.py` is a script for single pictures.

- (Deprecated) `benchmark.py` is a class implementation containing all the elements needed to run the model.

- (Deprecated) `run_estimator_robot.py` additionally provides a ROS network and/or serial connection for robot control.

- (Deprecated) The training script `train.py` is not complete yet (I failed to reconstruct the model :( ), so do not use it. Pull requests are welcome.
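The preparation steps can be sketched as a small guard that refuses to run the conversion until the Caffe files are in place. This is an illustrative sketch only: `caffe_model_ready` and `convert_weights` are hypothetical helpers, the check only tests that `models/caffe_model` is non-empty (the expected file names are not stated here), and `init_weights.py` is invoked without any arguments.

```python
from pathlib import Path
import subprocess
import sys


def caffe_model_ready(repo_root="."):
    """True if models/caffe_model exists and contains at least one file."""
    model_dir = Path(repo_root) / "models" / "caffe_model"
    return model_dir.is_dir() and any(p.is_file() for p in model_dir.iterdir())


def convert_weights(repo_root="."):
    """Run init_weights.py from the repository root once the Caffe files are present."""
    if not caffe_model_ready(repo_root):
        raise FileNotFoundError(
            "put the pretrained Caffe model into models/caffe_model first"
        )
    # No command-line flags are passed; the script is run exactly as named above.
    subprocess.run([sys.executable, "init_weights.py"], cwd=repo_root, check=True)
```

Calling `convert_weights()` from the repository root then corresponds to the first two steps of the list above.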

[Tips] To run the scripts for video stream:

- click the left mouse button to initialize the bounding box, which is implemented by a simple HOG method;

- press any key to exit while running.

- In some programming environments, the 3D plotting function (from matplotlib) in `run_estimator.py` shuts down. Use `run_estimator_ps.py` instead.

- The input image is in BGR color format, and the pixel values are mapped into the range [-0.4, 0.6).

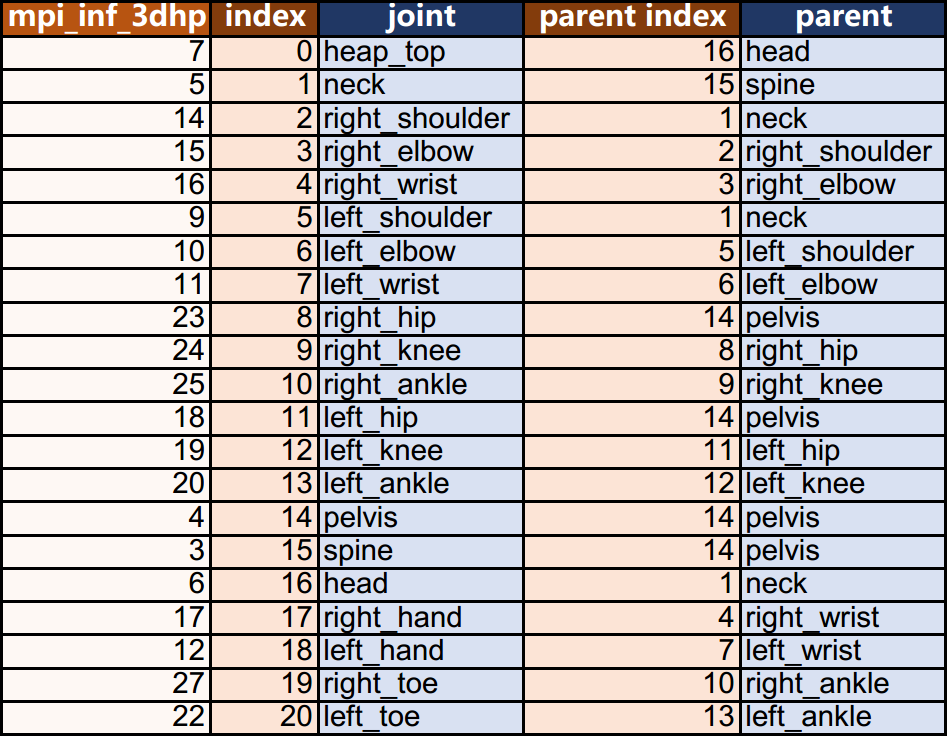

- The joint-parent map (detailed information in `materials/joint_index.xlsx`):

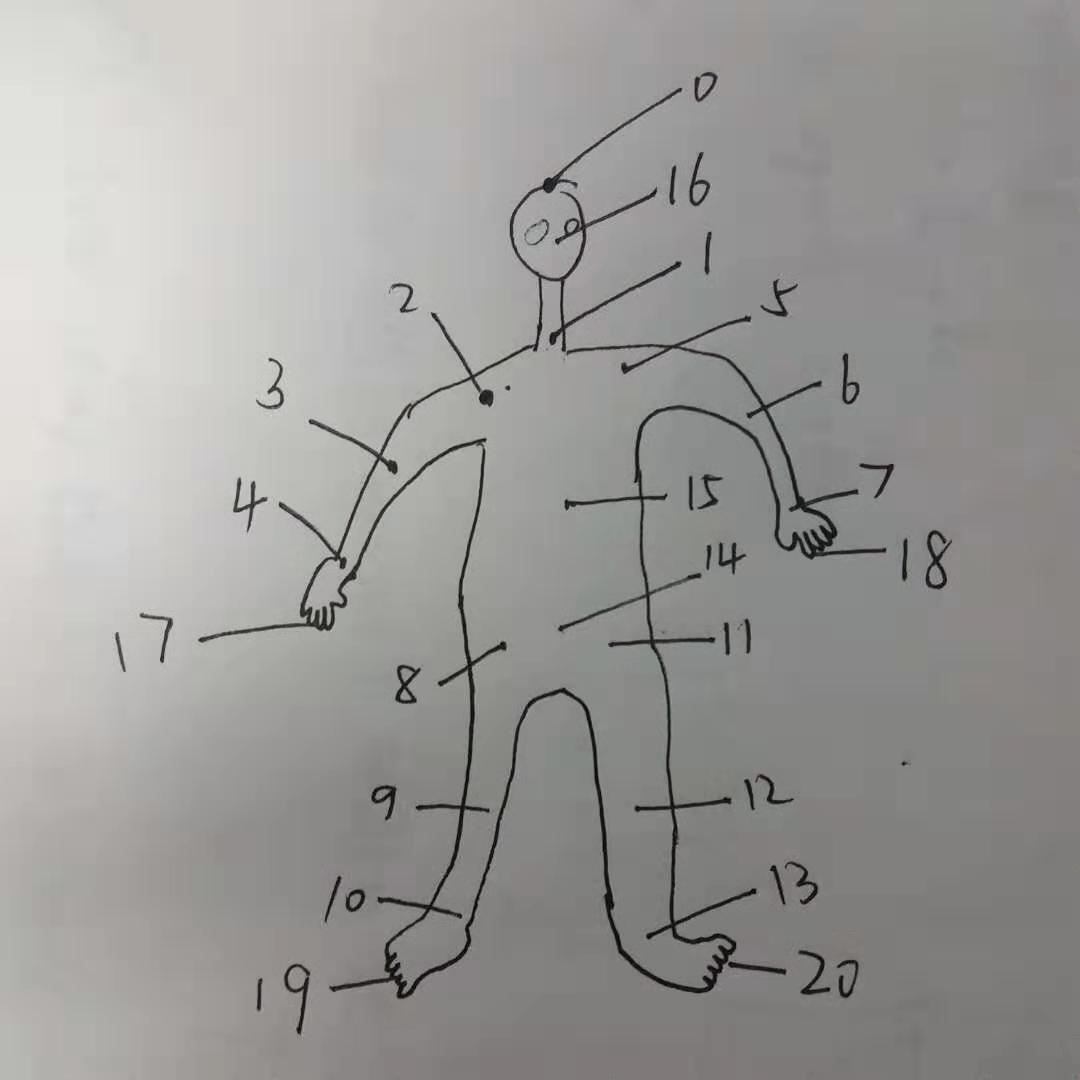

- Here I have a sketch to show the joint positions (don't laugh lol):

- Every input image is assumed to contain all 21 joints, so the model tends to produce wrong results when a joint is actually outside the picture.
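The input convention noted above (BGR channel order, pixel values mapped into [-0.4, 0.6)) can be sketched with NumPy as follows. The function names are hypothetical, and the divisor of 256 is an assumption chosen so that 8-bit pixels land inside the half-open interval; the repository's own scaling constant may differ.

```python
import numpy as np


def normalize_bgr(image_bgr, scale=256.0, offset=0.4):
    """Map 8-bit BGR pixels from [0, 255] to float32 values in [-0.4, 0.6).

    `scale` and `offset` are assumptions that reproduce the stated range.
    """
    image = np.asarray(image_bgr, dtype=np.float32)
    return image / scale - offset


def rgb_to_bgr(image_rgb):
    """Reverse the channel axis for images that were loaded in RGB order."""
    return np.asarray(image_rgb)[..., ::-1]


if __name__ == "__main__":
    # A dummy 2x2 frame standing in for a camera image (OpenCV frames are already BGR).
    frame = np.random.randint(0, 256, size=(2, 2, 3), dtype=np.uint8)
    net_input = normalize_bgr(frame)
    print(net_input.dtype, net_input.shape)
```

Images read with a library that returns RGB would need `rgb_to_bgr` first, whereas frames read with OpenCV can be passed to `normalize_bgr` directly.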

For the MPI-INF-3DHP dataset, refer to another repository of mine.

- Original MATLAB implementation provided by the paper's author.

- timctho/VNect-tensorflow

- EJShim/vnect_estimator