Zhi Cai<sup>1,2</sup>, Yingjie Gao<sup>1,2</sup>, Yaoyan Zheng<sup>1,2</sup>, Nan Zhou<sup>1,2</sup> and Di Huang<sup>1,2</sup>

- Aug-1-24: We open-sourced the code and models. 🔥🔥

- Jul-20-24: The Crowd-SAM paper is released on arXiv. 🔥🔥

- Jul-1-24: Crowd-SAM has been accepted to ECCV-24 🎉.

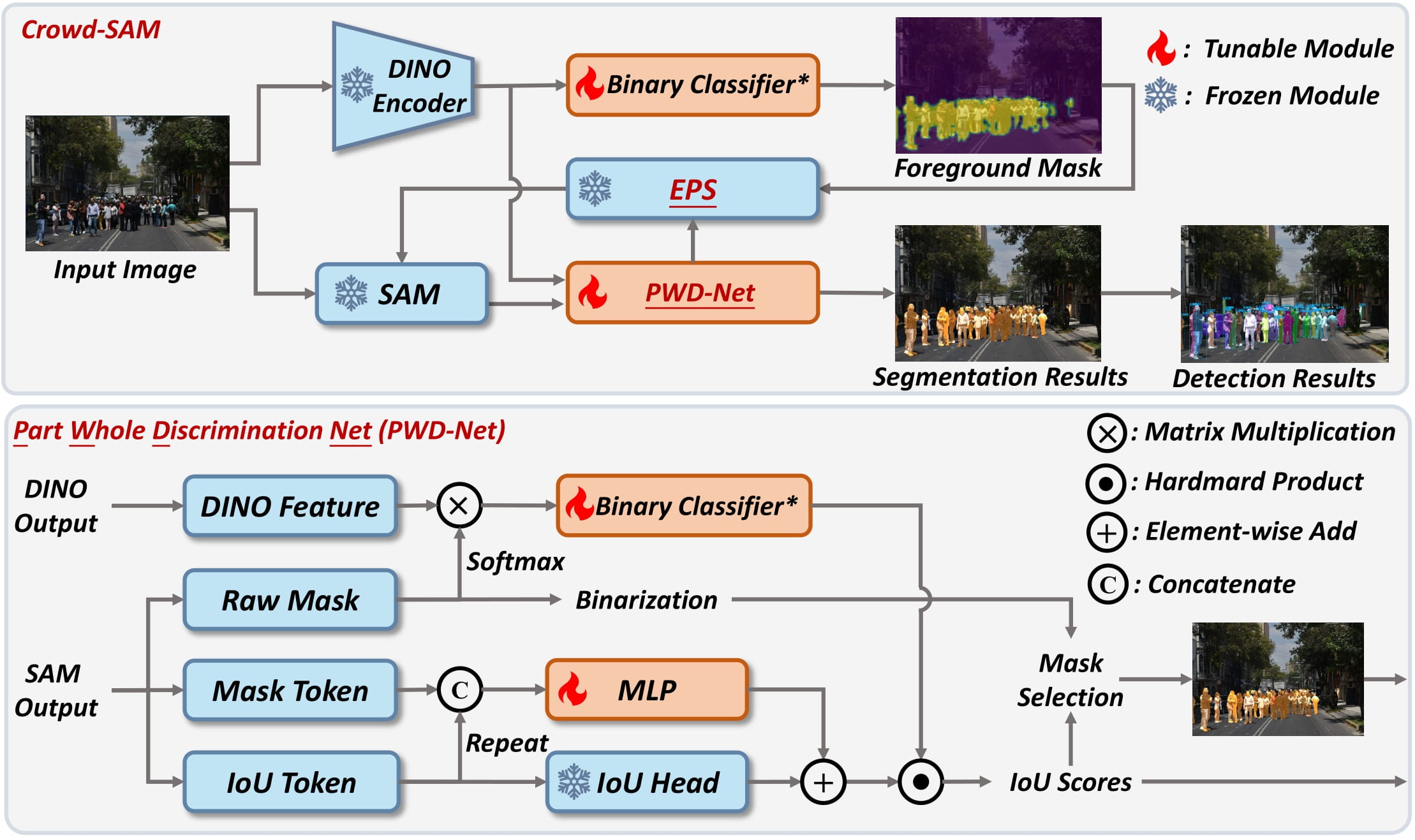

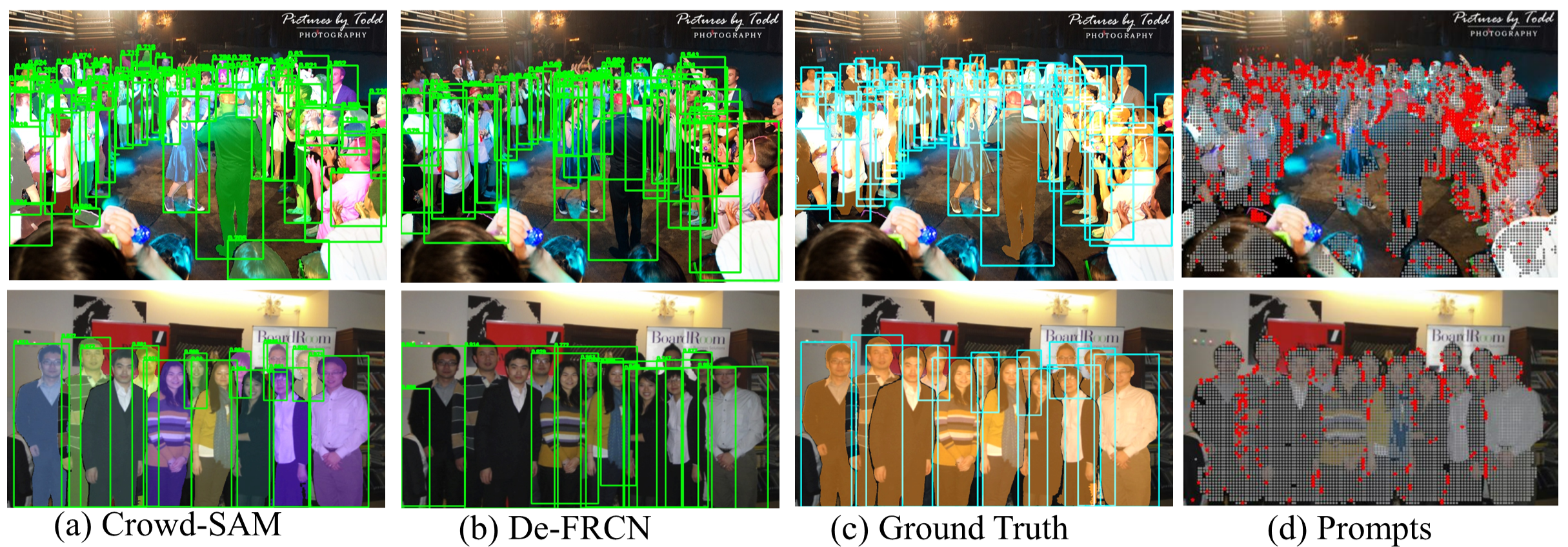

Crowd-SAM is a novel few-shot object detection and segmentation method designed to handle crowded scenes. Object detection generally requires extensive labeled data for training, and annotating it is especially time-consuming in crowded scenes. In this work, we combine SAM with a specially designed efficient prompt sampler and a mask selection network, PWD-Net, to achieve fast and accurate pedestrian detection. Crowd-SAM achieves 78.4% AP on the CrowdHuman benchmark with only 10 support images, which is comparable to supervised detectors.

We recommend using a virtual environment, e.g. Conda, for installation:

- Create and activate a virtual environment:

      conda create -n crowdsam python=3.8
      conda activate crowdsam

- Clone this repository and install the dependencies:

      git clone https://github.com/yourusername/crowd-sam.git crowdsam
      cd crowdsam
      pip install -r requirements.txt
      git submodule update --init --recursive
      pip install .

- Download the DINOv2 (ViT-L) checkpoint and the SAM (ViT-L) checkpoint.

  Place the downloaded weights in the `weights` directory. If it does not exist, create it with `mkdir weights`.

Download the CrowdHuman dataset from the official website. Only `CrowdHuman_val.zip` and `annotation_val.odgt` are needed. The training data is already provided in the `crowdhuman_train` directory; copy its files into `./dataset/crowdhuman` before training.

Extract the downloaded zip file into the `dataset` directory. The layout should look like this:

    crowdsam/
    ├── dataset/
    │   └── crowdhuman/
    │       ├── annotation_val.odgt
    │       └── Images/
    └── ...

Run the following script to convert the `.odgt` annotation file to a COCO-style JSON file:

    python tools/crowdhuman2coco.py -o annotation_val.odgt -v -s val_visible.json -d dataset/crowdhuman
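For readers unfamiliar with the two formats, the conversion can be sketched roughly as follows. This is a minimal illustration, not the code in `tools/crowdhuman2coco.py`. It assumes each `.odgt` line is a JSON record with an `ID` and a list of `gtboxes`, each carrying `[x, y, w, h]` boxes under keys such as `vbox` (visible) and `fbox` (full body). It also assumes that non-`person` tags or an `extra.ignore` flag mark ignore regions, which the sketch maps to COCO's `iscrowd`. Reading the `val_visible.json` output name as "visible boxes" is likewise an assumption, and the function name `odgt_to_coco` is hypothetical.

```python
import json


def odgt_to_coco(odgt_lines, box_key="vbox"):
    """Hypothetical sketch: convert CrowdHuman .odgt records to a COCO-style dict.

    box_key selects which CrowdHuman box to export ("vbox" = visible,
    "fbox" = full body); both are assumed to be [x, y, w, h].
    """
    images, annotations = [], []
    ann_id = 1
    for img_id, line in enumerate(odgt_lines, start=1):
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        # Assumption: images are stored as <ID>.jpg under Images/
        images.append({"id": img_id, "file_name": record["ID"] + ".jpg"})
        for gt in record.get("gtboxes", []):
            if box_key not in gt:
                continue  # entry has no box of the requested type
            x, y, w, h = gt[box_key]
            # Assumption: non-"person" tags and extra.ignore == 1 mark ignore regions
            ignore = gt.get("tag") != "person" or gt.get("extra", {}).get("ignore", 0) == 1
            annotations.append({
                "id": ann_id,
                "image_id": img_id,
                "category_id": 1,
                "bbox": [x, y, w, h],
                "area": w * h,
                "iscrowd": int(ignore),  # ignore regions exported as crowd boxes
            })
            ann_id += 1
    return {
        "images": images,
        "annotations": annotations,
        "categories": [{"id": 1, "name": "person"}],
    }
```

Because the function only iterates over lines, an open file handle for `dataset/crowdhuman/annotation_val.odgt` can be passed directly and the result written with `json.dump`. Image width and height are omitted because `.odgt` records do not store them.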

- To train the model, run the following command:

      python train.py --config_file ./configs/config.yaml

  Our model configs are written in YAML and stored in the `configs` directory. Make sure to update `config.yaml` with the appropriate paths and parameters as needed.

We provide pretrained adapter weights for CrowdHuman here.

- To evaluate the model, we recommend the following command for batch evaluation, replacing `num_gpus` with the number of GPUs to use:

      python tools/batch_eval.py --config_file ./configs/config.yaml -n num_gpus

  This runs the evaluation script on the test dataset and outputs the results.

- To visualize the outputs, use the following command:

      python tools/test.py --config_file ./configs/config.yaml --visualize

- To run the demo on your own images, use the following command, replacing `target_directory` with the directory containing your images:

      python tools/demo.py --config_file ./configs/config.yaml --input target_directory
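To make the reported AP number more concrete, here is a generic single-class average precision computation at a fixed IoU threshold. It is a minimal sketch, not the metric implementation behind `tools/batch_eval.py`, which may differ in matching rules, ignore-region handling, and interpolation. The function names, the `[x, y, w, h]` box convention, the 0.5 default threshold, and the greedy "best unmatched ground truth" matching are all assumptions made for illustration.

```python
def iou_xywh(a, b):
    """IoU of two boxes given as [x, y, w, h]."""
    ax2, ay2 = a[0] + a[2], a[1] + a[3]
    bx2, by2 = b[0] + b[2], b[1] + b[3]
    iw = max(0.0, min(ax2, bx2) - max(a[0], b[0]))
    ih = max(0.0, min(ay2, by2) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def average_precision(detections, ground_truths, iou_thr=0.5):
    """Hypothetical sketch of single-class AP.

    detections: list of (image_id, score, box); ground_truths: dict image_id -> list of boxes.
    """
    num_gt = sum(len(v) for v in ground_truths.values())
    if num_gt == 0:
        return 0.0
    matched = {k: [False] * len(v) for k, v in ground_truths.items()}
    tps = []
    # Process detections from highest to lowest confidence
    for img_id, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best_iou, best_j = 0.0, -1
        for j, gt in enumerate(ground_truths.get(img_id, [])):
            if matched[img_id][j]:
                continue  # each ground truth can be matched at most once
            iou = iou_xywh(box, gt)
            if iou > best_iou:
                best_iou, best_j = iou, j
        if best_j >= 0 and best_iou >= iou_thr:
            matched[img_id][best_j] = True
            tps.append(1)  # true positive
        else:
            tps.append(0)  # false positive
    # Cumulative precision and recall along the ranked list
    precisions, recalls, tp_cum = [], [], 0
    for i, tp in enumerate(tps, start=1):
        tp_cum += tp
        precisions.append(tp_cum / i)
        recalls.append(tp_cum / num_gt)
    # All-point interpolation: make precision non-increasing from the right
    for i in range(len(precisions) - 2, -1, -1):
        precisions[i] = max(precisions[i], precisions[i + 1])
    # Area under the interpolated precision-recall curve
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap
```

For example, with two ground-truth boxes in one image and three ranked detections (hit, miss, hit), precision is interpolated to 1.0 up to recall 0.5 and 2/3 up to recall 1.0, giving an AP of 5/6.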

Our project is built on segment-anything and dinov2.

You can cite our paper with the following BibTeX:

    @inproceedings{cai2024crowd,
      title={Crowd-SAM: SAM as a Smart Annotator for Object Detection in Crowded Scenes},
      author={Cai, Zhi and Gao, Yingjie and Zheng, Yaoyan and Zhou, Nan and Huang, Di},
      booktitle={Proceedings of the European Conference on Computer Vision (ECCV)},
      year={2024}
    }