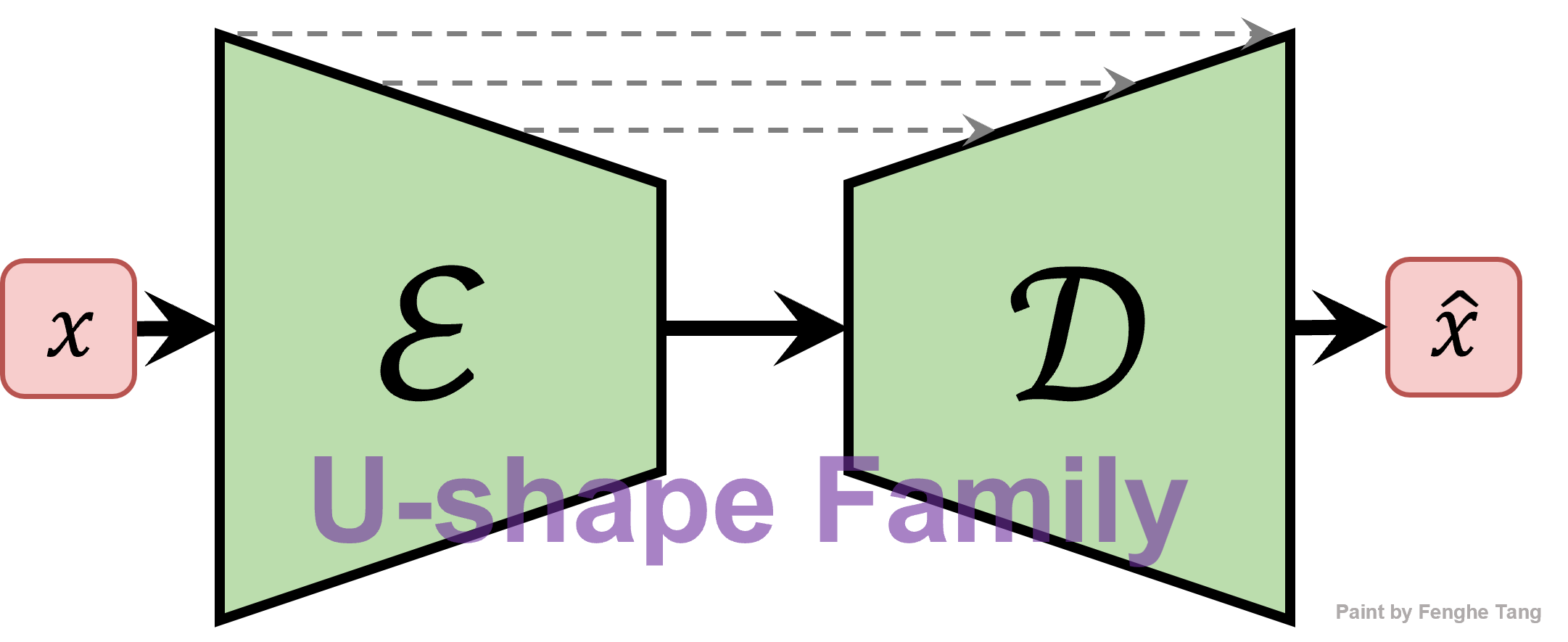

For easy evaluation and fair comparison of 2D medical image segmentation methods, we aim to collect and build a benchmark of U-shape architectures for 2D medical image segmentation tasks.

- CMUNeXt is now available in this repo! 😘

This repository collects and re-implements the following medical image segmentation networks based on the U-shape architecture:

| Network | Original code | Reference |
|---|---|---|
| U-Net | Caffe | MICCAI'15 |
| Attention U-Net | Pytorch | Arxiv'18 |
| U-Net++ | Pytorch | MICCAI'18 |
| U-Net 3+ | Pytorch | ICASSP'20 |
| TransUnet | Pytorch | Arxiv'21 |
| MedT | Pytorch | MICCAI'21 |
| UNeXt | Pytorch | MICCAI'22 |
| SwinUnet | Pytorch | ECCV'22 |
| CMU-Net | Pytorch | ISBI'23 |
| CMUNeXt | Pytorch | ISBI'24 |

Please organize the BUSI dataset or your own dataset in the following directory structure.

    ├── Medical-Image-Segmentation-Benchmarks
        ├── data
            ├── busi
                ├── images
                │   ├── benign (10).png
                │   ├── malignant (17).png
                │   ├── ...
                │
                └── masks
                    ├── 0
                    │   ├── benign (10).png
                    │   ├── malignant (17).png
                    │   ├── ...
            ├── your 2D dataset
                ├── images
                │   ├── 0a7e06.png
                │   ├── 0aab0a.png
                │   ├── 0b1761.png
                │   ├── ...
                │
                └── masks
                    ├── 0
                    │   ├── 0a7e06.png
                    │   ├── 0aab0a.png
                    │   ├── 0b1761.png
                    │   ├── ...
        ├── src
        ├── main.py
        ├── split.py
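Before training, it can help to confirm that every image has a mask with the same file name under `masks/0`, as the layout above implies. The following is a minimal sketch of such a check. The function name `find_unpaired_images` is hypothetical and not part of this repo, and the sketch assumes all files are `.png`.

```python
from pathlib import Path


def find_unpaired_images(dataset_dir):
    """Return image file names that have no same-named mask in masks/0.

    Assumes the layout <dataset_dir>/images/*.png and
    <dataset_dir>/masks/0/*.png (hypothetical helper, not from the repo).
    """
    dataset_dir = Path(dataset_dir)
    image_dir = dataset_dir / "images"
    mask_dir = dataset_dir / "masks" / "0"

    image_names = sorted(p.name for p in image_dir.glob("*.png"))
    mask_names = {p.name for p in mask_dir.glob("*.png")}

    # Keep only the images whose file name does not appear among the masks.
    return [name for name in image_names if name not in mask_names]
```

Calling `find_unpaired_images("./data/busi")` returns an empty list when every image is paired with a mask.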

- GPU: NVIDIA GeForce RTX 4090

- PyTorch: 1.13.0 (CUDA 11.7)

- cudatoolkit: 11.7.1

- scikit-learn: 1.0.2

You can first split your dataset:

    python split.py --dataset_root ./data --dataset_name busi

Then train and validate on your dataset:

    python main.py --model [CMUNeXt/CMUNet/TransUnet/...] --base_dir ./data/busi --train_file_dir busi_train.txt --val_file_dir busi_val.txt --base_lr 0.01 --epoch 300 --batch_size 8

We trained the U-shape networks on the BUSI dataset. BUSI contains 780 breast ultrasound images covering normal, benign, and malignant cases of breast cancer, each with a corresponding segmentation mask. We used only the benign and malignant images (647 images). We randomly split the dataset three times, each time using 70% for training and 30% for validation. In addition, we resize all images to 256×256 and apply random rotation and flipping for data augmentation.
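To illustrate the protocol, here is a minimal sketch of a seeded random 70/30 split repeated three times. It is not the repo's `split.py`. The function name `split_names`, the seeds, and the sample names are assumptions made for illustration only.

```python
import random


def split_names(names, train_ratio=0.7, seed=0):
    """Shuffle sample names with a fixed seed and cut them into train/val lists."""
    shuffled = sorted(names)  # sort first so the result does not depend on input order
    random.Random(seed).shuffle(shuffled)
    n_train = int(len(shuffled) * train_ratio)
    return shuffled[:n_train], shuffled[n_train:]


# 647 hypothetical sample names, matching the number of BUSI images used.
names = [f"sample_{i:03d}" for i in range(647)]

# Three independent splits, one per seed (the seeds are an assumption).
for seed in range(3):
    train, val = split_names(names, seed=seed)
    print(f"seed={seed}: {len(train)} train / {len(val)} val")
```

Fixing the seed makes each split reproducible, while changing it gives a different partition for the next run.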

| Method | Params (M) | FPS | GFLOPs | IoU | F1-value |
|---|---|---|---|---|---|
| U-Net | 34.52 | 139.32 | 65.52 | 68.61±2.86 | 76.97±3.10 |
| Attention U-Net | 34.87 | 129.92 | 66.63 | 68.55±3.22 | 76.88±3.50 |
| U-Net++ | 26.90 | 125.50 | 37.62 | 69.49±2.94 | 78.06±3.25 |
| U-Net3+ | 26.97 | 50.60 | 199.74 | 68.38±3.35 | 76.88±3.68 |
| TransUnet | 105.32 | 112.95 | 38.52 | 71.39±2.37 | 79.85±2.59 |
| MedT | 1.37 | 22.97 | 2.40 | 63.36±1.56 | 73.37±1.63 |
| SwinUnet | 27.14 | 392.21 | 5.91 | 54.11±2.29 | 65.46±1.91 |
| UNeXt | 1.47 | 650.48 | 0.58 | 65.04±2.71 | 74.16±2.84 |
| CMU-Net | 49.93 | 93.19 | 91.25 | 71.42±2.65 | 79.49±2.92 |
| CMUNeXt | 3.14 | 471.43 | 7.41 | 71.56±2.43 | 79.86±2.58 |
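For readers unfamiliar with the two accuracy columns, the sketch below shows the standard definitions of IoU and F1 (Dice) on binary masks, and one way to format scores from several splits as mean±std. It is not the evaluation code used for the table. The function names are hypothetical, and the use of the population standard deviation is an assumption.

```python
from statistics import mean, pstdev


def iou_and_f1(pred, target):
    """Compute IoU and F1 (Dice) for two binary masks given as flat 0/1 sequences."""
    tp = sum(1 for p, t in zip(pred, target) if p and t)      # predicted 1, truth 1
    fp = sum(1 for p, t in zip(pred, target) if p and not t)  # predicted 1, truth 0
    fn = sum(1 for p, t in zip(pred, target) if not p and t)  # predicted 0, truth 1
    union = tp + fp + fn
    if union == 0:
        # Both masks are empty: treated as a perfect match (a convention chosen here).
        return 1.0, 1.0
    return tp / union, 2 * tp / (2 * tp + fp + fn)


def summarize(scores):
    """Format per-split scores (fractions in [0, 1]) as 'mean±std' in percent."""
    percent = [100 * s for s in scores]
    return f"{mean(percent):.2f}±{pstdev(percent):.2f}"


# Tiny worked example: one true positive, one false positive, one false negative.
iou, f1 = iou_and_f1([1, 1, 0, 0], [1, 0, 1, 0])
print(iou, f1)
```

In this example IoU is 1/3 and F1 is 1/2, which shows why F1 is never lower than IoU for the same prediction.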

This code-base uses helper functions from CMU-Net and Image_Segmentation.

If you have any questions or suggestions about this project, please contact me through email: 543759045@qq.com