This repository contains code for the ACL 2023 paper, ContraCLM: Contrastive Learning for Causal Language Model.

Work done by: Nihal Jain*, Dejiao Zhang*, Wasi Uddin Ahmad*, Zijian Wang, Feng Nan, Xiaopeng Li, Ming Tan, Ramesh Nallapati, Baishakhi Ray, Parminder Bhatia, Xiaofei Ma, Bing Xiang. (* indicates equal contribution).

- [07-08-2023] Initial release of the code.

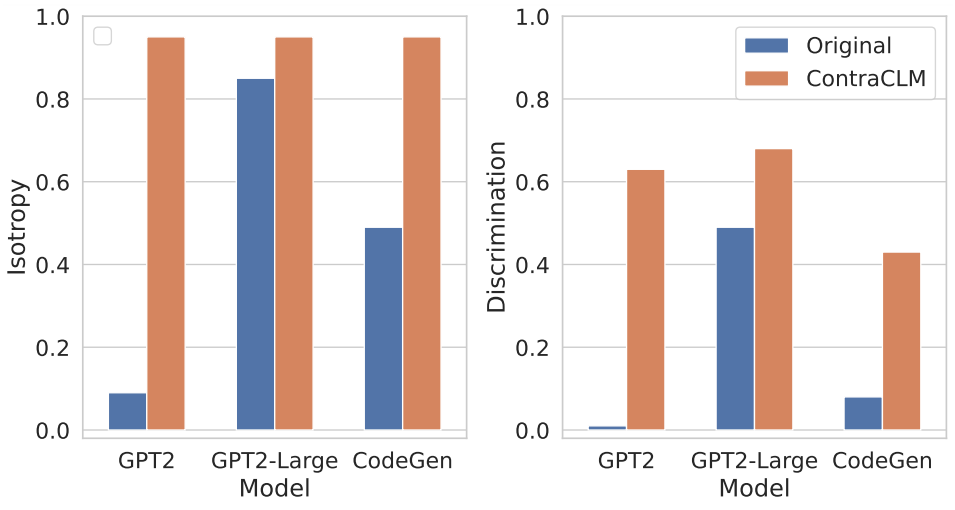

We present ContraCLM, a novel contrastive learning framework which operates at both the token-level and sequence-level. ContraCLM enhances the discrimination of representations from a decoder-only language model and bridges the gap with encoder-only models, making causal language models better suited for tasks beyond language generation. We encourage you to check out our paper for more details.
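To make the idea of a contrastive objective concrete, here is a minimal sketch of a generic InfoNCE-style loss in plain Python. It is an illustration under assumptions, not the loss implemented in this repository: the function names (`cosine`, `info_nce`), the temperature value, and the toy vectors are all hypothetical. The two "views" stand in for two representations of the same sequence, for example from forward passes with different dropout masks. Applying the same idea to per-token representations within a sequence would give a token-level variant; see the paper for the actual objectives.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(view_a: List[Sequence[float]],
             view_b: List[Sequence[float]],
             temperature: float = 0.05) -> float:
    """Mean InfoNCE loss where view_a[i] and view_b[i] form a positive pair.

    Every other row of view_b acts as an in-batch negative for view_a[i].
    """
    losses = []
    for i, anchor in enumerate(view_a):
        logits = [cosine(anchor, other) / temperature for other in view_b]
        # Numerically stable log-sum-exp over all candidates.
        max_logit = max(logits)
        log_denominator = max_logit + math.log(
            sum(math.exp(x - max_logit) for x in logits))
        losses.append(log_denominator - logits[i])
    return sum(losses) / len(losses)


# Toy batch: two "sequences", each embedded twice (hypothetical values).
view_a = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[0.9, 0.1], [0.1, 0.9]]  # positives close to their anchors
swapped = [[0.1, 0.9], [0.9, 0.1]]  # positives far from their anchors

# The loss is lower when positives are closer than negatives.
print(info_nce(view_a, aligned) < info_nce(view_a, swapped))  # prints True
```

The loss rewards representations in which each positive pair is more similar than any in-batch negative, which is the kind of discrimination the paragraph above refers to.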

The setup involves installing the necessary dependencies in an environment and placing the datasets in the requisite directory.

Run these commands to create a new conda environment and install the required packages for this repository.

```bash
# create a new conda environment with python >= 3.8
conda create -n contraclm python=3.8.12

# install dependencies within the environment
conda activate contraclm
pip install -r requirements.txt
```

For placing the datasets in the requisite directory, see here.

In this section, we show how to use this repository to pretrain (i) GPT2 on Natural Language (NL) data, and (ii) CodeGen-350M-Mono on Programming Language (PL) data.

- This section assumes that you have the train and validation data stored at `TRAIN_DIR` and `VALID_DIR` respectively, and are within an environment with all the above dependencies installed (see Setup).
- You can get an overview of all the flags associated with pretraining by running:

  ```bash
  python pl_trainer.py --help
  ```

To pretrain GPT2 on NL data, run:

```bash
bash runscripts/run_wikitext.sh
```

- To quickly test and debug the code, we suggest running with the MLE loss only, by setting `CL_Config=$(eval echo ${options[1]})` within the script.
- All other options involve a CL loss at either the token level or the sequence level.
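Since the run scripts expect data at `TRAIN_DIR` and `VALID_DIR`, a small pre-flight check can catch a wrong path before a long job starts. The helper below is a hypothetical sketch and is not part of this repository; `check_data_dirs` and the placeholder paths are assumptions made for illustration.

```python
from pathlib import Path
from typing import Dict, List


def check_data_dirs(dirs: Dict[str, str]) -> List[str]:
    """Return one readable message per missing or empty directory."""
    problems = []
    for name, location in dirs.items():
        path = Path(location)
        if not path.is_dir():
            problems.append(f"{name}: {location} is not a directory")
        elif not any(path.iterdir()):
            problems.append(f"{name}: {location} is empty")
    return problems


# Placeholder paths; substitute the values used in the run script.
issues = check_data_dirs({
    "TRAIN_DIR": "/path/to/train",
    "VALID_DIR": "/path/to/valid",
})
for issue in issues:
    print(issue)
```

An empty result means both directories exist and contain at least one entry; it does not validate the file format the trainer expects.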

To pretrain CodeGen-350M-Mono on PL data:

- Configure the variables at the top of `runscripts/run_code.sh`. There are many options, but only the dropout options are explained here (the others are self-explanatory):
  - `dropout_p`: The dropout probability used in `torch.nn.Dropout`.
  - `dropout_layers`: If > 0, activates dropout in the last `dropout_layers` layers with probability `dropout_p`.
  - `functional_dropout`: If specified, applies a functional dropout layer on top of the token representations output by the CodeGen model.
- Set the variable `CL` according to the desired model configuration. Make sure the paths to `TRAIN_DIR` and `VALID_DIR` are set as desired.
- Run the command:

  ```bash
  bash runscripts/run_code.sh
  ```
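The dropout options above can be pictured with a small plain-Python sketch. It assumes `dropout_p` is the usual drop probability and reads `dropout_layers` as "the last N layers of the model"; both helper names (`inverted_dropout`, `layers_with_dropout`) are hypothetical and do not come from this repository. The first function mirrors the training-mode behaviour of `torch.nn.Dropout`, which zeroes elements with probability `p` and scales the survivors by `1 / (1 - p)`.

```python
import random
from typing import List, Optional


def inverted_dropout(values: List[float], p: float,
                     rng: Optional[random.Random] = None) -> List[float]:
    """Zero each element with probability p; scale survivors by 1 / (1 - p)."""
    # p = 1 is excluded here only to avoid dividing by zero in this sketch.
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    rng = rng or random.Random()
    scale = 1.0 / (1.0 - p)
    return [0.0 if rng.random() < p else v * scale for v in values]


def layers_with_dropout(num_layers: int, dropout_layers: int) -> List[int]:
    """Indices of the last `dropout_layers` layers (empty if the value is <= 0)."""
    if dropout_layers <= 0:
        return []
    return list(range(max(0, num_layers - dropout_layers), num_layers))


# A toy "token representation" of eight ones, dropped with p = 0.1.
hidden = [1.0] * 8
print(inverted_dropout(hidden, p=0.1, rng=random.Random(0)))

# With 12 layers (an arbitrary example) and dropout_layers = 2.
print(layers_with_dropout(num_layers=12, dropout_layers=2))  # prints [10, 11]
```

Because each call draws a fresh random mask, two passes over the same input yield two slightly different representations.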

See the relevant task-specific directories here.

If you use our code in your research, please cite our work as:

```bibtex
@inproceedings{jain-etal-2023-contraclm,
    title = "{C}ontra{CLM}: Contrastive Learning For Causal Language Model",
    author = "Jain, Nihal and
      Zhang, Dejiao and
      Ahmad, Wasi Uddin and
      Wang, Zijian and
      Nan, Feng and
      Li, Xiaopeng and
      Tan, Ming and
      Nallapati, Ramesh and
      Ray, Baishakhi and
      Bhatia, Parminder and
      Ma, Xiaofei and
      Xiang, Bing",
    booktitle = "Proceedings of the 61st Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",
    month = jul,
    year = "2023",
    address = "Toronto, Canada",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2023.acl-long.355",
    pages = "6436--6459"
}
```

See CONTRIBUTING for more information.

This project is licensed under the Apache-2.0 License.