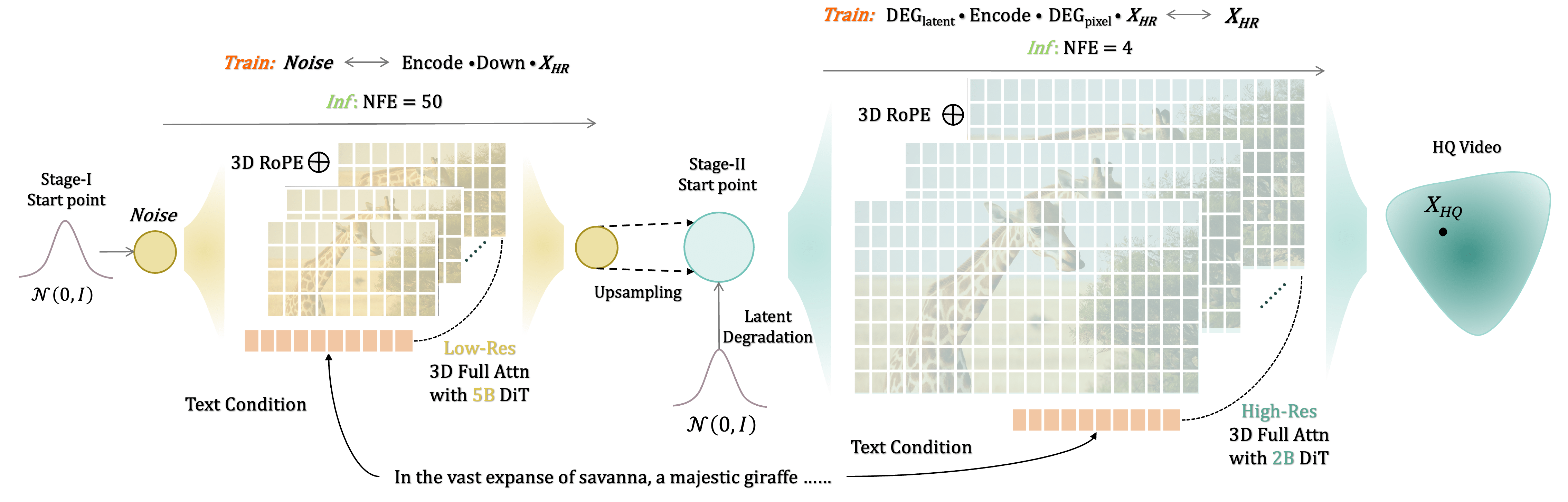

FlashVideo: Flowing Fidelity to Detail for Efficient High-Resolution Video Generation

Shilong Zhang, Wenbo Li, Shoufa Chen, Chongjian Ge, Peize Sun, Yida Zhang, Yi Jiang, Zehuan Yuan, Bingyue Peng, Ping Luo

HKU, CUHK, ByteDance

- [2025.02.10] 🔥 🔥 🔥 Inference code and model weights for both stages have been released.

In this repository, we provide:

- Stage-I weights for 270P video generation.
- Stage-II weights for enhancing 270P video to 1080P.
- Inference code for both stages.
- Training code and related augmentation (work in progress, PR#12):
  - Loss function
  - Dataset and augmentation
  - Configuration and training script
- Implementation with diffusers.
- Gradio demo.

This repository has been tested with PyTorch 2.4.0+cu121 and Python 3.11.11. You can install the required dependencies with the following command:

`pip install -r requirements.txt`

To get the 3D VAE (identical to the one in CogVideoX) along with the Stage-I and Stage-II weights, set them up as follows:

`cd FlashVideo`

`mkdir -p ./checkpoints`

`huggingface-cli download --local-dir ./checkpoints FoundationVision/FlashVideo`

The checkpoints should be organized as shown below:

├── 3d-vae.pt

├── stage1.pt

└── stage2.pt
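To confirm the download before running inference, a small check like the one below can help. It is a minimal sketch, assuming it is run from the `FlashVideo` directory; the helper `missing_checkpoints` is hypothetical and not part of the FlashVideo codebase. It only tests for the three file names in the layout above and does not validate file contents.

```python
from pathlib import Path
from typing import List

# File names taken from the checkpoint layout shown above.
EXPECTED_FILES = ("3d-vae.pt", "stage1.pt", "stage2.pt")


def missing_checkpoints(ckpt_dir: str = "./checkpoints") -> List[str]:
    """Return the expected checkpoint files that are not present in ckpt_dir.

    Hypothetical helper: it checks file existence only, not integrity.
    """
    root = Path(ckpt_dir)
    return [name for name in EXPECTED_FILES if not (root / name).is_file()]


if __name__ == "__main__":
    missing = missing_checkpoints()
    if missing:
        print("Missing checkpoint files:", ", ".join(missing))
    else:
        print("All checkpoint files found.")
```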

⚠️ IMPORTANT NOTICE ⚠️: Both Stage-I and Stage-II are trained only with long prompts. For best results, write comprehensive, detailed descriptions in your prompts, similar to the example provided in `example.txt`.
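Because short prompts fall outside the training distribution, one option is a quick pre-flight check before generation. This is a hypothetical helper, not part of FlashVideo: the repository does not specify a minimum prompt length, so `MIN_WORDS` is an arbitrary illustrative threshold that you would calibrate against prompts like the one in `example.txt`.

```python
# Hypothetical pre-flight check; FlashVideo itself does not enforce a prompt length.
# MIN_WORDS is an arbitrary illustrative threshold, not a documented value.
MIN_WORDS = 50


def word_count(prompt: str) -> int:
    """Count whitespace-separated words in the prompt."""
    return len(prompt.split())


def check_prompt(prompt: str, min_words: int = MIN_WORDS) -> bool:
    """Return True if the prompt looks detailed enough; print a warning otherwise."""
    n = word_count(prompt)
    if n < min_words:
        print(f"Warning: prompt has {n} words (< {min_words}); "
              "FlashVideo was trained on long, detailed prompts.")
        return False
    return True
```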

You can conveniently provide user prompts in our Jupyter notebook. The default configuration for spatial and temporal slices in the VAE decoder is tailored for an 80 GB GPU. For GPUs with less memory, consider increasing the number of spatial and temporal slices.
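The effect of the slicing settings can be sketched as follows. This illustrates temporal slicing in general under stated assumptions and is not FlashVideo's decoder code: `slice_bounds`, `largest_slice`, and `decode_in_temporal_slices` are hypothetical names, and `decode_fn` stands in for the real VAE decoder. More slices mean each chunk covers fewer latent frames, so the decoder's intermediate activations at any moment are smaller, at the cost of more decoder calls. Spatial slicing applies the same idea along height and width. Real VAE decoders typically also overlap or cache context between chunks to avoid seams, which this sketch omits.

```python
from typing import Callable, List, Sequence, Tuple


def slice_bounds(length: int, num_slices: int) -> List[Tuple[int, int]]:
    """Split range(length) into at most num_slices contiguous [start, end)
    chunks whose sizes differ by at most one."""
    if num_slices < 1:
        raise ValueError("num_slices must be >= 1")
    if length == 0:
        return []
    num_slices = min(num_slices, length)  # never produce empty chunks
    base, extra = divmod(length, num_slices)
    bounds, start = [], 0
    for i in range(num_slices):
        # The first `extra` chunks get one additional element.
        end = start + base + (1 if i < extra else 0)
        bounds.append((start, end))
        start = end
    return bounds


def largest_slice(length: int, num_slices: int) -> int:
    """Size of the biggest chunk, a rough proxy for peak decoder memory."""
    return max((end - start for start, end in slice_bounds(length, num_slices)), default=0)


def decode_in_temporal_slices(latent_frames: Sequence, num_slices: int,
                              decode_fn: Callable[[Sequence], list]) -> list:
    """Decode the sequence chunk by chunk and concatenate the decoded outputs.

    Each decode_fn call only sees one chunk, so its intermediate activations
    scale with the chunk size rather than with the full sequence length.
    """
    video = []
    for start, end in slice_bounds(len(latent_frames), num_slices):
        video.extend(decode_fn(latent_frames[start:end]))
    return video
```

For example, `largest_slice(16, 1)` is 16 while `largest_slice(16, 4)` is 4, so four temporal slices reduce the number of frames per decoder call by a factor of four.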

The notebook is located at `flashvideo/demo.ipynb`.

Alternatively, you can provide user prompts in a text file and generate videos with multiple GPUs:

`bash inf_270_1080p.sh`

This project is developed based on CogVideoX. Please refer to its original license for usage details.

@article{zhang2025flashvideo,

title={FlashVideo: Flowing Fidelity to Detail for Efficient High-Resolution Video Generation},

author={Zhang, Shilong and Li, Wenbo and Chen, Shoufa and Ge, Chongjian and Sun, Peize and Zhang, Yida and Jiang, Yi and Yuan, Zehuan and Peng, Bingyue and Luo, Ping},

journal={arXiv preprint arXiv:2502.05179},

year={2025}

}