- tokenization

- Normalization

- TF/IDF

- stopwords

- Jaccard Similarity

- Cosine Similarity

- Hadamard Product

- one hot vector

- Sigmoid Activation Function

- ReLU Activation Function

- tanh activation function

- softmax function

- Recurrent neural network (RNN)

- Gated Recurrent Unit(GRU)

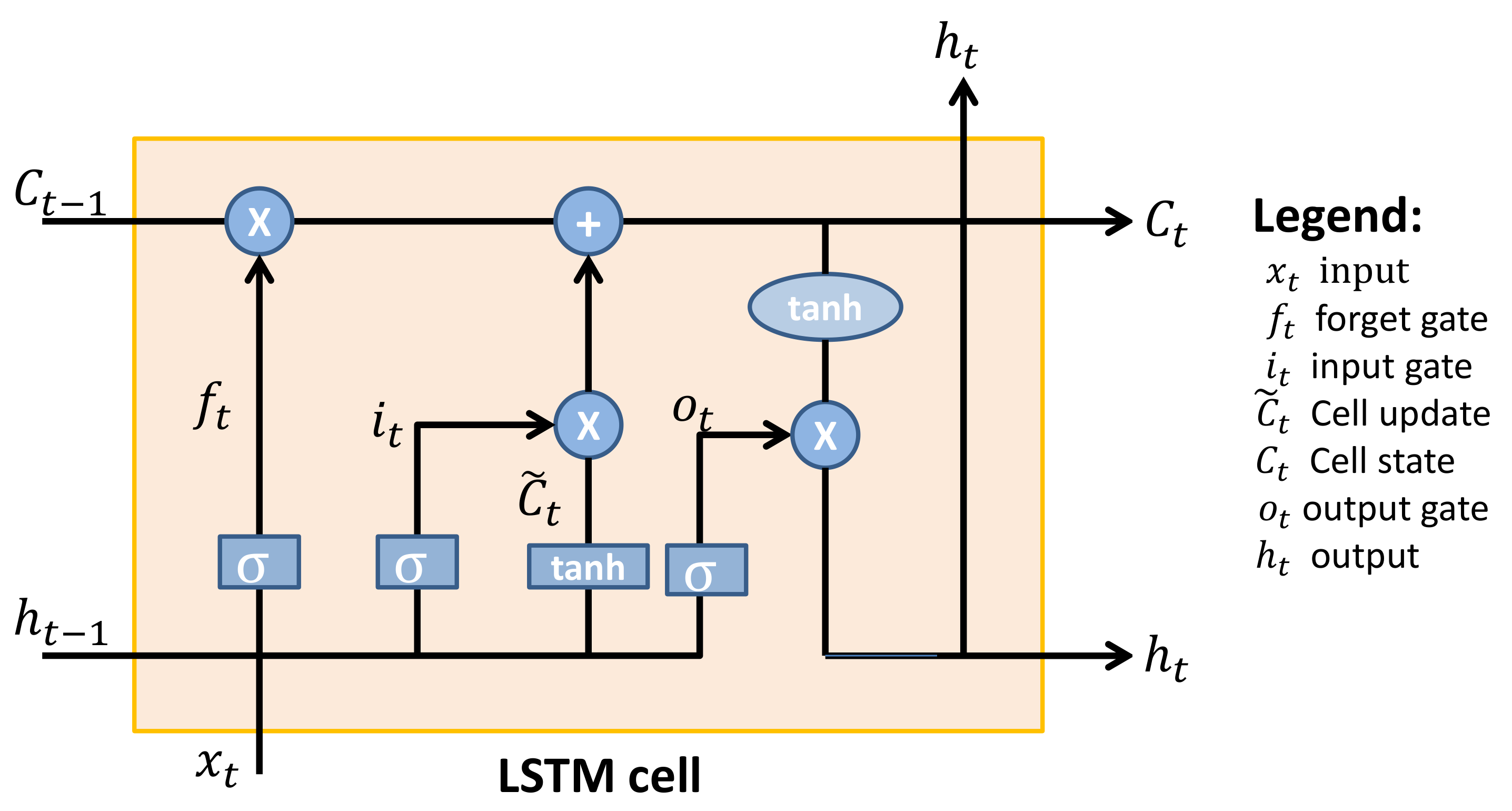

- LSTM

- Feed forward neural network

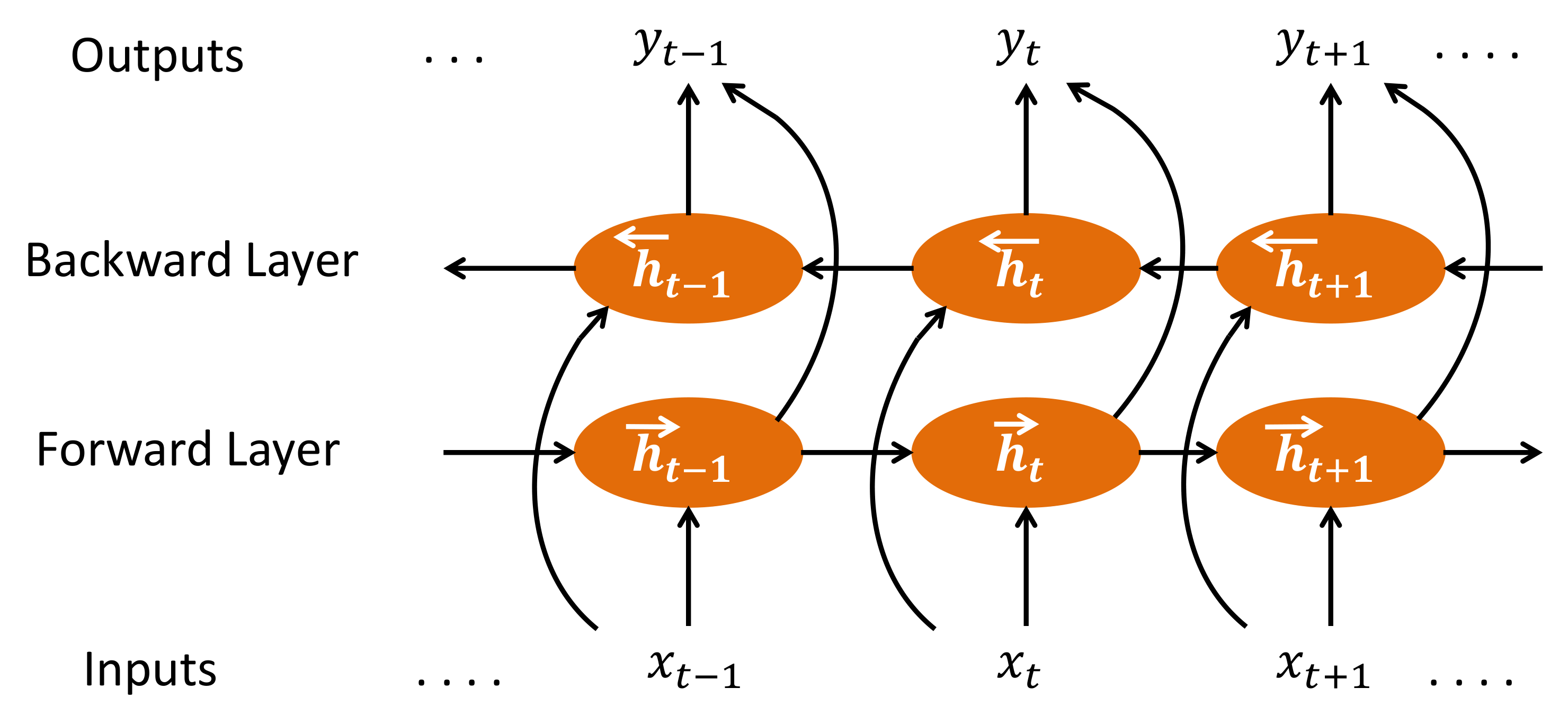

- Bidirectional RNN (BRNN)

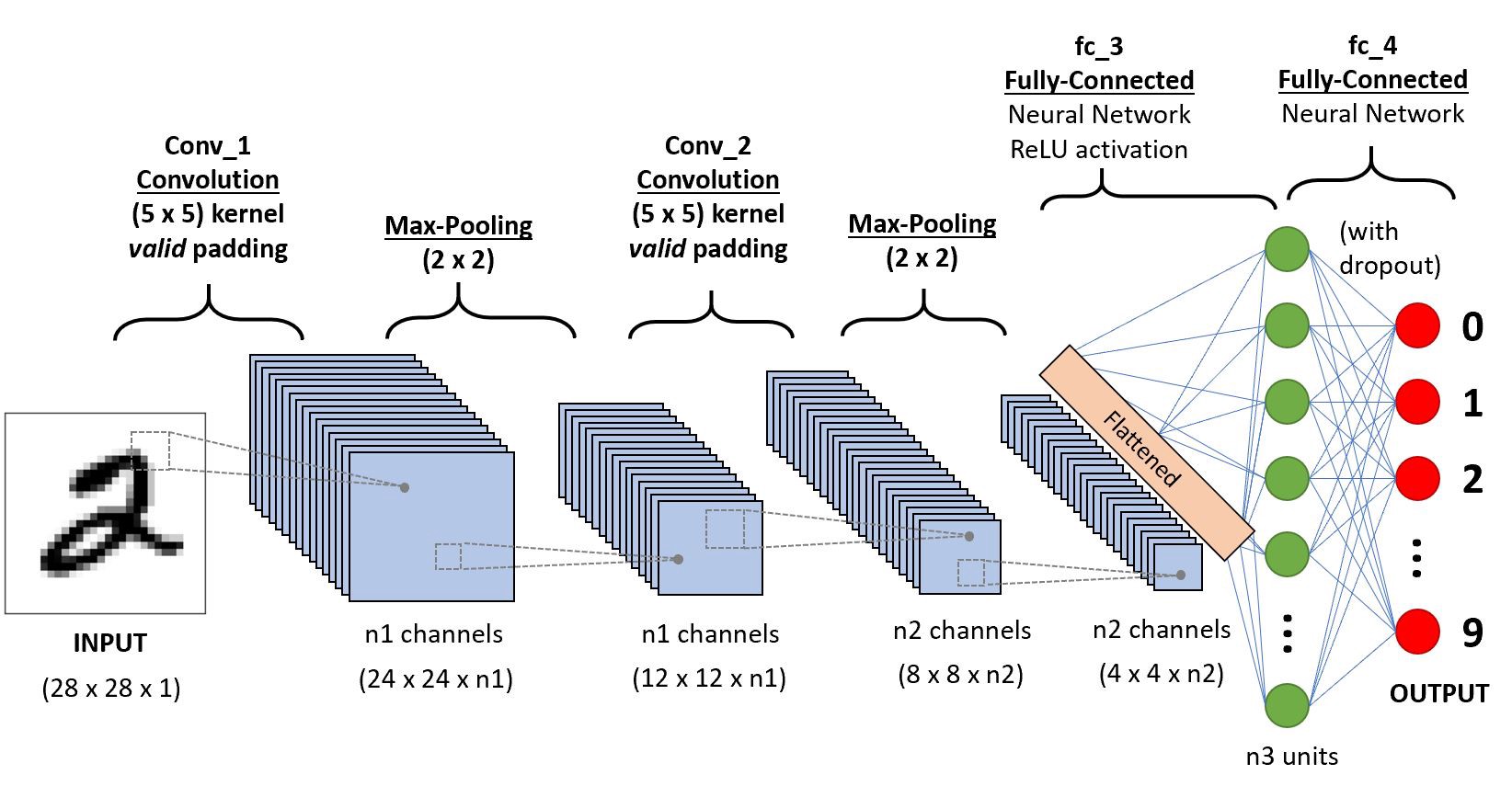

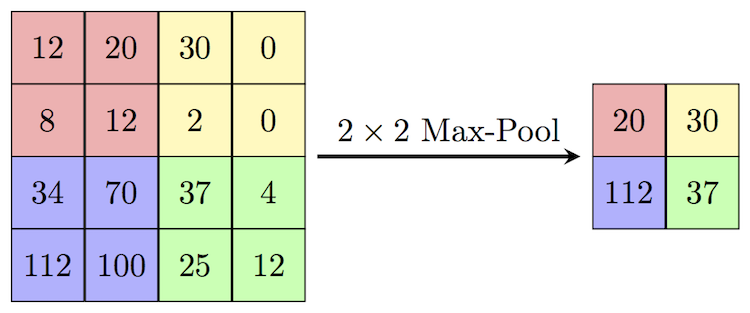

- Convolution Neural Network(CNN)

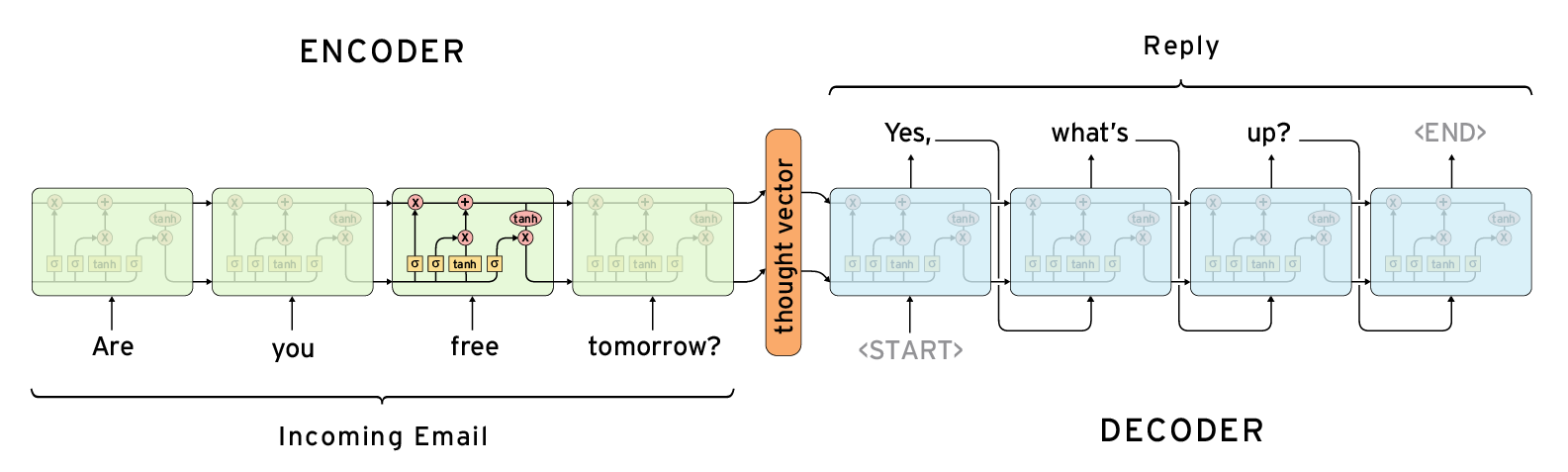

- Encoder-Decoder Model

- Attention Model

- Seq to Seq model

- Transformer

- Teacher Forcing

- Beam Search

- Rules Based Keyword Extraction

- Using support vector machine(SVM)

- Rake Algorithm

- Bag of words

- TF-IDF

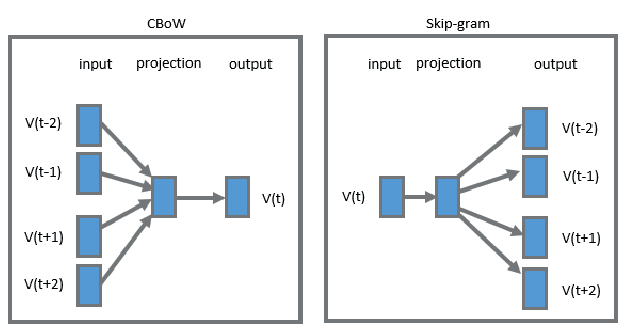

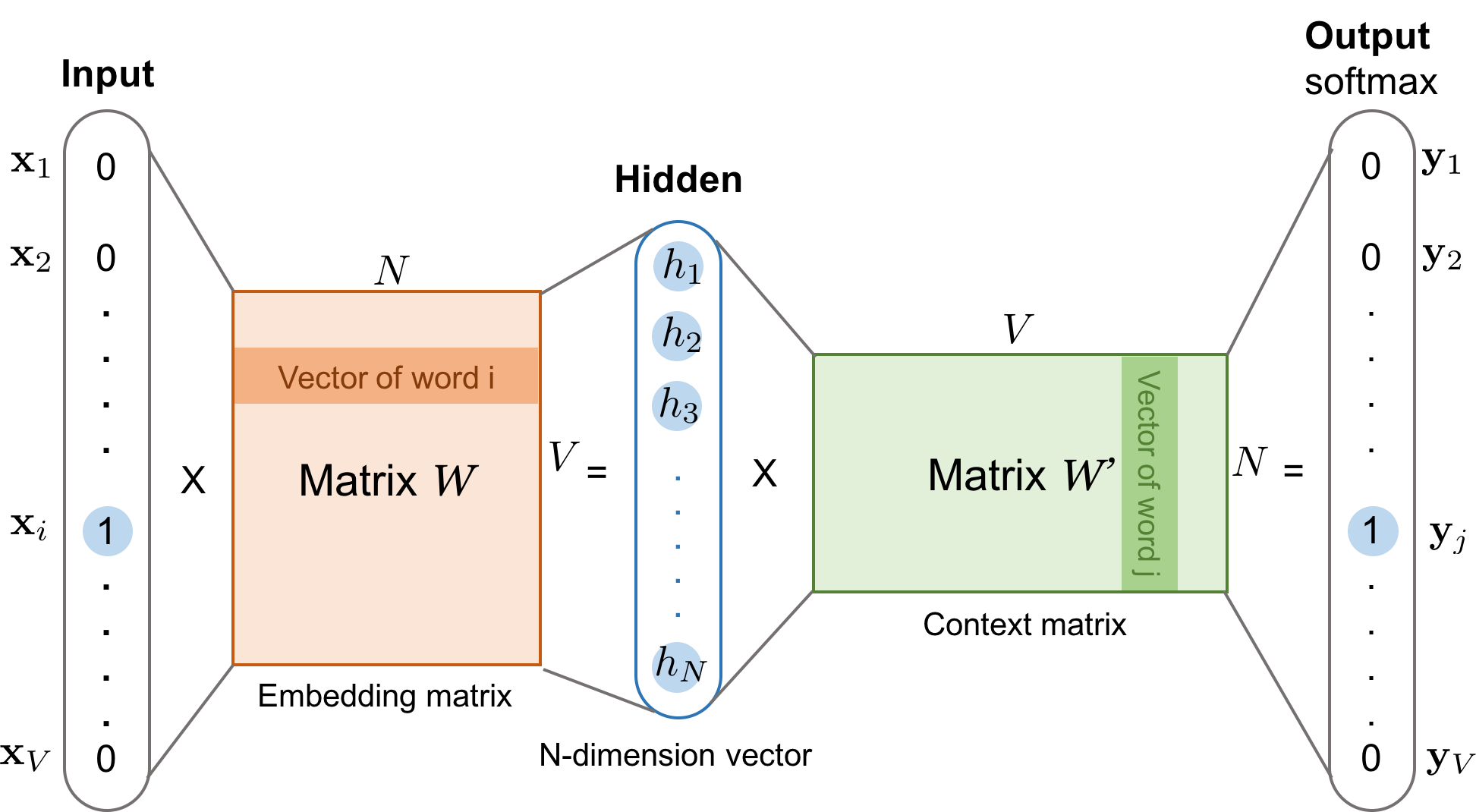

- Skip gram

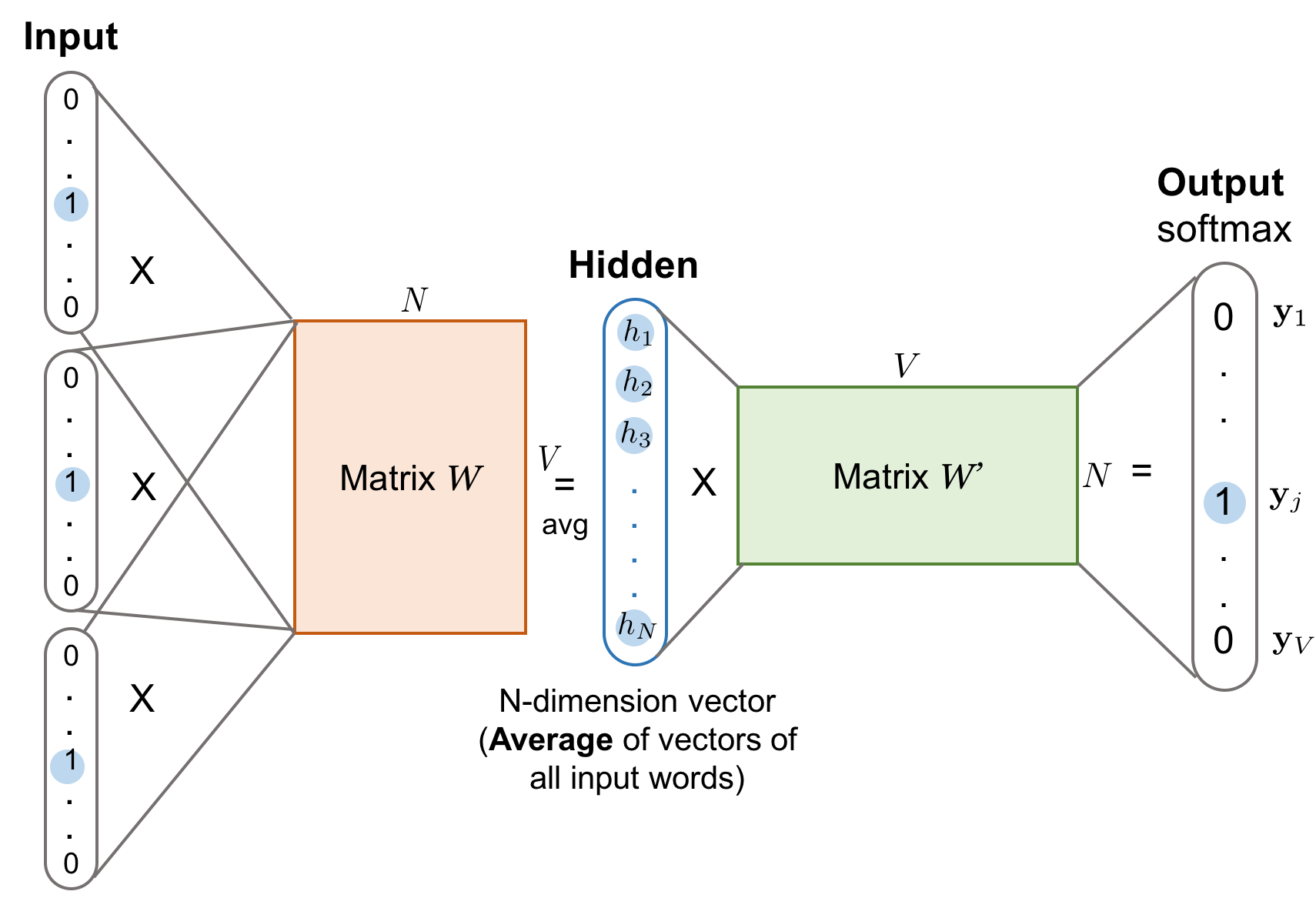

- Cbow

- Loss function

- Difference between CBOW and skip-gram model

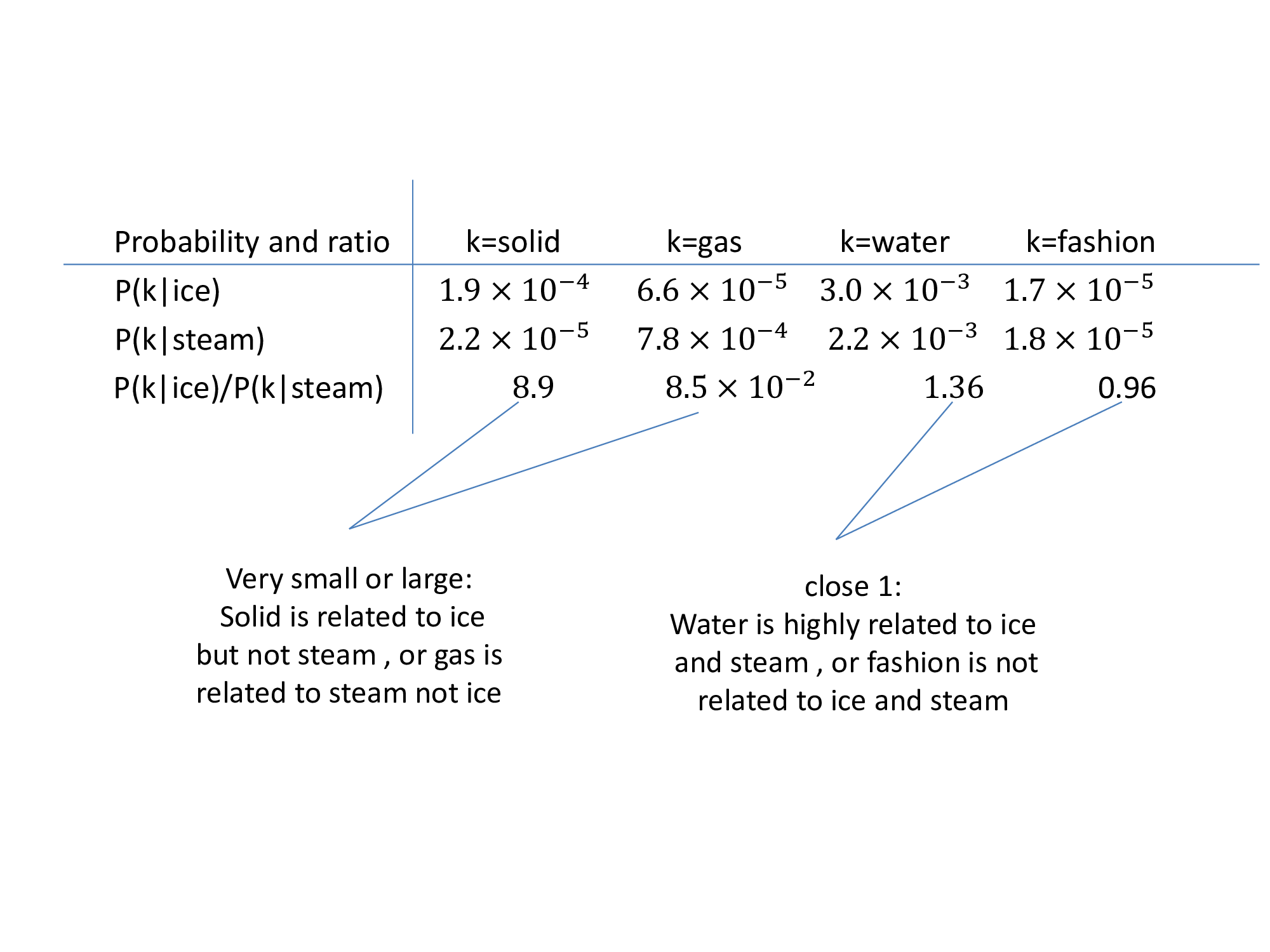

- Glove

- Fasttext

- Doc2vec

- PVDM(Distributed Memory version of Paragraph Vector)

- PVDOBW( Distributed Bag of Words version of Paragraph Vector)

- SentenceBERT

- part1: Sentence Encoder(LSTM and GRU) (Infersent)

- part2: Sentence Encoder (BiLSTM with max/mean pooling) (Infersent)

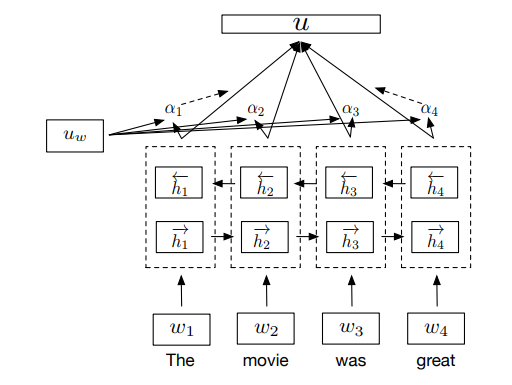

- part3: Sentence Encoder (Self-attentive network) (Infersent)

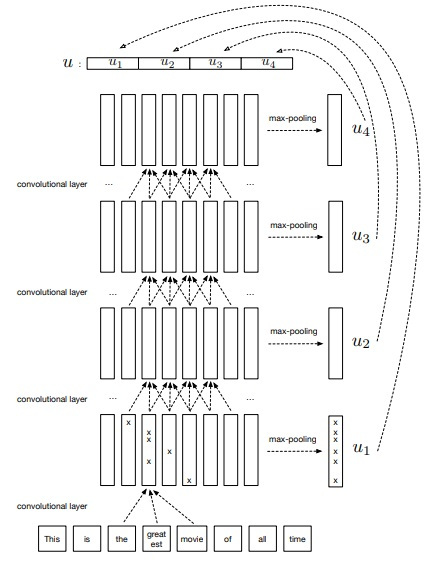

- part4: Sentence Encoder( Hierarchical ConvNet) (Infersent)

- Natural Language Inference Classifier(NLI)

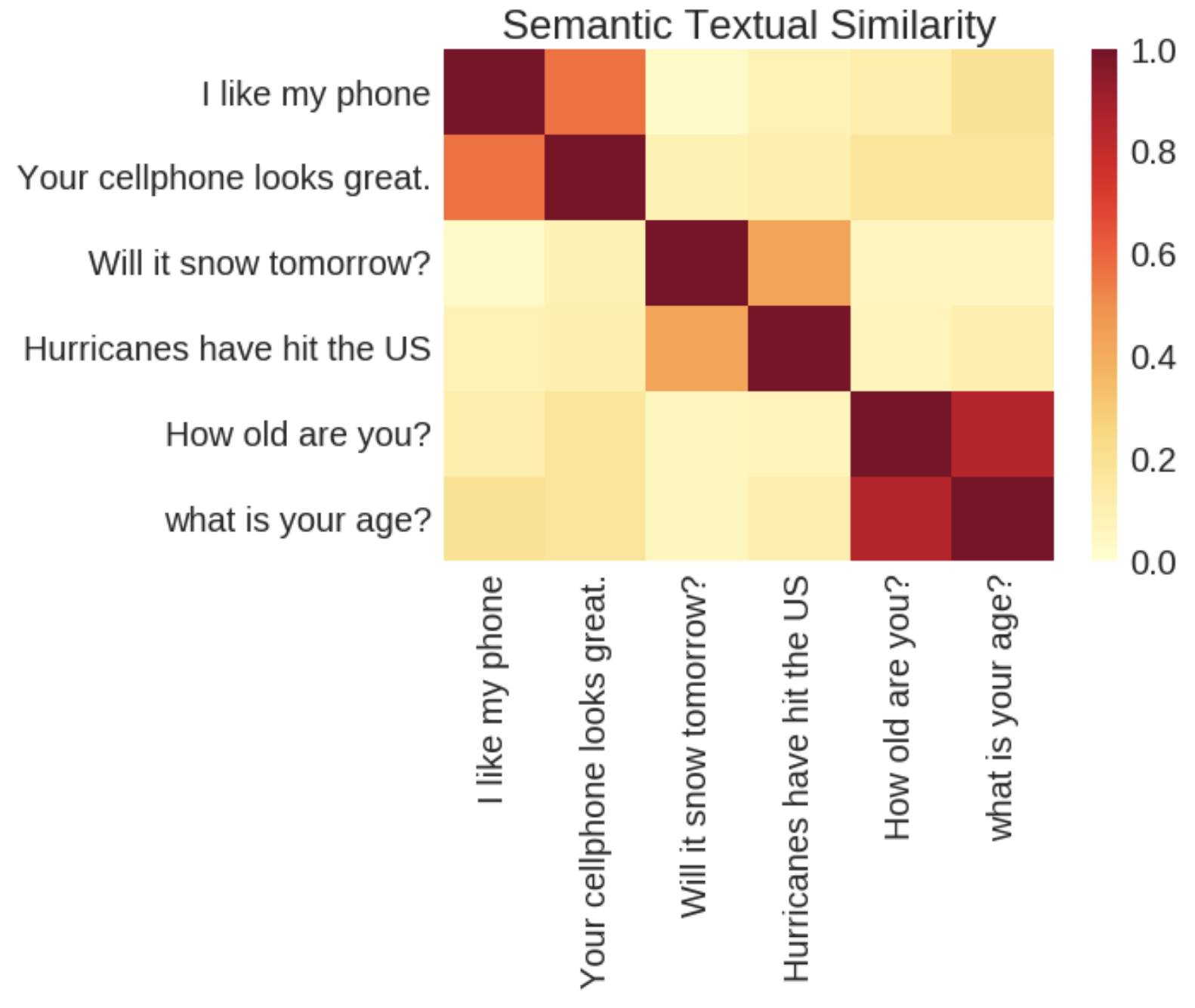

- Universal Sentence Encoder

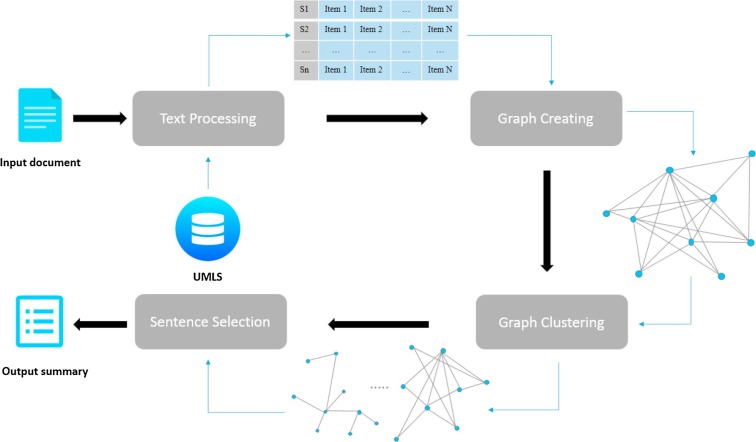

- Graph Base Extractive Summarization

- Feature Base Extractive Summarization

- Topic Base Extractive Summarization

- BSTM base Extractive Summarization

- Grammar Base Extractive summarization

- Neural network base Extractive Summarization

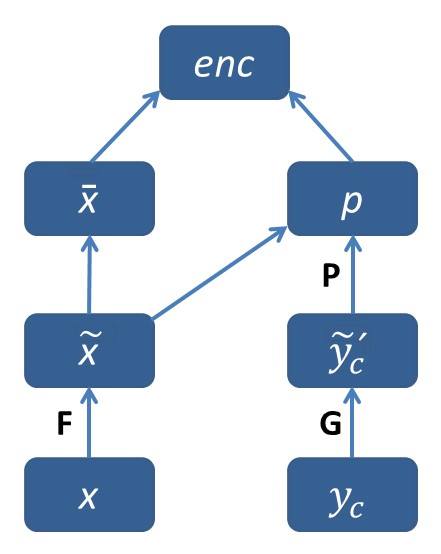

- Encoder Decoder Abstractive Model with RNN

- Abstractive Summarization with Attentive Recurrent Neural Networks

- Neural Attention Model

- Sequence-to-sequence RNNs and Beyond

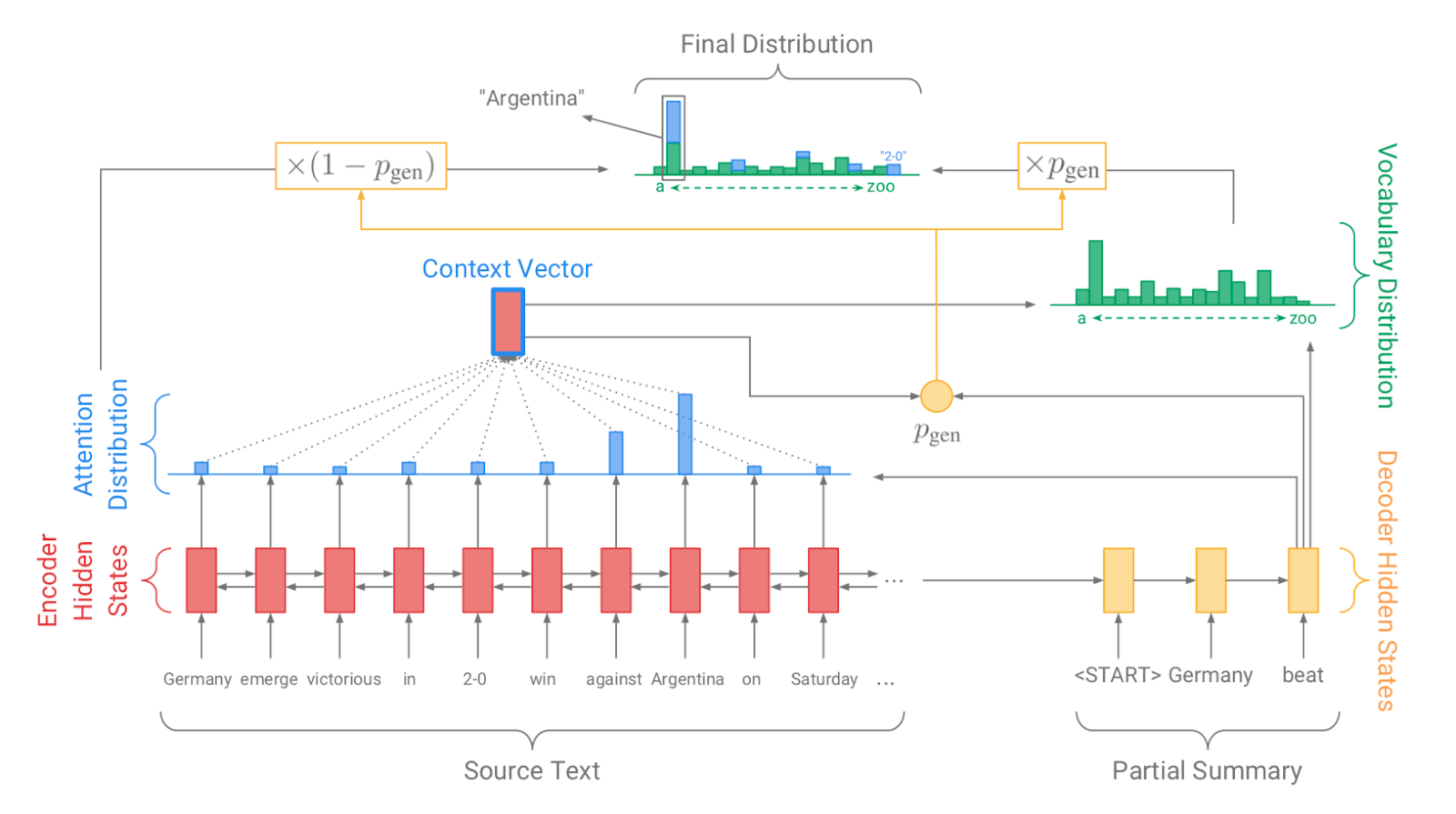

- Summarization with Pointer-Generator Networks

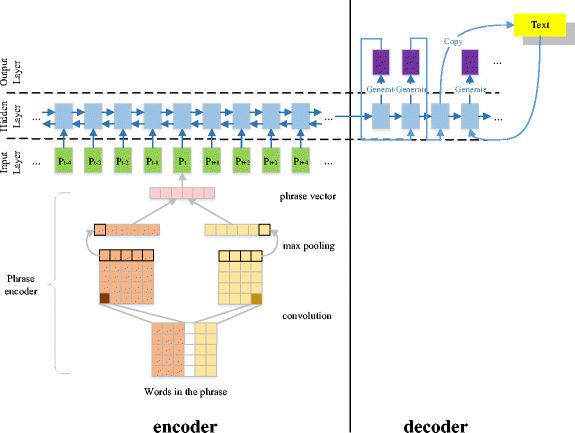

- LSTM and CNN base abstractive model

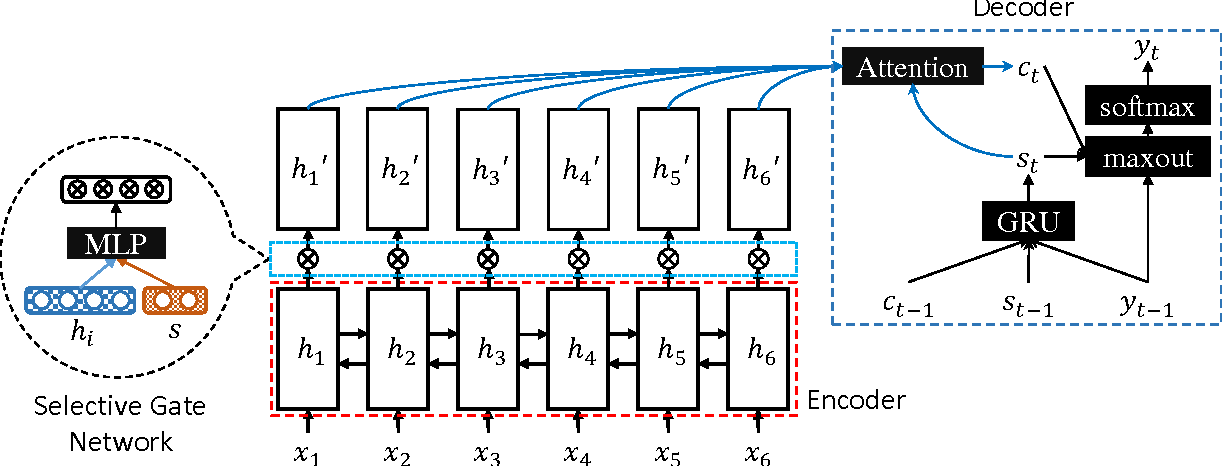

- Selective Encoding for Abstractive Sentence Summarization

- A Deep Reinforced Model for Abstractive Summarization

- Fast Abstractive Summarization with Reinforce-Selected Sentence Rewriting

- Query Focused Abstractive Summarization

- Fine-tune BERT for Extractive Summarization

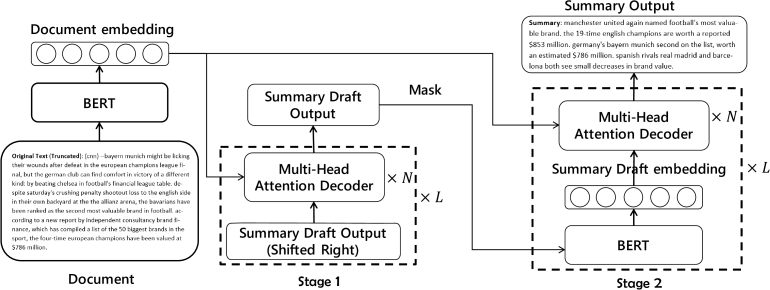

- Pretraining-Based Natural Language Generation for Text Summa-rization(Abstractive)

👉 TF/IDF

TF-IDF is a statistical measure that evaluates how relevant a word is to a document in a collection of documents. The full form of TF is Term Frequency (TF).The full form of IDF is Inverse Document Frequency.TF = no. of times term occurrences in a document / total number of words in a document

IDF = log base e (total number of documents / number of documents which are having term )

There are lots of library in every language for measuring TF-IDF. In NLP, TF/IDF is a necessary step for information retrieval and keyword Extraction. In-Text summarization, as we need to find information from sentences Tf/IDF does that for us. It was much simple and faster than any other model.

👉 Stopwords

Stopwords are the words in any language which does not add much meaning to a sentence. They can safely be ignored without sacrificing the meaning of the sentence.Suppose, a sentence "Dhaka is the capital city of bangladesh" . after removing stop words it looks like ** Dhaka capital city bangladesh** . See, it doesn't change the meaning. So why we need it?

1. Stopwords are rapidly used in sentences. So for getting usefull information, this always create problems.

2. Without stopwords, our model computational power will be better.

3. without stopwords, we can get better accuracy.

In english language nltk library marked 127 words as a stop words.

i, me, my, myself, we, our, ours, ourselves, you, your, yours, yourself,yourselves,

he, him, his, himself, she, her, hers, herself, it, its, itself, they, them,their,

theirs, themselves, what, which, who, whom, this, that, these, those, am, is,are,

was, were, be, been, being, have, has, had, having, do, does, did, doing, a, an,

the, and, but, if, or, because, as, until, while, of, at, by, for, with, about,

against, between, into, through, during, before, after, above, below, to, from,

up, down, in, out, on, off, over, under, again, further, then, once, here, there,

when, where, why, how, all, any, both, each, few, more, most, other, some, such,

no, nor, not, only, own, same, so, than, too, very, s, t, can, will, just, don,

should, now

we can customize it.

👉 Jaccard Similarity

The Jaccard index, also known as Intersection over Union and the Jaccard similarity coefficient (originally given the French name coefficient de communauté by Paul Jaccard), is a statistic used for gauging the similarity and diversity of sample sets.

Here, represents the number of common words between the set A and B;

represents total number of distinct words in the set A and B.

Why we need it?

Finding the relation between two sentences or vectors.

👉 Cosine Similarity

As like as jaccard Similarity, Cosine similarity also works for finding relation of vectors.

Given two vectors of attributes, A and B, the cosine similarity, , is represented using a dot product and magnitude as

where A_i and B_i are components of vector A and B respectively.

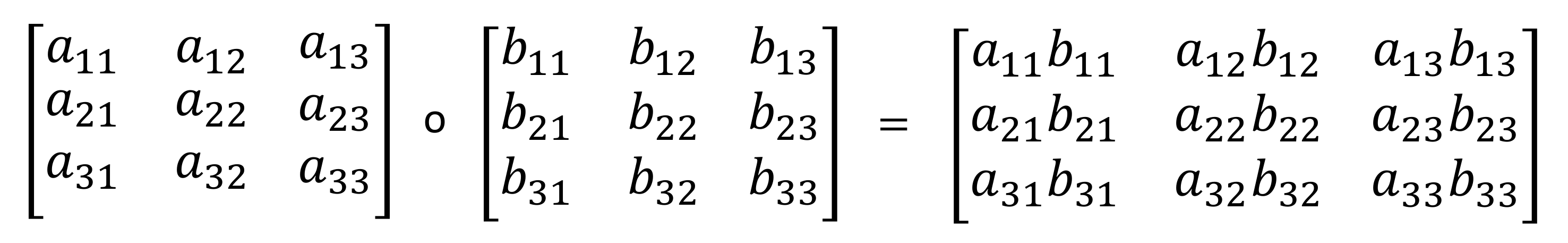

👉 Hadamard Product

In mathematics, the Hadamard product (also known as the element-wise, entrywise or Schur product) is a binary operation that takes two matrices of the same dimensions and produces another matrix of the same dimension as the operands, where each element i, j is the product of elements i, j of the original two matrices.

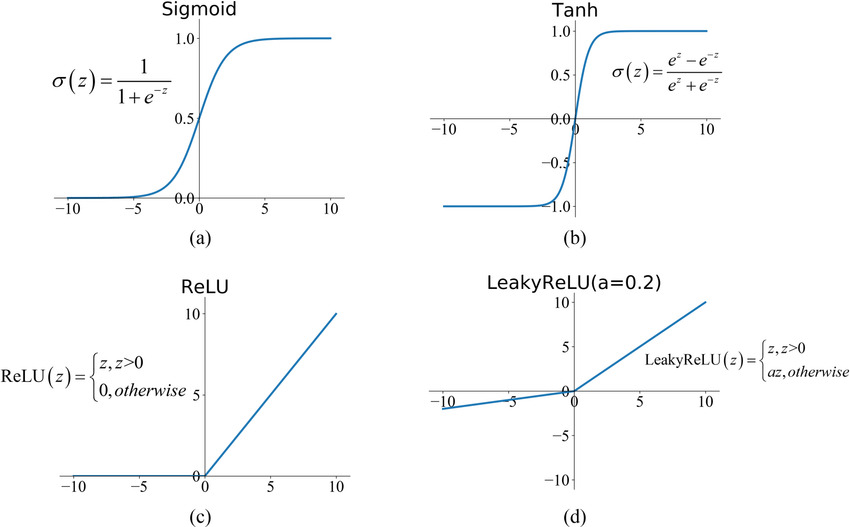

👉 ReLU Activation Function

ReLU stands for rectified linear unit, and is a type of activation function. It is widely used in Activation Function. why do we use ReLU, if we already have sigmoid?

why do we use ReLU, if we already have sigmoid?

1. it takes less time to train or run.

2. It is very faster than other models. Cause there is no complex equation. It doesn’t have the vanishing gradient problem suffered by other activation functions like sigmoid or tanh.

But in relu, if the number is negative it will make number 0. So, if all number is negative, it don't activated the function. And it's called Dying ReLU.

ReLU has so many version. Leaky ReLU is mostly used in deep learning. Instead of making 0, it makes negative value to multiple of a constant factor.

👉 tanh activation function

In sigmoid function, every value transform 0 to 1. In tanh function instead of 0 to 1, it makes -1 to 1.

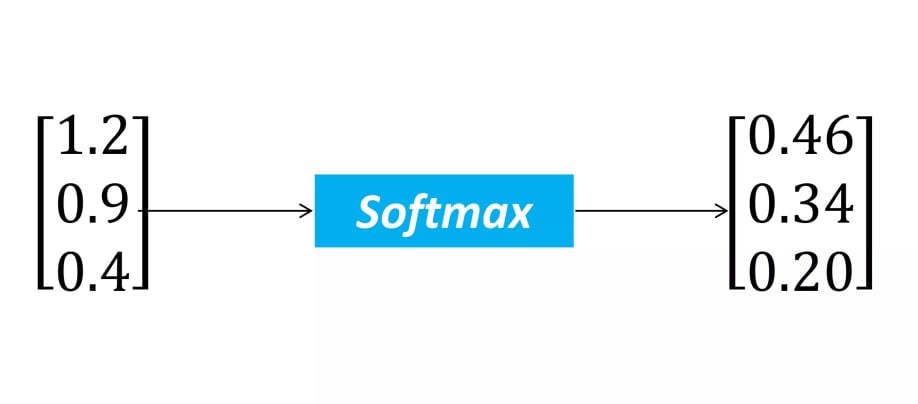

👉 softmax function

The softmax function squashes the outputs of each unit to be between 0 and 1, just like a sigmoid function. But it also divides each output such that the total sum of the outputs is equal to 1.

The output of the softmax function is equivalent to a categorical probability distribution, it tells you the probability that any of the classes are true.

Mathematically the softmax function is shown below, where z is a vector of the inputs to the output layer (if you have 10 output units, then there are 10 elements in z). And again, j indexes the output units, so j = 1, 2, ..., K.

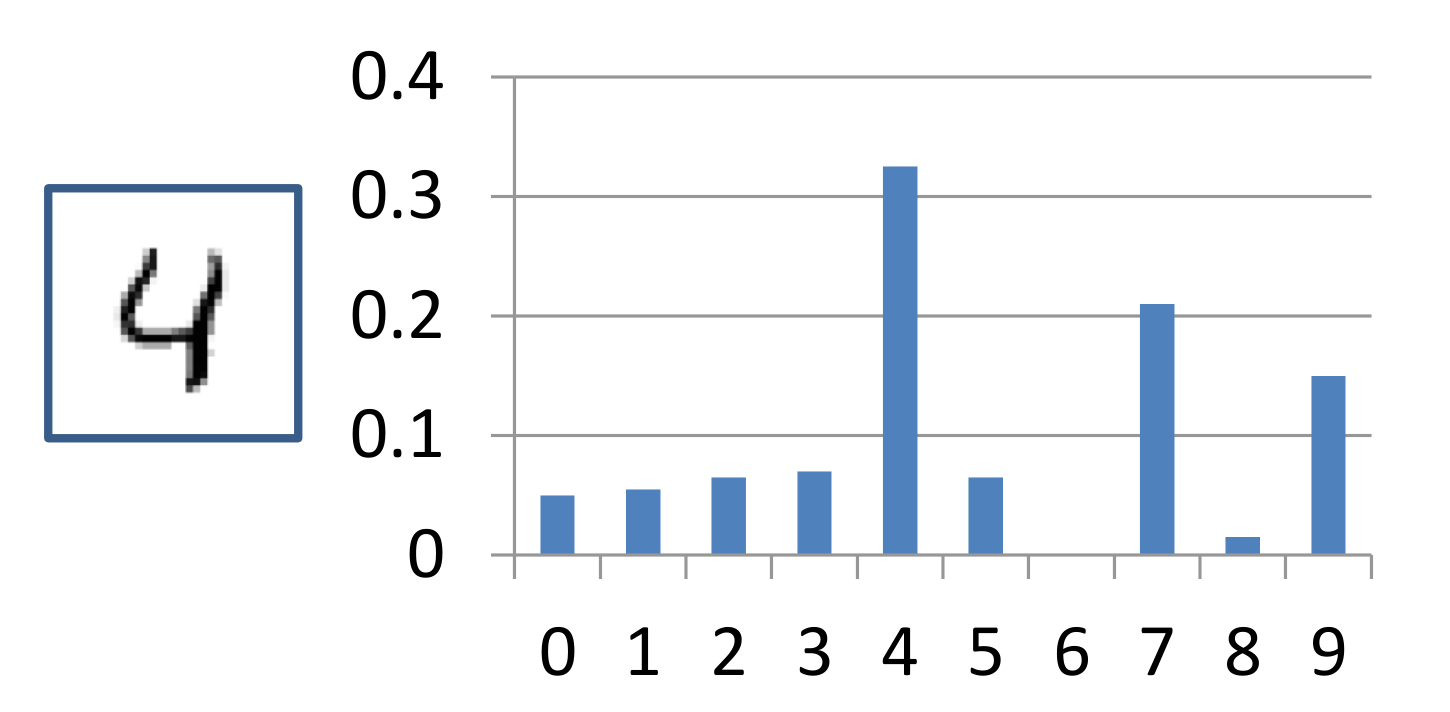

Suppose, we have an image where '4' digit is written on it. Now we want character recognition from that image. And we do all process for recognition of the character and make a vector. Now after using softmax function in our result it looks like,

So softmax function is basically making the vector into the probabilistic vector.

👉 Recurrent neural network (RNN)

Recurrent Neural Networks (RNNs) are widely used for data with some kind of sequential structure. For instance, time series data has an intrinsic ordering based on time. Sentences are also sequential, "I love dogs" has a different meaning than "Dogs I love." So if you are working with sequential model, RNN might be the solution for that.\ A simple architecture for RNN

-

Vector h : is the output of the hidden state after the activation function has been applied to the hidden nodes.

-

Matrices Wx, Wy, Wh: — are the weights of the RNN architecture which are shared throughout the entire network. The model weights of Wx at t=1 are the exact same as the weights of Wx at t=2 and every other time step.

-

Vector Xi: is the input to each hidden state where i=1, 2,…, n for each element in the input sequence.

We can make divide full architecture with two part.

- Forward Propagation

- Backpropagation through time

Forward Propagation:

In Forward Propagation, we calculate everything. Like, output,hidden state,loss function,gradient etc.

-

k is the dimension of the input vector Xi

-

d is the number of hidden nodes

Loss function is calculated by multi-class cross entropy loss function.

OverAll loss function is calculated by below equation.

BackPropagation through time:

In backward Propagation, It's updated ,

,

value based on gradients.

So, overall process look like,

-

Initialize weight matrices Wx, Wy, Wh randomly

-

Forward propagation to compute predictions

-

Compute the loss

4.Backpropagation to compute gradients

-

Update weights based on gradients

-

Repeat steps 2–5

A problem that RNNs face, which is also common in other deep neural nets, is the vanishing gradient problem . Vanishing gradients make it difficult for the model to learn long-term dependencies. For example, if an RNN was given this sentence,

The brown and black dog,which was playing with cat, was a German shepherd

in this sentence, when we want to predict 'shepherd', it needs the input brown. But 'brown' is too far from 'shepherd'. A long term dependency occurs. And it creates gradient loss. Cause gradient follows chain rule. However, there have been advancements in RNNs such as gated recurrent units (GRUs) and long short term memory (LSTMs) that have been able to deal with the problem of vanishing gradients.

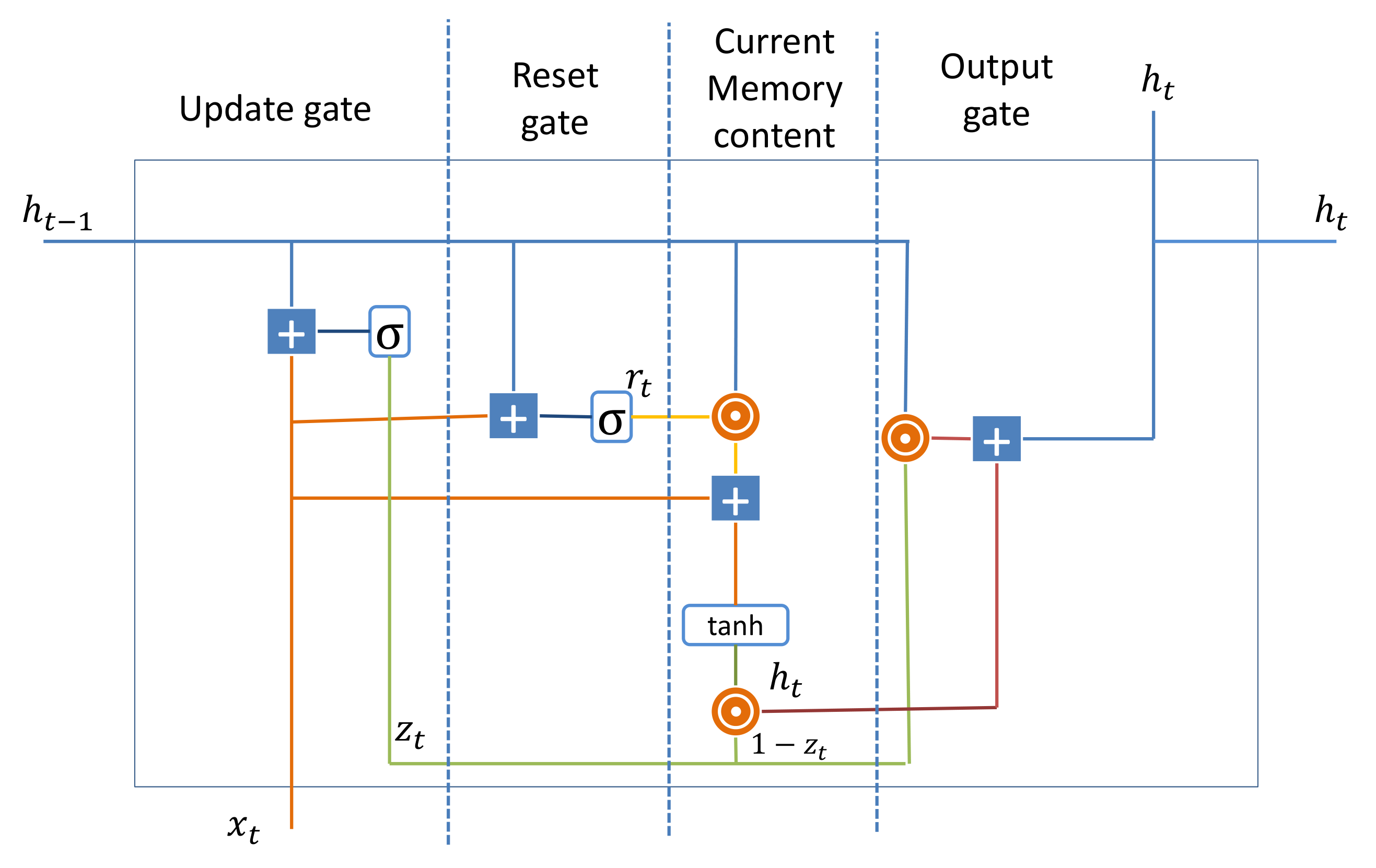

👉 Gated Recurrent Unit(GRU)

To solve the vanishing gradient problem of a standard RNN, GRU uses, so-called, update gate and reset gate. Basically, these are two vectors which decide what information should be passed to the output. The special thing about them is that they can be trained to keep information from long ago, without washing it through time or remove information which is irrelevant to the prediction.

Update Gate:

We start with calculating the update gate

The update gate helps the model to determine how much of the past information (from previous time steps) needs to be passed along to the future.

Reset Gate: this gate is used from the model to decide how much of the past information to forget. To calculate it, we use:

Current memory content: This is a big process, because output depends on it. We can divide it with 4 section.

-

Calculate the Hadamard (element-wise) product between the reset gate

and

. That will determine what to remove from the previous time steps.

-

Sum up the results of step 1 and 2.

-

Apply the nonlinear activation function tanh.

Final memory at current time step: This is the output state. Here we computes output from previous computation.

👉 LSTM

Long Short Term Memory are a special kind of RNN, capable of learning long-term dependencies. They were introduced by Hochreiter \& Schmidhuber (1997) [20], and were refined and popularized by many people in following work. Lstm architecture is similar to GRU.We can divide whole architecture with 4 section,

1. Forget gate( ):

The first step in our LSTM is to decide what information we’re going to throw away from the cell state. This decision is made by a sigmoid layer called the “forget gate layer.” It looks at

and

, and outputs a number between 0 and 1 for each number in the cell state

.

1 represents “completely keep this” while a 0 represents “completely get rid of this.”

2. input gate ( ): The next step is to decide what new information we’re going to store in

the cell state. This has two parts. First, a sigmoid layer called the “input gate layer”

decides which values we’ll update. Next, a tanh layer creates a vector of new candidate

values,

,

that could be added to the state. In the next step, we’ll combine these two to create an

update to the state.

3. update old state: It’s now time to update the old cell state, , into the new cell state

. The previous steps already decided what to do, we just need to actually do it.

We multiply the old state by , forgetting the things we decided to forget earlier. Then we add

. This is the new candidate values, scaled by how much we decided to update each state value.

4. output Gate: Finally, we need to decide what we’re going to output. This output will be based on our cell state, but will be a filtered version. First, we run a sigmoid layer which decides what parts of the cell state we’re going to output. Then, we put the cell state through tanh (to push the values to be between - and 1 and multiply it by the output of the sigmoid gate, so that we only output the parts we decided to.

👉 Feed forward neural network

Feed forward neural network is a simple one directional neural network. In RNN there a backward propagation but here only forward propagation. But in this neural network there is a lots of hidden layer for decision making. for computing hidden layer, in this network sigmoid function is used. output layer is only 1 and 0 activation function. Thats why this network is simplest neural network.

👉 Bidirectional RNN (BRNN)

In 1997 Schuster & mike proposed bidirectional model of RNN [26].

The idea is to split the state neurons of a regular RNN in a part that is responsible for the positive time direction (forward states) and a part for the negative time direction (backward states). Outputs from forward states are not connected to inputs of backward states, and vice versa.

FORWARD PASS:

Run all input data for one time slice through

the BRNN and determine all

predicted outputs.

- Do forward pass just for forward states (from t=1 to t=T ) and backward states (from t=T to t=1).

- Do forward pass for output neurons.

BACKWARD PASS:

Calculate the part of the objective function derivative

for the time slice used in the forward pass.

- Do backward pass for output neurons.

- Do backward pass just for forward states (from t=T to t=1 ) and backward states (from t=1 to t=T).

👉 Convolution Neural Network(CNN)

CNN was introduced by LeCun at 1998 [27]. Images recognition, images classifications. Objects detections, recognition faces etc., are some of the areas where CNNs are widely used.

We already know what is Relu Layer.

Padding:

Sometimes filter does not fit perfectly fit the input image. We have two options for padding:

-

Pad the picture with zeros (zero-padding) so that it fits

-

Drop the part of the image where the filter did not fit. This is called valid padding which keeps only valid part of the image.

pooling: Pooling layers section would reduce the number of parameters when the images are too large. Spatial pooling also called subsampling or downsampling which reduces the dimensionality of each map but retains important information. Spatial pooling can be of different types:

- Max Pooling

- Average Pooling

- Sum Pooling

Max Pooling is the most common among others.Max pooling takes the largest element from the rectified feature map. Taking the largest element could also take the average pooling. Sum of all elements in the feature map call as sum pooling.

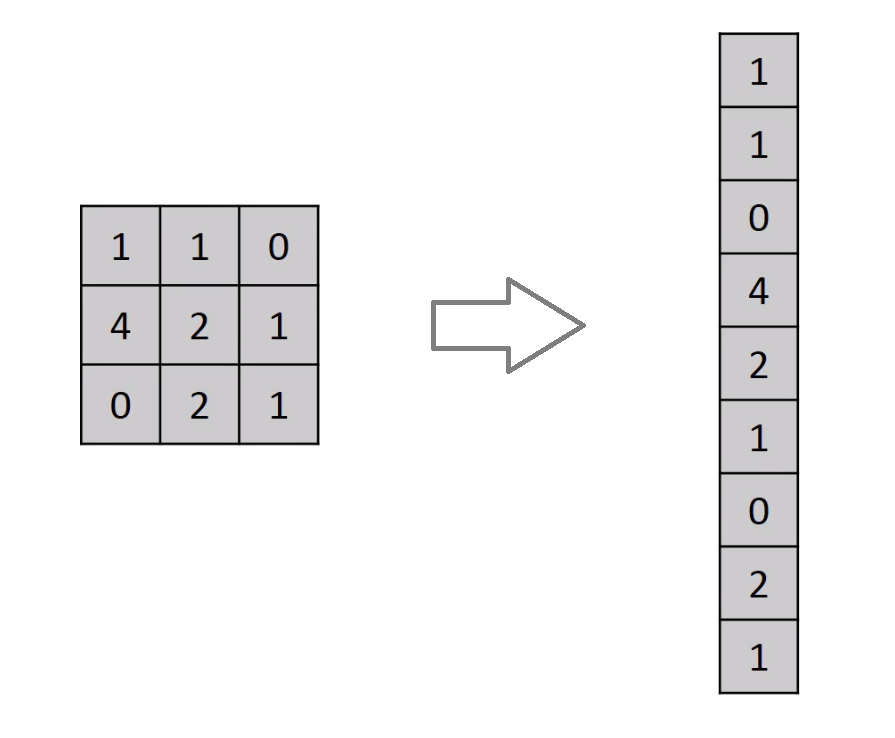

Flattening: An image is nothing but a matrix of pixel values. In Neural network it is difficult to work with 3-dimentional matrix. So we make 2D matrix to 1D matrix by flattened for feeding the next layer.

Fully Connected Layer:

The layer we call as FC layer, we flattened our matrix into vector and feed it into a

fully connected layer like a neural network. Every node connected to others hidden layer node.

Process:

- Provide input image into convolution layer

- Choose parameters, apply filters with strides, padding if requires. Perform convolution on the image and apply ReLU activation to the matrix.

- Perform pooling to reduce dimensionality size

- Add as many convolutional layers until satisfied

- Flatten the output and feed into a fully connected layer (FC Layer)

- Output the class using an activation function (Logistic Regression with cost functions) and classifies images.

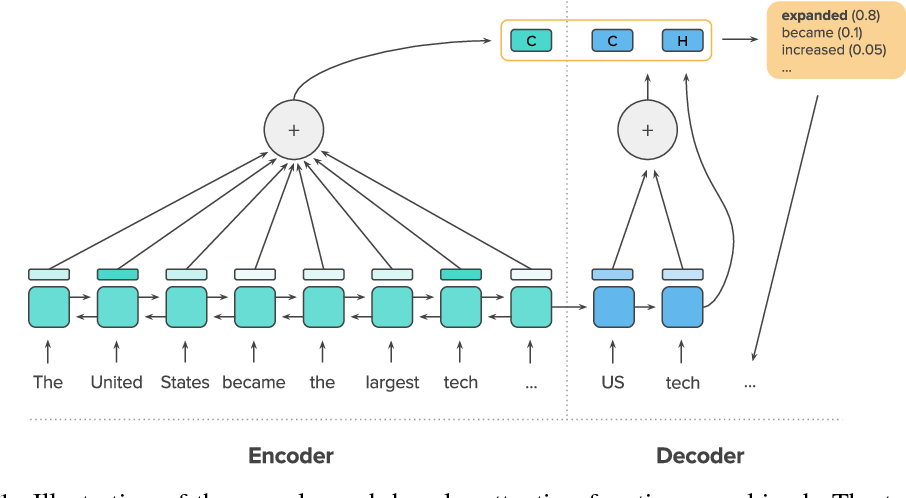

👉 Encoder-Decoder Model

The encoder-decoder model is composed of encoder and decoder like its name. The encoder converts an input document to a latent representation (vector), and the decoder generates a summary by using it. It was develop by google AI [21] and also implemeted it in the google play service. In our message section, this technique is used. So we already know what is it.

In Encoder part, machine will learn context sequentially. We can use any sequential model here for learning purpose. Like, RNN,LSTM,CNN,Seq to seq, GRU etc. In Decoder part, machine Decode what he learn and it depends on what we want. It can be translation,summary,question-answer etc.

In NLP, Encoder Decoder model is hugely used by researcher Cause of good accuracy. In summarization We will see how to summary a text by encoder decoder.

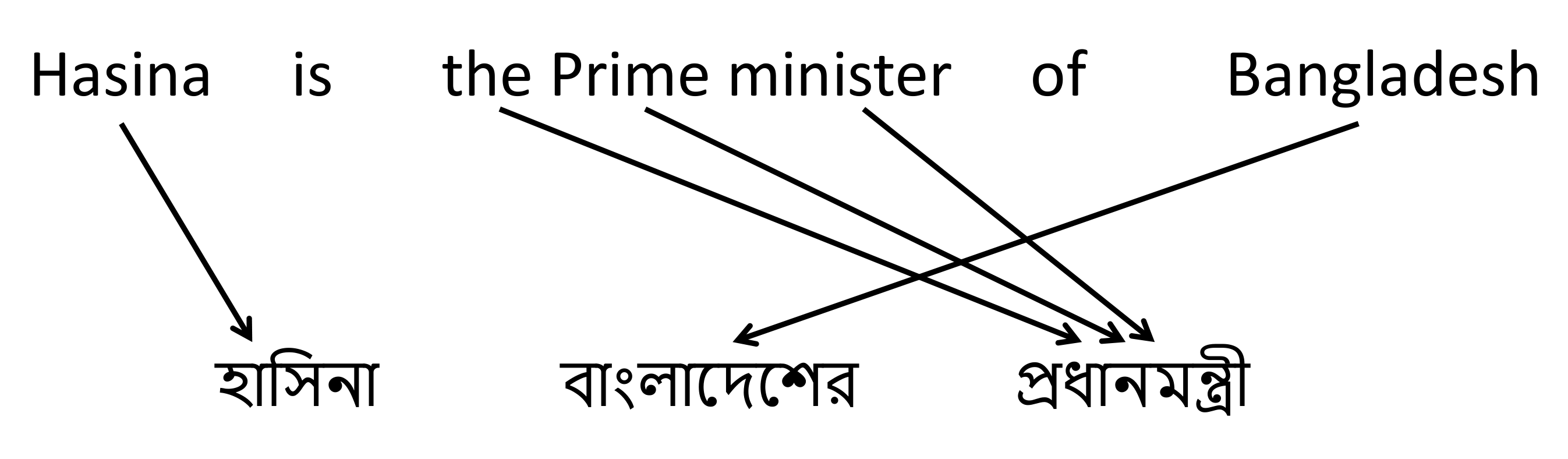

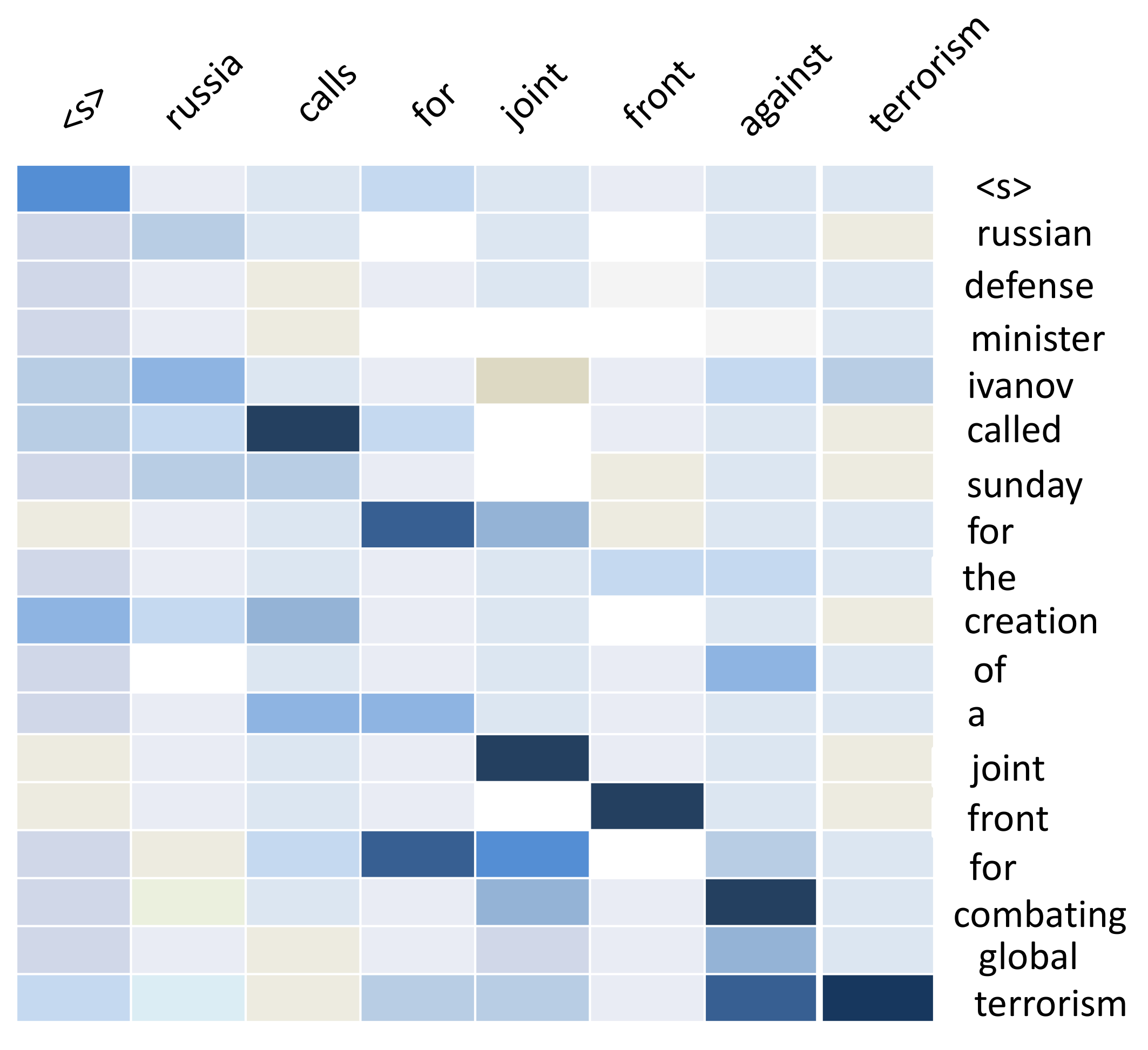

👉 Attention Model

Suppose we want to translate English to bangla.

In this figure, You can see when we translate 'Hasina', We don't need to focus 'Bangladesh'. When we decode something, we don't need to focus all the word. Only we need to focus those word who was closely connected. Here 'Attention' mechanism comes.

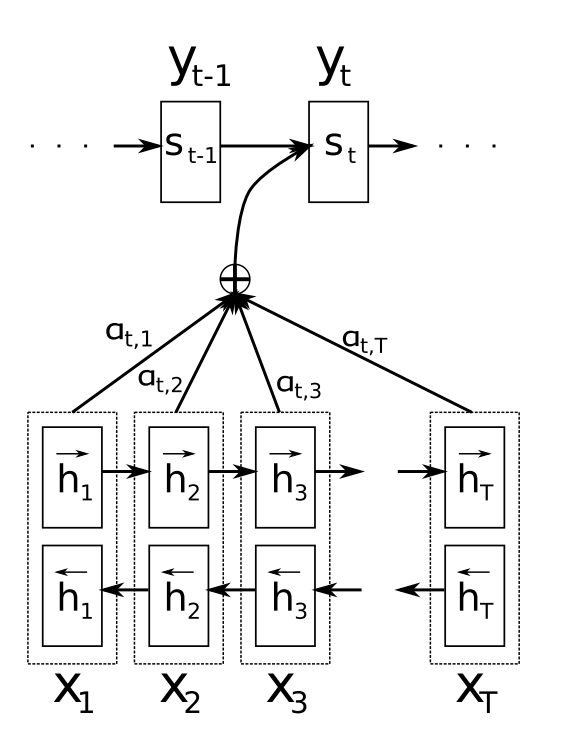

In This figure, we have a bidirectional RNN for encoder. Now when we decode, there is a value comes from every hidden state. Because we need to know which word we need to focus. This model is proposed by Bahdanau at 2014 [25].

In this architecture, they define each conditional probability,

where is an RNN hidden state for time i, computed by

The context vector is, then, computed as a weighted sum of these annotations

The weight of each annotation

is computed by

where,

👉 Seq to Seq model

This model is one of the famous model in NLP. Why? It can solve different types of difficult task that we can't do with other language. This model is also very fast. This model was introduced by google at 2014 [30]. This model also based on encoder-decoder architecture. But we need to give input sequentially and output layer will give us output sequentially. So, there is no big difference.

We can use different types model in encoder and decoder here. Like,

- RNN or Bidirectional RNN

- LSTM or Bidirectional LSTM

- GRU or Bidirectional GRU

👉 Transformer

Transfer learning or transformer is the first transduction model relying entirely on self-attention to compute representations of its input and output without using sequence-aligned RNNs or convolution. How? why is this model much important and famous? This model was introduced by Vaswani at 2017 in his famous paper "Attention is all you need" [37]. They used multi-head attention instead of single-head attention.

From this figure, we can guess how this architecture works.

We already know about "Embedding", "Feed Forward neural network",

"Softmax","Normalization". Now we will know about "Multi-Head Attention" and "Positional Encoding".

Positional Embedding: Since this model contains no recurrence and no convolution,

in order for the model to make use of the

order of the sequence, we must inject some information about the relative or absolute position

of the

tokens in the sequence. To this end, they add "positional encodings" to the input embeddings

at the bottoms of the encoder and decoder stacks. The positional encodings have the same dimension

as the embeddings, so that the two can be summed. They used sine and cosine function for different frequency.

Multi-Head Attention: In single attention, if the input consists of

queries and keys of dimension , and values of dimension

, We compute the dot products of the query with all keys, divide each by

, and apply a softmax function to obtain the weights on the

values.

In Multi-Head Attention model, instead of performing a single attention function with ,

and

dimensions, respectively.

Multi-head attention allows the model to jointly attend to information from different representation

subspaces at different positions. With a single attention head, averaging inhibits this

where,

We have to fixed head size.

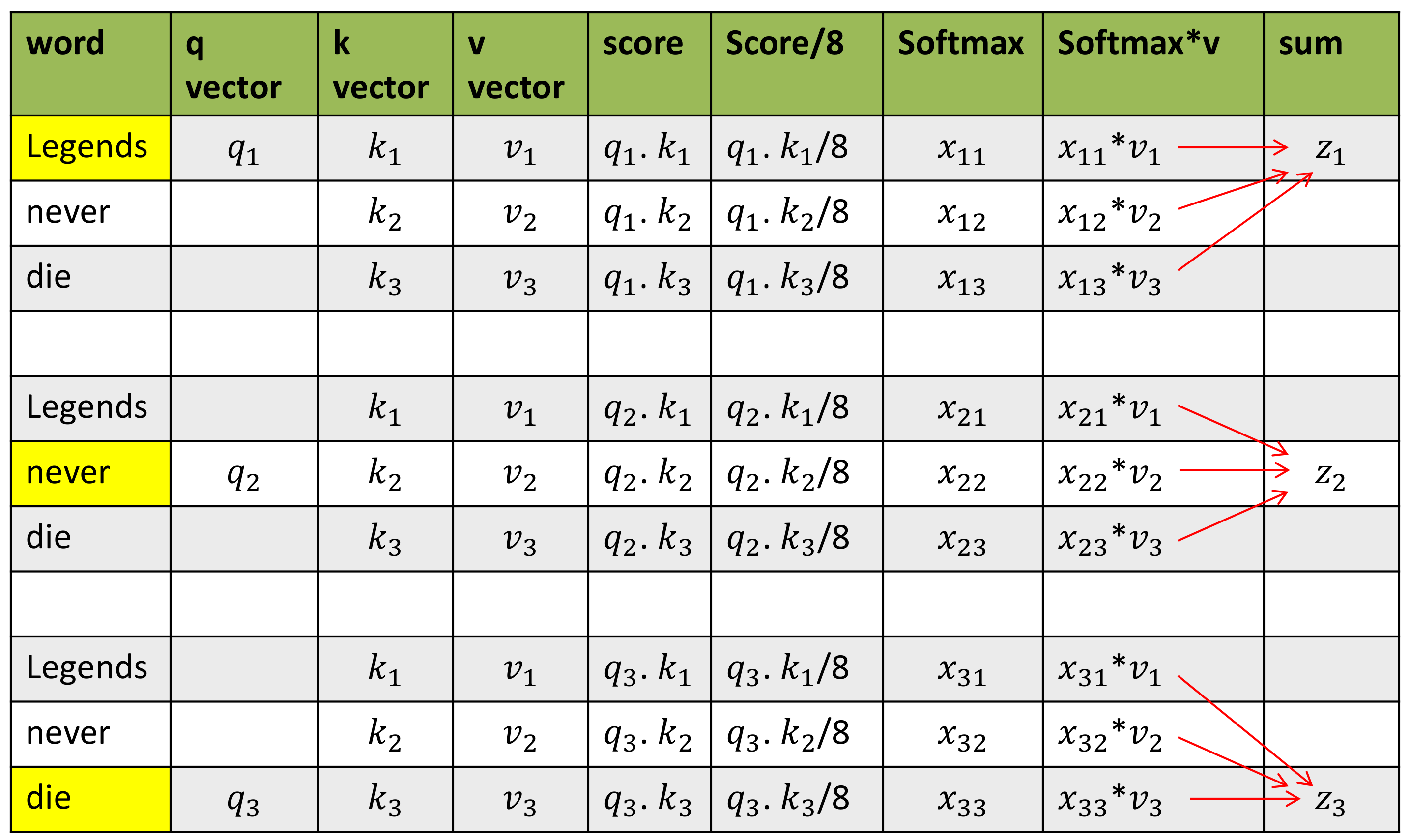

Self Attention: Self-attention, sometimes called intra-attention, is an attention mechanism relating different positions of a single sequence in order to compute a representation of the sequence.Self-attention allows the model to look at the other words in the input sequence to get a better understanding of a certain word in the sequence. Now, let’s see how we can calculate self-attention.

- First, we need to create three vectors from each of the encoder’s input vectors: Query Vector, Key Vector, Value Vector.

- Next, we will calculate self-attention for every word in the input sequence

- Consider this phrase – “Legends Never die”. To calculate the self-attention for the first word “Legends”, we will calculate scores for all the words in the phrase with respect to “legends”. This score determines the importance of other words when we are encoding a certain word in an input sequence

Encoder: The encoder is composed of a stack of N = 6 identical layers. Each layer has two

sub-layers. The first is a multi-head self-attention mechanism, and the second is a simple, position wise fully

connected feed-forward network. We employ a residual connection [10] around each of

the two sub-layers, followed by layer normalization [1]. That is, the output of each sub-layer is

LayerNorm(x + Sublayer(x)), where Sublayer(x) is the function implemented by the sub-layer

itself. To facilitate these residual connections, all sub-layers in the model, as well as the embedding

layers, produce outputs of dimension = 512.

Decoder: The decoder is also composed of a stack of N = 6 identical layers. In addition to the two sub-layers in each encoder layer, the decoder inserts a third sub-layer, which performs multi-head attention over the output of the encoder stack. Similar to the encoder, we employ residual connections around each of the sub-layers, followed by layer normalization. We also modify the self-attention sub-layer in the decoder stack to prevent positions from attending to subsequent positions. This masking, combined with fact that the output embeddings are offset by one position, ensures that the predictions for position i can depend only on the known outputs at positions less than i.

👉 Teacher Forcing

Teacher forcing is a strategy for training recurrent neural networks that uses model output from a prior time step as an input. Teacher forcing works by using the actual or expected output from the training dataset at the current time step y(t) as input in the next time step X(t+1), rather than the output generated by the network.

Lets take an example,

"Dhaka is the capital city of bangladesh". We want to feed this sentence in RNN. We must add [start] and [end] token in the sentence.

now the sentence looks like, "[Start] Dhaka is the capital city of bangladesh [End]".

When we give [Start] token, if it gives "The" as a output, and if we continue this process, whole sequence will be wrong. The model is in the off track and is going to get punished for every subsequent word it generates. This makes learning slower and the model unstable.

Here Teacher Forcing comes, When we calculate error and found we are in off track we will replace it with others word. It makes faster the model.

Lots of teacher forcing approach were invented in recent years, we can use any of it.

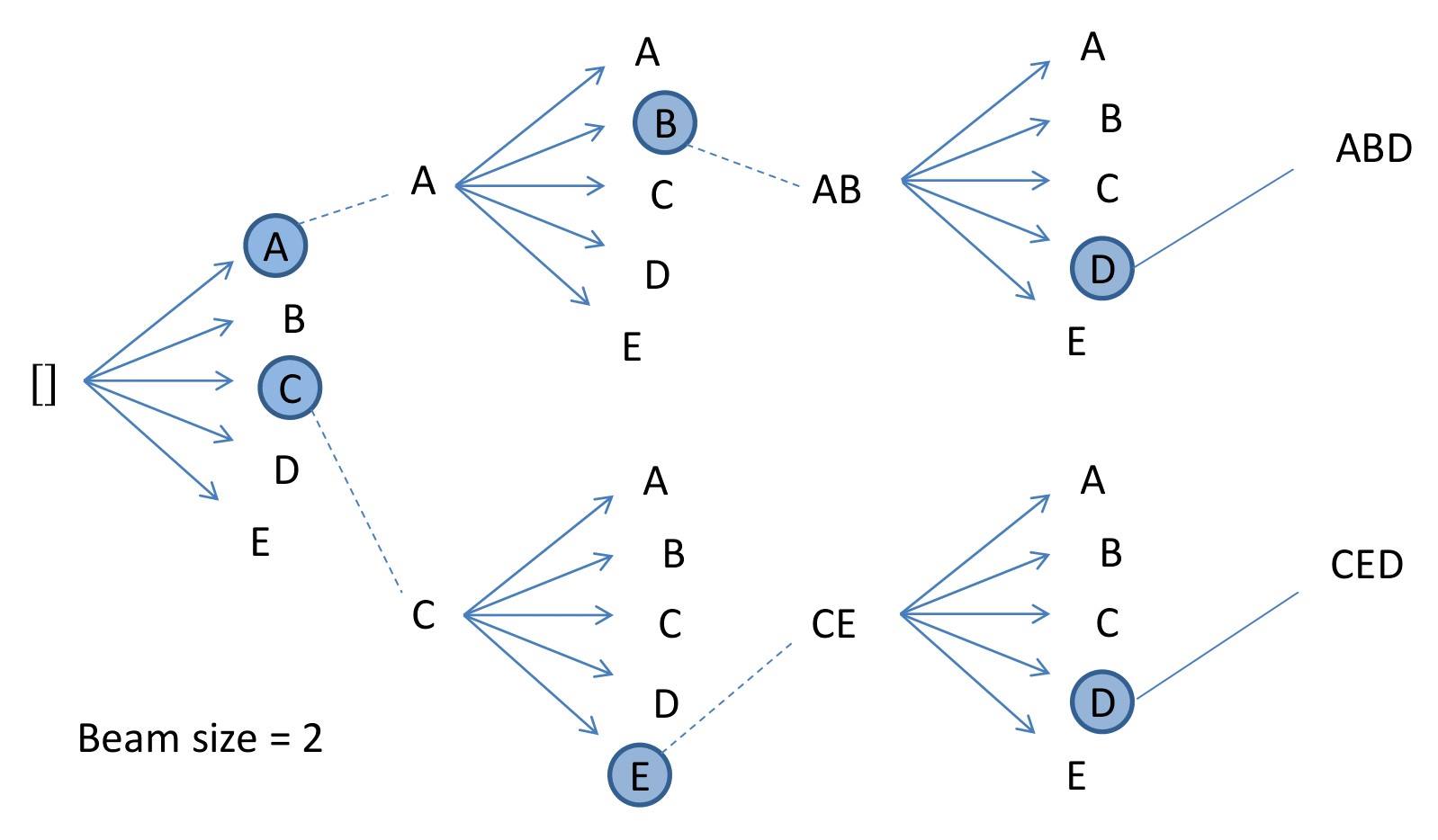

👉 Beam Search

Let's Think about, after calculating a output vector, how we can predict correct sentence. As like as Teacher forcing, If one word is incorrect, entire sequence can be incorrect. After using softmax function, If we take always highest value , it may be give wrong value. In greedy search, always take highest softmax value.

Instead of taking only maximum value we can take few value with highest softmax value. Suppose our Beam=3, We will take 3 value from output vector and make a bfs tree with it.

After taking each of them, we will calculate conditional probability with them in each time step. And we will take maximum probabilistic Sequence for our output.

👉 Introduction

Keyword extraction is tasked with the automatic identification of terms that best describe the subject of a document. Key phrases, key terms, key segments or just keywords are the terminology which is used for defining the terms that represent the most relevant information contained in the document.(source wiki). If you get good keyword from text, your answer will be more accurate. Maximum extractive and abstractive models depend on keyword Extraction.👉 Rules Based Keyword Extraction

Rules-based Keyword extraction basically conditional or pattern type. In 2008 Khoury, Richard, Fakhreddine Karray, and Mohamed S. Kamel published a paper about a rules-based model in keyword extraction using parts of speech hierarchy [1]. In this model, they describe, parts of speech have an important role in keyword extraction. They made a supervised learning model and a training corpus.

- words are split according to their parts of speech tag.

- words are divided into different categories.

- rules and condition check here which looks like a tree, every edge have a different condition.

- if the word goes to the leaf then we will take it as a keyword.

👉 Using support vector machine(SVM)

In machine learning, support vector machines (SVMs, also support vector networks) are supervised learning models with associated learning algorithms that analyze data used for classification and regression analysis. In 2006, Zheng and khuo made a keyword extraction model using SVM [2].

- Pre-processing: For a document, we conduct the sentence split, word tokenization and POS (part-of-speech) tagging by using GATE. Next, employ Linker to analyze the dependency relationships between words in each sentence. After that, we employ tri-gram to create candidate phrases and then filter the phrases whose frequencies are below a predefined threshold. We also exclude the common words in the stop-words list. We conduct gentle stemming by using WordNet. Specifically, we only stem the plural noun, gerund, and passive infinitive by their dictionary form. Finally, we obtain a set of ‘keyword candidate’ for later processing.

- Feature extraction: The input is a bag of words/phrases in a document. We make use of both local context information(POS Feature, Linkage Features, Contextual TFIDF Feature) and global context information(TFIDF Feature, First Occurrence Feature, Position Features) of a word/phrase in a document to define its features. The output is the feature vectors, and each vector corresponds to a word/phrase.

- Learning: The input is a collection of feature vector by step (2). We construct a SVM model that can identify the keyword. In the SVM model, we view a word/phrase as an example, the words/phrases labeled with ‘keyword’ as positive examples, and the other words/phrases as negative examples. We use the labeled data to train the SVM model in advance.

- Extraction: The input is a document. We employ the preprocessing and the feature extraction on it, and obtain a set of feature vectors. We then predict whether or not a word/phrase is a keyword by using the SVM model from step (3). Finally, the output is the extracted keywords for that document.

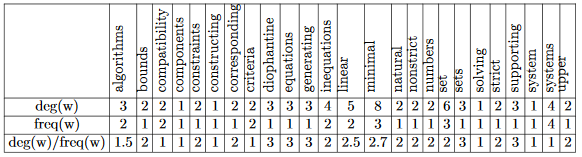

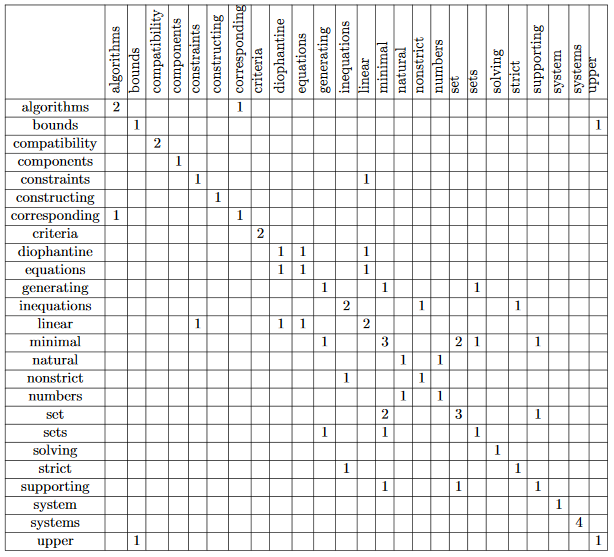

👉 Rake Algorithm

In 2010 Stuart Rose invented new techniques in keyword extraction name Rapid automatic keyword extraction(RAKE). It was one of the popular algorithms used in finding important keywords. In-text Summarization, this algorithm much popular in keyword extraction.Candidate keywords: Split the words by tokenizing them. Remove the stop word and delimiters. All other word is candidate words. Be careful about the position. Cause position matters when we rank them.

Keyword scores: Keyword Scores were calculated from the co-occurrence graph.

Suppose we have some word token after stop word remove.

Compatibility – systems – linear constraints – set – natural numbers – Criteria –

compatibility – system – linear Diophantine equations – strict inequations – nonstrict

inequations – Upper bounds – components – minimal set – solutions – algorithms –

minimal generating sets – solutions – systems – criteria – corresponding algorithms –

constructing – minimal supporting set – solving – systems – systems

For co-occurrence graph we need sorted, unique word. now it looks like,

algorithms – bounds – compatibility – components – constraints – constructing – corresponding –

criteria – diophantine – equations – generating – inequations – linear – minimal – natural –

nonstrict – numbers – set – sets – solving – strict – supporting – system – systems – upper

In co-occurrence we check for every i,j cell. if i'th row and j'th coloum are related or co-related,

we will increase count of this cell. Table 2.1 and Table 2.2 define us how it works.

Extracted keywords After candidate keywords are scored, the top T scoring candidates are selected as keywords for the document. We compute T as one-third the number of words in the graph.

👉 Bag of words

The bag-of-words model is a simplifying representation used in natural language processing and information retrieval (IR). In this model, a text (such as a sentence or a document) is represented as the bag (multiset) of its words, disregarding grammar and even word order but keeping multiplicity.The bag-of-words model is commonly used in methods of document classification where the (frequency of) occurrence of each word is used as a feature for training a classifier. An early reference to "bag of words" in a linguistic context can be found in Zellig Harris's 1954 article on Distributional Structure.[source: wiki] Bag of words process has two steps.

Tokenization: First we tokenize whole sentences. Vectors Creation: after doing the first step, we will make a vector for each sentence. Dividing sentences into words and creating a list with all unique words and also in alphabetical order. \ Let's take an example with three-sentence,

John likes to watch movies.

Mary likes movies too.

Mary also likes to watch football games.

Now after tokenization, our word list look like,

[also,football,games,john,likes,mary,movies,to,too,watch]

After that we need to compute sparse matrix. This sparse matrix represent the bag of words. In sparse matrix every cell represent, occurence of j'th word in i'th sentence.

👉 TF-IDF

TF-IDF value will be increased based on frequency of the word in a document. Like Bag of Words in this technique also we can not get any semantic meaning for words. \ But this technique is mostly used for document classification and also successfully used by search engines like Google, as a ranking factor for content.

Suppose we have three sentences.

A. This pasta is very tasty and affordable.

B. This pasta is not tasty and is affordable.

C. This pasta is very very delicious.

Let's consider each sentence as a document. Here also our first task is tokenization (dividing sentences into words or tokens) and then taking unique words.

👉 Word2vec

The Word2Vec model is used for learning vector representations of words called “word embeddings”. In the previous methods, there was no semantic meaning from words of corpus. Word2vec model takes input as a large size of the corpus and produces output to vector space. This vector space size may be in hundred of dimensionality. Each word vector will be placed on this vector space. word2vec uses 2 types of methods. There are

- Skip-gram

- CBOW (Continuous Bag of Words)

Here one more thing we have to discuss that is window size. window size basically 1-gram,bi-gram,tri-gream,N-gram model.

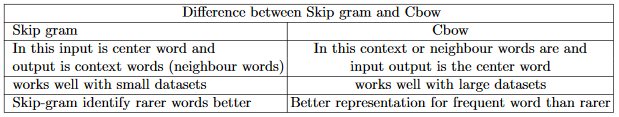

👉 Skip gram (word2vec p1)

In this method , take the center word from the window size words as an input and context words (neighbour words) as outputs. Word2vec models predict the context words of a center word using skip-gram method. Skip-gram works well with a small dataset and identifies rare words really well. In 2013 Mikolov first time told about this method [3].According to Fig,

- Both the input word wi and the output word wj are one-hot encoded into binary vectors x and y of size V.

- First, the multiplication of the binary vector x and the word embedding matrix W of size V×N gives us the embedding vector of the input word wi the i-th row of the matrix W.

- This newly discovered embedding vector of dimension N forms the hidden layer.

- The multiplication of the hidden layer and the word context matrix W' of size N×V produces the output one-hot encoded vector y.

- The output context matrix W' encodes the meanings of words as context, different from the embedding matrix W. NOTE: Despite the name, W' is independent of W, not a transpose or inverse or whatsoever.

👉 Cbow (word2vec p2)

The Continuous Bag-of-Words (CBOW) is another similar model for learning word vectors. It predicts the target word (i.e. “swing”) from source context words (i.e., “sentence should the sword”). Because there are multiple contextual words, we average their corresponding word vectors, constructed by the multiplication of the input vector and the matrix \emph{W}. Because the averaging stage smoothes over a lot of the distributional information, some people believe the CBOW model is better for small dataset.👉 Loss function (word2vec p3)

Both the skip-gram model and the CBOW model should be trained to minimize a well-designed loss/objective function. There are several loss functions we can incorporate to train these language models. like,- Full Softmax

- Hierarchical Softmax

- Cross Entropy

- Noise Contrastive Estimation (NCE)

- Negative Sampling (NEG)

👉 Difference between CBOW and skip-gram model(word2vec p4)

👉 Glove

GloVe stands for global vectors for word representation. It is an unsupervised learning algorithm developed by Stanford for generating word embeddings by aggregating global word-word co-occurrence matrix from a corpus. The resulting embeddings show interesting linear substructures of the word in vector space. GloVe is essentially a log-bilinear model with a weighted least-squares objective. The main intuition underlying the model is the simple observation that ratios of word-word co-occurrence probabilities have the potential for encoding some form of meaning. In Glove we also create sparse matrix. It was directly involved in the probability matrix.For example, consider the co-occurrence probabilities for target words ice and steam with various probe words from the vocabulary. Here are some actual probabilities from a 6 billion word corpus:

👉 Fasttext

Compared to other word embedding methods, FastText (Mikolov et al., 2016) is a new approach which can generate competitive results.[4] [5] This technique is much popular.

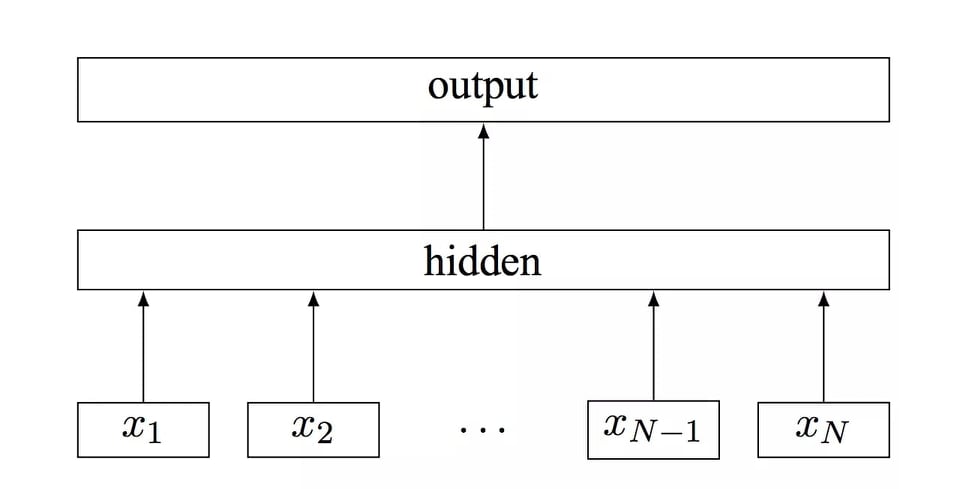

According to the main paper, the model is a simple neural network with only one layer. The bag-of-words representation of the text is first fed into a lookup layer, where the embeddings are fetched for every single word. Then, those word embeddings are averaged, so as to obtain a single averaged embedding for the whole text. At the hidden layer we end up with (n_words x dim) number of parameters, where dim is the size of the embeddings and n_words is the vocabulary size. After the averaging, we only have a single vector which is then fed to a linear classifier: we apply the softmax over a linear transformation of the output of the input layer. The linear transformation is a matrix with {\bf (dim x n_output)}, where {\bf n_output} is the number output classes. In the original paper, the final log-likelihood is:

where,

👉 Introduction

Instead of dealing with words, we can directly work with the sentence. how? Sentence embedding is the answer. Sentence embedding also makes semantic information in vector.

Suppose we have two sentences, 'I don’t like crowded places', 'However, I like one of the world’s busiest cities, New York'. Here, How can we make the machine draw the inference between ‘crowded places’ and ‘busy cities’?

Word embedding can't do that. But we can do it by sentence embedding. There are lots of techniques in recent years. We will talk about a few of them.

- Doc2Vec

- SentenceBERT

- InferSent

- Universal Sentence Encoder

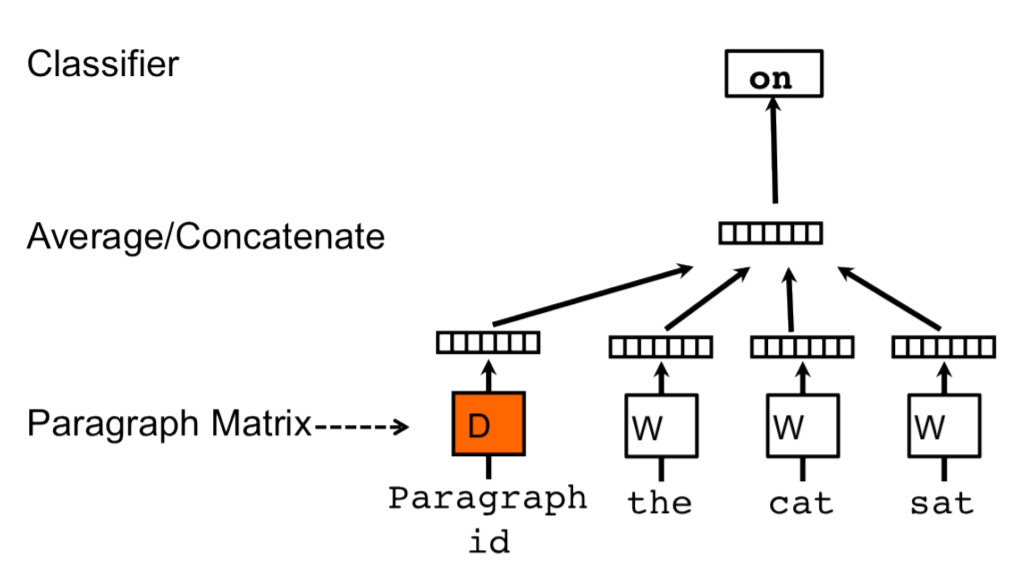

👉 Doc2vec

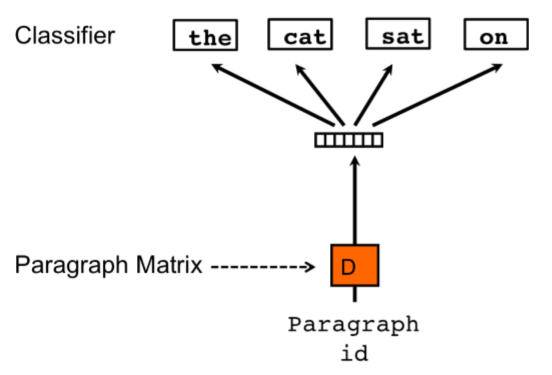

An extension of Word2Vec, the Doc2Vec embedding is one of the most popular techniques out there. Introduced in 2014, it is an unsupervised algorithm and adds on to the Word2Vec model by introducing another ‘paragraph vector’ by T mikolov [6]. In 2015 it was improved by Andrew [7]. Also, there are 2 ways to add the paragraph vector to the model. one is PVDM and another PVDOBW.

👉 PVDM(Distributed Memory version of Paragraph Vector)

We assign a paragraph vector sentence while sharing word vectors among all sentences. Then we either average or concatenate the (paragraph vector and words vector) to get the final sentence representation.

If you notice, it is an extension of the Continuous Bag-of-Word type of Word2Vec where we predict the next word given a set of words. It is just that in PVDM, we predict the next sentence given a set of sentences.

👉 PVDOBW( Distributed Bag of Words version of Paragraph Vector)

PVDOBW is the extention of Skip-gram model in Word2vec. Here, we just sample random words from the sentence and make the model predict which sentence it came from(a classification task).

PVDM is more than enough for most tasks.But we can use both parallelly.

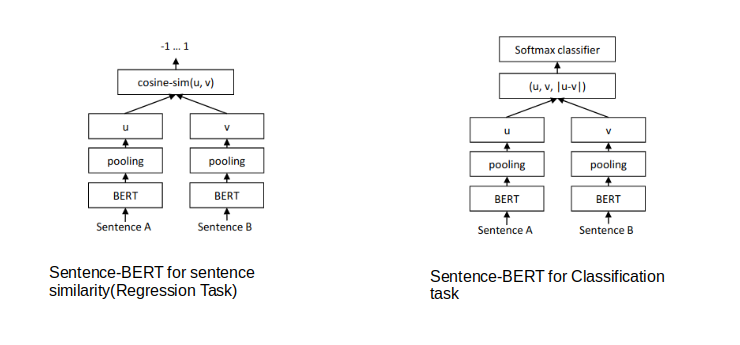

👉 SentenceBERT

Currently, the leader among the pack, SentenceBERT was introduced by Reimers in 2018 [8] and immediately took the pole position for Sentence Embeddings. At the heart of this BERT-based model, there are 4 key concepts:

- Attention

- Transformers

- BERT

- Siamese Network

we will discuss BERT,transformers,Attention model later.

Sentence-BERT uses a Siamese network like architecture to provide 2 sentences as an input. These 2 sentences are then passed to BERT models and a pooling layer to generate their embeddings. Then use the embeddings for the pair of sentences as inputs to calculate the cosine similarity.

Suppose we have some sentences.

- I ate dinner.

- We had a three-course meal.

- Brad came to dinner with us.

- He loves fish tacos.",

- In the end, we all felt like we ate too much.

- We all agreed; it was a magnificent evening.

Now, if we want to find the similarity between these sentences with "I had pizza and pasta". SentenceBert will give us.

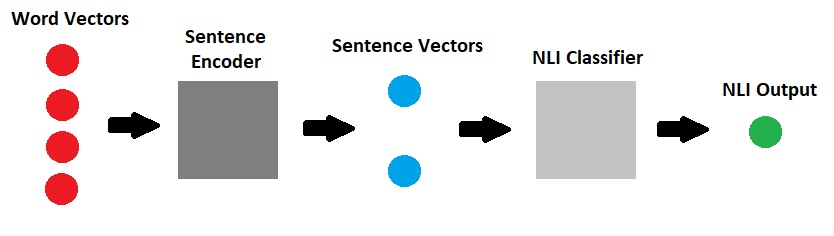

👉 InferSent

InferSent is a sentence embeddings method that provides semantic sentence representations invented by A Conneau in 2017 [9]. It is trained on natural language inference data and generalizes well to many different tasks.

In this model,they examine standard recurrent models such as LSTMs and GRUs, for which we investigate mean and maxpooling over the hidden representations;

The architecture consists of 2 parts:

- One is the sentence encoder that takes word vectors and encodes sentences into vectors

- Another, NLI classifier that takes the encoded vectors in and outputs a class among entailment, contradiction and neutral.

In the author paper, four types of sentence encoder were discussed.

👉 InferSent part1: Sentence Encoder(LSTM and GRU)

First, and simplest, encoders apply recurrent neural networks using either LSTM or GRU modules, as in sequence to sequence encoders. For a sequence of T words (w1, . . . , wT ), the network computes a set of T hidden representations h1, . . . , hT , with ht = -> LSTM(w1, . . . , wT ) (or using GRU units instead). A sentence is represented by the last hidden vector, hT.

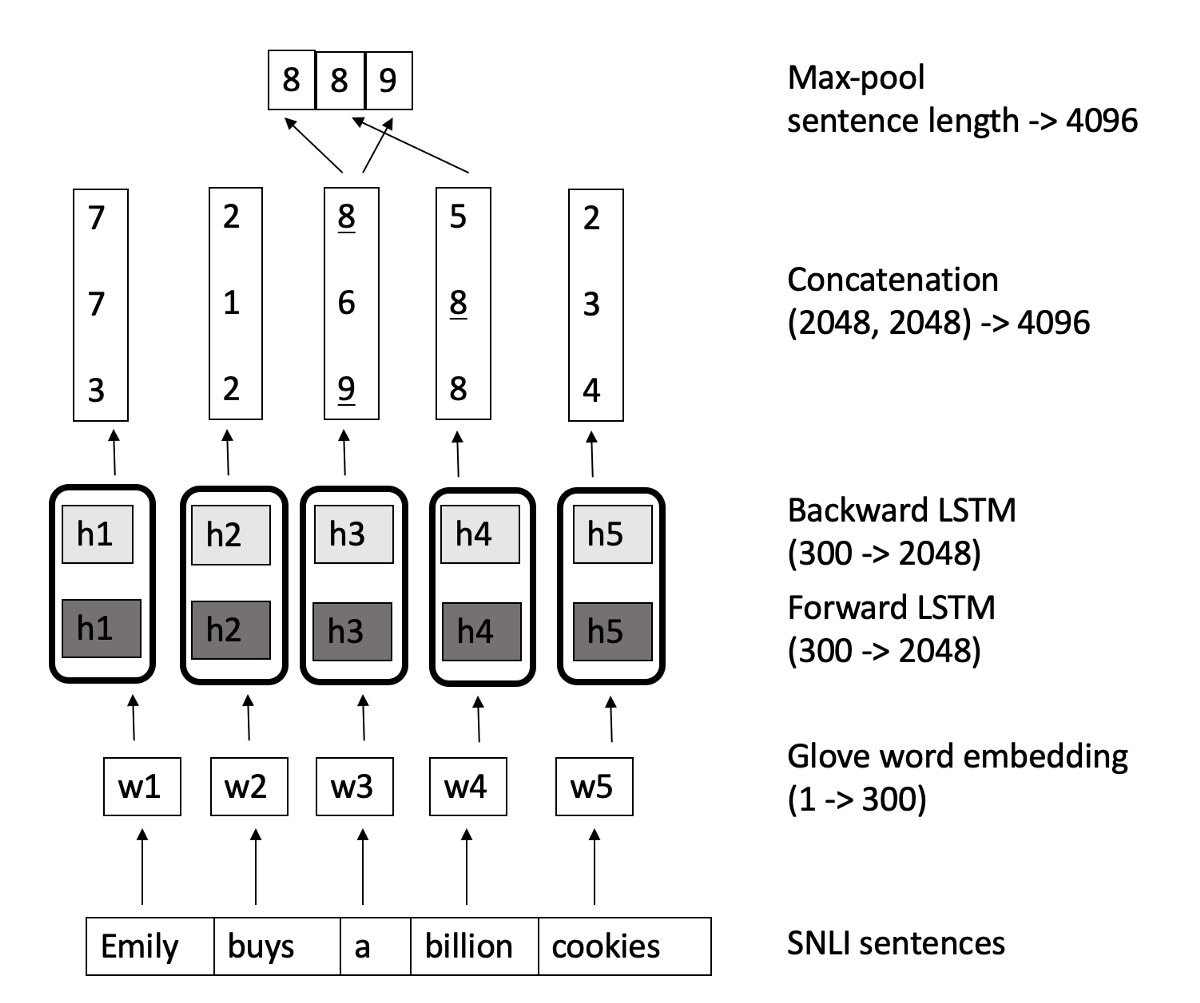

👉 InferSent part2: Sentence Encoder (BiLSTM with max/mean pooling)

It’s a bi-directional LSTM network which computes n-vectors for n-words and each vector is a

concatenation of output from a forward LSTM and a backward LSTM that read the sentence in

opposite direction. Then a max/mean pool is applied to each of the concatenated vectors to

form the fixed-length final vector.

👉 InferSent part3: Sentence Encoder (Self-attentive network)

The self-attentive sentence encoder uses an attention mechanism over the hidden states of a BiLSTM to generate a representation u of an input sentence. The attention mechanism is defined as,

Where are the output hidden

vectors of BiLSTM. These are fed to an affine transformation

which outputs a set of keys

.

The

represent the score of similarity

between the keys and learned context query vector

.

These weights are used to produce the final representation u,

Which is a linear combination of the hidden vectors.

👉 InferSent part4: Sentence Encoder( Hierarchical ConvNet)

One of the currently best performing models on classification tasks is a convolutional architecture termed AdaSent, which concatenates different representations of the sentences at a different level of abstractions. The hierarchical convolutional network introduced in the paper is inspired by that and comprise 4 convolutional layers. At every layer, max-pooling of the feature maps is done to obtain representation. The final sentence embedding is represented by a concatenation of the 4 max-pooled representations.

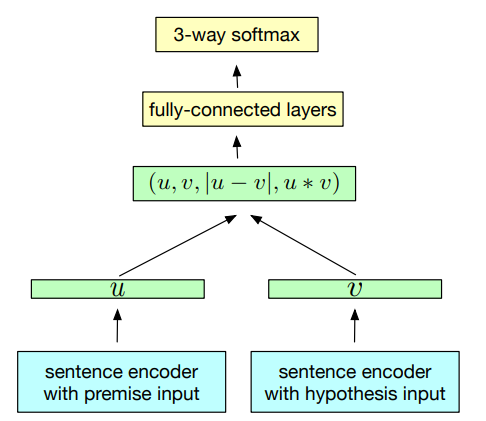

👉 InferSent part5: Natural Language Inference Classifier(NLI)

This section discusses the inference classifier network which takes these sentence embeddings and predicts the output label.

After the sentence vectors are fed as input to this model, 3 matching methods are applied to extract relations between the text, u and hypothesis, v –

After the sentence vectors are fed as input to this model, 3 matching methods are applied to extract relations between the text, u and hypothesis, v –

- concatenation of the two representations (u, v)

- element-wise product (u * v)

- and, absolute element-wise difference (|u - v|)

The resulting vector captures information from both the text, u and the hypothesis, v, and is fed into a 3-class classifier( entailment, contradiction and neutral) consisting of multiple fully connected layers followed by a softmax layer.

👉 Universal Sentence Encoder

One of the most well-performing sentence embedding techniques right now is the Universal Sentence Encoder. And it should come as no surprise from anybody that it has been proposed by Google. The key feature here is that we can use it for Multi-task learning. This means that the sentence embeddings we generate can be used for multiple tasks like sentiment analysis, text classification, sentence similarity, etc, and the results of these asks are then fed back to the model to get even better sentence vectors that before.

This technique was invented by google and authored Cer and Daniel in 2018 [10].

In this model, they use two types of encoder. We can use any of them.

- Transformer

- Deep Averaging Network (DAN)

Both of these models are capable of taking a word or a sentence as input and generating embeddings for the same. The following is the basic flow:

-

Tokenize the sentences after converting them to lowercase

-

Depending on the type of encoder, the sentence gets converted to a 512-dimensional vector

- If we use the transformer, it is similar to the encoder module of the transformer architecture and uses the self-attention mechanism.

- The DAN option computes the unigram and bigram embeddings first and then averages them to get a single embedding. This is then passed to a deep neural network to get a final sentence embedding of 512 dimensions.

-

These sentence embeddings are then used for various unsupervised and supervised tasks like Skipthoughts, NLI, etc. The trained model is then again reused to generate a new 512 dimension sentence embedding.

Similarity of two vector:

👉 Ok i learn about these topics but can't understand how they use these?

Yeah. It's really tough to understand, how they used these topics in Text summarization models. In my opinion, Maximum models used these topics as a sub-portion. So we need to read a paper sequentially and learn which portion we will do with these topics.

👉 Types of Text Summarization

We can divide all summarization model into three categories.

- Extractive Summarization

- Abstractive Summarization

- Combined Approach

👉 Extractive Summarization

Extractive summarization means identifying important sections of the text and generating them verbatim producing a subset of the sentences from the original text. Here, content is extracted from the original data, but the extracted content is not modified in any way.So many Extractive summarization techniques were invented after 2004. Like,

- LexRank (Erkan and Radev, 2004)

- TextRank (Mihalcea and Tarau, 2004)

- RegSum system (Hong and Nenkova, 2014)

- TextTeaser(Jolo Balbin,2013)

- Summarization by LSA(Ferda and Ilyas,2011)

- Quasi-Synchronous Grammar base(Woodsend and Yansong Feng,2010)

- Neural network Base extractive

- An attention-based encoder-decoder(Cheng and Lapata (2016))

👉 Abstractive Summarization

Abstractive summarization is much advanced from extractive and also much good accuracy. It is more fluent and natural summaries.The abstractive model takes advantage of deep learning. In this time so many new models coming with high accuracy.

- Encoder-decoder Model

- CNN and RNN model

- LSTM model

- Traning model like MLE

- Seq to seq model

- Summarization using sentence Compression

- Abstractive Text Summarization using Reinforcement Learning

- Summarization using GRU or BGRU

- Attention base model

- Pointer base model

- Transformer base model like bart

Many Models was invented in recent years using these techniques.

👉 Extractive and Abstractive Combined Approach

As our data like news, articles, blogs, papers, etc increasing day by day. Also out content is much bigger than the old one. The abstractive models take lots of time in computing. The extractive models are much faster. That's why nowadays researchers make a combined way.

- Focusing importance sentences and use abstractive model (Abigail See, Liu, Christopher,2017)

- Query Focused Abstractive Summarization (Tal Baumel, Matan Eyal, Michael Elhadad,2018)

- Fast Abstractive Summarization with Reinforce-Selected Sentence Rewriting (chen and bishal,2018)

👉 Graph Base Extractive Summarization

Graph Base Extractive Summarization is the oldest technique. In 2004, Mihalcea and Rada discussed graph base model textrank. This technique was based on PageRank.

In TextRank, We just make relations with sentences by Some processing. we link sentence to sentence. Every Sentence is treated like a node. We can make the graph with word unit.

graph clustering is the major process here. An overall Graph bases model structure:

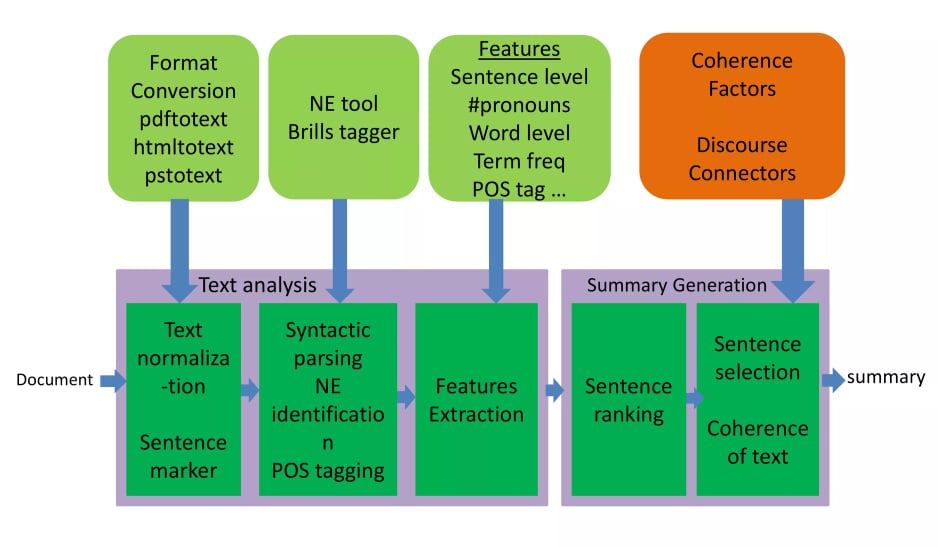

👉 Feature Base Extractive Summarization

In Feature base Model, Sentence are ranked by some features.

- Position of the sentence in input document

- Presence of the verb in the sentence

- Length of the sentence

- Term frequency(TF)

- Named entity tag NE

- Font style

Basically these features are used in feature based model was developed by

Jagadeesh in 2005 [12]. Feature Base Extraction model is better than any other

extraction model. Cause, we can add deep learning feature or another good feature for

ranking sentences.

👉 Topic Base Extractive Summarization

The topic base model calculates the topic of the document and evaluate each sentences by what kinds of topics are included (the "main" topic is highly evaluated when scoring the sentence). Latent Semantic Analysis (LSA) is usually used to detect the topic. It's based on SVD (Singular Value Decomposition). A good representation about text summarization with LSA was given by Ozsoy(2011) [13]. In this paper they used three step.

Step 1(Input Matrix Creation):

The way in which an input matrix is created is very important for summarization, since it affects the

resultingmatrices calculated with SVD. As already mentioned, SVD is a complex algorithm and its complexity

increases withthe size of input matrix, which degrades the performance. In order to reduce the matrix size,

rows of the matrix, i.e. thewords, can be reduced by approaches like removing stop words, using the roots of words

only, using phrases instead ofwords and so on. Also, cell values of matrix can change the results of SVD. There are

different approaches to filling outthe cell values. These approaches are as follows:

- Frequency of word: the cell is filled in with the frequency of the word in the sentence.

- Binary representation: the cell is filled in with 0/1 depending on the existence of a word in the sentence.

- Tf-idf (Term Frequency-Inverse Document Frequency): the cell is filled in with the tf-idf value of the word.A higher tf-idf value means that the word is more frequent in the sentence but less frequent in the wholedocument. A higher value also indicates that the word is much more representative for that sentence thanothers.

- Log entropy: the cell is filled in with the log-entropy value of the word, which gives information on how infor-mative the word is in the sentence.

- Root type: the cell is filled in with the frequency of the word if its root type is a noun, otherwise the cell valueis set to 0.

- Modified Tf-idf: this approach is proposed in Ozsoy et al. [3], in order to eliminate noise from the input matrix.The cell values are set to tf-idf scores first, and then the words that have scores less than or equal to the averageof the row are set to 0.

Step 2(Singular Value Decomposition (SVD)):

SVD is an algebraic method that can model relationships among words/phrases and sen-tences.

In this method, the given input matrix Ais decomposed into three new matrices as follows:

where A is the input matrix (m * n); U is words ×extracted concepts (m * n); Prepresents scaling values, diagonal descending matrix (n * n); and V is sentences ×extracted concepts (n * n).

Step 3(Sentence Selection): using the results of SVD, different algorithms are used to select important sentences. Some of them are discussed in below paper:

- Gong and Liu (2001) [14]

- Steinberger, Josef, and Karel Jezek (2004) [15]

- Murray et al. (2005) [16]

- Cross Method by Steinberger & Jezek(2008) [17]

- topic method

👉 BSTM base Extractive Summarization

BSTm means Bayesian Sentence-based Topic Models. In topic modeling of text documents,

the goal is to infer the words related to a certain topic and the topics discussed in a certain document,

based on the prior analysis of a corpus of documents. It is possible with the help of Bayesian inference that calculates

the probability of an event based on a combination of common sense assumptions and the outcomes of previous related events.

First, They took a single Document

where denotes the model parameter for document d,

denotes the sequence of words, i.e. the content of the document. W is the

vocabulary.

Now we need to calculate

. We will Calculate it based on different topic.

where T is the set of topics. Here, we assume that given a topic, generating words is independent from the document, i.e.

Instead of freely choosing topic unigram models, we further assume that topic unigram models are mixtures of some existing base unigram models, i.e.

where S is the set of base unigram models. Here, we use sentence language models as the base models. One benefit of this assumption is that each topic is represented by meaningful sentences, instead of directly by keywords.

👉 Grammar Base Extractive summarization

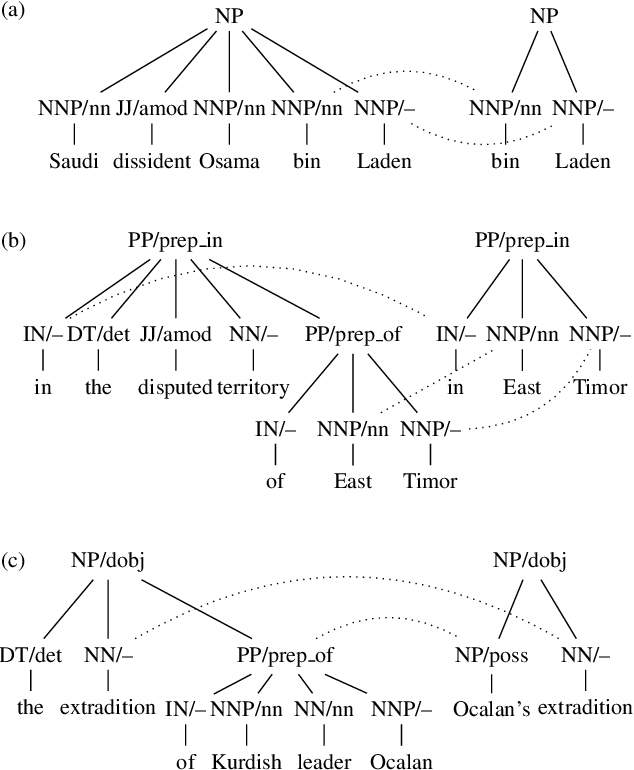

The grammar base model parses the text and constructs a grammatical structure, then select/reorder substructures. In 2010, Woodsend and Kristian told in their paper how grammatical structure helps us to build summarization [18]. They build every sentences into a grammatical structure and reduces length.

In this figure, you can see how they reduces 'Saudi dissident Osama bin Laden' to bin laden. Another example "in the disputed territory of East Timor" to "in East Timor". Last one is "the extradition of Kurdish leader Ocalan" to "Ocalan's extradition".

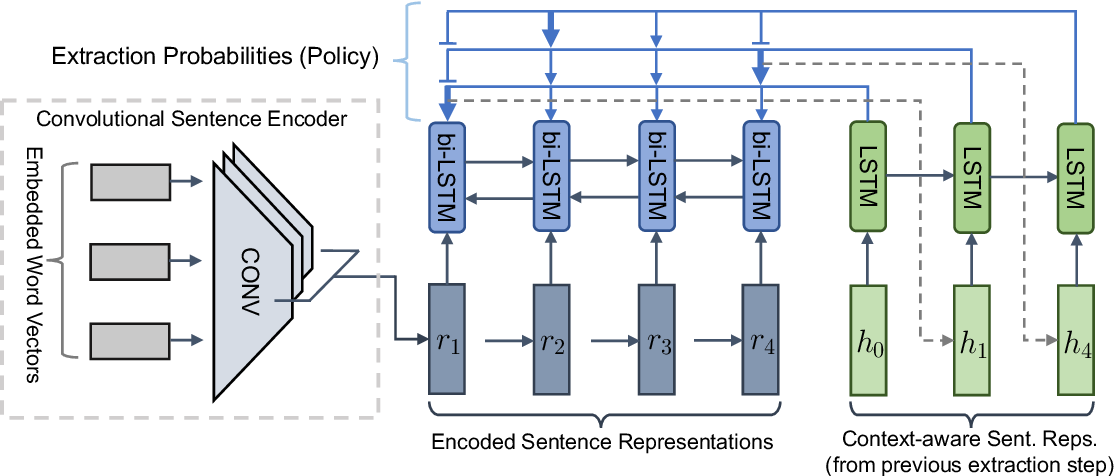

👉 Neural network base Extractive Summarization

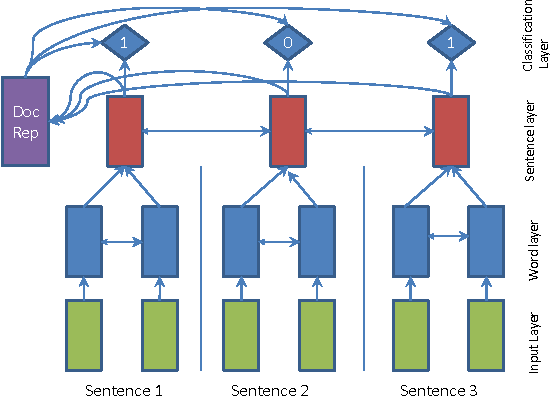

In 2016, Nallapati and Ramesh proposed an extractive model by Recurrent Neural Network(RNN) and named it "SummaRuNNer" [19]

SummerRunner basically a two-layer RNN based sequence classifier. The bottom layer operates at word level within each sentence, while the top layer runs over sentences. Double-pointed arrows indicate a bi-directional RNN. The top layer with 1’s and 0’s is the sigmoid activation based classification layer that decides whether or not each sentence belongs to the summary. The decision at each sentence depends on the content richness of the sentence, its salience with respect to the document, its novelty with respect to the accumulated summary representation and other positional features

👉 Encoder Decoder Abstractive Model with RNN

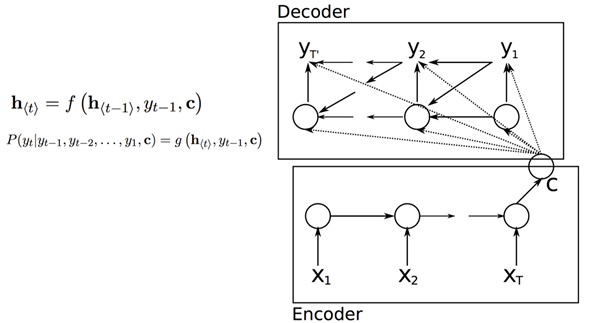

You can see the Dictionary Section, What is RNN and Encoder Decoder Model. We will now discuss Encoder Decoder Model with RNN. Cho and Kyunghyun in 2014 [22] showed us, how we can encode and decode sequential context by RNN. We can learn whole input by RNN and. After encode we will get a hidden layer. From this Hidden Layer we will decode the Output. RNN is good for unsupervised machine Learning. In 2009 Wang Proposed it [23].

RNN did a great job in encoder decoder model. Lots of encoder-decoder model with RNN was build in last few years. we will see them later.

👉 Abstractive Summarization with Attentive Recurrent Neural Networks

This model is another modified version of Encoder decoder model with rnn. Difference between two of them is in Encoder machanism.

This model was proposed by Alexander in 2016 [24]. In this model they used Conditional RNN.

Let x denote the input sentence consisting of a

sequence of M words x = ], where each word

is part of vocabulary V, of size

|V| = V . Our task is to generate a target sequence

y =

], of N words, where N < M,

such that the meaning of x is preserved: y = argmax(y)

, where y is a random variable denoting a sequence of N words.

Typically the conditional probability is modeled by a parametric function with parameters

:

. Training involves finding the

which maximizes the conditional probability of

sentence-summary pairs in the training corpus.

{\bf Recurrent Decoder: } The above conditional is modeled using an RNN:

where is the hidden state of the RNN:

Final form of RNN Model is,

where is the sigmoid function and

is the softmax function. So this is not like LSTM Long process.

Attentive Encoder:

for an input sentence x we denote by the d

dimensional learnable embedding of the i-th word (

). In addition the position i of the word

is also associated with a learnable embedding

of size d

). Then the full embedding for

i-th word in x is given by

. Let us denote by

)

a learnable weight matrix

which is used to convolve over the full embeddings

of consecutive words. Let there be d such matrices

The output of convolution is given

by:

where is the j-th column of the matrix

. Thus

the d dimensional aggregate embedding vector

is

defined as

= [

, . . . ,

]. Note that each word

in the input sequence is associated with one aggregate embedding vector

.

The vectors

can be

seen as a representation of the word which captures

the position in which it occurs in the sentence and

also the context in which it appears in the sentence.

In our experiments the width q of the convolution

matrix

was set to 5. To account for words at the

boundaries of x we first pad the sequence on both

sides with dummy words before computing the aggregate vectors

’s.

Given these aggregate vectors of words, we compute the context vector

(the encoder output) as:

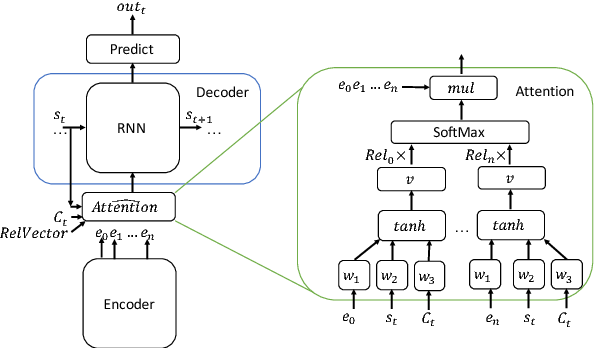

👉 Neural Attention Model

Neural Attention model is the combined of attention model and BRNN. This model was indtoduced by Alexander at 2017 [28].

In this model, We use attention based encoder for finding important sentences.

Here,

👉 Sequence-to-sequence RNNs and Beyond

This model was introduced by Nallapati at 2016. In this model they focused on all type of seq to seq model problem. example:

- In sequence to sequence model we need to train large vocabulary. But here we will train a subset of vocabulary.\ Large Vocab Trick, instead of softmax over the entire vocabulary, softmax over the vocabulary from the source document as well as the top frequent words in the vocabulary

- In other model, For finding important keyword , we need to train vocabulary (embedding layer). But here, they focused on TF-IDF , Pos tag, Position, NER etc. what is the benefit? of course much faster than neural network.

- In seq to seq model, we don't handle unknown or rare word. But in this model we handle it by pointer or copy from original text.

- For long term dependency, they used here attention mechanism.

👉 Summarization with Pointer-Generator Networks

This model is similar to Seq-to-Seq model with RNNs and beyond. This model was proposed by see at 2017 [32]. This model also reduced Seq to Seq model problems.

In this model they Pointed two problem in seq to seq attention model.

-

Unknown and rare word problem: When we found unknown word but important, we marked it as 'UNK'. For that reason we didn't get better result in seq to seq model. In this model they used pointer generator for overcome this problem. How? In pointer generator network we will directly pass unknown word. It allows both copying words via pointing, and generating words from a fixed vocabulary.

-

repeating problem: Repetition is a common problem for sequence-to-sequence models. example: "germany beat germany beat germany beat.." may be produced in seq to seq model. They used a coverage vector to overcome this problem. Coverage vector

basically sum of attention distributions over all previous decoder timesteps. For every present timestamp, they used it to change output state.

👉 LSTM and CNN base abstractive model

In 2019, Song proposed a Phrase encoder [29]. This encoder is based on Lstm-CNN. This encoder takes Phrases instead of words.The phrases are composed of several words to express the overall meaning, and it is a key concept in the field of phrase filling and phrase extraction.Phrases can be roughly divided into three major types: subject phrases, relational phrases and object phrases. for example in "Mary wants to go home", the subject phrase is "Mary", the relational phrase is "wants to go" and the object phrase is "home".

what is the difference between others model and this model? This model is the extended of Lstm based model. Here in input sequence they didn't give words. In this model, they used phrase encoder to encode words to phrase.

So this full model we will divide into three parts.

Phrase encoder:

We opted for a convolutional neural network model

for representing phrases for two reasons. Firstly, single layer CNNs can be trained

effectively (without any long-term dependencies in the model) and secondly, CNNs have been

successfully used for sentence-level classification tasks such as sentiment analysis. Let d

denote the dimension of word embedding, and s a document phrase consisting of a

sequence of n words ( ,…,

) which can be represented by a dense column matrix W(n * d)

. We apply a temporal narrow convolution between Wand a kernel K(c * d) of

width c as follows:

Where is the Hadamard Product followed by a sum over all elements.

denotes the j-th

element of the i-th feature map

and b is the bias. We perform max pooling over time to obtain

a single feature (the i-th feature) representing the phrase under the kernel K with width c:

Recurrent document encoder: This encoder type we already know. We can use here Bidirectional GRU or Bidirectional LSTM. Any type of RNN we can use here. We already discussed it.

Decoder: In decoder Section, based on the sequence-to-sequence model, this model divides decoder part into two different modes: generate mode and copy mode. In this mode, we calculate output conditional probability. We already know how seq to seq decoder works.

👉 Selective Encoding for Abstractive Sentence Summarization

This model also focused on input sequence. This model was introduced by Zhou at 2017 [33]. This model was extended seq to seq model by a selective gate. we can divide whole model with three part.

1. Sentence encoder: Its nothing but a bi-GRU network for reading input sequence like others model.

2. Selective Mechanism: Here comes the extended part of this model. For selective mechanism they used a selective gate here. the selective gate network in their model takes two vector

inputs, the sentence word vector and the sentence representation vector s. The sentence word

vector

is the output of the BiGRU encoder and represents the meaning and context information of

word

. The sentence vector s is used to represent the meaning of the sentence.

we concatenate the last forward hidden state and backward hidden state

as the sentence representation s:

For each time step i, the selective gate takes the

sentence representation s and BiGRU hidden hi as

inputs to compute the gate vector

where and

are weight matrices, b is the

bias vector,

denotes sigmoid activation function, and

is element-wise multiplication.

3. Summary Decoder: This decoder also a bi-GRU. They used the same process of seq-to-seq model or other Decoder.

👉 A Deep Reinforced Model for Abstractive Summarization

This model also reduced SeqtoSeq model problems. This model was introduced by Paulus at 2017 [34]. we can divide this model into 5 parts.

- INTRA-TEMPORAL ATTENTION ON INPUT SEQUENCE: This model used Nallapati's [31] model. in that model have shown that such an intra-temporal attention can reduce the amount of repetitions when attending over long documents. In this model they used bilinear function for attention. After that they normalize the sequence.

- INTRA-DECODER ATTENTION: A closely-related intra-RNN attention function was used here. For reducing repating pharse they used here also attention. If a sentence or word already written, they did a less attention to those words.

- TOKEN GENERATION AND POINTER: To generate a token, this decoder used either a token-generation softmax layer or a pointer mechanism to copy rare or unseen from the input sequence. they used a switch function that decides at each decoding step whether to use the token generation or the pointer.

- SHARING DECODER WEIGHTS: In addition to using the same embedding matrix

for the encoder and the decoder sequences, we introduce some weight-sharing between this embedding matrix and the

matrix of the tokengeneration layer.

- REPETITION AVOIDANCE AT TEST TIME: Another way to avoid repetitions comes from observation that in both the CNN/Daily Mail and NYT datasets, ground-truth summaries almost never contain the same trigram twice. Based on this observation, they forced their decoder to never output the same trigram more than once during testing.

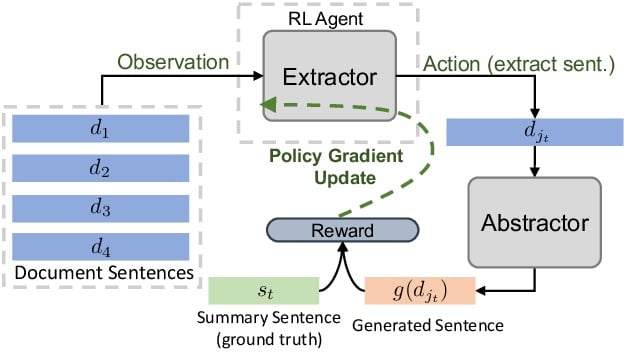

👉 Fast Abstractive Summarization with Reinforce-Selected Sentence Rewriting

Now, we will see a Extractive and abstractive combined approach. This approach was introduced by Chen at 2018 [35].

We can divide this model with two part,

1. Extractor Agent: In this section they used bi-directional LSTM for long term dependency between sentences. And they used here phrase as a input in these Bi-LSTM. We already know CNN(convolutional neural network) can produce phrase. They used here convolutional sentence encoder. now for sentence selection and to train a Pointer Network, they used another LSTM. For rare/unknown word they used copy machanism.

2. Abstractor Network: This is nothing but a attention based machine translation by Bahdanau [25]. We already how it works. So abstractor network here is a machine translation. After using this model we will get generated summary.

👉 Query Focused Abstractive Summarization

Query focused model basically a combination of seqtoseq model + attention model + Query Relevance . we will discuss Baumel's proposed model at 2018 [36].

I think we already guess how this model works by this figure We already know how seq to seq model with attention mechanism works. Now we will see how Query Relevance works here. They adopt the approach to QFS. The QFS task is split into two stages, a relevance model determines the extent to which passages in the source documents are relevant to the input query; and a generic summarization method is applied to combine the relevant passages into a coherent summary. In this paper, they consider very simple relevance models and do not attempt to optimize them – they compare relevance measures based on unigram overlap between query and sentences, and TF*IDF and Word2vec encodings with cosine distance between the query and sentences.

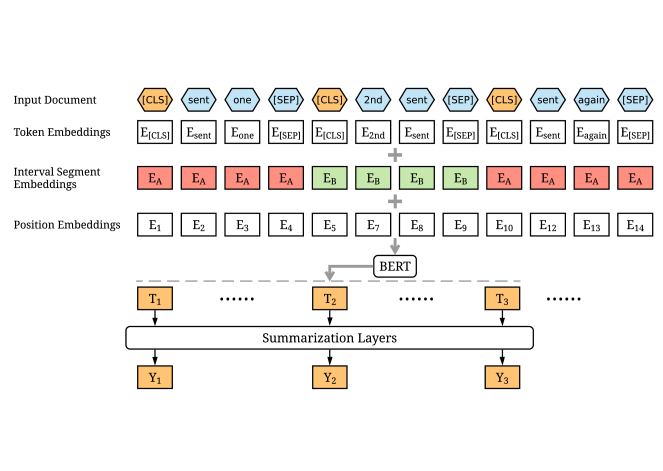

👉 Fine-tune BERT for Extractive Summarization

BERT is a pre-trained Transformer. We already know what is transformer. We will use BERT for extractive summarization. This model was introduced by Liu at 2019 [38].

BERT is a pre-trained Transformer. We just need to give positional Embedding and Token Embedding of our input sequence.

It will give us output sequence.

Encoding Multiple Sentences: As illustrated in , we insert a [CLS] token before each sentence and a [SEP] token after each

sentence.

Encoding Multiple Sentences: As illustrated in , we insert a [CLS] token before each sentence and a [SEP] token after each

sentence.

Interval Segment Embeddings: We use interval segment embeddings to distinguish multiple

sentences within a document. For or

conditioned on i is odd or even. For example, for

[

] we will assign

[

]. This is the main difference between "BERT" and

"BERT For Summarization". In BERT, there is no divider. All are

.

Fine-tuning with Summarization Layers: After getting Summarization layers, We can add "Linear Layer", "Transformer Layer", "RNN Layer" or "LSTM Layer". The more we add, more better result we will get.

👉 Pretraining-Based Natural Language Generation for Text Summarization(Abstractive)

This model is Bi-directional pre-trained base Summarization model. It was introduced by Zhang at 2019 [39].

Encoder: they simply use BERT as the encoder. It first maps the input sequence to word embeddings and then computes document embeddings as the encoder’s output.

Summary Draft Decoder: In the draft decoder, they first introduce BERT’s word embedding matrix to map the previous summary draft outputs

{ , . . . ,

} into embeddings vectors {

, . . . ,

} at

time step. Note that as the input sequence of the decoder is

not complete, they do not use the BERT network to predict the

context vectors here.

Then they introduce an N layer Transformer decoder to

learn the conditional probability

. Transformer’s

encoder-decoder multi-head attention helps the decoder learn

soft alignments between summary and source document. At

the t-th time step, the draft decoder predicts output probability conditioned on previous outputs and encoder hidden

representations.

Copy Mechanism: As some summary tokens are out-of-vocabulary words and occurs in input document, they incorporate copy mechanism based on the Transformer decoder. They use here copy gate with only two types of value [0,1].