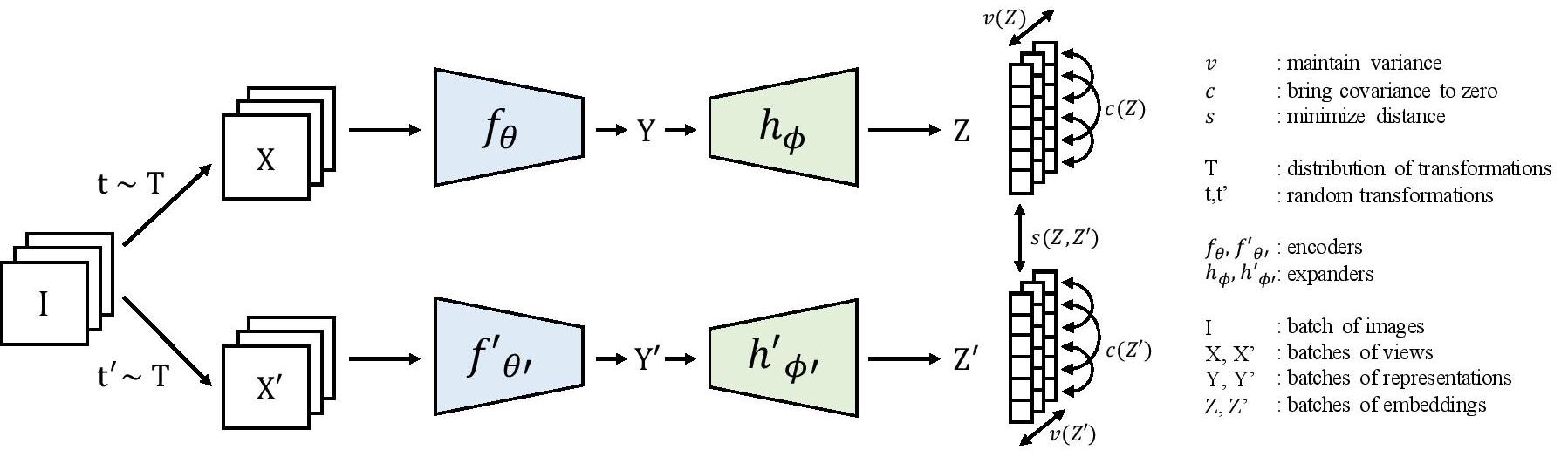

This repository provides a PyTorch implementation and pretrained models for VICReg, as described in the paper VICReg: Variance-Invariance-Covariance Regularization For Self-Supervised Learning

Adrien Bardes, Jean Ponce and Yann LeCun

Meta AI, Inria

You can choose to download only the weights of the pretrained backbone used for downstream tasks, or the full checkpoint which contains backbone and projection head weights.

| arch | params | accuracy | download | |
|---|---|---|---|---|
| ResNet-50 | 23M | 73.2% | backbone only | full ckpt |
| ResNet-50 (x2) | 93M | 75.5% | backbone only | full ckpt |
| ResNet-200 (x2) | 250M | 77.3% | backbone only | full ckpt |

import torch

resnet50 = torch.hub.load('facebookresearch/vicreg:main', 'resnet50')

resnet50x2 = torch.hub.load('facebookresearch/vicreg:main', 'resnet50x2')

resnet200x2 = torch.hub.load('facebookresearch/vicreg:main', 'resnet200x2')

Install PyTorch (pytorch.org) and download ImageNet. The code has been developed for PyTorch version 1.8.1 and torchvision version 0.9.1, but should also work with other versions.

To pretrain VICReg with MobileNetV2 on a single node with 4 GPUs for 100 epochs, run:

python -m torch.distributed.launch --nproc_per_node=4 main_vicreg.py --data-dir /scratch/datasets/imagenet/ --exp-dir /path/to/experiment/ --arch mobilenetv2 --epochs 100 --batch-size 256 --base-lr 0.3

To pretrain VICReg with submitit (pip install submitit) and SLURM on 4 nodes with 8 GPUs each for 1000 epochs, run:

python run_with_submitit.py --nodes 4 --ngpus 8 --data-dir $DATA_ILSVRC2012 --exp-dir experiment_slurm --arch mobilenetv2 --epochs 1000 --batch-size 2048 --base-lr 0.2
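Both launch commands above pass a single `--batch-size` and `--base-lr` while the number of GPUs differs. The sketch below shows how those values would map to per-GPU quantities under two assumptions that this README does not confirm: that `--batch-size` is the global batch size split evenly across all processes, and that the learning rate is scaled linearly with the global batch size relative to a reference of 256. The function names and the reference value are hypothetical and used only for illustration.

```python
def per_process_batch_size(global_batch_size, nodes, gpus_per_node):
    """Split a global batch evenly across all GPUs (one process per GPU).

    Assumption: --batch-size is a global value; check main_vicreg.py to confirm.
    """
    world_size = nodes * gpus_per_node
    if global_batch_size % world_size != 0:
        raise ValueError(
            f"batch size {global_batch_size} is not divisible by {world_size} processes"
        )
    return global_batch_size // world_size


def scaled_learning_rate(base_lr, global_batch_size, reference_batch_size=256):
    """Linear scaling rule: lr grows in proportion to the global batch size.

    Assumption: the reference batch size of 256 is illustrative, not taken
    from this README.
    """
    return base_lr * global_batch_size / reference_batch_size


if __name__ == "__main__":
    # Single node, 4 GPUs, --batch-size 256, --base-lr 0.3
    print(per_process_batch_size(256, nodes=1, gpus_per_node=4))
    print(scaled_learning_rate(0.3, 256))

    # SLURM, 4 nodes with 8 GPUs each, --batch-size 2048, --base-lr 0.2
    print(per_process_batch_size(2048, nodes=4, gpus_per_node=8))
    print(scaled_learning_rate(0.2, 2048))
```

Under these assumptions both configurations place 64 images on each GPU. Running the check before submitting a job catches a batch size that does not divide evenly across the requested GPUs.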

To evaluate a pretrained MobileNetV2 backbone on linear classification on ImageNet, run:

python evaluate.py --data-dir /scratch/datasets/imagenet/ --pretrained /path/to/checkpoint/mobilenetv2.pth --exp-dir /path/to/experiment/ --lr-head 0.02

To evaluate a pretrained ResNet-50 model with semi-supervised fine-tuning on 1% of ImageNet labels, run:

python evaluate.py --data-dir /path/to/imagenet/ --pretrained /path/to/checkpoint/resnet50.pth --exp-dir /path/to/experiment/ --weights finetune --train-perc 1 --epochs 20 --lr-backbone 0.03 --lr-classifier 0.08 --weight-decay 0

To evaluate a pretrained ResNet-50 model with semi-supervised fine-tuning on 10% of ImageNet labels, run:

python evaluate.py --data-dir /path/to/imagenet/ --pretrained /path/to/checkpoint/resnet50.pth --exp-dir /path/to/experiment/ --weights finetune --train-perc 10 --epochs 20 --lr-backbone 0.01 --lr-classifier 0.1 --weight-decay 0
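The two fine-tuning commands above differ only in `--train-perc` and the two learning rates. When scripting both runs, a small helper can keep those settings in one place. The sketch below uses only the flags and values that appear in the commands above. The helper name `finetune_command`, the `FINETUNE_SETTINGS` table, and the per-run experiment directory suffix are hypothetical.

```python
import shlex

# Learning rates copied from the two commands above, keyed by label percentage.
FINETUNE_SETTINGS = {
    1: {"lr_backbone": 0.03, "lr_classifier": 0.08},
    10: {"lr_backbone": 0.01, "lr_classifier": 0.1},
}


def finetune_command(train_perc, data_dir, pretrained, exp_dir):
    """Build the evaluate.py argument list for semi-supervised fine-tuning."""
    if train_perc not in FINETUNE_SETTINGS:
        raise ValueError(f"no settings listed for {train_perc}% of labels")
    settings = FINETUNE_SETTINGS[train_perc]
    return [
        "python", "evaluate.py",
        "--data-dir", data_dir,
        "--pretrained", pretrained,
        "--exp-dir", exp_dir,
        "--weights", "finetune",
        "--train-perc", str(train_perc),
        "--epochs", "20",
        "--lr-backbone", str(settings["lr_backbone"]),
        "--lr-classifier", str(settings["lr_classifier"]),
        "--weight-decay", "0",
    ]


if __name__ == "__main__":
    for perc in (1, 10):
        cmd = finetune_command(
            perc,
            data_dir="/path/to/imagenet/",
            pretrained="/path/to/checkpoint/resnet50.pth",
            # Hypothetical layout: one experiment directory per label fraction.
            exp_dir=f"/path/to/experiment/finetune_{perc}",
        )
        # Print the shell-quoted command; pass `cmd` to subprocess.run to launch it.
        print(shlex.join(cmd))
```

Returning an argument list rather than a single string means the command can be handed to `subprocess.run` directly, without shell quoting issues in the paths.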

This repository is built using the Barlow Twins repository.

This project is released under the MIT License, which allows commercial use. See LICENSE for details.

If you find this repository useful, please consider giving it a star ⭐ and citing it:

@inproceedings{bardes2022vicreg,

author = {Adrien Bardes and Jean Ponce and Yann LeCun},

title = {VICReg: Variance-Invariance-Covariance Regularization For Self-Supervised Learning},

booktitle = {ICLR},

year = {2022},

}