Yet another simple implementation of GAN and Wasserstein GAN using TensorFlow 2.

Following the advice in the original GAN paper [Goo+14], we train the generator G by maximizing ln[D(G(z))] instead of minimizing ln[1 - D(G(z))], which avoids vanishing gradients early in training.
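To make the vanishing-gradient argument concrete, here is a minimal, framework-free sketch (not the code in `main.py`). It assumes D(G(z)) = sigmoid(a), where `a` is the discriminator's logit on a fake sample, and compares the gradient of the two generator objectives with respect to `a`. The function names are hypothetical and chosen for illustration only.

```python
import math


def sigmoid(a):
    """Logistic function, mapping a logit to D(G(z)) in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-a))


def saturating_grad(logit):
    """Gradient w.r.t. the logit of ln(1 - D), the loss G would minimize.

    d/da ln(1 - sigmoid(a)) = -sigmoid(a), which goes to 0 as D -> 0.
    """
    return -sigmoid(logit)


def non_saturating_grad(logit):
    """Gradient w.r.t. the logit of -ln(D), i.e. maximizing ln(D).

    d/da [-ln sigmoid(a)] = sigmoid(a) - 1, which goes to -1 as D -> 0.
    """
    return sigmoid(logit) - 1.0


if __name__ == "__main__":
    # Negative logits model a discriminator that confidently rejects fakes.
    for logit in (-6.0, -2.0, 0.0, 2.0):
        print(
            f"logit={logit:+.1f}  D={sigmoid(logit):.4f}  "
            f"saturating={saturating_grad(logit):+.4f}  "
            f"non-saturating={non_saturating_grad(logit):+.4f}"
        )
```

When the discriminator easily rejects fake samples (very negative logit), the saturating objective passes almost no gradient back to G, while the non-saturating one keeps a gradient of magnitude close to 1.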

We implemented the WGAN-LP variant [PFL17] in place of the ordinary WGAN [ACB17]. The gradient penalty is evaluated at points obtained by perturbing the concatenated batch of real and fake data with Gaussian noise.
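The penalty described above can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the repository's implementation: the toy critic f(x) = 0.5 * ||x||^2 (whose gradient is simply x), the noise scale `sigma`, and all function names are hypothetical. In TensorFlow 2 the gradient norms would typically be obtained with `tf.GradientTape` instead of analytically. The sketch contrasts the one-sided WGAN-LP penalty, max(0, ||grad f|| - 1)^2, with the two-sided WGAN-GP penalty, (||grad f|| - 1)^2.

```python
import math
import random


def grad_norm_toy_critic(x):
    """Gradient norm of the toy critic f(x) = 0.5 * ||x||^2 (assumption).

    Its gradient is x itself, so the norm is just ||x||.
    """
    return math.sqrt(sum(v * v for v in x))


def perturb(batch, sigma, rng):
    """Add independent Gaussian noise N(0, sigma^2) to every coordinate."""
    return [[v + rng.gauss(0.0, sigma) for v in x] for x in batch]


def lp_penalty(grad_norms):
    """One-sided penalty (WGAN-LP): only norms above 1 are penalized."""
    return sum(max(0.0, g - 1.0) ** 2 for g in grad_norms) / len(grad_norms)


def gp_penalty(grad_norms):
    """Two-sided penalty (WGAN-GP): any deviation from 1 is penalized."""
    return sum((g - 1.0) ** 2 for g in grad_norms) / len(grad_norms)


rng = random.Random(0)                  # fixed seed for reproducibility
real = [[0.3, 0.4], [1.2, 1.6]]         # made-up "real" samples
fake = [[0.0, 0.1], [3.0, 4.0]]         # made-up "generated" samples

# Concatenate real and fake data, then perturb with Gaussian noise.
points = perturb(real + fake, sigma=0.1, rng=rng)
norms = [grad_norm_toy_critic(x) for x in points]

if __name__ == "__main__":
    print("LP penalty:", lp_penalty(norms))
    print("GP penalty:", gp_penalty(norms))
```

The penalty, multiplied by a weight, is added to the critic loss. Because the LP term ignores gradient norms below 1, it is never larger than the GP term on the same points.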

- To train GAN on MNIST: `python main.py --model GAN --dataset MNIST`
- To train WGAN on CIFAR-10: `python main.py --model WGAN --dataset CIFAR10`
- To see the available parameters and their explanations: `python main.py --help`

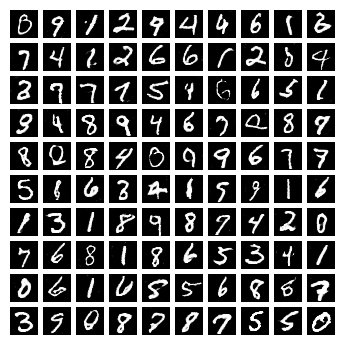

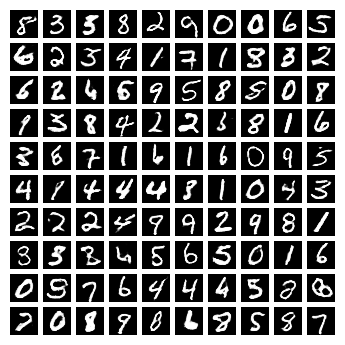

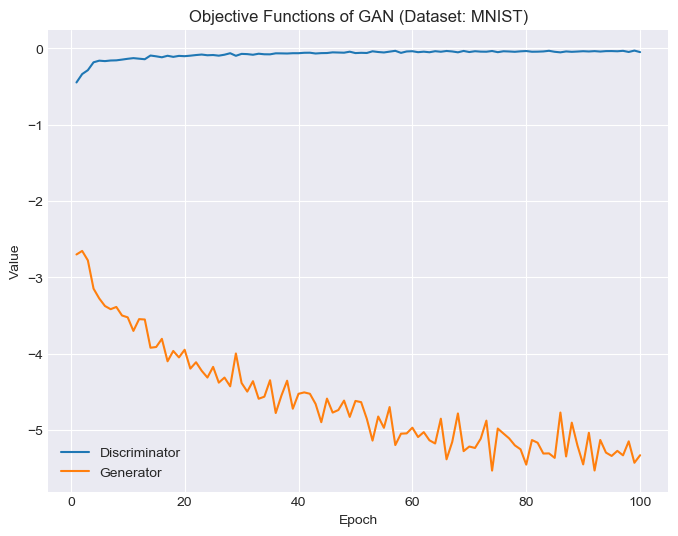

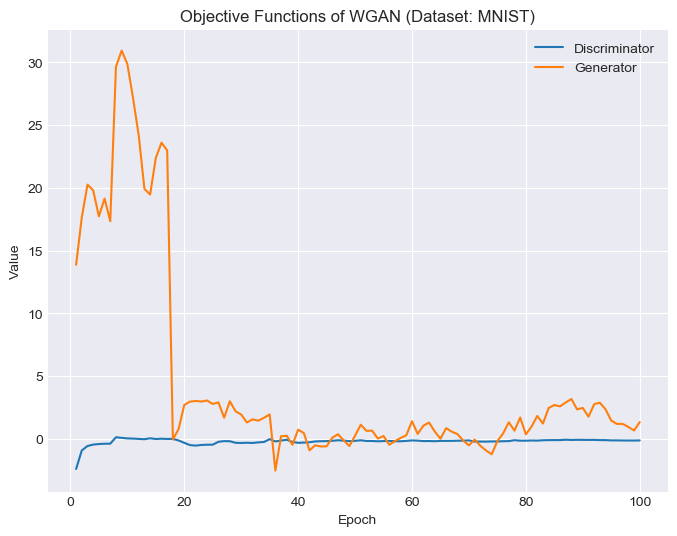

For the MNIST dataset, we picked 16 seeds for each model to showcase how the generators evolve. We also randomly generated 100 samples after 100 epochs of training. The (median) objective function values for GAN and WGAN are displayed at the end.

| GAN | WGAN |
|---|---|

Likewise, here we can see how the models evolve when training on the CIFAR-10 dataset. The generators turn out to be quite unstable on this data, so fine-tuning is needed to get decent results.

| GAN | WGAN |
|---|---|

The following tutorials/repos have provided immense help for this implementation:

- Denis Lukovnikov's WGAN-GP reproduction.
- Junghoon Seo's WGAN-LP reproduction.

References:

- Martin Arjovsky, Soumith Chintala, and Léon Bottou. Wasserstein GAN. 2017. arXiv: 1701.07875 [stat.ML].
- Ian Goodfellow et al. Generative Adversarial Nets. 2014. In: Advances in Neural Information Processing Systems 27.
- Ishaan Gulrajani et al. Improved Training of Wasserstein GANs. 2017. arXiv: 1704.00028 [cs.LG].
- Naveen Kodali et al. On Convergence and Stability of GANs. 2017. arXiv: 1705.07215 [cs.AI].
- Henning Petzka, Asja Fischer, and Denis Lukovnicov. On the regularization of Wasserstein GANs. 2017. arXiv: 1709.08894 [stat.ML].
- Tim Salimans et al. Improved Techniques for Training GANs. 2016. arXiv: 1606.03498 [cs.LG].