MatchZoo is a toolkit for text matching. It was developed to facilitate the design, comparison, and sharing of deep text matching models. It includes a number of deep matching methods, such as DRMM, MatchPyramid, MV-LSTM, aNMM, DUET, ARC-I, ARC-II, DSSM, and CDSSM, all implemented behind a unified interface. Potential tasks for MatchZoo include document retrieval, question answering, conversational response ranking, and paraphrase identification. We are always happy to receive code contributions, suggestions, and comments from MatchZoo users.

| Tasks | Text 1 | Text 2 | Objective |
|---|---|---|---|
| Paraphrase Identification | string 1 | string 2 | classification |
| Textual Entailment | text | hypothesis | classification |
| Question Answer | question | answer | classification/ranking |
| Conversation | dialog | response | classification/ranking |
| Information Retrieval | query | document | ranking |

MatchZoo is still under development. Until the first stable release (1.0), install it by cloning the repository and running the following commands:

git clone https://github.com/faneshion/MatchZoo.git

cd MatchZoo

python setup.py install

Running setup.py from the main directory installs the dependencies automatically.

For usage examples, you can run

python matchzoo/main.py --phase train --model_file examples/toy_example/config/arci_ranking.config

python matchzoo/main.py --phase predict --model_file examples/toy_example/config/arci_ranking.config

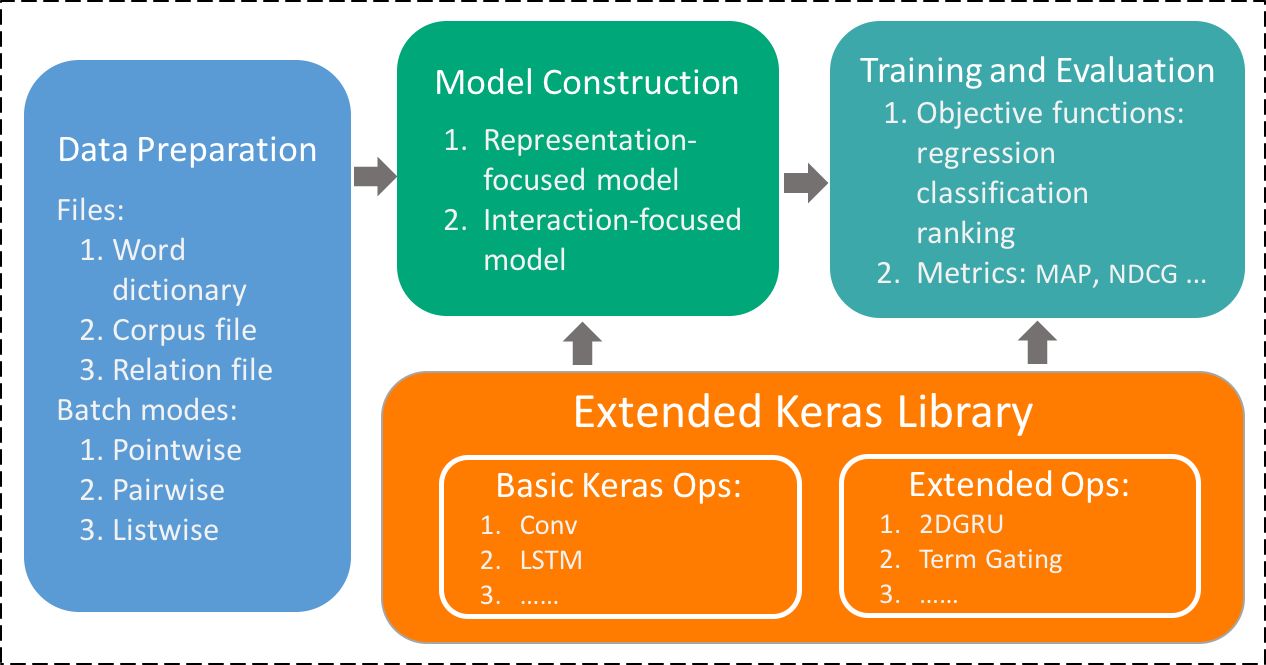

The architecture of the MatchZoo toolkit is described in the Figure in what follows,

There are three major modules in the toolkit: data preparation, model construction, and training and evaluation. These three modules are organized as a pipeline of data flow.

The data preparation module converts datasets for different text matching tasks into a unified format that serves as the input to deep matching models. Users provide datasets that contain pairs of texts along with their labels, and the module produces the following files.

- Word Dictionary: records the mapping from each word to a unique identifier called wid. Words that are too frequent (e.g. stopwords), too rare or noisy (e.g. fax numbers) can be filtered out by predefined rules.

- Corpus File: records the mapping from each text to a unique identifier called tid, along with a sequence of word identifiers contained in that text. Note here each text is truncated or padded to a fixed length customized by users.

- Relation File: stores the relationship between two texts; each line contains a pair of tids and the corresponding label.

- Detailed Input Data Format: a detailed explanation of the input data format can be found in MatchZoo/data/example/readme.md.
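The three files described above can be illustrated with a minimal sketch. This is not MatchZoo's actual preprocessing code: the function name `prepare`, the `T0`-style tids, the whitespace tokenizer, and the use of wid 0 for padding are all assumptions made for illustration. The authoritative format is the one documented in MatchZoo/data/example/readme.md.

```python
from collections import Counter

def prepare(pairs, max_len=5, min_freq=1, stopwords=frozenset()):
    """Build a word dictionary, corpus, and relation list from (text1, text2, label) triples.

    Hypothetical helper for illustration; not part of MatchZoo.
    """
    # Count word frequencies over all texts so that rare words can be filtered.
    freq = Counter(w for t1, t2, _ in pairs for t in (t1, t2) for w in t.lower().split())

    # Word dictionary: word -> wid. wid 0 is reserved for padding (an assumption).
    word_dict = {}
    for w in sorted(freq):
        if freq[w] >= min_freq and w not in stopwords:
            word_dict[w] = len(word_dict) + 1

    corpus = {}       # tid -> fixed-length list of wids
    text_to_tid = {}  # text -> tid, so identical texts share one identifier
    relations = []    # (tid1, tid2, label)

    def add_text(text):
        if text not in text_to_tid:
            tid = "T%d" % len(text_to_tid)
            text_to_tid[text] = tid
            wids = [word_dict[w] for w in text.lower().split() if w in word_dict]
            # Truncate or pad every text to exactly max_len word identifiers.
            corpus[tid] = wids[:max_len] + [0] * max(0, max_len - len(wids))
        return text_to_tid[text]

    for t1, t2, label in pairs:
        relations.append((add_text(t1), add_text(t2), label))
    return word_dict, corpus, relations

pairs = [
    ("how are you", "how do you do", 1),
    ("how are you", "the sky is blue", 0),
]
word_dict, corpus, relations = prepare(pairs, max_len=4, stopwords={"the"})
print(word_dict)
print(corpus)
print(relations)
```

Because the repeated first text is stored only once in the corpus, both relation entries point to the same tid.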

In the model construction module, we use the Keras library to help users build deep matching models conveniently, layer by layer. The Keras library provides a set of common layers widely used in neural models, such as convolutional layers, pooling layers, and dense layers. To further facilitate the construction of deep text matching models, we extend the Keras library with layer interfaces specifically designed for text matching.

Moreover, the toolkit has implemented two schools of representative deep text matching models, namely representation-focused models and interaction-focused models [Guo et al.].
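The difference between the two schools can be sketched in plain Python. This is only a conceptual illustration, not how any MatchZoo model is implemented: the toy embedding table `EMB`, mean pooling as the "encoder", and max-then-mean as the aggregation step are all assumptions standing in for the learned layers of a real model.

```python
import math

# Toy 2-d word embeddings; the values are made up for illustration.
EMB = {
    "cat": [1.0, 0.0],
    "kitten": [0.9, 0.1],
    "car": [0.0, 1.0],
    "sat": [0.5, 0.5],
}

def cosine(u, v):
    """Cosine similarity between two vectors (0.0 if either has zero norm)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0

def representation_score(text1, text2):
    """Representation-focused: encode each text separately, then compare the vectors."""
    def encode(words):
        vecs = [EMB[w] for w in words]
        # Mean pooling stands in for a deep encoder.
        return [sum(col) / len(vecs) for col in zip(*vecs)]
    return cosine(encode(text1), encode(text2))

def interaction_score(text1, text2):
    """Interaction-focused: build a word-by-word matching matrix, then aggregate it."""
    matrix = [[cosine(EMB[a], EMB[b]) for b in text2] for a in text1]
    # Best match per word of text1, averaged; a stand-in for the layers
    # a real model would apply on top of the matching matrix.
    return sum(max(row) for row in matrix) / len(matrix)

query = ["cat", "sat"]
print(representation_score(query, ["kitten", "sat"]))
print(interaction_score(query, ["kitten", "sat"]))
print(interaction_score(query, ["car", "car"]))
```

In the first function the two texts never see each other until the final comparison; in the second, word-level interactions are computed first and everything else operates on them.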

For learning the deep matching models, the toolkit provides a variety of objective functions for regression, classification, and ranking. For example, the ranking-related objective functions include several well-known pointwise, pairwise, and listwise losses. Users can choose different objective functions for optimization in the training phase. Once a model has been trained, the toolkit can be used to produce a matching score, predict a matching label, or rank target texts (e.g., documents) against an input text.
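To make the three families of ranking objectives concrete, here is a minimal sketch of one loss from each. These are generic textbook formulations written in plain Python, not MatchZoo's own loss implementations; the function names and the default margin of 1.0 are assumptions.

```python
import math

def pointwise_squared_loss(score, label):
    """Pointwise: each (text pair, label) is scored independently of the others."""
    return (score - label) ** 2

def pairwise_hinge_loss(pos_score, neg_score, margin=1.0):
    """Pairwise: a relevant text should outscore an irrelevant one by at least `margin`."""
    return max(0.0, margin - pos_score + neg_score)

def listwise_softmax_loss(scores, labels):
    """Listwise: cross-entropy between softmax(scores) and the normalized labels.

    Assumes non-negative labels.
    """
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    total = float(sum(labels))
    return -sum((l / total) * math.log(e / z) for l, e in zip(labels, exps) if l > 0)

# One query with three candidate texts: model scores and relevance labels.
scores = [2.0, 0.5, 0.1]
labels = [1, 0, 0]
print(pointwise_squared_loss(scores[0], labels[0]))
print(pairwise_hinge_loss(scores[0], scores[1]))
print(listwise_softmax_loss(scores, labels))
```

The pairwise loss is zero once the relevant text leads by the margin, while the listwise loss keeps decreasing as the relevant text's share of the softmax mass grows.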

Here, we use the WikiQA dataset as an example to illustrate the usage of MatchZoo. WikiQA is a popular benchmark dataset for answer sentence selection in question answering. We provide a script that downloads the dataset and converts it into the MatchZoo data format. In the models directory, there are a number of configurations for each model on the WikiQA dataset.

Take DRMM as an example. In the training phase, you can run

python matchzoo/main.py --phase train --model_file examples/wikiqa/config/drmm_wikiqa.config

In the testing phase, you can run

python matchzoo/main.py --phase predict --model_file examples/wikiqa/config/drmm_wikiqa.config

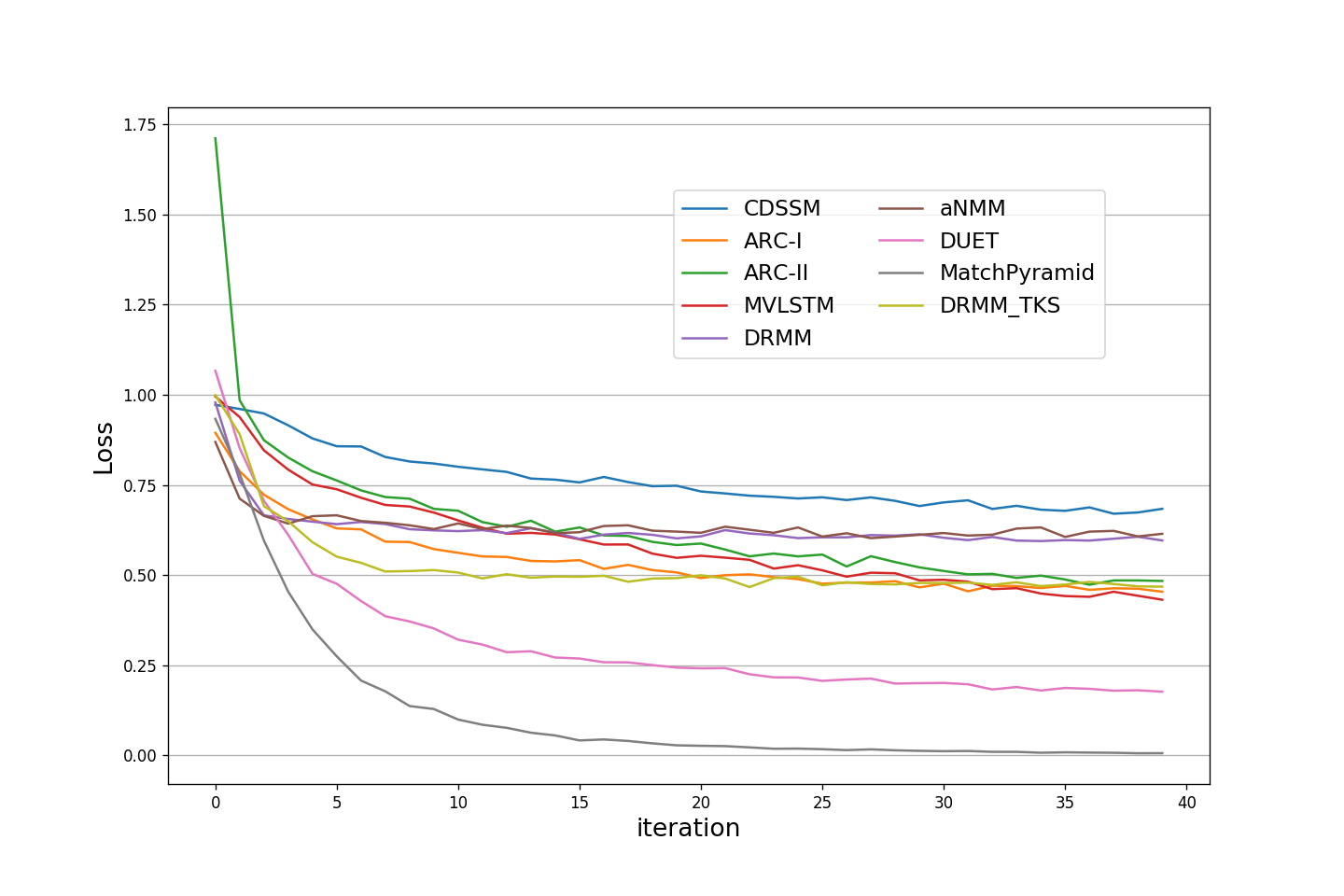

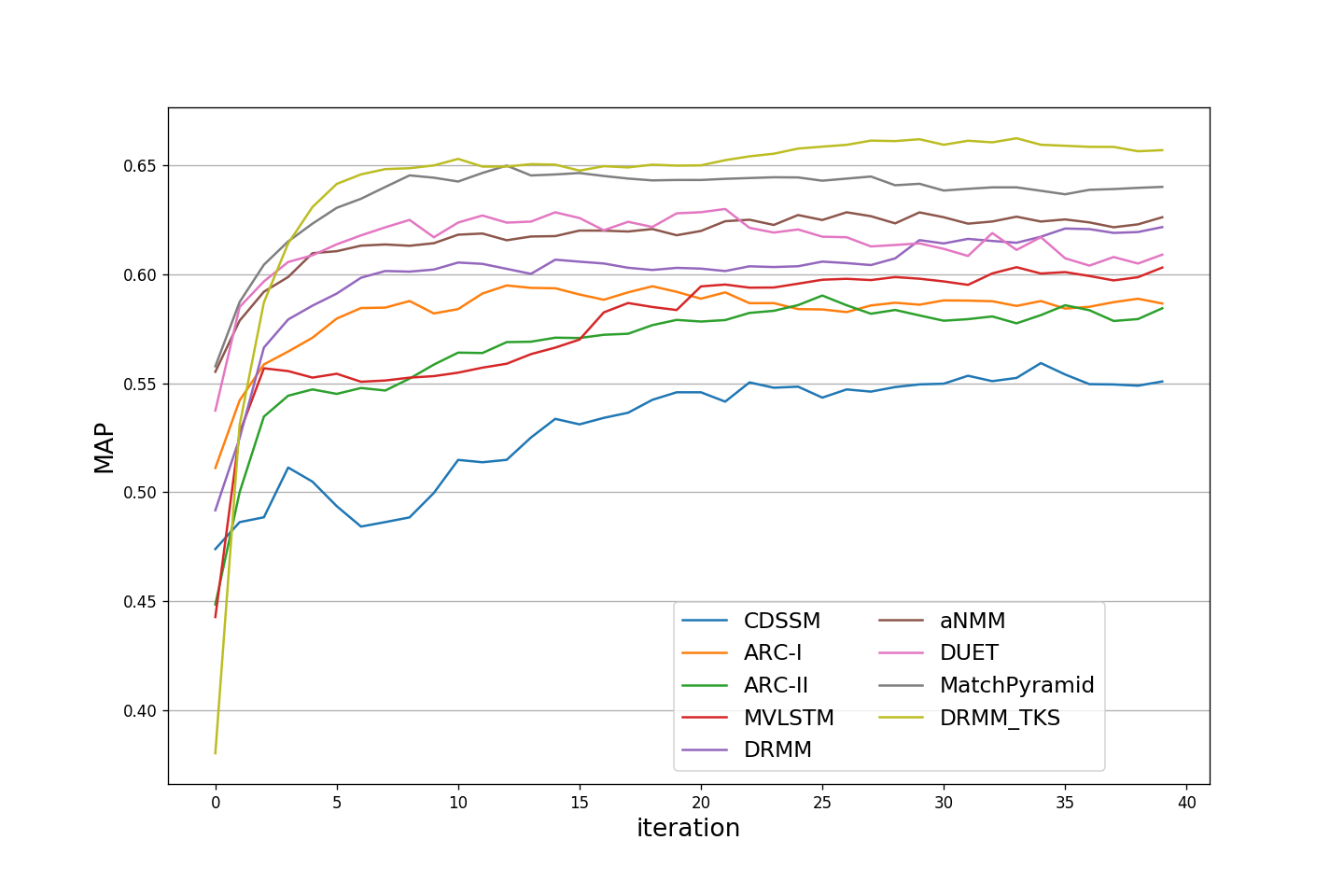

We have compared a number of models on WikiQA; the results are as follows. More models will be added.

| Models | NDCG@3 | NDCG@5 | PRECISION@1 | MAP |
|---|---|---|---|---|
| DSSM | 0.3412 | 0.4179 | None | 0.3840 |
| DSSM_Hegx | 0.5680 | 0.6352 | 0.4008 | 0.5833 |
| CDSSM | 0.5489 | 0.6084 | None | 0.5593 |
| CDSSM_Hegx | 0.4407 | 0.5219 | None | 0.4652 |
| ARC-I | 0.5680 | 0.6317 | None | 0.5870 |
| ARC-I_Hegx | 0.5793 | 0.6360 | 0.4473 | 0.5934 |
| ARC-II | 0.5647 | 0.6176 | None | 0.5845 |
| ARC-II_Hegx | 0.5651 | 0.6238 | 0.4473 | 0.5911 |
| MV-LSTM | 0.5818 | 0.6452 | None | 0.5988 |
| MV-LSTM_Hegx | 0.6168 | 0.6667 | 0.5021 | 0.6316 |
| DRMM | 0.6107 | 0.6621 | None | 0.6195 |
| DRMM_Hegx | 0.6125 | 0.6646 | 0.4768 | 0.6220 |
| aNMM | 0.6160 | 0.6696 | None | 0.6297 |
| aNMM_Hegx | 0.6249 | 0.6691 | 0.4852 | 0.6283 |
| DUET | 0.6065 | 0.6722 | None | 0.6301 |
| DUET_Hegx | 0.6322 | 0.6773 | 0.5232 | 0.6500 |
| MatchPyramid | 0.6317 | 0.6913 | None | 0.6434 |
| MatchPyramid_Hegx | 0.6160 | 0.6689 | 0.4641 | 0.6215 |
| DRMM_TKS | 0.6458 | 0.6956 | None | 0.6586 |
| DRMM_TKS_Hegx | 0.6500 | 0.6958 | 0.5148 | 0.6555 |
| EAttention | 0.6129 | 0.6698 | 0.4979 | 0.6326 |
| EAttention_100d | 0.6273 | 0.6766 | 0.4810 | 0.6319 |
| ECautiousAttention | 0.5549 | 0.6255 | 0.4051 | 0.5758 |
| ESelfAttention | 0.5815 | 0.6392 | None | 0.6022 |
| ECrossAttention | 0.5787 | 0.6423 | None | 0.6052 |
| ELSTMAttention | 0.6212 | 0.6816 | 0.4852 | 0.6332 |
| ELSTMAttention_100d | 0.6329 | 0.6816 | 0.5148 | 0.6451 |
| BiMPM | 0.6370 | 0.7050 | 0.5232 | 0.6498 |
| BiMPM_100d | 0.6516 | 0.7170 | 0.5485 | 0.6691 |
| MergedAttention | 0.6074 | 0.6632 | 0.4852 | 0.6221 |
| BiGRU | 0.6152 | 0.6733 | 0.4895 | 0.6293 |
| BiGRU_100d | 0.6227 | 0.6803 | 0.4979 | 0.6355 |
| BiLSTM | 0.6010 | 0.6679 | 0.4895 | 0.6264 |
| BiLSTM_100d | 0.6072 | 0.6636 | 0.4810 | 0.6240 |
| MultiBiGRU | 0.5907 | 0.6612 | 0.4852 | 0.6135 |
| MultiBiLSTM | 0.5694 | 0.6331 | 0.4768 | 0.5845 |
| K-NRM | 0.6268 | 0.6693 | None | 0.6256 |
| AMANDA | 0.5803 | 0.6370 | 0.4388 | 0.5972 |
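For readers unfamiliar with the column headers, the following sketch shows one common way to compute these metrics for a single query, given the relevance labels of the candidates in ranked order. It is not MatchZoo's evaluation code: the function names are hypothetical, and the NDCG gain `2^label - 1` with a log2 discount is one standard convention that may differ from the one used to produce the table.

```python
import math

def precision_at_k(ranked_labels, k):
    """Fraction of the top-k results that are relevant (label > 0)."""
    return sum(1 for l in ranked_labels[:k] if l > 0) / float(k)

def average_precision(ranked_labels):
    """Mean of the precision values at each rank where a relevant result appears."""
    hits, total = 0, 0.0
    for rank, l in enumerate(ranked_labels, start=1):
        if l > 0:
            hits += 1
            total += hits / float(rank)
    return total / hits if hits else 0.0

def ndcg_at_k(ranked_labels, k):
    """DCG of the top-k results divided by the DCG of the ideal ordering."""
    def dcg(labels):
        return sum((2 ** l - 1) / math.log(i + 2, 2) for i, l in enumerate(labels[:k]))
    ideal = dcg(sorted(ranked_labels, reverse=True))
    return dcg(ranked_labels) / ideal if ideal else 0.0

def mean_average_precision(queries):
    """MAP: average precision averaged over all queries."""
    return sum(average_precision(q) for q in queries) / float(len(queries))

# Labels of the candidate answers for two queries, sorted by model score.
queries = [[0, 1, 1], [1, 0, 0]]
print(precision_at_k(queries[0], 1))
print(ndcg_at_k(queries[0], 3))
print(mean_average_precision(queries))
```

The numbers reported in a results table are these per-query values averaged over every query in the test set.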

The MAP of each model is depicted in the following figure.

Here, DRMM_TKS is a variant of DRMM for short text matching. Specifically, the matching histogram is replaced by a top-k max-pooling layer, and the remaining parts are unchanged.

- DRMM: an implementation of "A Deep Relevance Matching Model for Ad-hoc Retrieval".
  - model file: models/drmm.py
  - model config: models/drmm_ranking.config

- MatchPyramid: an implementation of "Text Matching as Image Recognition".
  - model file: models/matchpyramid.py
  - model config: models/matchpyramid_ranking.config

- ARC-I: an implementation of "Convolutional Neural Network Architectures for Matching Natural Language Sentences".
  - model file: models/arci.py
  - model config: models/arci_ranking.config

- DSSM: an implementation of "Learning Deep Structured Semantic Models for Web Search using Clickthrough Data".
  - model file: models/dssm.py
  - model config: models/dssm_ranking.config

- CDSSM: an implementation of "Learning Semantic Representations Using Convolutional Neural Networks for Web Search".
  - model file: models/cdssm.py
  - model config: models/cdssm_ranking.config

- ARC-II: an implementation of "Convolutional Neural Network Architectures for Matching Natural Language Sentences".
  - model file: models/arcii.py
  - model config: models/arcii_ranking.config

- MV-LSTM: an implementation of "A Deep Architecture for Semantic Matching with Multiple Positional Sentence Representations".
  - model file: models/mvlstm.py
  - model config: models/mvlstm_ranking.config

- aNMM: an implementation of "aNMM: Ranking Short Answer Texts with Attention-Based Neural Matching Model".
  - model file: models/anmm.py
  - model config: models/anmm_ranking.config

- DUET: an implementation of "Learning to Match Using Local and Distributed Representations of Text for Web Search".
  - model file: models/duet.py
  - model config: models/duet_ranking.config

- K-NRM: an implementation of "End-to-End Neural Ad-hoc Ranking with Kernel Pooling".
  - model file: models/knrm.py
  - model config: models/knrm_ranking.config

- Models under development: Match-SRNN, DeepRank, ...

@article{fan2017matchzoo,

title={MatchZoo: A Toolkit for Deep Text Matching},

author={Fan, Yixing and Pang, Liang and Hou, JianPeng and Guo, Jiafeng and Lan, Yanyan and Cheng, Xueqi},

journal={arXiv preprint arXiv:1707.07270},

year={2017}

}

- Jiafeng Guo
  - Institute of Computing Technology, Chinese Academy of Sciences
  - HomePage

- Yanyan Lan
  - Institute of Computing Technology, Chinese Academy of Sciences
  - HomePage

- Xueqi Cheng
  - Institute of Computing Technology, Chinese Academy of Sciences
  - HomePage

- python2.7+

- tensorflow 1.2+

- keras 2.06+

- nltk 3.2.2+

- tqdm 4.19.4+

- h5py 2.7.1+

- Yixing Fan
  - Institute of Computing Technology, Chinese Academy of Sciences
  - Google Scholar

- Liang Pang
  - Institute of Computing Technology, Chinese Academy of Sciences
  - Google Scholar

- Liu Yang
  - Center for Intelligent Information Retrieval, University of Massachusetts Amherst
  - HomePage

We would like to express our appreciation to the following people for contributing source code to MatchZoo: Yixing Fan, Liang Pang, Liu Yang, Yukun Zheng, Lijuan Chen, Jianpeng Hou, Zhou Yang, Niuguo Cheng, and others.

Feel free to post any questions or suggestions on GitHub Issues, and we will reply there. You can also suggest new deep text matching models for MatchZoo or apply to join us in developing MatchZoo.

Update on 12/10/2017: We have registered another WeChat ID: CLJ_Keep. Anyone who wants to join the WeChat group can add this WeChat ID as a friend. Please tell us your name, company or school, and city when you send the request. After you add "CLJ_Keep" as a WeChat friend, she will invite you to join the MatchZoo WeChat group. "CLJ_Keep" is a member of the MatchZoo team.