This is the official code for the paper Fine-Grained Controllable Text Generation Using Non-Residual Prompting.

The paper was accepted at ACL 2022. The official reviews and author responses can be found in ACL Reviews.pdf.

A short video summary of the work can be found on YouTube.

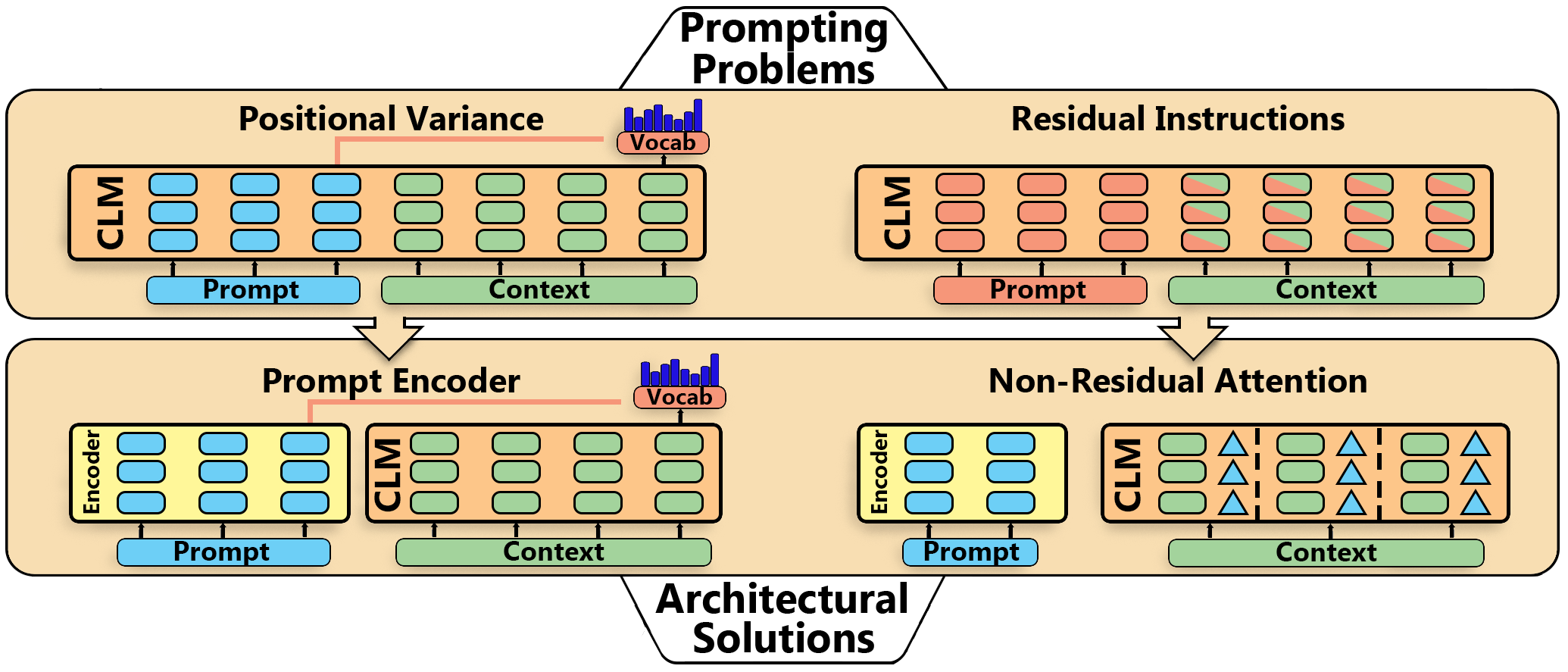

Controlling the generative process of large causal language models remains a largely unsolved problem. Our approach moves towards a more steerable generation process by enabling high-level prompt instructions at arbitrary time steps.

We introduce a separate language model for the prompt instructions, which generates positionally invariant hidden states. This prompt model is then used to steer the generative model. Using non-residual attention, we keep track of two streams of information in the original generative model. This allows us to steer the text generation without leaving any disruptive footprints in the hidden states.

The required packages are listed in the requirements file. The only strict requirement is the pinned version transformers==4.8.1.

pip install -r requirements.txt

After installing the required packages, generating text is easily done by using ./inference.sh. It contains the following arguments:

- TARGET_WORDS: The space-separated words to be included in the generated text.

- CONTEXT: The context that the model continues to generate from.

- SENTENCE_LENGTH: The target sentence length to instruct the model with.

- GENERATE_LENGTH: The number of tokens in the resulting text.

- NUM_BEAMS: The number of beams used within beam search.

The trained model used in the paper will be downloaded automatically from HuggingFace.

Here are some example outputs, showing the target words used, followed by the generated texts:

------------ ['magazine', 'read', 'plenty']

The magazine was read by plenty of people, but it wasn't a hit.

"I don't know why," he said. "It just didn't

------------ ['Sweden', 'summer', 'boat', 'water']

The boat was used to transport water for the Summer Olympics in Stockholm, Sweden during the 1980s and 1990s.

It is believed to have been built by

------------ ['dog', 'breed', 'brown', 'camping', 'park']

The park is open to the public for camping, hiking and dog-sledding at Brown's Dog Park. The park also has a brown bear breeding program that

------------ ['king', 'queen', 'wedding', 'castle', 'knight']

The king and queen were at the castle for a wedding when they heard of their son's death. The King and Queen went to see him, but he was not
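As a quick sanity check on outputs like the ones above, the following minimal sketch reports which target words appear in a generated text. It is an illustration only and not the evaluation used in the paper: the function names `covered_words` and `coverage` are hypothetical, and matching is assumed to be exact, case-insensitive whole-token matching, so inflected forms such as "breeding" do not count for "breed".

```python
import re


def covered_words(text, target_words):
    """Return the target words that occur in `text` as whole tokens.

    Assumption: exact, case-insensitive matching with no lemmatization.
    """
    tokens = set(re.findall(r"[a-z0-9']+", text.lower()))
    return [word for word in target_words if word.lower() in tokens]


def coverage(text, target_words):
    """Fraction of target words found in `text` (1.0 for an empty word list)."""
    if not target_words:
        return 1.0
    return len(covered_words(text, target_words)) / len(target_words)


# Two of the example outputs listed above.
magazine_text = "The magazine was read by plenty of people, but it wasn't a hit."
park_text = (
    "The park is open to the public for camping, hiking and dog-sledding "
    "at Brown's Dog Park. The park also has a brown bear breeding program that"
)

print(covered_words(magazine_text, ["magazine", "read", "plenty"]))
print(covered_words(park_text, ["dog", "breed", "brown", "camping", "park"]))
```

In the second example the word "breed" is reported as missing because only "breeding" occurs in the text. A check that tolerates inflections would need lemmatization or stemming on top of this sketch.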

To evaluate texts, use ./evaluate.sh, which takes an input file containing a JSON list of texts as a positional argument.

Note that the order of the texts within this list must correspond to the order of samples in the evaluation dataset.
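The input file described above can be produced with a few lines of Python. This is a minimal sketch: the helper name `write_texts` and the file name `generated_texts.json` are placeholders chosen for illustration, and the only format assumed is the one stated above, a JSON list of strings in dataset order.

```python
import json
import tempfile
from pathlib import Path


def write_texts(texts, path):
    """Write generated texts to `path` as a JSON list, preserving their order."""
    if not all(isinstance(text, str) for text in texts):
        raise TypeError("every generated text must be a string")
    Path(path).write_text(
        json.dumps(texts, ensure_ascii=False, indent=2), encoding="utf-8"
    )


# generated[i] must be the text produced for the i-th sample of the
# evaluation dataset, so do not sort or deduplicate this list.
generated = [
    "The magazine was read by plenty of people, but it wasn't a hit.",
    "The boat was used to transport water for the Summer Olympics in "
    "Stockholm, Sweden during the 1980s and 1990s.",
]

# Written to the system temp directory here; any writable location works.
output_path = Path(tempfile.gettempdir()) / "generated_texts.json"
write_texts(generated, output_path)
print(output_path)
```

The resulting file is then passed as the positional argument, for example ./evaluate.sh followed by the path printed above.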

The script has some settings that can be configured:

- DATASET: common_gen or c2gen. Datasets are automatically loaded from HuggingFace.

- PPL_MODEL_NAME: The name of the model used to compute perplexity. The paper uses gpt2-xl.

- PPL_BATCH_SIZE: With many texts and access to a large GPU, this can be increased for faster evaluation.

- CONTEXT: The custom context that was used when generating the texts. For example, when generating with no context (common_gen) the model may need an initial string to condition the generation on, like 'The'.

- SENTENCE_LEVEL: Leave as --sentence_level for sentence-level evaluation, or set to an empty string for Free Text evaluation.

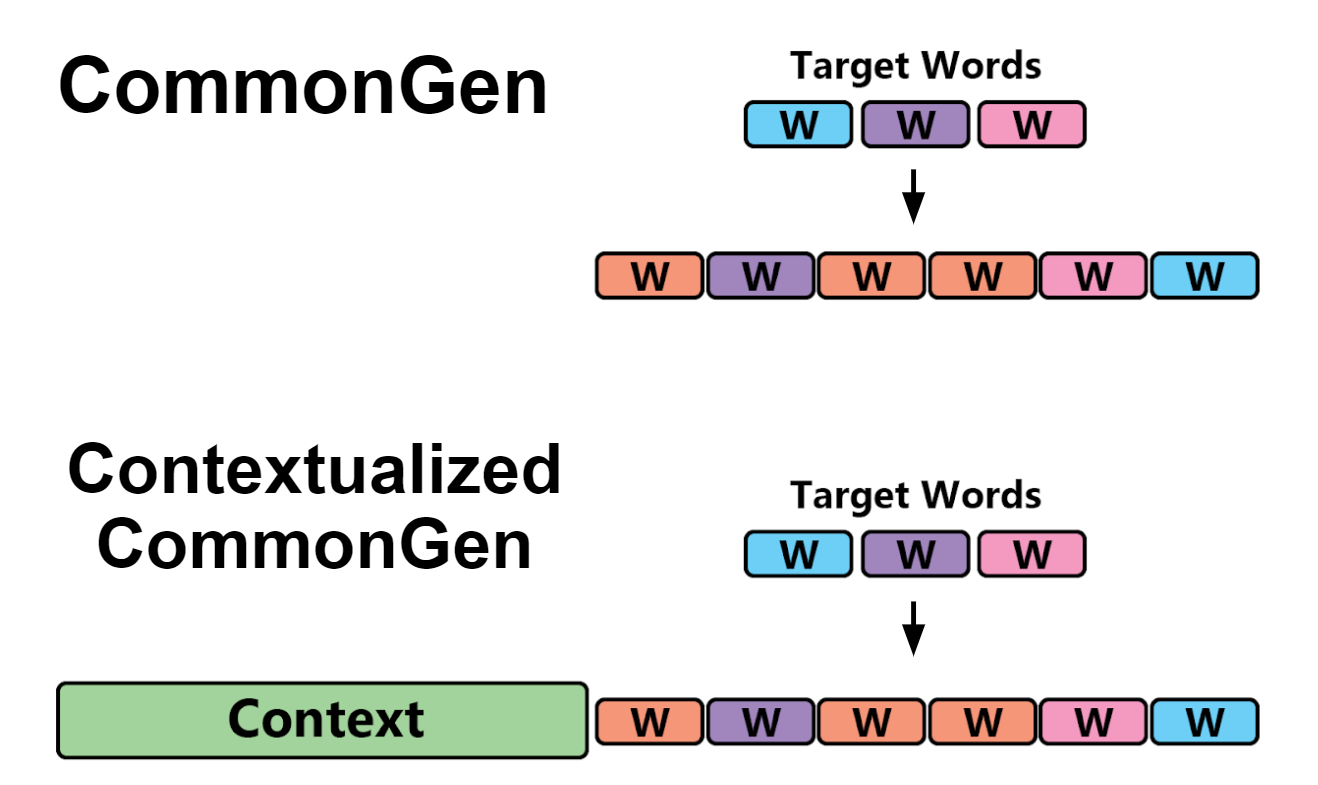

In this paper we also introduce a new dataset based on the CommonGen dataset. The objective of the CommonGen task is to generate text that includes a given set of target words and that adheres to common sense. However, these examples are all formulated without any context, whereas we believe that many application areas need to take context into account.

Therefore, to complement CommonGen, we provide an extended test set called the Contextualized CommonGen Dataset (C2Gen), where a context is provided for each set of target words. The task is thus reformulated: the generated text must be commonsensical and include the given words, and it must also adhere to the given context, as illustrated in the accompanying figure.

The dataset is available on HuggingFace, where you can inspect it directly and incorporate it into your framework: Non-Residual-Prompting/C2Gen.
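One way to pull the dataset into your own code is the HuggingFace `datasets` library. The sketch below assumes that `datasets` is installed and that the dataset identifier above can be loaded with default arguments; the helper name `load_c2gen` is hypothetical, and no split or column names are assumed.

```python
DATASET_ID = "Non-Residual-Prompting/C2Gen"


def load_c2gen():
    """Download (or load from the local cache) the C2Gen dataset.

    The import is done lazily so that this module can be imported even
    when the `datasets` package is not installed.
    """
    from datasets import load_dataset

    return load_dataset(DATASET_ID)


# Example usage (needs network access on the first call):
# c2gen = load_c2gen()
# print(c2gen)  # shows the available splits and their column names
```

Printing the returned object is the simplest way to discover the splits and columns before wiring the dataset into a generation or evaluation loop. Depending on the installed `datasets` version, additional arguments to `load_dataset` may be required.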

Contributions are what make the open source community such an amazing place to learn, inspire, and create. Any contributions you make are greatly appreciated.

If you have a suggestion that would make this better, please fork the repo and create a pull request. You can also simply open an issue with the tag "enhancement". Don't forget to give the project a star! Thanks again!

If you have questions regarding the code or anything else related to this GitHub page, please open an issue.

For other purposes, feel free to contact me directly at: Fredrik.Carlsson@ri.se

Distributed under the MIT License. See LICENSE for more information.

If you find this repository helpful, feel free to cite our publication Fine-Grained Controllable Text Generation Using Non-Residual Prompting:

@inproceedings{carlsson-etal-2022-fine,
    title = "Fine-Grained Controllable Text Generation Using Non-Residual Prompting",
    author = {Carlsson, Fredrik and Öhman, Joey and Liu, Fangyu and
              Verlinden, Severine and Nivre, Joakim and Sahlgren, Magnus},
    booktitle = "Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers)",
    month = may,
    year = "2022",
    address = "Dublin, Ireland",
    publisher = "Association for Computational Linguistics",
    url = "https://aclanthology.org/2022.acl-long.471",
    pages = "6837--6857",
}