V4D: Voxel for 4D Novel View Synthesis

Wanshui Gan, Hongbin Xu, Yi Huang, Shifeng Chen, Naoto Yokoya

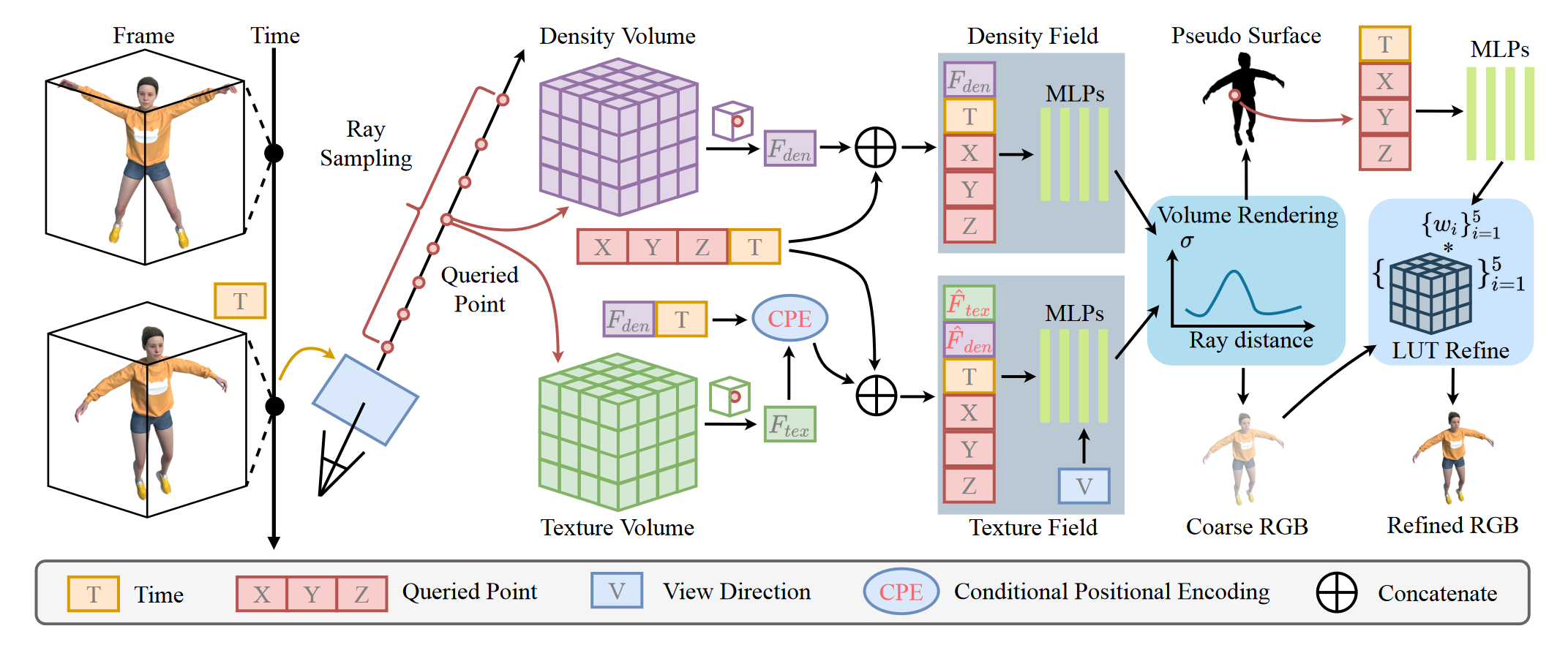

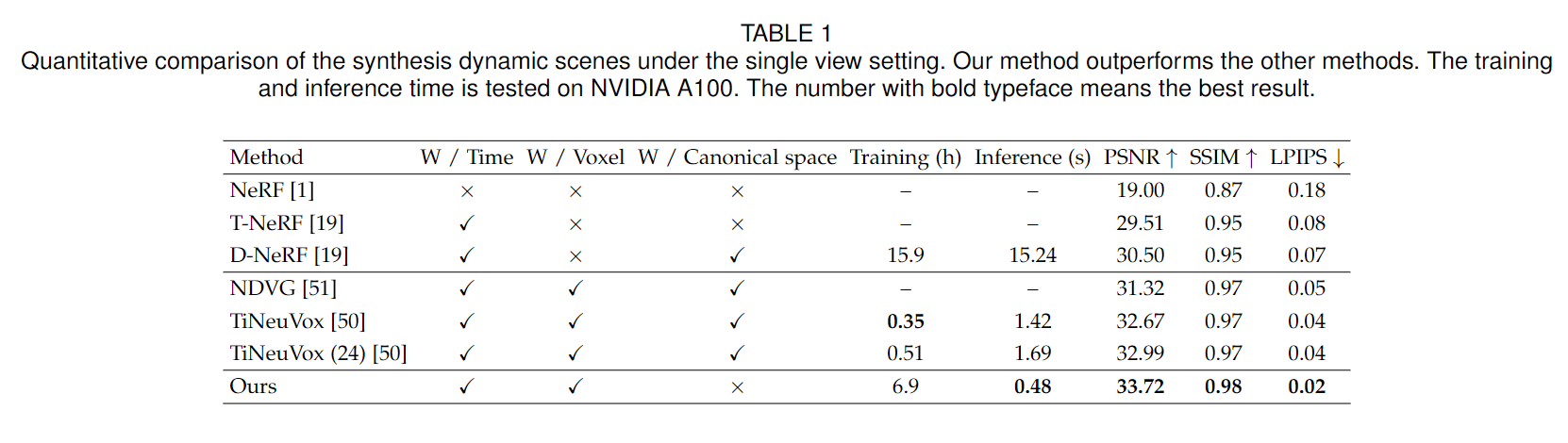

Neural radiance fields have made a remarkable breakthrough in novel view synthesis for static 3D scenes. However, in the 4D setting (e.g., dynamic scenes), the performance of existing methods is still limited by the capacity of the neural network, typically a multilayer perceptron (MLP). In this paper, we use 3D voxels to model the 4D neural radiance field, abbreviated as V4D, where the 3D voxels take two forms. The first regularly models the 3D space, and the sampled local 3D feature, together with the time index, is fed to a tiny MLP to model the density field and the texture field. The second takes the form of look-up tables (LUTs) for pixel-level refinement, where the pseudo-surface produced by volume rendering serves as guidance for learning a 2D pixel-level refinement mapping. The proposed LUT-based refinement module improves performance at little computational cost and can serve as a plug-and-play module for novel view synthesis. Moreover, we propose a more effective conditional positional encoding for 4D data that improves performance with negligible computational overhead. Extensive experiments demonstrate that the proposed method achieves state-of-the-art performance at a low computational cost.
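To make the first voxel format concrete, here is a minimal, dependency-free sketch of the query path described above: trilinearly sample a local feature from a dense 3D grid, append the time index, and decode density and color with a tiny MLP. This is not the V4D implementation (which is written in PyTorch and learns the grid and MLP weights). The grid layout `grid[x][y][z]`, the single hidden layer, the ReLU/sigmoid output activations, and the random weights are all illustrative assumptions, and the function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

# Hypothetical layout: grid[x][y][z] is a list of C feature channels.
FeatureGrid = List[List[List[List[float]]]]


def trilinear_sample(grid: FeatureGrid, p: Sequence[float]) -> List[float]:
    """Trilinearly interpolate the feature at continuous voxel coordinates p = (x, y, z).

    Coordinates are clamped to the grid; every axis needs at least 2 voxels.
    """
    shape = (len(grid), len(grid[0]), len(grid[0][0]))
    base, frac = [], []
    for coord, n in zip(p, shape):
        c = min(max(float(coord), 0.0), n - 1.0)  # clamp into the grid
        i0 = min(int(math.floor(c)), n - 2)        # lower corner index
        base.append(i0)
        frac.append(c - i0)                        # offset in [0, 1]
    channels = len(grid[0][0][0])
    out = [0.0] * channels
    for dx in (0, 1):
        for dy in (0, 1):
            for dz in (0, 1):
                # Corner weight is the product of the per-axis linear weights.
                w = 1.0
                for d, f in zip((dx, dy, dz), frac):
                    w *= f if d else 1.0 - f
                corner = grid[base[0] + dx][base[1] + dy][base[2] + dz]
                for k in range(channels):
                    out[k] += w * corner[k]
    return out


def init_tiny_mlp(in_dim: int, hidden: int, seed: int = 0):
    """Random weights for a one-hidden-layer MLP with 4 outputs (density + RGB)."""
    rng = random.Random(seed)
    w1 = [[rng.uniform(-0.5, 0.5) for _ in range(in_dim)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    w2 = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(4)]
    b2 = [0.0] * 4
    return w1, b1, w2, b2


def _linear(w, b, v):
    return [sum(wi * vi for wi, vi in zip(row, v)) + bi for row, bi in zip(w, b)]


def query_point(grid: FeatureGrid, params, p: Sequence[float], t: float) -> Tuple[float, List[float]]:
    """Sample the local voxel feature, append the time index, decode density and color."""
    feat = trilinear_sample(grid, p) + [t]                 # time index concatenated to the feature
    w1, b1, w2, b2 = params
    hidden = [max(0.0, h) for h in _linear(w1, b1, feat)]  # ReLU
    raw = _linear(w2, b2, hidden)
    density = max(0.0, raw[0])                             # non-negative density
    rgb = [1.0 / (1.0 + math.exp(-r)) for r in raw[1:]]   # colors in (0, 1)
    return density, rgb


if __name__ == "__main__":
    # 3x3x3 grid whose feature is the voxel index, so interpolation is easy to check by hand.
    grid = [[[[float(i), float(j), float(k)] for k in range(3)] for j in range(3)] for i in range(3)]
    print(trilinear_sample(grid, (0.5, 1.25, 2.0)))
    params = init_tiny_mlp(in_dim=3 + 1, hidden=8)
    print(query_point(grid, params, (0.5, 1.25, 2.0), t=0.3))
```

Because the grid stores most of the scene content, the MLP only has to decode a short feature vector per sample, which is why it can stay tiny.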

- July 20, 2023: The first, preliminary version is released. The code may not be thoroughly cleaned, so feel free to open an issue if you have any questions.

Requirements:

- lpips

- mmcv (refer to https://github.com/open-mmlab/mmcv)

- imageio

- imageio-ffmpeg

- opencv-python

- pytorch_msssim

- torch

- torch_scatter (download the wheel file matching your environment from https://pytorch-geometric.com/whl/, then install it with `pip install` followed by the path to the downloaded `.whl` file)

- torchsearchsorted (refer to https://github.com/aliutkus/torchsearchsorted)
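Before running the setup script, it can help to see which of the packages above are already importable. The sketch below uses only `importlib.util.find_spec`; the mapping from pip package names to import names (for example, `opencv-python` imports as `cv2` and `imageio-ffmpeg` as `imageio_ffmpeg`) is our assumption and may need adjusting for your versions.

```python
import importlib.util

# pip package name -> importable module name (assumed mapping for the packages listed above)
REQUIRED = {
    "lpips": "lpips",
    "mmcv": "mmcv",
    "imageio": "imageio",
    "imageio-ffmpeg": "imageio_ffmpeg",
    "opencv-python": "cv2",
    "pytorch_msssim": "pytorch_msssim",
    "torch": "torch",
    "torch_scatter": "torch_scatter",
    "torchsearchsorted": "torchsearchsorted",
}


def missing_packages(required=REQUIRED):
    """Return the pip names whose modules cannot be found in the current environment."""
    return [pip_name for pip_name, module in required.items()
            if importlib.util.find_spec(module) is None]


if __name__ == "__main__":
    missing = missing_packages()
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("All listed dependencies are importable.")
```

`find_spec` only locates a module without importing it, so the check is fast and does not trigger CUDA initialization in `torch`.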

To prepare the environment, try running:

```bash
sh run.sh
```

For synthetic scenes:

We use the dataset provided by D-NeRF, which can be downloaded from Dropbox. Organize it as shown in the directory tree below.

For real dynamic scenes:

We use the dataset provided by HyperNeRF. Download the scenes from the HyperNeRF Dataset and organize them in the same way as Nerfies.

Change the relative base paths in the config files to match your data layout.

```
├── data
│   ├── nerf_synthetic
│   │   ├── trex
│   ├── ...
│   ├── real
```
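Once a synthetic scene such as `trex` is in place, a quick way to confirm the layout is to read its camera metadata. The sketch below assumes the D-NeRF convention that each scene folder holds `transforms_<split>.json` files whose `frames` entries carry a `file_path` without extension (e.g. `./train/r_000`) and a normalized `time`; the `.png` extension and the function names are assumptions, not part of this repository.

```python
import json
from pathlib import Path
from typing import List, Tuple


def frames_with_time(meta: dict, scene_dir: Path, ext: str = ".png") -> List[Tuple[Path, float]]:
    """List (image path, time) pairs from parsed D-NeRF style transforms metadata."""
    pairs = []
    for frame in meta["frames"]:
        # file_path is assumed to be stored without an extension, e.g. "./train/r_000"
        image = Path(scene_dir) / (frame["file_path"] + ext)
        pairs.append((image, float(frame["time"])))  # time is assumed normalized to [0, 1]
    return pairs


def load_split(scene_dir: str, split: str = "train") -> List[Tuple[Path, float]]:
    """Read transforms_<split>.json from a scene folder such as data/nerf_synthetic/trex."""
    scene = Path(scene_dir)
    with open(scene / f"transforms_{split}.json", "r", encoding="utf-8") as f:
        meta = json.load(f)
    return frames_with_time(meta, scene)


if __name__ == "__main__":
    scene = Path("./data/nerf_synthetic/trex")
    if (scene / "transforms_train.json").exists():
        for image, t in load_split(str(scene), "train")[:3]:
            print(image, image.exists(), t)
    else:
        print(f"{scene} not found; adjust the path to your data layout")
```

If `image.exists()` prints `False`, the relative base path in the config most likely does not match where the data was unpacked.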

To train on synthetic scenes, such as trex, run:

```bash
basedir=./logs/dynamic_synthesis
python run.py --config configs/dynamic/synthesis/trex.py --render_test --basedir $basedir
```

Set `--video_only` to generate the fixed-time, fixed-view, and novel-view-and-time videos. We have not yet tested this function on real scenes.

To train on real scenes, such as broom, run:

```bash
basedir=./logs/dynamic_real
python run.py --config configs/dynamic/real/broom.py --render_test --basedir $basedir
```
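To train several scenes in a row, the commands above can be generated from a scene list. This is a hypothetical helper, not part of the repository: it only reuses the `run.py` flags shown above (`--config`, `--render_test`, `--basedir`, `--video_only`) and assumes configs live at `configs/dynamic/<kind>/<scene>.py`, as in the two examples. It prints the commands by default and only executes them with `dry_run=False`.

```python
import shlex
import subprocess
from typing import List


def build_command(config: str, basedir: str, render_test: bool = True,
                  video_only: bool = False) -> List[str]:
    """Assemble the run.py invocation shown above as an argument list."""
    cmd = ["python", "run.py", "--config", config]
    if render_test:
        cmd.append("--render_test")
    cmd += ["--basedir", basedir]
    if video_only:
        cmd.append("--video_only")  # not yet tested on real scenes, per the note above
    return cmd


def train_scenes(scenes: List[str], kind: str, basedir: str, dry_run: bool = True) -> List[List[str]]:
    """Print (and optionally run) one training command per scene.

    kind is "synthesis" or "real", matching configs/dynamic/<kind>/<scene>.py.
    """
    commands = [build_command(f"configs/dynamic/{kind}/{scene}.py", basedir) for scene in scenes]
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # stop at the first failing scene
    return commands


if __name__ == "__main__":
    train_scenes(["trex"], "synthesis", "./logs/dynamic_synthesis")
    train_scenes(["broom"], "real", "./logs/dynamic_real")
```

Passing an argument list to `subprocess.run` avoids shell quoting issues, and `check=True` raises on the first non-zero exit so a broken config does not go unnoticed.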

Some notes on training:

- The TV loss is currently implemented in plain PyTorch, and the training time may vary by around 1 hour between independent runs. We have not investigated this further and report the fastest training time. We tried the CUDA implementation from DirectVoxGO to speed up training, but it causes a performance drop on some scenes, and its weight hyperparameter still needs fine-tuning.

- The results in the paper are obtained with 4096 rays for training. With a larger number, such as 8192, the network can achieve a noticeable performance gain, but training takes longer.

- We provide the fine bounding box for the synthetic dataset. Set `search_geometry` to `True` if you want to compute it yourself.
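For readers unfamiliar with the TV loss mentioned in the first note, the sketch below shows one common form of total variation regularization on a voxel grid: the mean absolute difference between neighboring voxels along each axis, averaged over the three axes and scaled by a weight. It is a generic, dependency-free illustration; V4D's exact formulation (L1 versus squared differences, masking, per-channel handling) may differ, and `regularized_loss` and `tv_weight` are hypothetical names.

```python
from typing import List

Grid3D = List[List[List[float]]]


def total_variation(grid: Grid3D) -> float:
    """Anisotropic L1 total variation: mean |difference| between neighboring voxels,
    computed separately along x, y and z and then averaged over the three axes."""
    nx, ny, nz = len(grid), len(grid[0]), len(grid[0][0])

    def mean(values: List[float]) -> float:
        return sum(values) / len(values) if values else 0.0

    dx = [abs(grid[i + 1][j][k] - grid[i][j][k])
          for i in range(nx - 1) for j in range(ny) for k in range(nz)]
    dy = [abs(grid[i][j + 1][k] - grid[i][j][k])
          for i in range(nx) for j in range(ny - 1) for k in range(nz)]
    dz = [abs(grid[i][j][k + 1] - grid[i][j][k])
          for i in range(nx) for j in range(ny) for k in range(nz - 1)]
    return (mean(dx) + mean(dy) + mean(dz)) / 3.0


def regularized_loss(photometric: float, grid: Grid3D, tv_weight: float) -> float:
    """Add the weighted TV term to a photometric loss; tv_weight is the hyperparameter to tune."""
    return photometric + tv_weight * total_variation(grid)
```

A constant grid has zero TV, so the term only penalizes abrupt changes between neighboring voxels; the weight controls how strongly the grid is smoothed, which is why switching to a different TV implementation can require retuning it.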
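The fine bounding box in the last note tightly encloses the occupied part of the scene. As a rough illustration of how such a box can be derived from a density grid, the sketch below keeps the voxels above a threshold, converts their extreme indices to world coordinates, and merges per-time-step boxes so a dynamic scene is fully covered. We have not checked how `search_geometry` computes the box in this repository; the threshold, the grid-to-world alignment, and the helper names are assumptions.

```python
from typing import List, Optional, Sequence, Tuple

Grid3D = List[List[List[float]]]
Box = Tuple[List[float], List[float]]


def occupied_bbox(density: Grid3D, xyz_min: Sequence[float], xyz_max: Sequence[float],
                  threshold: float) -> Optional[Box]:
    """World-space axis-aligned box around voxels whose density exceeds threshold.

    Voxel index 0 is assumed to sit at xyz_min and index n-1 at xyz_max (n >= 2 per axis).
    Returns None when no voxel is above the threshold.
    """
    shape = (len(density), len(density[0]), len(density[0][0]))
    hits = [(i, j, k)
            for i in range(shape[0]) for j in range(shape[1]) for k in range(shape[2])
            if density[i][j][k] > threshold]
    if not hits:
        return None

    def to_world(axis: int, index: int) -> float:
        step = (xyz_max[axis] - xyz_min[axis]) / (shape[axis] - 1)
        return xyz_min[axis] + index * step

    lo = [to_world(a, min(h[a] for h in hits)) for a in range(3)]
    hi = [to_world(a, max(h[a] for h in hits)) for a in range(3)]
    return lo, hi


def union_bbox(boxes: Sequence[Box]) -> Box:
    """Merge per-time-step boxes so the result covers the whole dynamic sequence."""
    lo = [min(b[0][a] for b in boxes) for a in range(3)]
    hi = [max(b[1][a] for b in boxes) for a in range(3)]
    return lo, hi
```

Shrinking the box to the occupied region lets the same voxel resolution spend its cells on the object rather than on empty space.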

All the source results can be found in this link.

This repository is partially based on DirectVoxGO, TiNeuVox, and D-NeRF. Thanks for their awesome work.

If you find this repository or work helpful in your research, please consider citing the paper and giving the repository a ⭐.

```bibtex
@article{gan2023v4d,
  title={V4d: Voxel for 4d novel view synthesis},
  author={Gan, Wanshui and Xu, Hongbin and Huang, Yi and Chen, Shifeng and Yokoya, Naoto},
  journal={IEEE Transactions on Visualization and Computer Graphics},
  year={2023},
  publisher={IEEE}
}
```