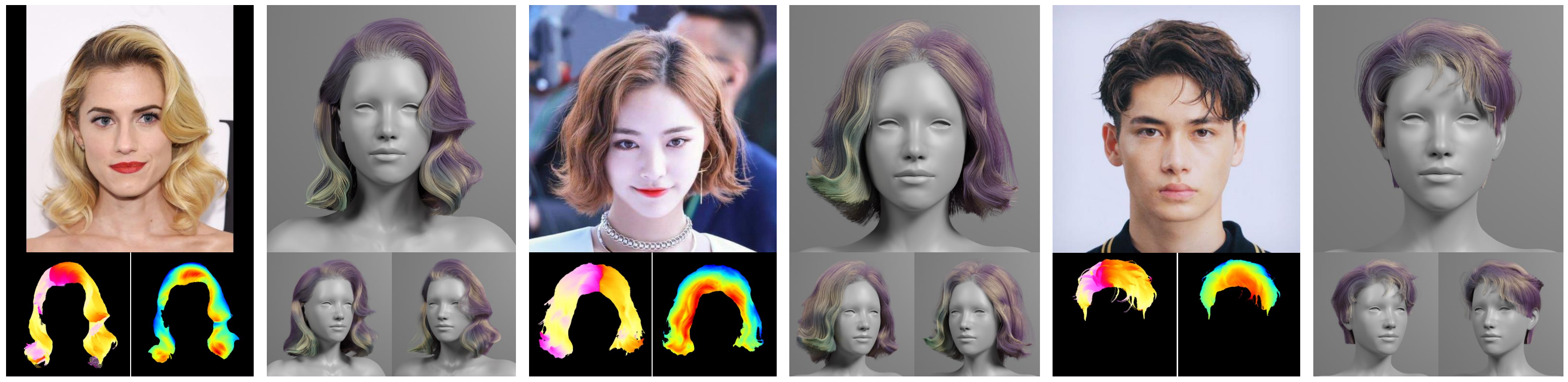

HairStep: Transfer Synthetic to Real Using Strand and Depth Maps for Single-View 3D Hair Modeling (CVPR 2023 Highlight) [Project page]

All data of HiSa & HiDa is hosted on Google Drive:

| Path | Files | Format | Description |
|---|---|---|---|
| HiSa_HiDa | 12,503 | | Main folder |
| ├ img | 1,250 | PNG | Hair-centric real images at 512×512 |
| ├ seg | 1,250 | PNG | Hair masks |
| ├ body_img | 1,250 | PNG | Whole body masks |
| ├ stroke | 1,250 | SVG | Hair strokes (vector curves) manually labeled by artists |
| ├ strand_map | 1,250 | PNG | Strand maps with body mask |
| ├ camera_param | 1,250 | NPY | Estimated camera parameters for orthogonal projection |
| ├ relative_depth | 2,500 | | Folder for the ordinal relative depth annotations |
| │ ├ pairs | 1,250 | NPY | Pixel pairs randomly selected from adjacent super-pixels |
| │ └ labels | 1,250 | NPY | Ordinal depth labels indicating which pixel in each pair is closer |
| ├ dense_depth_pred | 2,500 | | Folder for dense depth maps generated by our domain-adaptive depth estimation method |
| │ ├ depth_map | 1,250 | NPY | Normalized depth maps (range 0 to 1; closer pixels have larger values) |
| │ └ depth_vis | 1,250 | PNG | Visualization of depth maps |
| ├ split_train.json | 1 | JSON | Split file for training |
| └ split_test.json | 1 | JSON | Split file for testing |

The HiSa & HiDa dataset and pre-trained checkpoints based on it are available for non-commercial research purposes only. All real images are collected from the Internet. Please contact Yujian Zheng and Xiaoguang Han for questions about the dataset.
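After downloading, it can be useful to confirm that the local copy matches the file counts listed in the table. The following is a minimal sketch, not part of the repository: `check_layout` is a hypothetical helper, and it assumes that `pairs` and `labels` sit inside `relative_depth`, that `depth_map` and `depth_vis` sit inside `dense_depth_pred`, and that file extensions are lowercase.

```python
from pathlib import Path

# (sub-folder, extension, expected file count), transcribed from the table.
# Assumptions: the nesting of pairs/labels and depth_map/depth_vis under
# their parent folders, and lowercase file extensions.
EXPECTED = [
    ("img", ".png", 1250),
    ("seg", ".png", 1250),
    ("body_img", ".png", 1250),
    ("stroke", ".svg", 1250),
    ("strand_map", ".png", 1250),
    ("camera_param", ".npy", 1250),
    ("relative_depth/pairs", ".npy", 1250),
    ("relative_depth/labels", ".npy", 1250),
    ("dense_depth_pred/depth_map", ".npy", 1250),
    ("dense_depth_pred/depth_vis", ".png", 1250),
]
EXPECTED_SPLITS = ["split_train.json", "split_test.json"]


def check_layout(root, expected=EXPECTED, splits=EXPECTED_SPLITS):
    """Return a list of problems; an empty list means the layout matches."""
    root = Path(root)
    problems = []
    for sub, ext, count in expected:
        folder = root / sub
        if not folder.is_dir():
            problems.append(f"missing folder: {sub}")
            continue
        # Count only regular files with the expected extension.
        found = sum(
            1 for p in folder.iterdir()
            if p.is_file() and p.suffix.lower() == ext
        )
        if found != count:
            problems.append(f"{sub}: expected {count} {ext} files, found {found}")
    for name in splits:
        if not (root / name).is_file():
            problems.append(f"missing file: {name}")
    return problems
```

Calling `check_layout("HiSa_HiDa")` from the directory that contains the downloaded folder returns an empty list when every sub-folder has the expected number of files.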

git clone --recursive https://github.com/GAP-LAB-CUHK-SZ/HairStep.git

cd HairStep

conda env create -f environment.yml

conda activate hairstep

pip install torch==1.9.0+cu111 torchvision==0.10.0+cu111 -f https://download.pytorch.org/whl/torch_stable.html

pip install -r requirements.txt

cd external/3DDFA_V2

sh ./build.sh

cd ../../

The code is tested with PyTorch 1.9.0 and CUDA 11.1 on Ubuntu 20.04 LTS.
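To confirm that the activated environment matches the tested configuration, a short check like the one below may help. It is only a sketch: `describe_torch_env` is a hypothetical helper, not something shipped with the repository, and it relies solely on standard PyTorch attributes.

```python
import importlib.util


def describe_torch_env():
    """Report the installed torch/CUDA versions, or note that torch is missing."""
    if importlib.util.find_spec("torch") is None:
        return {"torch": None, "cuda": None, "gpu_available": False}
    import torch
    return {
        "torch": torch.__version__,   # version string of the installed wheel
        "cuda": torch.version.cuda,   # CUDA version torch was built against
        "gpu_available": torch.cuda.is_available(),
    }


print(describe_torch_env())
```

If the reported versions differ from PyTorch 1.9.0 and CUDA 11.1, the code may still run, but that configuration is outside what was tested.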

Put the collected and cropped portrait images into ./results/real_imgs/img/.

Download the SAM checkpoint and put it in ./checkpoints/SAM-models/.

Download the checkpoints of the 3D networks and put them in ./checkpoints/recon3D/.
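Before launching the scripts, the three locations above can be checked in one pass. This is a minimal sketch under stated assumptions: `preflight` is a hypothetical helper, the set of accepted image extensions is a guess (the README does not state which formats are supported), and checkpoint file names are not checked because they are not given here.

```python
from pathlib import Path

# Assumption: the accepted input formats are not stated in the README.
IMAGE_EXTS = {".png", ".jpg", ".jpeg"}


def preflight(repo_root="."):
    """Return a list of missing inputs; an empty list means everything is in place."""
    root = Path(repo_root)
    problems = []

    img_dir = root / "results" / "real_imgs" / "img"
    has_images = img_dir.is_dir() and any(
        p.suffix.lower() in IMAGE_EXTS for p in img_dir.iterdir()
    )
    if not has_images:
        problems.append(f"no input images found in {img_dir}")

    for ckpt_dir in (
        root / "checkpoints" / "SAM-models",
        root / "checkpoints" / "recon3D",
    ):
        # Only checks that the folder exists and is non-empty.
        if not ckpt_dir.is_dir() or not any(ckpt_dir.iterdir()):
            problems.append(f"no checkpoint files found in {ckpt_dir}")

    return problems
```

Running `preflight()` from the repository root and printing the result gives a quick list of anything still missing.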

CUDA_VISIBLE_DEVICES=0 python -m scripts.img2hairstep

CUDA_VISIBLE_DEVICES=0 python scripts/get_lmk.py

CUDA_VISIBLE_DEVICES=0 python -m scripts.opt_cam

CUDA_VISIBLE_DEVICES=0 python -m scripts.recon3D

Results will be saved in ./results/real_imgs/.
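The four commands above can also be chained from a single driver so that a failure in one step stops the run. The sketch below is an illustration only: `build_commands` and `run_pipeline` are hypothetical names, and the script assumes it is started from the repository root inside the `hairstep` environment.

```python
import os
import subprocess
import sys

# The four steps, in the order listed above.
STEPS = [
    ["-m", "scripts.img2hairstep"],
    ["scripts/get_lmk.py"],
    ["-m", "scripts.opt_cam"],
    ["-m", "scripts.recon3D"],
]


def build_commands(python=sys.executable):
    """Return the full argument list for each step."""
    return [[python, *args] for args in STEPS]


def run_pipeline(gpu="0", dry_run=False):
    """Run every step in order on the given GPU; stop at the first failure."""
    env = dict(os.environ, CUDA_VISIBLE_DEVICES=str(gpu))
    for cmd in build_commands():
        print("running:", " ".join(cmd))
        if not dry_run:
            # check=True raises CalledProcessError if a step exits non-zero.
            subprocess.run(cmd, env=env, check=True)
```

Calling `run_pipeline(gpu="0")` is equivalent to typing the four commands by hand, and `run_pipeline(dry_run=True)` only prints them.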

- Share the HiSa & HiDa datasets

- Release the code for converting images to HairStep

- Release the code for reconstructing 3D strands from HairStep

- Release the code for computing the HairSale & HairRida metrics

- Release the code for training and data pre-processing (expected within a few days before the end of Feb.; sorry for the delay)

Note: A more compact and efficient sub-module for 3D hair reconstruction has been released, with performance comparable to that of NeuralHDHair* as reported in the paper.

The original hair matting approach was provided by Kuaishou Technology and cannot be released. The SAM-based substitute sometimes fails.

If you find this repository helpful, please cite our paper:

@inproceedings{zheng2023hairstep,

title={Hairstep: Transfer synthetic to real using strand and depth maps for single-view 3d hair modeling},

author={Zheng, Yujian and Jin, Zirong and Li, Moran and Huang, Haibin and Ma, Chongyang and Cui, Shuguang and Han, Xiaoguang},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={12726--12735},

year={2023}

}

This repository builds on several excellent works, including HairNet, PIFu, 3DDFA_V2, SAM and Depth-in-the-wild. Many thanks.