A Survey on Language, Multimodal, and Scientific GPT Models: Examining User-Friendly and Open-Sourced Large GPT Models

Continuously updating

The original paper is available on arXiv.

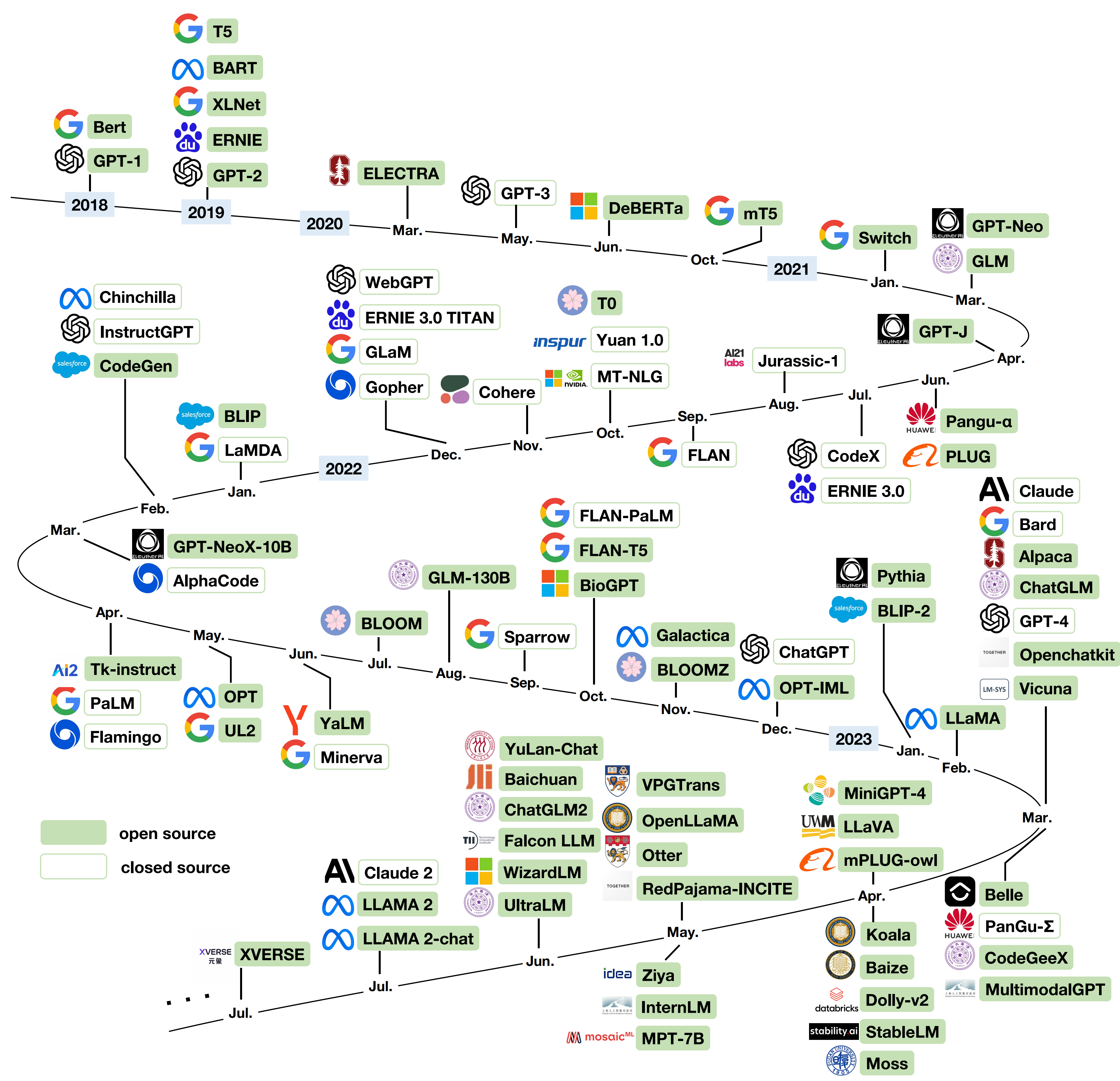

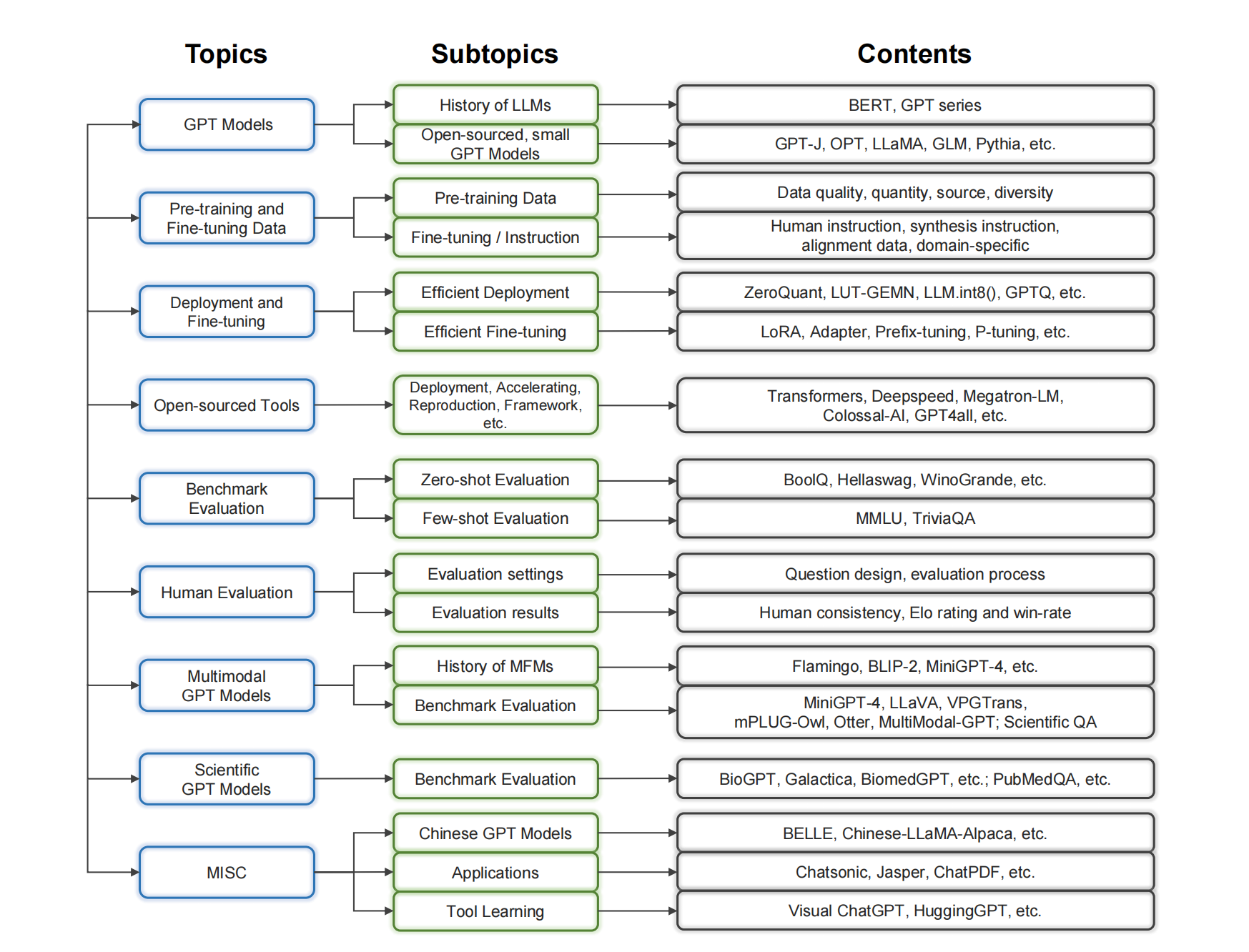

The advent of GPT models has brought about a significant transformation in the field of NLP. These models, such as GPT-4, demonstrate exceptional capabilities across a wide range of NLP tasks. However, despite their impressive capabilities, large GPT models have inherent limitations that restrict their widespread adoption, usability, and fine-tuning. User-friendly, relatively small, and open-sourced alternative GPT models are needed to overcome these limitations while retaining high performance. In this survey, we examine open-sourced alternatives to large GPTs, focusing on user-friendly and relatively small models (around 10B parameters) that are easier to deploy and access.

- Investigate the architecture, design principles, and trade-offs of user-friendly and relatively small alternative GPT models, focusing on their ability to overcome the challenges posed by large GPT models.

- Present the data collection and analyze the pre-training data source, data quality, quantity, diversity, and finetuning data including instruction data, alignment data, and also the domain-specific data for domain-specific models.

- Survey the techniques for efficient deployment and fine-tuning of these GPT models.

- Introduce ongoing open-source projects and initiatives for user-friendly GPT model reproduction and deployment.

- Provide a thorough analysis of benchmark evaluations and conduct human evaluations of these relatively small GPT models, offering recommendations for real-world use grounded in human judgments.

- Explore the extension of GPT models to multimodal settings, focusing on models that integrate NLP with computer vision, with special attention to user-friendly scientific GPT models and the biomedical domain.

The overview of the content is shown in Figure 1.

Related papers/links for open LLMs (list is continuously updated)

Language Domain

- Exploring the limits of transfer learning with a unified text-to-text transformer. JMLR 2020. [paper] [code & models] [Huggingface models]

- mT5: A massively multilingual pre-trained text-to-text transformer. NAACL 2021. [paper] [code & models] [Huggingface models]

- GPT-Neo: Large Scale Autoregressive Language Modeling with Mesh-Tensorflow. [code & models] [Huggingface models]

- GPT-NeoX-20B: An Open-Source Autoregressive Language Model. arXiv 2022. [paper] [code] [original models] [Huggingface models]

- GPT-J-6B: A 6 Billion Parameter Autoregressive Language Model. [code & models] [Huggingface models]

- OPT: Open Pre-trained Transformer Language Models. arXiv 2022. [paper] [code] [Huggingface models]

- BLOOM: A 176B-Parameter Open-Access Multilingual Language Model. arXiv 2022. [paper] [Huggingface models]

- Crosslingual Generalization through Multitask Finetuning. arXiv 2022. [paper] [Huggingface models]

- GLM: General Language Model Pretraining with Autoregressive Blank Infilling. ACL 2022. [paper] [code & models] [Huggingface models]

- GLM-130B: An Open Bilingual Pre-trained Model. ICLR 2023. [paper] [code & models]

- ChatGLM-6B [code & models] [Huggingface models]

- ChatGLM2-6B [code & models] [Huggingface models]

- LLaMA: Open and Efficient Foundation Language Models. arXiv 2023. [paper] [code & models]

- OpenLLaMA: An Open Reproduction of LLaMA. [code & models]

- Stanford Alpaca: An Instruction-following LLaMA Model. [code & models]

- Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90% ChatGPT Quality. [blog] [code & models]

- StableLM: Stability AI Language Models. [code & models]

- Baize. [code & models]

- Koala: A Dialogue Model for Academic Research. [blog] [code & models]

- WizardLM: Empowering Large Pre-Trained Language Models to Follow Complex Instructions. [code & models]

- Large-scale, Informative, and Diverse Multi-round Dialogue Data, and Models. [code & models]

- YuLan-Chat: An Open-Source Bilingual Chatbot. [code & models]

- Pythia: Interpreting Transformers Across Time and Scale. arXiv 2023. [paper] [code & models]

- Dolly. [code & models]

- OpenChatKit. [code & models]

- BELLE: Be Everyone's Large Language model Engine. [code & models]

- RWKV: Reinventing RNNs for the Transformer Era. arXiv 2023. [paper] [code & models] [Huggingface models]

- ChatRWKV. [code & models]

- MOSS. [code & models]

- RedPajama-INCITE. [blog] [Huggingface models]

- Introducing MPT-7B: A New Standard for Open-Source, Commercially Usable LLMs. [blog] [code] [Huggingface models]

- Introducing Falcon LLM. [blog] [Huggingface models]

- InternLM. [code & models]

- Baichuan-7B. [code & models]

- Llama 2: Open Foundation and Fine-Tuned Chat Models. arXiv 2023. [paper] [code & models]

- Introducing Qwen-7B: Open foundation and human-aligned models. [code & models]

- XVERSE-13B. [code & models]

Multimodal Domain

- Flamingo: a Visual Language Model for Few-Shot Learning. NeurIPS 2022. [paper]

- BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models. arXiv 2023. [paper] [code]

- MiniGPT-4: Enhancing Vision-Language Understanding with Advanced Large Language Models. arXiv 2023. [paper] [website, code & models]

- Visual Instruction Tuning. arXiv 2023. [paper] [website, code & models]

- mPLUG-Owl: Modularization Empowers Large Language Models with Multimodality. arXiv 2023. [paper] [code & models]

- Transfer Visual Prompt Generator across LLMs. arXiv 2023. [paper] [website, code & models]

- Otter: A Multi-Modal Model with In-Context Instruction Tuning. arXiv 2023. [paper] [code & models]

- MultiModal-GPT: A Vision and Language Model for Dialogue with Humans. arXiv 2023. [paper] [code & models]

Scientific Domain

- BioGPT: Generative Pre-trained Transformer for Biomedical Text Generation and Mining. Bioinformatics 2022. [paper] [code & models]

- Galactica: A Large Language Model for Science. arXiv 2022. [paper] [models]

- BiomedGPT: A Unified and Generalist Biomedical Generative Pre-trained Transformer for Vision, Language, and Multimodal Tasks. arXiv 2023. [paper] [code & models]

- MolXPT: Wrapping Molecules with Text for Generative Pre-training. ACL 2023. [paper] [code & models]

- Translation between Molecules and Natural Language. EMNLP 2022. [paper] [code & models]

Table 1. Statistical overview of open large language models in recent years, categorized by base model.

| Model | #Param | Backbone | Release Date | Training Data Size |
|---|---|---|---|---|
| T5 (enc-dec) [github] | 60M, 220M, 770M, 3B, 11B | Base Model | 2019-10 | 1T tokens |
| mT5 (enc-dec) [github] | 300M, 580M, 1.2B, 3.7B, 13B | Base Model | 2020-10 | 1T tokens |
| GPT-Neo [github] | 125M, 350M, 1.3B, 2.7B | Base Model | 2021-03 | 825GB |
| GPT-NeoX [github] | 20B | Base Model | 2022-02 | 825GB |
| GPT-J [github] | 6B | Base Model | 2021-06 | 825GB |
| OPT [github] | 125M, 1.3B, 2.7B, 6.7B, 13B, 30B, 66B, 175B | Base Model | 2022-05 | 180B tokens |
| BLOOM | 560M, 1.1B, 1.7B, 3B, 7.1B, 176B | Base Model | 2022-07 | 366B tokens |
| BLOOMZ | 560M, 1.1B, 1.7B, 3B, 7.1B, 176B | BLOOM | 2022-11 | - |
| GLM [github] | 110M, 335M, 410M, 515M, 2B, 10B, 130B | Base Model | 2021-03 | English Wikipedia |
| GLM-130B [github] | 130B | Base Model | 2022-08 | - |
| ChatGLM [github] | 6B | GLM | 2023-03 | - |
| ChatGLM2 [github] | 6B | GLM | 2023-06 | - |
| LLaMA [github] | 7B, 13B, 33B, 65B | Base Model | 2023-02 | 1.4T tokens |
| OpenLLaMA [github] | 3B, 7B | Reproduction of LLaMA | 2023-05 | - |
| Alpaca [github] | 7B | LLaMA | 2023-03 | 52K |
| Vicuna [github] | 7B, 13B | LLaMA | 2023-03 | 70K |
| StableVicuna [github] | 13B | LLaMA (via Vicuna) | - | - |
| Baize [github] | 7B, 13B, 30B | LLaMA | 2023-04 | 54K/57K/47K |
| Koala [github] | 13B | LLaMA | 2023-04 | - |
| WizardLM [github] | 7B, 13B, 30B | LLaMA | 2023-06 | 250K/70K |
| UltraLM [github] | 13B | LLaMA | 2023-06 | - |
| Pythia [github] | 70M, 160M, 410M, 1B, 1.4B, 2.8B, 6.9B, 12B | Base Model | 2023-01 | 299.9B tokens/207B tokens |
| Dolly-v2 [github] | 12B | Pythia | 2023-04 | ~15K |
| OpenChatKit [github] | 7B | Pythia | 2023-03 | - |
| BELLE-7B [github] | 7B | Pythia | 2023-03 | 1.5M |
| StableLM-Alpha [github] | 3B, 7B | Base Model | 2023-04 | 1.5T tokens |
| StableLM-Tuned-Alpha [github] | 7B | StableLM | 2023-04 | - |
| RWKV [github] | 169M, 430M, 1.5B, 3B, 7B, 14B | Base Model | - | 825GB |
| ChatRWKV [github] | 7B, 14B | RWKV | 2022-12 | - |
| moss-moon-003-base [github] | 16B | Base Model | 2023-04 | 700B tokens |
| moss-moon-003-sft [github] | 16B | moss-moon-003-base | 2023-04 | 1.1M |
| RedPajama-INCITE | 3B, 7B | Base Model | 2023-05 | 1.2T tokens |
| MPT-7B [github] | 7B | Base Model | 2023-05 | 1T tokens |
| MPT-7B-Chat [github] | 7B | MPT-7B | 2023-05 | - |
| Falcon LLM | 7B, 40B | Base Model | 2023-06 | 1T tokens |
| InternLM [github] | 7B | Base Model | 2023-06 | Trillions of tokens |
| InternLM Chat [github] | 7B | InternLM | 2023-06 | - |
| Baichuan [github] | 7B | Base Model | 2023-06 | 1.2T tokens |
| Llama 2 [github] | 7B, 13B, 70B | Base Model | 2023-07 | 2T tokens |
| Llama 2-Chat [github] | 7B, 13B, 70B | Llama 2 | 2023-07 | 27,540 instruction-tuning samples; 2,919,326 human preference samples |
| Qwen [github] | 7B | Base Model | 2023-08 | 2.2T tokens |
| Qwen-Chat [github] | 7B | Qwen | 2023-08 | - |
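To make the #Param column concrete: the memory needed just to store a model's weights is roughly its parameter count times the bytes used per parameter. The minimal sketch below assumes weights only and ignores activations, the KV cache, optimizer states, and framework overhead, so real usage is higher; the function and dictionary names are illustrative, and the sizes are the nominal ones from the table. It shows why models near 10B parameters are much easier to deploy than 70B ones, especially after quantization.

```python
# Rough estimate of the memory needed just to hold model weights.
# Assumption: weights only; activations, KV cache, optimizer states and
# framework overhead are ignored, so real memory usage is higher.

BYTES_PER_PARAM = {
    "fp32": 4.0,
    "fp16/bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Nominal parameter counts taken from the #Param column of Table 1.
    for name, params in [("7B", 7e9), ("13B", 13e9), ("70B", 70e9)]:
        row = ", ".join(
            f"{p}: {weight_memory_gb(params, p):.1f} GB" for p in BYTES_PER_PARAM
        )
        print(f"{name} -> {row}")
```

For example, a 7B model needs about 14 GB in fp16 but about 3.5 GB at 4 bits, which is the gap that the quantization methods listed below aim to close without losing much accuracy.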

Datasets

- C4 (https://www.tensorflow.org/datasets/catalog/c4), mC4 (https://www.tensorflow.org/datasets/catalog/c4#c4multilingual_nights_stay)

- The Pile (https://pile.eleuther.ai/)

- ROOTS corpus

- xP3 (extended from P3) (https://huggingface.co/datasets/bigscience/xP3)

- BooksCorpus

- English CommonCrawl, GitHub, Wikipedia, Gutenberg, Books3, Stack Exchange

- Quora, StackOverflow, MedQuAD

- ShareGPT (https://sharegpt.com), HC3 (https://huggingface.co/datasets/Hello-SimpleAI/HC3)

- Stanford's Alpaca (https://huggingface.co/datasets/tatsu-lab/alpaca), Nomic AI's GPT4All, Databricks Labs' Dolly, and Anthropic's HH

- UltraChat

- OIG (https://laion.ai/blog/oig-dataset/) dataset

- BELLE's Chinese Dataset (https://github.com/LianjiaTech/BELLE/tree/main/data/1.5M)

- RedPajama-Data
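Instruction datasets such as Stanford's Alpaca store each example as an `instruction`, an optional `input`, and an `output`, which are rendered into a prompt before supervised fine-tuning. The sketch below shows that step; the template strings follow the prompt template published in the Stanford Alpaca repository as we understand it (check the repository for the exact wording), and `build_example` is a hypothetical helper name.

```python
# Sketch: turn Alpaca-style records (instruction / input / output) into
# (prompt, target) pairs for supervised instruction tuning.
# Assumption: template wording modeled on the Stanford Alpaca repository.

PROMPT_WITH_INPUT = (
    "Below is an instruction that describes a task, paired with an input that "
    "provides further context. Write a response that appropriately completes "
    "the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Input:\n{input}\n\n### Response:"
)
PROMPT_NO_INPUT = (
    "Below is an instruction that describes a task. Write a response that "
    "appropriately completes the request.\n\n"
    "### Instruction:\n{instruction}\n\n### Response:"
)


def build_example(record: dict) -> tuple[str, str]:
    """Return (prompt, target); the training loss is usually applied to the target only."""
    # Records with an empty or missing "input" use the shorter template.
    template = PROMPT_WITH_INPUT if record.get("input") else PROMPT_NO_INPUT
    # str.format ignores the unused "input" keyword for the no-input template.
    prompt = template.format(
        instruction=record["instruction"], input=record.get("input", "")
    )
    return prompt, record["output"]


if __name__ == "__main__":
    sample = {
        "instruction": "Translate the sentence into French.",
        "input": "Good morning.",
        "output": "Bonjour.",
    }
    prompt, target = build_example(sample)
    print(prompt)
    print(target)
```

Keeping the prompt and target separate makes it straightforward to mask the prompt tokens out of the loss, a common choice in instruction tuning.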

Related papers (quantization)

- ZeroQuant: Efficient and Affordable Post-Training Quantization for Large-Scale Transformers. arXiv 2022. [paper]

- LUT-GEMM: Quantized Matrix Multiplication based on LUTs for Efficient Inference in Large-Scale Generative Language Models. arXiv 2022. [paper]

- LLM.int8(): 8-bit Matrix Multiplication for Transformers at Scale. NeurIPS 2022. [paper]

- GPTQ: Accurate Post-Training Quantization for Generative Pre-trained Transformers. ICLR 2023. [paper]

- SmoothQuant: Accurate and Efficient Post-Training Quantization for Large Language Models. ICML 2023. [paper]

- LLM-QAT: Data-Free Quantization Aware Training for Large Language Models. arXiv 2023. [paper]
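Most of the post-training methods above start from, and improve on, plain round-to-nearest quantization. The sketch below shows only that baseline: symmetric absmax INT8 quantization with one scale per weight row. It does not implement any listed paper's method (no outlier decomposition as in LLM.int8(), no second-order error compensation as in GPTQ, no activation smoothing as in SmoothQuant), and the function names are illustrative.

```python
# Sketch: symmetric per-row ("vector-wise") absmax INT8 quantization of a
# weight matrix, i.e. plain round-to-nearest post-training quantization.
# Baseline only: no outlier handling, error compensation, or smoothing.


def quantize_row(row: list[float]) -> tuple[list[int], float]:
    """Map floats to int8 codes in [-127, 127] using one scale per row."""
    absmax = max(abs(x) for x in row)
    scale = absmax / 127.0 if absmax > 0 else 1.0  # avoid dividing by zero
    codes = [max(-127, min(127, round(x / scale))) for x in row]
    return codes, scale


def dequantize_row(codes: list[int], scale: float) -> list[float]:
    """Recover approximate floats from int8 codes."""
    return [c * scale for c in codes]


def quantize_matrix(weights: list[list[float]]) -> list[tuple[list[int], float]]:
    """Quantize each row independently (one scale per output row)."""
    return [quantize_row(r) for r in weights]


if __name__ == "__main__":
    W = [[0.12, -0.50, 0.33, 0.01], [2.0, -1.5, 0.25, -0.75]]
    for row, (codes, scale) in zip(W, quantize_matrix(W)):
        restored = dequantize_row(codes, scale)
        max_err = max(abs(a - b) for a, b in zip(row, restored))
        # Rounding error per element is at most scale / 2.
        print(codes, f"scale={scale:.5f}", f"max_abs_error={max_err:.5f}")
```

Because one large value sets the scale for a whole row, outliers waste most of the 8-bit range; that is the failure mode LLM.int8() and SmoothQuant address.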

Related papers (parameter-efficient fine-tuning)

- Parameter-Efficient Transfer Learning for NLP. ICML 2019. [paper]

- LoRA: Low-Rank Adaptation of Large Language Models. ICLR 2022. [paper]

- The power of scale for parameter-efficient prompt tuning. EMNLP 2021. [paper]

- GPT Understands, Too. arXiv 2021. [paper]

- Prefix-Tuning: Optimizing Continuous Prompts for Generation. ACL 2021. [paper]

- P-Tuning v2: Prompt Tuning Can Be Comparable to Fine-tuning Universally Across Scales and Tasks. ACL 2022. [paper]

- QLoRA: Efficient Finetuning of Quantized LLMs. arXiv 2023. [paper]
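LoRA keeps the pre-trained weight W0 (d × k) frozen and learns a low-rank update, so a layer computes h = W0 x + (α/r) B A x with B of shape d × r, A of shape r × k, and r much smaller than d and k. The pure-Python sketch below shows that forward pass and the resulting parameter savings. The class and function names are our own, and the standard deviation used to initialize A is an illustrative assumption (the LoRA paper initializes A with a random Gaussian and B with zeros, so training starts from the unmodified model).

```python
import random


def matvec(M: list[list[float]], x: list[float]) -> list[float]:
    """Dense matrix-vector product in pure Python."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


class LoRALinear:
    """Frozen weight W0 (d x k) plus a trainable low-rank update B @ A of rank r."""

    def __init__(self, W0: list[list[float]], r: int, alpha: float, seed: int = 0):
        rng = random.Random(seed)
        d, k = len(W0), len(W0[0])
        self.W0 = W0  # pre-trained weight, kept frozen during fine-tuning
        # A (r x k): small random Gaussian init; std 0.02 is an assumption.
        self.A = [[rng.gauss(0.0, 0.02) for _ in range(k)] for _ in range(r)]
        # B (d x r): zero init, so B @ A = 0 and the layer starts equal to W0.
        self.B = [[0.0] * r for _ in range(d)]
        self.scaling = alpha / r

    def forward(self, x: list[float]) -> list[float]:
        base = matvec(self.W0, x)
        update = matvec(self.B, matvec(self.A, x))
        return [b + self.scaling * u for b, u in zip(base, update)]

    def trainable_params(self) -> int:
        # Only A and B are trained; W0 is not counted.
        return len(self.A) * len(self.A[0]) + len(self.B) * len(self.B[0])


def lora_param_count(d: int, k: int, r: int) -> int:
    """Trainable parameters of a rank-r adapter on a d x k weight: r * (d + k)."""
    return r * (d + k)


if __name__ == "__main__":
    layer = LoRALinear([[1.0, 2.0], [3.0, 4.0]], r=1, alpha=2.0)
    print(layer.forward([1.0, 1.0]))  # equals W0 @ x at initialization
    # Hypothetical 4096 x 4096 projection with rank 8.
    d = k = 4096
    full, lora = d * k, lora_param_count(d, k, r=8)
    print(full, lora, f"{100 * lora / full:.2f}%")  # 16777216 65536 0.39%
```

After training, the update can be merged into the weight (W0 + (α/r) B A), so inference needs no extra matrix multiplications. QLoRA trains the same kind of adapter while keeping the frozen base model in 4-bit precision.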

TABLE 5. Overview of open-source efforts and tool development

| Category | Tool | Application | Released by | Link |
|---|---|---|---|---|
| Deployment | Transformers | LLM training and deployment | Hugging Face | https://huggingface.co/transformers |
| | Colossal-AI | Unified system to train and deploy large-scale models | HPC-AI Tech | https://colossalai.org/ |
| | GPT4All | Training and deployment of large, personalized language models on common hardware | Nomic AI | https://gpt4all.io/ |
| | PandaLM | System providing automated and reproducible comparisons among various LLMs | Westlake University | https://github.com/WeOpenML/PandaLM |
| | MLC LLM | Solution allowing LLMs to be deployed natively | MLC AI | https://mlc.ai/mlc-llm/ |
| Accelerating | DeepSpeed | Accelerating training and inference of large-scale models | Microsoft | https://github.com/microsoft/DeepSpeed |
| | Megatron-LM | Accelerating training and inference of large-scale models | NVIDIA | https://github.com/NVIDIA/Megatron-LM |
| Reproduction | minGPT | Clean, interpretable, and educational re-implementation of GPT | Andrej Karpathy | https://github.com/karpathy/minGPT |
| | RedPajama | An effort to produce reproducible and fully open language models | ETH Zurich | https://together.xyz/blog/redpajama |
| Framework | LangChain | Framework for integrating LLMs with other computational sources and knowledge | LangChain | https://python.langchain.com/ |
| | xTuring | Framework providing fast, efficient, and simple fine-tuning of LLMs | Stochastic | https://github.com/stochasticai/xturing |
| Evaluation | Open LLM Leaderboard | LM evaluation leaderboard | Hugging Face | https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard |
| Framework | Scikit-LLM | Framework integrating LLMs into scikit-learn for enhanced text analysis tasks | Tractive | https://github.com/iryna-kondr/scikit-llm |
| | AlpacaFarm | Simulation framework for methods that learn from human feedback | Stanford | https://github.com/tatsu-lab/alpaca_farm/ |
| | h2oGPT | LLM fine-tuning framework and chatbot UI with document question-answering capabilities | H2O.ai | https://github.com/h2oai/h2ogpt |
| Software | Open-Assistant | Customized and personalized chat-based assistant | LAION AI | https://github.com/LAION-AI/Open-Assistant |
| | MetaGPT | Multi-agent framework for tackling tasks with multiple agents | Open-Source Community | https://github.com/geekan/MetaGPT |
| Finetuning | PEFT | Library for fine-tuning LLMs by updating only a subset of parameters | Hugging Face | https://huggingface.co/docs/peft |

Coming soon...

TABLE 16. ChatGPT Alternatives on Different Applications

| Field | Software | Backbone | URL |
|---|---|---|---|
| Writing | ChatSonic | GPT-4 | https://writesonic.com/chat |
| | Jasper Chat | GPT-3.5 and others | https://www.jasper.ai/chat |
| Search Engines | ChatSonic on Opera | GPT-4 | https://writesonic.com/chatsonic-opera |
| | NeevaAI | ChatGPT | https://neeva.com/ |
| Coding | Copilot | Codex | https://github.com/features/copilot |
| | Tabnine | GPT-2 | https://www.tabnine.com/ |
| | CodeWhisperer | - | https://aws.amazon.com/cn/codewhisperer |
| Language Learning | Elsa | - | https://elsaspeak.com/en |
| | DeepL Write | - | https://www.deepl.com/translator |
| Research | Elicit | - | https://elicit.org |
| | ChatPDF | ChatGPT | https://www.chatpdf.com |
| | Copilot in Azure Quantum | GPT-4 | https://quantum.microsoft.com/ |
| Productivity (team work) | CoGram | - | https://www.cogram.com |
| | Otter | - | https://otter.ai |
| | Chatexcel | - | https://chatexcel.com |
| | AI Anywhere | ChatGPT, GPT-4 | https://www.ai-anywhere.com/#/dashboard |
| Conversation | Replika | A model with 774M parameters | https://replika.com |
| | Character AI | GPT-4 | https://beta.character.ai |
| | Poe | Multiple models (GPT-4, LLaMA, ...) | https://poe.com |
| Building customized AI | Botsonic AI chatbot | GPT-4 | https://writesonic.com/botsonic |

If you find our paper/repository useful, please kindly cite our paper.

@misc{gao2023examining,
  title={Examining User-Friendly and Open-Sourced Large GPT Models: A Survey on Language, Multimodal, and Scientific GPT Models},
  author={Kaiyuan Gao and Sunan He and Zhenyu He and Jiacheng Lin and QiZhi Pei and Jie Shao and Wei Zhang},
  year={2023},
  eprint={2308.14149},
  archivePrefix={arXiv},
  primaryClass={cs.CL}
}