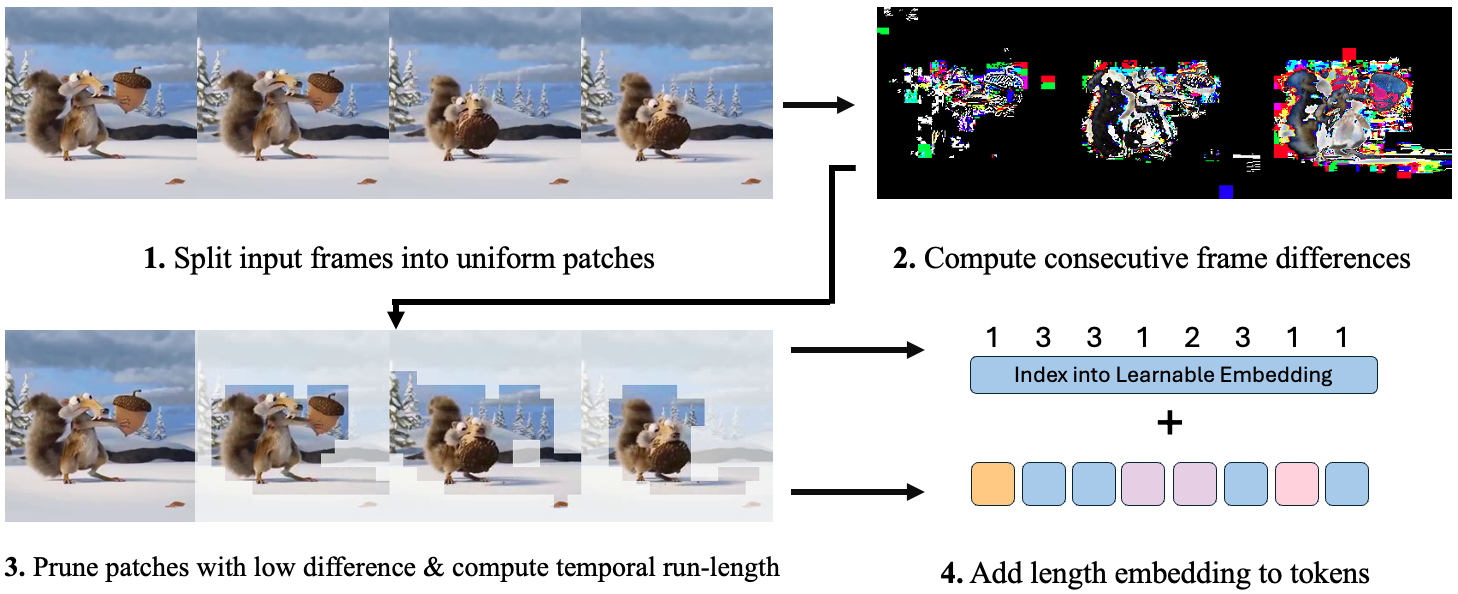

Official code for "Don't Look Twice: Faster Video Transformers with Run-Length Tokenization" at NeurIPS 2024 (spotlight).

Rohan Choudhury, Guanglei Zhu, Sihan Liu, Koichiro Niinuma, Kris Kitani, László Jeni

Robotics Institute, Carnegie Mellon University

Clone our repo recursively:

git clone --recursive https://github.com/rohan-choudhury/rlt.git

cd rlt

The --recursive flag is important because it ensures that the submodules (such as third_party/decord) are cloned as well.
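A clone made without --recursive typically leaves the submodule directories empty. The snippet below is a small sketch for checking this. The helper name submodule_is_populated is hypothetical and not part of this repo, and it assumes you run it from the repository root. If the directory turns out to be empty, running git submodule update --init --recursive fetches the missing submodules.

```python
from pathlib import Path


def submodule_is_populated(repo_root: str, submodule: str = "third_party/decord") -> bool:
    """Return True if the submodule directory exists and has at least one entry.

    Hypothetical helper: an empty or missing directory usually means the repo
    was cloned without --recursive.
    """
    path = Path(repo_root) / submodule
    return path.is_dir() and any(path.iterdir())


if __name__ == "__main__":
    if submodule_is_populated("."):
        print("Submodules look populated.")
    else:
        print("third_party/decord is empty; run: git submodule update --init --recursive")
```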

We use mamba for environment management. Set up the environment with the following:

mamba env create -f environment.yaml

mamba activate rlt

We use AVION's fast decode ops for efficient video decoding. Please refer to AVION's instructions for setting up Decord; we restate them briefly here.

Go to the decord directory and run the following commands to compile the library:

cd third_party/decord

mkdir build && cd build

cmake .. -DCMAKE_BUILD_TYPE=Release

make

Then, set up the Python bindings:

cd ../python

python3 setup.py install --user

If you run into errors or path issues during installation, please refer to the Decord installation instructions. Alternatively, you can run pip install decord to install the standard version of the library without the faster cropping operations, but you will likely run into data-loading bottlenecks.
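After installing the Python bindings, you can quickly confirm that Python can see Decord using the sketch below. It relies only on the standard Decord API (decord.VideoReader, get_batch, asnumpy). The helper names are hypothetical and not part of this repo. The check only tells you that some decord package is importable; it cannot tell the AVION build apart from the standard pip version.

```python
import importlib.util


def decord_is_installed() -> bool:
    """Return True if a `decord` package can be found on the Python path."""
    return importlib.util.find_spec("decord") is not None


def read_first_frames(video_path: str, num_frames: int = 4):
    """Decode the first `num_frames` frames of a video with Decord.

    Returns a uint8 NumPy array of shape (T, H, W, 3).
    """
    import decord  # imported lazily so this module loads even without Decord

    reader = decord.VideoReader(video_path, num_threads=1)
    indices = list(range(min(num_frames, len(reader))))
    return reader.get_batch(indices).asnumpy()


if __name__ == "__main__":
    print("decord found:", decord_is_installed())
```

Calling read_first_frames on any local .mp4 file is a simple end-to-end smoke test of the compiled library.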

We provide detailed instructions for downloading and setting up the Kinetics-400 and Something-Something V2 datasets in DATASETS.md.

We provide checkpoints for different model scales that can be used to measure the effect of RLT out of the box. These checkpoints come from the original VideoMAE-2 repo and are fine-tuned on Kinetics. To fine-tune your own models, we suggest starting from the checkpoints in the original VideoMAE-2 repo that have not been fine-tuned. If you would like any additional checkpoints, please open an issue and we will respond as soon as possible.

| Model | Dataset | Top-1 Acc. (%) w/o RLT | Top-1 Acc. (%) with RLT | Fine-tuned Checkpoint |
|---|---|---|---|---|
| ViT-B/16 | Kinetics-400 | 80.9 | 80.9 | Download Link |
| ViT-L/16 | Kinetics-400 | 84.7 | 84.7 | Download Link |
| ViT-H/16 | Kinetics-400 | 86.2 | 86.2 | Download Link |

To run standard evaluation on Kinetics, use the following command:

# eval on Kinetics

python src/train.py experiment=val_vit_mae.yaml

To run evaluation with RLT turned on, use the following command:

# eval on Kinetics with RLT

python src/train.py experiment=val_vit_mae.yaml model.tokenizer_cfg.drop_policy='rlt'

Similarly, to run training, use the following command:

# train on Kinetics

python src/train.py experiment=train_vit_mae.yaml
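The evaluation and training commands above differ only in the experiment config and the optional RLT override, so they are easy to script. The helper below is a hypothetical sketch: build_command and run_eval_with_and_without_rlt are not part of the repo. It assembles the same src/train.py invocations. The shell strips the quotes around 'rlt', so when no shell is involved the override is passed simply as drop_policy=rlt.

```python
import subprocess
import sys
from typing import List

# Same Hydra override as the RLT evaluation command.
RLT_OVERRIDE = "model.tokenizer_cfg.drop_policy=rlt"


def build_command(experiment: str, use_rlt: bool = False) -> List[str]:
    """Assemble a src/train.py call for a given experiment config (hypothetical helper)."""
    # sys.executable runs the script with the interpreter of the active environment.
    cmd = [sys.executable, "src/train.py", f"experiment={experiment}"]
    if use_rlt:
        cmd.append(RLT_OVERRIDE)
    return cmd


def run_eval_with_and_without_rlt(experiment: str = "val_vit_mae.yaml") -> None:
    """Run the baseline evaluation, then the RLT evaluation, from the repo root."""
    for use_rlt in (False, True):
        subprocess.run(build_command(experiment, use_rlt), check=True)
```

Passing use_rlt=True together with "train_vit_mae.yaml" gives the training run with RLT enabled from the command line.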

By default, RLT is not enabled. To use it, either set the flag explicitly on the command line, as in the evaluation step, or set it directly in the config file. You can also enable the length encoding scheme from the paper by modifying the config file accordingly. Finally, we provide support for logging with Weights & Biases, but it is turned off by default. To turn it on, uncomment the logger: wandb line in the config file.
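For intuition about what the RLT drop policy and the length encoding operate on, here is a minimal NumPy sketch of run-length tokenization. It is not the repository's implementation. It makes three assumptions: patches are taken from raw pixels, a hypothetical threshold of 0.1 is applied to the mean absolute difference, and each frame is one time step (any tubelet grouping used by the video ViT is ignored). Each patch is compared with the patch at the same spatial position in the previous frame. Patches that barely change are dropped, and each kept patch records the length of the run it stands for, which is the quantity a length encoding would embed.

```python
from typing import Tuple

import numpy as np


def run_length_tokenize(
    video: np.ndarray, patch: int = 16, threshold: float = 0.1
) -> Tuple[np.ndarray, np.ndarray]:
    """Sketch of run-length token dropping (not the repo's code).

    video: float array (T, H, W, C) with H and W divisible by `patch`.
    threshold: hypothetical cutoff on the mean absolute pixel difference.
    Returns:
      keep    - bool (T, Hp, Wp), True for tokens that are kept.
      lengths - int (T, Hp, Wp), run length for kept tokens, 0 for dropped ones.
    """
    t, h, w, c = video.shape
    hp, wp = h // patch, w // patch
    # (T, H, W, C) -> (T, Hp, Wp, patch * patch * C): one flat vector per patch.
    patches = video.reshape(t, hp, patch, wp, patch, c).transpose(0, 1, 3, 2, 4, 5)
    patches = patches.reshape(t, hp, wp, -1)

    keep = np.ones((t, hp, wp), dtype=bool)  # the first frame is always kept
    # Mean absolute difference to the same spatial patch one frame earlier.
    diff = np.abs(patches[1:] - patches[:-1]).mean(axis=-1)
    keep[1:] = diff >= threshold  # near-static (repeated) patches are dropped

    # Walk backwards in time: each kept token absorbs the dropped run after it.
    lengths = np.zeros((t, hp, wp), dtype=np.int64)
    run = np.zeros((hp, wp), dtype=np.int64)
    for i in range(t - 1, -1, -1):
        run += 1
        lengths[i] = np.where(keep[i], run, 0)
        run = np.where(keep[i], 0, run)
    return keep, lengths
```

Indexing the flattened patches with the keep mask shortens the token sequence, and lengths[keep] gives the matching run lengths. By construction these sum to T * Hp * Wp, the original token count.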

If you use our code or the paper, please cite our work:

@inproceedings{choudhury2024rlt,

author = {Rohan Choudhury and Guanglei Zhu and Sihan Liu and Koichiro Niinuma and Kris Kitani and László Jeni},

title = {Don't Look Twice: Faster Video Transformers with Run-Length Tokenization},

booktitle = {Advances in Neural Information Processing Systems},

year = {2024}

}