GSLB is a Graph Structure Learning (GSL) library and benchmark based on DGL and PyTorch. We integrate diverse datasets and state-of-the-art GSL models.

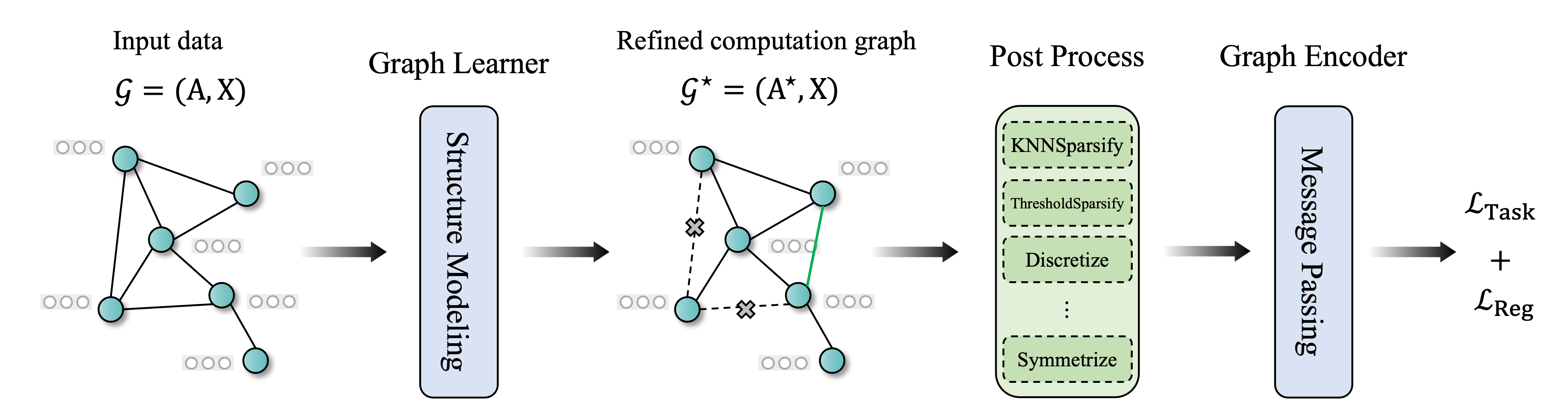

Graph Structure Learning (GSL) aims to optimize the parameters of Graph Neural Networks (GNNs) and the computation graph structure simultaneously. GSL methods start with input features and an optional initial graph structure. The corresponding computation graph is iteratively refined through a structure learning module. With the refined computation graph, GNNs are used to generate graph representations. The parameters of the GNNs and the structure learning module are then updated, either jointly or alternately, until a preset stopping condition is satisfied.
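To make the data flow above concrete, here is a minimal pure-Python sketch of one "refine the structure, then propagate" pass. It is a toy under stated assumptions, not any model shipped with GSLB: the helper names (`refine_structure`, `propagate`), the cosine-similarity refinement, and the `mix` and `threshold` parameters are all hypothetical, and nothing is trained here.

```python
import math


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def refine_structure(features, adj, mix=0.5, threshold=0.5):
    """Blend the initial adjacency with a thresholded feature-similarity graph.

    Hypothetical stand-in for a structure learning module.
    """
    n = len(features)
    refined = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            sim = cosine(features[i], features[j])
            learned = sim if sim >= threshold else 0.0
            refined[i][j] = mix * adj[i][j] + (1.0 - mix) * learned
    return refined


def propagate(features, adj):
    """One weighted mean-aggregation step over the graph, with self-loops."""
    n, d = len(features), len(features[0])
    out = []
    for i in range(n):
        weights = [adj[i][j] for j in range(n)]
        weights[i] = 1.0  # self-loop so every node keeps its own signal
        total = sum(weights)
        out.append([sum(weights[j] * features[j][k] for j in range(n)) / total
                    for k in range(d)])
    return out


features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]
adj = [[0, 0, 1], [0, 0, 0], [1, 0, 0]]  # the initial graph links nodes 0 and 2 only
refined = refine_structure(features, adj)
hidden = propagate(features, refined)
```

In this toy, the refined graph gains an edge between the two similar nodes 0 and 1 while the original 0-2 edge is kept at reduced weight. A real GSL method would additionally learn the refinement and the GNN weights from a training loss.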

For more information about GSL, please refer to our paper, survey, and paper collection.

GSLB requires the following dependencies to be installed beforehand:

- Python 3.8+

- PyTorch 1.13

- DGL 1.1+

- SciPy 1.9+

- Scikit-learn

- NumPy

- NetworkX

- ogb

- tqdm

- easydict

- PyYAML

- DeepRobust

To install GSLB with pip, simply run:

pip install GSLB

Then, you can import GSL from your current environment.

If you want to quickly run an existing GSL model on a graph dataset:

python main.py --dataset dataset_name --model model_name --num_trails --gpu_num 0 --use_knn --k 5 --use_mettack --sparse --metric acc --ptb_rate 0. --drop_rate 0. --add_rate 0.

Optional arguments:

--dataset : the name of graph dataset

--model : the name of GSL model

--ntrail : repetition count of experiments

--use_knn : whether to use a kNN graph instead of the original graph

--k : the number of nearest neighbors

--drop_rate : the probability of random edge deletion

--add_rate : the probability of random edge addition

--mask_feat_rate : the probability of randomly masking features

--use_mettack : whether to use the structure after being attacked by mettack

--ptb_rate : the perturbation rate

--metric : the evaluation metric

--gpu_num : the selected GPU number
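As a rough illustration of what the `--use_knn`, `--k`, `--drop_rate`, and `--add_rate` options describe, the sketch below builds a kNN graph from node features and then randomly perturbs its edges. This is an assumption-laden toy: the function names, the cosine similarity measure, the undirected edge representation, and the rule "add about `add_rate` times the number of original edges" are hypothetical and may differ from what GSLB actually does.

```python
import math
import random


def cosine(u, v):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def knn_edges(features, k):
    """Link every node to its k most similar other nodes; edges are undirected (i < j)."""
    edges = set()
    for i, fi in enumerate(features):
        scored = sorted(
            ((cosine(fi, fj), j) for j, fj in enumerate(features) if j != i),
            reverse=True,
        )
        for _, j in scored[:k]:
            edges.add((min(i, j), max(i, j)))
    return edges


def perturb_edges(edges, num_nodes, drop_rate, add_rate, rng):
    """Drop each edge with probability drop_rate, then add random non-edges.

    Assumption: the number of added edges is int(add_rate * len(edges)).
    """
    kept = {e for e in sorted(edges) if rng.random() >= drop_rate}
    candidates = [
        (i, j)
        for i in range(num_nodes)
        for j in range(i + 1, num_nodes)
        if (i, j) not in edges
    ]
    num_add = min(len(candidates), int(add_rate * len(edges)))
    kept.update(rng.sample(candidates, num_add))
    return kept


features = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
graph = knn_edges(features, k=1)
noisy = perturb_edges(graph, num_nodes=4, drop_rate=0.0, add_rate=0.5,
                      rng=random.Random(0))
```

Passing an explicit `random.Random` instance keeps the perturbation reproducible across repeated trials.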

Example: train GRCN on the Cora dataset, with accuracy as the evaluation metric.

python main.py --dataset cora --model GRCN --metric acc
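The metric names used in this README are `acc`, `macro-f1`, and `micro-f1`. The sketch below shows what these quantities compute on plain lists of integer labels. The `plain_*` functions are hypothetical stand-ins written for illustration; they are not the `accuracy`, `macro_f1`, and `micro_f1` functions from `GSL.utils`, whose signatures may differ.

```python
def plain_accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def _counts(y_true, y_pred, label):
    """True positives, false positives, and false negatives for one class."""
    tp = sum(t == label and p == label for t, p in zip(y_true, y_pred))
    fp = sum(t != label and p == label for t, p in zip(y_true, y_pred))
    fn = sum(t == label and p != label for t, p in zip(y_true, y_pred))
    return tp, fp, fn


def _f1(tp, fp, fn):
    """F1 = 2TP / (2TP + FP + FN), defined as 0.0 when the denominator is zero."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def plain_macro_f1(y_true, y_pred):
    """Unweighted mean of the per-class F1 scores."""
    labels = sorted(set(y_true) | set(y_pred))
    return sum(_f1(*_counts(y_true, y_pred, c)) for c in labels) / len(labels)


def plain_micro_f1(y_true, y_pred):
    """F1 computed from counts pooled over all classes."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = (sum(x) for x in zip(*(_counts(y_true, y_pred, c) for c in labels)))
    return _f1(tp, fp, fn)


y_true = [0, 0, 1, 1, 2]
y_pred = [0, 1, 1, 1, 2]
scores = {
    "acc": plain_accuracy(y_true, y_pred),
    "macro-f1": plain_macro_f1(y_true, y_pred),
    "micro-f1": plain_micro_f1(y_true, y_pred),
}
```

For single-label classification, micro-F1 equals accuracy, so macro-F1 is usually the more informative choice when classes are imbalanced.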

If you want to quickly generate a perturbed graph with Mettack:

python generate_attack.py --dataset cora --ptb_rate 0.05
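A perturbation rate is commonly read as a fraction of the graph's edges that the attacker may flip. The sketch below computes such a budget for a toy graph. Whether `generate_attack.py` derives its budget exactly this way is an assumption, and both helper names are hypothetical.

```python
def count_undirected_edges(adj):
    """Count edges in a symmetric 0/1 adjacency matrix without self-loops."""
    return sum(sum(row) for row in adj) // 2


def perturbation_budget(num_edges, ptb_rate):
    """Number of edge flips allowed: the perturbation rate times the edge count."""
    return int(ptb_rate * num_edges)


# Toy graph: a ring of 40 nodes, which has exactly 40 undirected edges.
n = 40
adj = [[0] * n for _ in range(n)]
for i in range(n):
    j = (i + 1) % n
    adj[i][j] = adj[j][i] = 1

budget = perturbation_budget(count_undirected_edges(adj), ptb_rate=0.05)
```

Under this reading, `--ptb_rate 0.05` on the 40-edge toy graph allows two edge flips.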

Step 1: Load datasets

from GSL.data import *

# load a homophilic or heterophilic graph dataset
data = Dataset(root='/tmp/', name='cora')

# load a perturbed graph dataset
data = Dataset(root='/tmp/', name='cora', use_mettack=True, ptb_rate=0.05)

# load a heterogeneous graph dataset
data = HeteroDataset(root='/tmp/', name='acm')

# load a graph-level dataset
data = GraphDataset(root='/tmp/', name='IMDB-BINARY', model='GCN')

Step 2: Initialize the GSL model

import torch

from GSL.model import *
from GSL.utils import accuracy, macro_f1, micro_f1

model_name = 'GRCN'
dataset_name = 'cora'  # assumed here: the name of the dataset loaded in Step 1
metric = 'acc'
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

# the hyper-parameters are recorded in config
config_path = './configs/{}_config.yaml'.format(model_name.lower())

# select an evaluation metric
eval_metric = {
    'acc': accuracy,
    'macro-f1': macro_f1,
    'micro-f1': micro_f1
}[metric]

model = GRCN(data.num_feat, data.num_class, eval_metric,
             config_path, dataset_name, device)

Step 3: Train GSL model

model.fit(data)

Currently, we have implemented the following GSL algorithms:

If you find this work useful, feel free to cite it:

@article{li2023gslb,
  title={GSLB: The Graph Structure Learning Benchmark},
  author={Li, Zhixun and Wang, Liang and Sun, Xin and Luo, Yifan and Zhu, Yanqiao and Chen, Dingshuo and Luo, Yingtao and Zhou, Xiangxin and Liu, Qiang and Wu, Shu and others},
  journal={arXiv preprint arXiv:2310.05174},
  year={2023}
}