GIMM-VFI performs generalizable continuous motion modeling and interpolates between two adjacent video frames at arbitrary timesteps.

📖 For more visual results of GIMM-VFI, check out our project page.

- 2024.11.18: Training code is released! We have also resolved an issue with DS_SCALE, which should be a float between 0 and 1 for high-resolution interpolation, such as for 2K and 4K frames.

- 2024.11.08: The ComfyUI version of GIMM-VFI is now available in the ComfyUI-GIMM-VFI repository, thanks to the dedicated efforts of @kijai :)

- 2024.11.06: Test codes and model checkpoints are publicly available now. A perceptually enhanced version of GIMM-VFI is also released along with this update.

- PyTorch 1.13.0

- CUDA 11.6

- CuPy

```bash
# git clone this repository
git clone https://github.com/GSeanCDAT/GIMM-VFI
cd GIMM-VFI

# create new conda env
conda create -n gimmvfi python=3.7 -y
conda activate gimmvfi

# install other python dependencies
pip install -r requirements.txt
```
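Before running anything, it can help to confirm that the interpreter sees the core dependencies. The snippet below is a minimal sketch, not part of this repo: `check_environment` is a hypothetical helper, and it only checks that `torch` and `cupy` (CuPy's import name) are importable, not that they match the versions listed above (PyTorch 1.13.0, CUDA 11.6).

```python
import importlib.util
import sys

# Import names of the listed requirements; "cupy" is how CuPy is imported.
REQUIRED_MODULES = ("torch", "cupy")


def check_environment(modules=REQUIRED_MODULES):
    """Report the Python version and whether each module can be found.

    importlib.util.find_spec only locates a module without importing it,
    so no GPU is touched; a missing module simply maps to False.
    """
    report = {"python": "%d.%d" % sys.version_info[:2]}
    for name in modules:
        report[name] = importlib.util.find_spec(name) is not None
    return report


if __name__ == "__main__":
    for key, value in check_environment().items():
        print("{}: {}".format(key, value))
```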

GIMM-VFI can be implemented with different flow estimators. As described in our paper, we provide RAFT-based GIMM-VFI-R and FlowFormer-based GIMM-VFI-F in this repo.

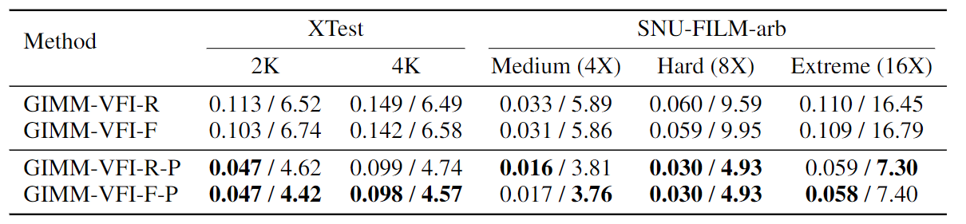

Additionally, we release a perceptually enhanced version of GIMM-VFI that incorporates an additional learning objective, the LPIPS loss, during training. Denoted as GIMM-VFI-R-P and GIMM-VFI-F-P, these enhanced variants achieve substantial improvements in perceptual interpolation quality.
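Schematically, the -P variants can be read as adding a weighted LPIPS term between the interpolated frame $\hat{I}_t$ and the ground-truth frame $I_t$ at timestep $t$. This is only an assumption about how the extra objective is combined: $\mathcal{L}_{\text{base}}$ (the objective of the non-perceptual variants) and the weight $\lambda$ are placeholder symbols, and the exact losses and weighting are defined in the paper and training configs, not here.

```math
\mathcal{L}_{\text{P}} = \mathcal{L}_{\text{base}} + \lambda \, \mathcal{L}_{\text{LPIPS}}\big(\hat{I}_t, I_t\big), \qquad \lambda > 0
```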

All model checkpoints can be downloaded from this link. After downloading, please put them into the ./pretrained_ckpt folder.
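A quick way to confirm the files landed in the right place is a small listing helper. This is a hypothetical sketch; the `.pt`/`.pth` suffixes are an assumption (the training commands reference `pretrained_ckpt/gimm.pt`), so adjust them to match the downloaded files.

```python
from pathlib import Path


def list_checkpoints(ckpt_dir="./pretrained_ckpt", suffixes=(".pt", ".pth")):
    """Return the sorted names of checkpoint-like files in ckpt_dir.

    Raises FileNotFoundError if the folder does not exist, which usually
    means the command is not being run from the repository root.
    """
    root = Path(ckpt_dir)
    if not root.is_dir():
        raise FileNotFoundError("checkpoint folder not found: {}".format(root))
    return sorted(p.name for p in root.iterdir() if p.is_file() and p.suffix in suffixes)


if __name__ == "__main__":
    print(list_checkpoints())
```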

Interpolation demos can be created with the following command:

```bash
sh scripts/video_Nx.sh YOUR_PATH_TO_FRAME YOUR_OUTPUT_PATH DS_SCALE N_INTERP
```

The model variant by default is GIMM-VFI-R-P. You can change the model variant in scripts/video_Nx.sh.

Here is an example of 9X interpolation:

```bash
sh scripts/video_Nx.sh demo/input_frames demo/output 1 9
```
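Since GIMM-VFI interpolates at arbitrary timesteps, an NX run amounts to querying the model at several timesteps between each pair of input frames. The sketch below assumes, without confirmation from this README, that N_INTERP = N splits each pair into N equal intervals (N - 1 new frames at t = k/N) and that every input frame is kept; `interp_timesteps` and `output_frame_count` are hypothetical helpers, so check scripts/video_Nx.sh for the convention it actually uses.

```python
from fractions import Fraction


def interp_timesteps(n_interp):
    """Intermediate timesteps in (0, 1) for one frame pair, assuming N equal intervals."""
    if n_interp < 1:
        raise ValueError("n_interp must be >= 1")
    # Fractions keep 1/9, 2/9, ... exact; convert with float() before feeding a model.
    return [Fraction(k, n_interp) for k in range(1, n_interp)]


def output_frame_count(num_input_frames, n_interp):
    """Total frames after NX interpolation, keeping all original frames."""
    if num_input_frames < 2:
        return num_input_frames
    return (num_input_frames - 1) * n_interp + 1
```

Under that assumption, the 9X demo would query t = 1/9, ..., 8/9 for each pair, and 3 input frames would become (3 - 1) * 9 + 1 = 19 output frames.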

The expected interpolation output:

DS_SCALE is the downsampling scale factor, ranging from 0 to 1, and can be adjusted for high-resolution interpolation. For instance, you can perform 8X interpolation on 2K frames with the following command:

```bash
sh scripts/video_Nx.sh demo/2K_input_frames demo/2K_output 0.5 8
```
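To get a feel for what DS_SCALE changes, the sketch below computes the resolution obtained by scaling a frame by DS_SCALE. It assumes, as a reading of the description above rather than a documented detail, that this reduced resolution is where the expensive motion estimation happens while the output keeps the input resolution; `scaled_size` is a hypothetical helper, and 2048×1080 is used as an example 2K size.

```python
def scaled_size(width, height, ds_scale):
    """Return (width, height) after scaling by DS_SCALE, which must lie in (0, 1]."""
    if not 0 < ds_scale <= 1:
        raise ValueError("DS_SCALE must be in (0, 1]")
    return round(width * ds_scale), round(height * ds_scale)


if __name__ == "__main__":
    print(scaled_size(2048, 1080, 0.5))   # 2K frame at DS_SCALE 0.5
    print(scaled_size(3840, 2160, 0.25))  # 4K UHD frame at DS_SCALE 0.25
```

For example, a 2048×1080 frame at DS_SCALE 0.5 becomes 1024×540, and a 3840×2160 frame at 0.25 becomes 960×540, which suggests why both settings stay within a similar memory range.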

The expected interpolation output can be found here.

In practice, we tested GIMM-VFI on 2K and 4K frames for 8X interpolation on NVIDIA V100 GPUs. Our settings and the corresponding memory usage are listed below:

| Setting | DS_SCALE | Memory usage |
|---|---|---|
| 2K interpolation | 0.5 | 7932 MiB |
| 4K interpolation | 0.25 | 10922 MiB |
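The two reported configurations can serve as a rough starting point for choosing DS_SCALE. This is a hypothetical heuristic built only from the table above: the width thresholds (3840 for 4K, 2048 for 2K) and the fallback of 1.0 for smaller frames (as in the 9X demo) are assumptions, and actual memory use will depend on your GPU, frame size and N_INTERP.

```python
# Settings reported above for 8X interpolation on an NVIDIA V100.
REPORTED_SETTINGS = {
    "2K": {"ds_scale": 0.5, "memory_mib": 7932},
    "4K": {"ds_scale": 0.25, "memory_mib": 10922},
}


def suggest_ds_scale(width):
    """Pick a starting DS_SCALE from the frame width (hypothetical heuristic)."""
    if width >= 3840:
        return REPORTED_SETTINGS["4K"]["ds_scale"]
    if width >= 2048:
        return REPORTED_SETTINGS["2K"]["ds_scale"]
    # Smaller frames: no downsampling, matching the 9X demo command.
    return 1.0
```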

- Download the Vimeo90K, SNU-FILM and X4K1000FPS datasets.

- Obtain the motion modeling benchmark datasets, Vimeo-Triplet-Flow (VTF) and Vimeo-Septuplet-Flow (VSF), by extracting optical flows from the Vimeo90K triplet and septuplet test sets using FlowFormer.

The file structure should look like this:

```
├── data
│   ├── SNU-FILM
│   │   ├── test
│   │   ├── test-easy.txt
│   │   ├── test-medium.txt
│   │   ├── test-hard.txt
│   │   └── test-extreme.txt
│   ├── x4k
│   │   └── test
│   │       ├── Type1
│   │       ├── Type2
│   │       └── Type3
│   └── vimeo90k
│       ├── vimeo_septuplet
│       │   ├── sequences
│       │   └── flow_sequences
│       └── vimeo_triplet
│           ├── sequences
│           └── flow_sequences
```
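Since the benchmark scripts expect this layout, a small check before running them can save a failed run. The sketch below is hypothetical (not part of the repo): the path list is transcribed from the tree above, including the nesting of Type1 to Type3 under x4k/test, and `missing_paths` only checks that each entry exists, not its contents.

```python
from pathlib import Path

# Expected entries under the data root, transcribed from the tree above.
EXPECTED_PATHS = [
    "SNU-FILM/test",
    "SNU-FILM/test-easy.txt",
    "SNU-FILM/test-medium.txt",
    "SNU-FILM/test-hard.txt",
    "SNU-FILM/test-extreme.txt",
    "x4k/test/Type1",
    "x4k/test/Type2",
    "x4k/test/Type3",
    "vimeo90k/vimeo_septuplet/sequences",
    "vimeo90k/vimeo_septuplet/flow_sequences",
    "vimeo90k/vimeo_triplet/sequences",
    "vimeo90k/vimeo_triplet/flow_sequences",
]


def missing_paths(data_root="./data"):
    """Return the expected entries that do not exist under data_root."""
    root = Path(data_root)
    return [rel for rel in EXPECTED_PATHS if not (root / rel).exists()]


if __name__ == "__main__":
    missing = missing_paths()
    print("layout OK" if not missing else "missing:\n" + "\n".join(missing))
```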

On the VTF benchmark:

```bash
sh scripts/bm_VTF.sh
```

On the VSF benchmark:

```bash
sh scripts/bm_VSF.sh
```

On the SNU-FILM-arb benchmark:

```bash
sh scripts/bm_SNU_FILM_arb.sh
```

On the X4K benchmark:

```bash
sh scripts/bm_X4K.sh
```

The model variants can be changed inside the shell scripts.
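To run all four benchmarks in one go, a thin wrapper over the scripts above is enough. This is a hypothetical sketch: it only invokes the listed scripts with `sh`, assumes it is launched from the repository root, and defaults to a dry run that prints the commands without executing them.

```python
import subprocess

# Benchmark name -> script, as listed above.
BENCHMARK_SCRIPTS = {
    "VTF": "scripts/bm_VTF.sh",
    "VSF": "scripts/bm_VSF.sh",
    "SNU-FILM-arb": "scripts/bm_SNU_FILM_arb.sh",
    "X4K": "scripts/bm_X4K.sh",
}


def benchmark_commands(names=None):
    """Return one argv list per requested benchmark (all four by default)."""
    selected = list(BENCHMARK_SCRIPTS) if names is None else list(names)
    return [["sh", BENCHMARK_SCRIPTS[name]] for name in selected]


def run_benchmarks(names=None, dry_run=True):
    """Print each command; execute it only when dry_run is False."""
    for cmd in benchmark_commands(names):
        print(" ".join(cmd))
        if not dry_run:
            # check=True stops at the first script that exits with an error.
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_benchmarks()
```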

The general command for training is:

```bash
sh scripts/train.sh YOUR_CONFIG OUTPUT_DIR PRETRAINED_CKPT NUM_GPU
```

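Specifically, you can train GIMM and GIMM-VFI with the commands below.

```bash
# GIMM: no pretrained checkpoint (''), 2 GPUs
sh scripts/train.sh configs/gimm/gimm.yaml ./work_dirs '' 2

# GIMM-VFI-R (RAFT-based), initialized from the GIMM checkpoint, 8 GPUs
sh scripts/train.sh configs/gimmvfi/gimmvfi_r_arb.yaml ./work_dirs pretrained_ckpt/gimm.pt 8

# GIMM-VFI-F (FlowFormer-based), initialized from the GIMM checkpoint, 8 GPUs
sh scripts/train.sh configs/gimmvfi/gimmvfi_f_arb.yaml ./work_dirs pretrained_ckpt/gimm.pt 8
```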
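Because train.sh takes four positional arguments, dropping one silently shifts the rest (a checkpoint path would be read as OUTPUT_DIR). The hypothetical helper below builds the argument list in the documented order CONFIG OUTPUT_DIR PRETRAINED_CKPT NUM_GPU and quotes it for the shell. Passing an empty string for PRETRAINED_CKPT mirrors the GIMM example above and is assumed to mean "no checkpoint".

```python
import shlex


def train_command(config, output_dir, pretrained_ckpt, num_gpu):
    """Return argv for scripts/train.sh: CONFIG OUTPUT_DIR PRETRAINED_CKPT NUM_GPU."""
    if int(num_gpu) < 1:
        raise ValueError("num_gpu must be a positive integer")
    return ["sh", "scripts/train.sh", config, output_dir, pretrained_ckpt, str(num_gpu)]


def format_command(argv):
    """Shell-quote each argument (shlex.join needs Python 3.8; the env above uses 3.7)."""
    return " ".join(shlex.quote(arg) for arg in argv)


if __name__ == "__main__":
    # GIMM stage: empty checkpoint argument, 2 GPUs.
    print(format_command(train_command("configs/gimm/gimm.yaml", "./work_dirs", "", 2)))
```

The empty checkpoint is rendered as '' by shlex.quote, reproducing the GIMM command exactly.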

If you find our work interesting or helpful, please leave a star or cite our paper.

```bibtex
@inproceedings{guo2024generalizable,
  title={Generalizable Implicit Motion Modeling for Video Frame Interpolation},
  author={Guo, Zujin and Li, Wei and Loy, Chen Change},
  booktitle={Advances in Neural Information Processing Systems},
  year={2024}
}
```

The code is based on GINR-IPC and draws inspiration from several other outstanding works including RAFT, FlowFormer, AMT, softmax-splatting, EMA-VFI, MoTIF and LDMVFI.