Over a period of nine years in deep space, the NASA Kepler space telescope has been out on a planet-hunting mission to discover hidden planets outside of our solar system.

To help process this data, I created some machine learning models capable of classifying candidate exoplanets from the raw dataset.

In this homework assignment, I did the following:

- Preprocessed the dataset prior to fitting the model.
  - Used `MinMaxScaler` to scale the numerical data.
  - Separated the data into training and testing data.
- Used `GridSearchCV` to tune model parameters.
- Tuned and compared two different classifiers.
- Models used were:
  - `Logistic Regression (LR)`
  - `Random Forest Classifier (RFC)`
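The preprocessing steps listed above can be sketched roughly as follows. This is a minimal illustration, not the notebook code: it uses a synthetic dataset from `make_classification` as a stand-in for the exoplanet table, and the split ratio and random seeds are assumptions. It also splits before scaling so that the scaler is fitted on the training rows only, which is one common way to order these two steps.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler

# Synthetic stand-in for the exoplanet table (assumption: the real
# project would load its features and labels from the CSV file).
X, y = make_classification(
    n_samples=500, n_features=10, n_informative=5, random_state=42
)

# Separate the data into training and testing sets.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

# Fit the scaler on the training data only, then apply it to both sets.
scaler = MinMaxScaler().fit(X_train)
X_train_scaled = scaler.transform(X_train)
X_test_scaled = scaler.transform(X_test)

print(X_train_scaled.shape, X_test_scaled.shape)
```

After scaling, every training column lies in the range 0 to 1; test values can fall slightly outside that range because the scaler never saw them.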

- Models Design:

- Imported my dependencies as well as loaded the expoplanet_data.csv file.

- Build both models using all 41 features

- Instead of deleting columns a priori, I used the base model to evaluate feature importance, and filter the data to include relevant features only.

- I build a second model by selecting the features model and using the filtered data. *Tuned the model parameters using GridSearchCV.

- Build the final model using the tuned parameters.

- Evaluated both models and extracted, as csv and sav files, both Accuracy Report Data Frames.

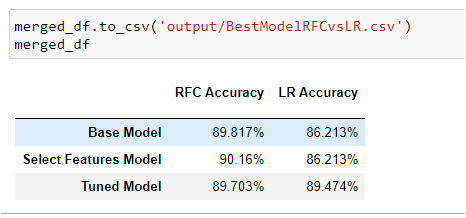

- Performed and merged both Accuracy Report Data Frames as a First Glance Comparison Report.
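The design steps above (base model, importance-based filtering, tuning, final model) might look roughly like this for the Random Forest case. It is a sketch under stated assumptions: the data is synthetic, the "above mean importance" cutoff is one possible filtering rule rather than the one used in the notebooks, and the parameter grid values are illustrative.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV, train_test_split

# Synthetic stand-in data (assumption: not the exoplanet dataset).
X, y = make_classification(
    n_samples=400, n_features=20, n_informative=5, random_state=0
)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=0
)

# Step 1: base model trained on all features.
base_model = RandomForestClassifier(n_estimators=50, random_state=0)
base_model.fit(X_train, y_train)
base_score = base_model.score(X_test, y_test)

# Step 2: keep only features whose importance is above the mean
# importance (hypothetical cutoff chosen for this sketch).
importances = base_model.feature_importances_
mask = importances > importances.mean()
X_train_sel = X_train[:, mask]
X_test_sel = X_test[:, mask]

# Step 3: tune parameters on the filtered data (illustrative grid).
param_grid = {"n_estimators": [50, 100], "max_depth": [None, 5]}
search = GridSearchCV(RandomForestClassifier(random_state=0), param_grid, cv=3)
search.fit(X_train_sel, y_train)

# Step 4: GridSearchCV refits the best parameter set on the full
# training data by default, so best_estimator_ is the final model.
final_model = search.best_estimator_
final_score = final_model.score(X_test_sel, y_test)

print("features kept:", int(mask.sum()), "of", X.shape[1])
print("base accuracy:", round(base_score, 3))
print("tuned accuracy:", round(final_score, 3))
```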

- Models Comparison and Results:
  - At first glance, the Comparison Report shows that `Random Forest Classifier (RFC)` is more accurate than `Logistic Regression (LR)`, though only by a small margin.
  - We can also see that the `Tuned Model`, which applies Grid Search CV, further refines the accuracy.
  - Finally, feature selection appears to be more effective for Random Forest than for the Logistic Regression model.
  - Conclusions: Given the relatively high accuracy of the RFC model, I believe it to be a reasonable predictor of exoplanet candidacy. However, a model leveraging deep learning techniques might prove superior.
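For readers who want to reproduce a side-by-side comparison like the one discussed above, here is one possible way to build per-model accuracy data frames and merge them with pandas. The column names, the `stage` key, and the `accuracy_report` helper are hypothetical, and the data is synthetic, so the printed numbers say nothing about the actual exoplanet results.

```python
import pandas as pd
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split

# Synthetic stand-in data (assumption: not the exoplanet dataset).
X, y = make_classification(n_samples=400, n_features=10, random_state=1)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, stratify=y, random_state=1
)


def accuracy_report(name, model):
    """Fit a model and return a one-row frame of train/test accuracy.

    Hypothetical helper: the layout is chosen for this sketch only.
    """
    model.fit(X_train, y_train)
    return pd.DataFrame(
        {
            "stage": ["base"],
            f"{name}_train_acc": [model.score(X_train, y_train)],
            f"{name}_test_acc": [model.score(X_test, y_test)],
        }
    )


lr_report = accuracy_report("LR", LogisticRegression(max_iter=1000))
rfc_report = accuracy_report("RFC", RandomForestClassifier(random_state=1))

# Merge on the shared key so each row compares the same stage.
comparison = lr_report.merge(rfc_report, on="stage")
print(comparison.round(3))
```

Adding rows for the feature-selected and tuned stages to each report before merging would extend the same table to all model versions.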

- Started by cleaning the data, filtering features, and scaling the data.
- Tried a simple model first, and then tuned the model using `GridSearchCV`.
- When hyper-parameter tuning, some models have parameters that depend on each other, and certain combinations will not create a valid model.
- Worked on both models and my Comparison Report in separate Jupyter notebooks, in order to avoid coding confusion.
- My Jupyter Notebooks for each model are hosted on GitHub.
- Created a file for my best model and pushed it to GitHub.
- Included a README.md file that summarizes my assumptions and findings.
- Submitted the link to my GitHub project to Bootcamp Spot.
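The note above about interdependent hyper-parameters can be illustrated with a small sketch. In scikit-learn's `RandomForestClassifier`, `max_samples` may only be set when `bootstrap=True`, so passing `GridSearchCV` a list of dictionaries keeps the invalid combination out of the search. The grid values, the synthetic data, and the `best_model.sav` file name are assumptions made for this example; saving with `joblib` is one common option, not necessarily the one used in the project.

```python
import os
import tempfile

import joblib
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import GridSearchCV

# Synthetic stand-in data (assumption: not the exoplanet dataset).
X, y = make_classification(n_samples=300, n_features=8, random_state=7)

# Each dict is searched separately, so max_samples is only ever
# combined with bootstrap=True (setting it with bootstrap=False
# raises a ValueError at fit time).
param_grid = [
    {"bootstrap": [True], "max_samples": [0.5, 0.8], "n_estimators": [25, 50]},
    {"bootstrap": [False], "n_estimators": [25, 50]},
]
search = GridSearchCV(RandomForestClassifier(random_state=7), param_grid, cv=3)
search.fit(X, y)

# 4 candidates from the first dict plus 2 from the second.
n_candidates = len(search.cv_results_["params"])

# Persist the best model to a file (hypothetical file name).
model_path = os.path.join(tempfile.mkdtemp(), "best_model.sav")
joblib.dump(search.best_estimator_, model_path)
reloaded = joblib.load(model_path)

print("candidates tried:", n_candidates)
print("best parameters:", search.best_params_)
```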