This project aims to develop an automatic technique to detect and analyse picture-related bias in online news articles, by integrating various machine learning models into the methodology. Picture-related bias refers to the deviation from objective reporting in media, where journalists use images to influence the perception of an event or issue.

The project uses a dataset of real online news articles scraped from six Maltese newspapers: Times of Malta, The Shift, Malta Today, The Malta Independent, Malta Daily, and Gozo News. The dataset contains information such as article title, caption, body, image URL, and publication date.

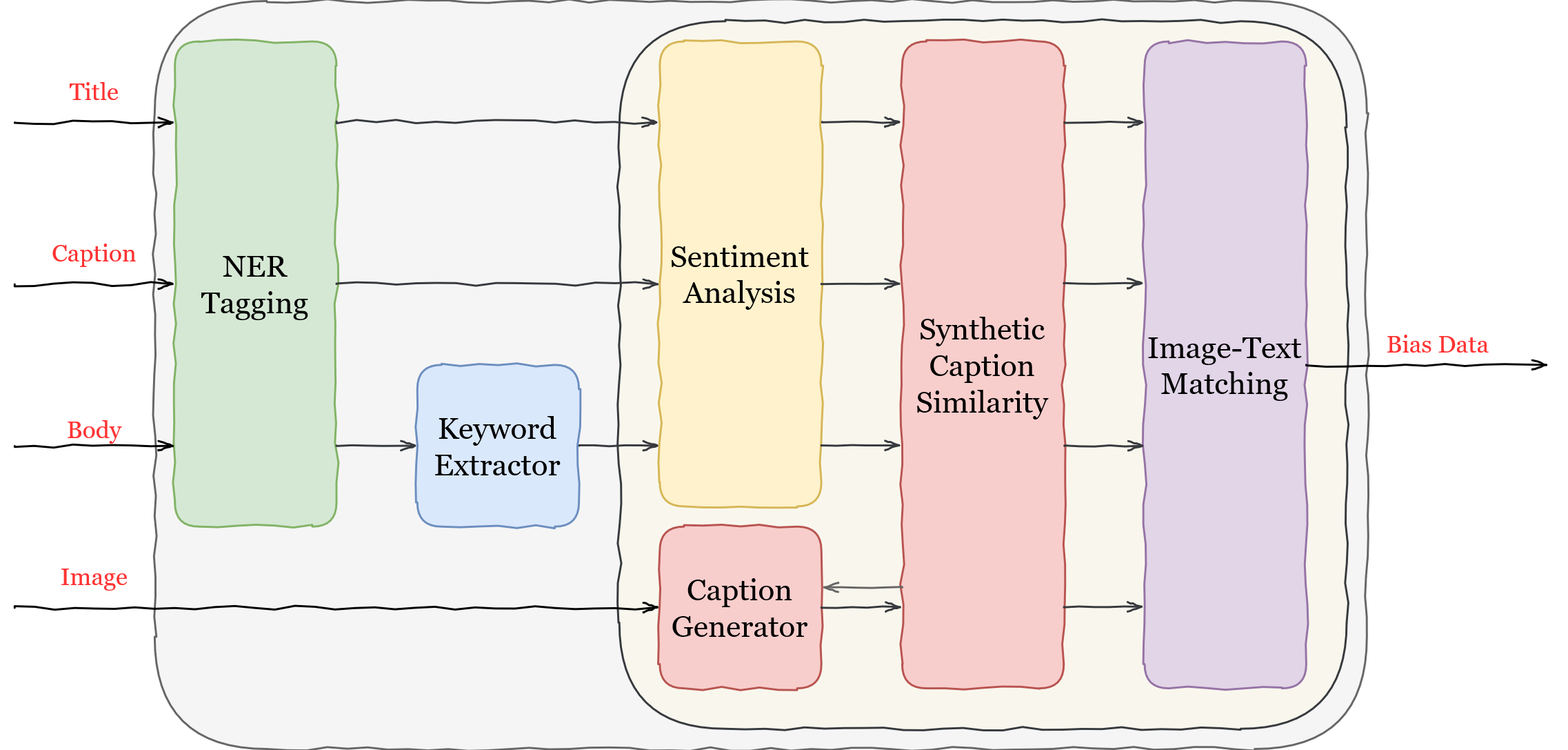

The project employs a machine-learning data-transformation pipeline to extract bias-indicative features from the news article dataset. The pipeline consists of the following steps:

- Named Entity Recognition (NER) Tagging: Identifies named entities such as persons, organisations, and locations in the article text using flair.

- Keyword Extraction: Extracts the most important topic words in the article text using KeyBERT.

- Sentiment Analysis: Computes the sentiment score of the article text using NewsMTSC.

- Caption Generation: Generates a synthetic caption for the article image using BLIP, a state-of-the-art Vision-Language Pre-training (VLP) model that incorporates both image and text in a multi-modal fashion. The `BLIP-1` branch contains the deprecated BLIP implementation, while the `main` branch contains the updated BLIP-2 version.

- Synthetic Caption Similarity: Computes the cosine similarity between the synthetic caption and the article text using all-MiniLM-L6-v2, a transformer-based model for text similarity.

- Image-Text Matching: Computes the image-text matching score between the article image and the article text using BLIP.
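
The Synthetic Caption Similarity step reduces to a cosine similarity between two embedding vectors. The following is a minimal sketch of that calculation only, assuming the embeddings have already been computed (in the project they would come from all-MiniLM-L6-v2). The helper name `cosine_similarity` and the short vectors are illustrative placeholders, not the project's code or real model output.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # Convention chosen for this sketch: an all-zero vector matches nothing.
        return 0.0
    return dot / (norm_a * norm_b)


# Placeholder embeddings (hypothetical values, not real model output).
caption_vec = [0.2, 0.1, 0.7]
title_vec = [0.1, 0.0, 0.9]

score = cosine_similarity(caption_vec, title_vec)
print(f"similarity: {score:.2%}")
```

Multiplying the score by 100 gives a percentage, the same form in which the similarity results are reported.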

The project evaluates the results of the data-transformation pipeline to investigate potential picture-related bias in the online news articles. The evaluation consists of the following analyses:

| Analysis | Description |
|---|---|
| Article Similarity to Synthetic Captions | Compares the similarity between the article title, caption, and body to the synthetic caption generated by BLIP, and visualises the results using maxdiff barcharts and scatter plots. |
| Article Similarity to Images | Compares the similarity between the article title, caption, and body to the article image, and visualises the results using barcharts and density plots. |
| Image-Text Similarity against Article Sentiment | Examines the relationship between the image-text similarity score and the article sentiment score, and visualises the results using stacked barcharts. |
| Application in Media Bias Research | Demonstrates how the proposed technique can be used to fact-check older studies, as well as to alleviate some of the manual work performed by researchers in picture-related media bias studies. |
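
The third analysis relates image-text similarity to article sentiment. One possible way to prepare the data behind a stacked barchart is to bin the similarity scores and count sentiment labels per bin. The sketch below assumes scores in the range 0 to 1 and three sentiment labels. The function name, the bin width, and the sample records are all hypothetical and are not taken from the project.

```python
from collections import Counter, defaultdict


def sentiment_by_similarity_bin(records, bin_width=0.25):
    """Count sentiment labels within equal-width bins of a [0, 1] similarity score.

    records: iterable of (similarity, sentiment_label) pairs.
    Returns {bin_index: {sentiment_label: count}} ordered by bin index.
    """
    n_bins = round(1 / bin_width)
    counts = defaultdict(Counter)
    for similarity, sentiment in records:
        # Clamp so that a score of exactly 1.0 falls in the last bin.
        index = min(int(similarity / bin_width), n_bins - 1)
        counts[index][sentiment] += 1
    return {i: dict(counts[i]) for i in sorted(counts)}


# Hypothetical per-article records: (image-text similarity, sentiment label).
sample = [
    (0.10, "neutral"),
    (0.20, "negative"),
    (0.60, "positive"),
    (0.65, "neutral"),
    (1.00, "positive"),
]

stacks = sentiment_by_similarity_bin(sample)
for index, labels in stacks.items():
    print(index, labels)
```

Each bin then becomes one bar, and the per-label counts become its stacked segments.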

The table below gives the aggregated mean results of the demonstration for each newspaper. All values are percentages. ∗ denotes that caption metrics were not included when calculating the mean.

| Newspaper | Synthetic Caption Similarity | Image Similarity | Positive Sent. | Neutral Sent. | Negative Sent. |
|---|---|---|---|---|---|
| Times of Malta | 15.99 | 30.99 | 13.87 | 65.55 | 20.58 |
| The Shift | 9.27 | 20.78 | 11.71 | 62.68 | 25.60 |
| Malta Today | 10.80 | 24.15 | 16.79 | 67.03 | 16.18 |
| Malta Independent∗ | 10.96 | 29.92 | 21.27 | 62.56 | 16.17 |
| Malta Daily∗ | 13.46 | 50.79 | 28.81 | 58.72 | 12.47 |
| Gozo News∗ | 13.58 | 38.76 | 25.17 | 69.46 | 5.37 |
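
A per-newspaper summary of this shape can be produced by grouping per-article scores and averaging them. The sketch below is one way to do that, not the project's actual aggregation code. It assumes that each sentiment column is the share of articles with that label, which the source does not state explicitly. The function name `aggregate`, the output keys, and the sample rows are hypothetical.

```python
from collections import defaultdict
from statistics import mean

SENTIMENTS = ("positive", "neutral", "negative")


def aggregate(rows):
    """Summarise per-article rows as per-newspaper percentages.

    rows: iterable of (newspaper, similarity in [0, 1], sentiment label).
    """
    grouped = defaultdict(list)
    for newspaper, similarity, sentiment in rows:
        grouped[newspaper].append((similarity, sentiment))

    summary = {}
    for newspaper, items in grouped.items():
        labels = [label for _, label in items]
        entry = {"mean_similarity_pct": round(100 * mean(s for s, _ in items), 2)}
        for label in SENTIMENTS:
            # Assumption: sentiment columns are shares of articles per label.
            entry[f"{label}_pct"] = round(100 * labels.count(label) / len(labels), 2)
        summary[newspaper] = entry
    return summary


# Hypothetical rows, not taken from the project's dataset.
rows = [
    ("Paper A", 0.10, "neutral"),
    ("Paper A", 0.20, "negative"),
    ("Paper A", 0.30, "neutral"),
    ("Paper A", 0.40, "positive"),
    ("Paper B", 0.50, "positive"),
]

summary = aggregate(rows)
for newspaper, entry in summary.items():
    print(newspaper, entry)
```

Under this assumption the three sentiment percentages for a newspaper sum to 100, up to rounding, which matches the rows of the table.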

The project demonstrates a novel machine-learning technique to investigate picture-related bias in online news articles, by utilising various state-of-the-art models and methods. The project shows promising results for the adoption of this technique by media bias researchers, as well as for the empowerment of journalists and news readers in analysing the potential presence of picture-related bias. The project also suggests some future improvements and extensions for the technique.

gabriel.hili@um.edu.mt