[Ruixiao on 16th Feb 2022]

This is my fork, which I will mainly use to analyze the nuScenes-to-KITTI (nuScenes2KITTI) conversion code.

I will mainly focus on `convert/nusc2kitti.py` and `scripts/convert.py`.

This paper has been accepted by the Conference on Computer Vision and Pattern Recognition (CVPR) 2020.

Train in Germany, Test in The USA: Making 3D Object Detectors Generalize

by Yan Wang*, Xiangyu Chen*, Yurong You, Li Erran, Bharath Hariharan, Mark Campbell, Kilian Q. Weinberger, Wei-Lun Chao*

Prepare Datasets (Jupyter notebook)

We develop our method on these datasets:

- KITTI object detection 3D dataset

- Argoverse dataset v1.1

- nuScenes dataset v1.0

- Lyft Level 5 dataset v1.02

- Waymo dataset v1.0

- Configure `dataset_path` in `config_path.py`. Raw datasets will be organized in the following structure:
  - `dataset_path/`
    - `kitti/`: KITTI object detection 3D dataset
      - `training/`
      - `testing/`
    - `argo/`: Argoverse dataset v1.1
      - `train1/`
      - `train2/`
      - `train3/`
      - `train4/`
      - `val/`
      - `test/`
    - `nusc/`: nuScenes dataset v1.0
      - `maps/`
      - `samples/`
      - `sweeps/`
      - `v1.0-trainval/`
    - `lyft/`: Lyft Level 5 dataset v1.02
      - `v1.02-train/`
    - `waymo/`: Waymo dataset v1.0
      - `training/`
      - `validation/`
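Before downloading and converting, it can help to confirm that `dataset_path` matches the layout above. The helper below is a hypothetical sketch, not part of the repository: `EXPECTED_LAYOUT` simply transcribes the tree above, and `missing_dirs` is an illustrative name.

```python
from pathlib import Path
from typing import Dict, List

# Transcription of the expected raw-dataset tree (hypothetical helper, not repo code).
EXPECTED_LAYOUT: Dict[str, List[str]] = {
    "kitti": ["training", "testing"],
    "argo": ["train1", "train2", "train3", "train4", "val", "test"],
    "nusc": ["maps", "samples", "sweeps", "v1.0-trainval"],
    "lyft": ["v1.02-train"],
    "waymo": ["training", "validation"],
}


def missing_dirs(dataset_path: str, datasets: List[str]) -> List[str]:
    """Return '<dataset>/<subdir>' entries that are missing under dataset_path."""
    root = Path(dataset_path)
    missing = []
    for name in datasets:
        for sub in EXPECTED_LAYOUT[name]:
            if not (root / name / sub).is_dir():
                missing.append(f"{name}/{sub}")
    return missing
```

For example, `missing_dirs("/data/dataset_path", ["kitti", "nusc"])` (with a hypothetical path) returns an empty list once both datasets are in place, and otherwise names each missing folder.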

- Download all datasets. For KITTI, Argoverse, and Waymo, we provide scripts for automatic download: run `cd scripts/` and then `python download.py [--datasets kitti+argo+waymo]`.

- Convert all datasets to KITTI format: run `cd scripts/`, then `python -m pip install -r convert_requirements.txt`, and then `python convert.py [--datasets argo+nusc+lyft+waymo]`.
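Since this fork focuses on `convert/nusc2kitti.py`, here is a minimal sketch of the final step such a converter performs: writing one KITTI label line for a box that is already expressed in the camera frame. It is not the code from `convert/nusc2kitti.py`. It assumes the box center, the nuScenes-style `wlh` size, and a unit heading vector (the box's length axis) are given in KITTI camera coordinates (x right, y down, z forward); `kitti_label_line` and `wrap_angle` are hypothetical names. The 15 fields follow the KITTI object label format: type, truncated, occluded, alpha, 2D bbox (4), dimensions (h, w, l), location (bottom center x, y, z), and rotation_y.

```python
import math
from typing import Sequence


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians into [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi


def kitti_label_line(obj_type: str,
                     center_cam: Sequence[float],
                     wlh: Sequence[float],
                     heading_cam: Sequence[float],
                     bbox_2d: Sequence[float] = (0.0, 0.0, 0.0, 0.0),
                     truncated: float = 0.0,
                     occluded: int = 0) -> str:
    """Format one KITTI label line from a camera-frame box (sketch)."""
    x, y, z = center_cam
    w, l, h = wlh  # nuScenes orders box size as width, length, height
    # KITTI stores the bottom center of the box; camera y points down.
    y_bottom = y + h / 2.0
    # rotation_y is the yaw about the camera y axis; a heading along +x gives 0.
    rotation_y = wrap_angle(-math.atan2(heading_cam[2], heading_cam[0]))
    # Observation angle: yaw relative to the ray from the camera to the object.
    alpha = wrap_angle(rotation_y - math.atan2(x, z))
    fields = [obj_type, f"{truncated:.2f}", str(occluded), f"{alpha:.2f}"]
    fields += [f"{v:.2f}" for v in bbox_2d]
    fields += [f"{v:.2f}" for v in (h, w, l, x, y_bottom, z, rotation_y)]
    return " ".join(fields)
```

For instance, `kitti_label_line("Car", (0.0, 1.0, 10.0), (1.8, 4.5, 1.5), (0.0, 0.0, 1.0))` describes a car 10 m straight ahead whose length axis points along the camera's forward axis; its rotation_y and alpha fields both come out as `-1.57`. A real converter also has to project the 3D box into the image to fill the 2D bbox, which this sketch leaves as a caller-supplied argument.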

- Split the validation set. We provide the train/val split used in our experiments under the `split` folder: run `cd split/` and then `python replace_split.py`.

- Generate the `car` subset. We filter scenes and keep only those that contain cars: run `cd scripts/` and then `python gen_car_split.py`.
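The filtering idea can be sketched as follows. This assumes one KITTI-format label file `<frame_id>.txt` per frame and treats a frame as containing cars when at least one line has the object type `Car`; both the rule and the name `frames_with_cars` are assumptions for illustration, not necessarily what `gen_car_split.py` does.

```python
from pathlib import Path
from typing import List


def frames_with_cars(label_dir: str, class_name: str = "Car") -> List[str]:
    """Return sorted frame ids whose KITTI label file has at least one class_name object."""
    keep = []
    for label_file in sorted(Path(label_dir).glob("*.txt")):
        with label_file.open() as f:
            # The first whitespace-separated field of a KITTI label line is the type.
            if any(line.split()[:1] == [class_name] for line in f):
                keep.append(label_file.stem)
    return keep
```

The returned ids could then be written one per line, in the style of KITTI split files, with `"\n".join(ids)`.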

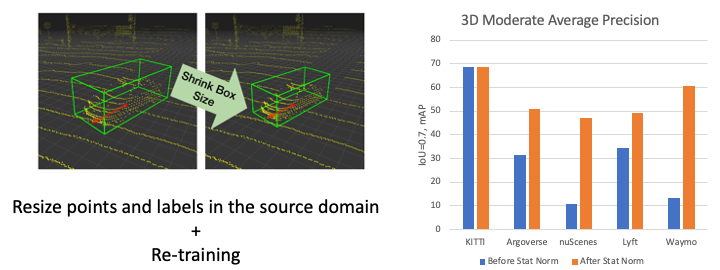

Statistical Normalization (Jupyter notebook)

- Compute car size statistics for each dataset. The computed statistics are stored as `label_stats_{train/val/test}.json` under the KITTI-format dataset root: run `cd stat_norm/` and then `python stat.py`.
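A minimal sketch of the kind of statistic involved: the mean height, width, and length of `Car` boxes, read from the 9th to 11th fields (h, w, l, in metres) of KITTI label lines. The helper name and the dictionary keys are hypothetical; the actual schema of `label_stats_{train/val/test}.json` is whatever `stat.py` writes.

```python
from statistics import mean
from typing import Iterable


def car_size_stats(label_lines: Iterable[str], class_name: str = "Car") -> dict:
    """Mean h/w/l of class_name boxes in KITTI label lines (hypothetical helper)."""
    hs, ws, ls = [], [], []
    for line in label_lines:
        fields = line.split()
        if fields and fields[0] == class_name:
            # Fields 8..10 (0-based) are the 3D dimensions h, w, l.
            h, w, l = map(float, fields[8:11])
            hs.append(h)
            ws.append(w)
            ls.append(l)
    if not hs:
        return {"count": 0}
    return {"count": len(hs), "mean_h": mean(hs), "mean_w": mean(ws), "mean_l": mean(ls)}
```

Passing the result to `json.dump` would give a small JSON object per split, though the repository's files may be organized differently.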

- Generate rescaled datasets according to the car size statistics. The rescaled datasets are stored under `$dataset_path/rescaled_datasets` by default: run `cd stat_norm/` and then `python norm.py [--path $PATH]`.
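The rescaling can be illustrated with the statistical normalization idea: shift each source-domain box size by Δ = μ_target − μ_source (the difference between target and source mean car sizes) and stretch the points inside the box by the same per-axis ratio, so the object's shape stays consistent with its new box. The NumPy sketch below makes simplifying assumptions that may differ from `norm.py`: points and boxes share a frame whose vertical axis is z, a box is given by its center, its (l, w, h) along its local (x, y, z) axes, and a yaw about z, and `rescale_box_points` is a hypothetical name.

```python
import numpy as np


def rescale_box_points(points, center, lwh, yaw, delta_lwh):
    """Resize one box by delta_lwh and stretch the points inside it accordingly.

    points: (N, 3) array; center: (3,); lwh: (3,) box length/width/height;
    yaw: rotation about z in radians; delta_lwh: (3,) target mean minus source mean.
    Returns (new_points, new_lwh).
    """
    points = np.asarray(points, dtype=float)
    center = np.asarray(center, dtype=float)
    lwh = np.asarray(lwh, dtype=float)
    new_lwh = lwh + np.asarray(delta_lwh, dtype=float)
    c, s = np.cos(yaw), np.sin(yaw)
    rot = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])  # box-to-world rotation
    local = (points - center) @ rot  # world -> box frame (row-vector form of R^T p)
    inside = np.all(np.abs(local) <= lwh / 2.0, axis=1)
    local[inside] *= new_lwh / lwh  # per-axis stretch about the box center
    new_points = points.copy()
    new_points[inside] = local[inside] @ rot.T + center  # box frame -> world
    return new_points, new_lwh
```

Only points inside the original box move; for example, growing a 4.0 × 2.0 × 1.5 m box by (0.4, 0.2, 0.15) m scales every interior point's offset from the center by 1.1 on each axis and leaves points outside the box untouched.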

We use PointRCNN to validate our method.

- Set up PointRCNN: run `cd pointrcnn/` and then `./build_and_install.sh`.

- Build datasets in PointRCNN format: run `cd pointrcnn/tools/`, then `python generate_multi_data.py`, and then `python generate_gt_database.py --root ...`. The nuScenes dataset has far fewer points in each bounding box, so we have to turn off the `GT_AUG_HARD_RATIO` augmentation.

- Download the models pretrained on source domains from Google Drive using `gdrive`: run `cd pointrcnn/tools/` and then `gdrive download -r 14MXjNImFoS2P7YprLNpSmFBsvxf5J2Kw`.

- Adapt to a new domain by re-training with rescaled data: run `cd pointrcnn/tools/` and then `python train_rcnn.py --cfg_file ...`.

- Evaluate the trained model: run `cd pointrcnn/tools/` and then `python eval_rcnn.py --ckpt /path/to/checkpoint.pth --dataset $dataset --output_dir $output_dir`.

We provide evaluation code with:

- old (based on bbox height) and new (based on distance) difficulty metrics

- output transformation functions to locate the domain gap
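The two kinds of difficulty metric can be sketched as below. The "old" levels use the standard KITTI thresholds (minimum 2D box height 40/25/25 px, maximum occlusion level 0/1/2, maximum truncation 0.15/0.30/0.50 for easy/moderate/hard). The distance bins of 0-30 m, 30-50 m, and 50-70 m and the use of horizontal range in the camera frame are assumptions for illustration, not read from `evaluate.py`, and both function names are hypothetical.

```python
import math
from typing import Optional

MIN_HEIGHT = (40.0, 25.0, 25.0)      # minimum 2D box height in pixels
MAX_OCCLUSION = (0, 1, 2)            # KITTI occlusion levels
MAX_TRUNCATION = (0.15, 0.30, 0.50)  # maximum truncated fraction
LEVELS = ("easy", "moderate", "hard")
DISTANCE_BINS = ((0.0, 30.0), (30.0, 50.0), (50.0, 70.0))  # metres (assumed)


def old_difficulty(bbox_height: float, occlusion: int, truncation: float) -> Optional[str]:
    """Easiest KITTI level whose thresholds the object meets, else None.

    KITTI evaluation is cumulative: an easy object also counts for moderate and hard.
    """
    for name, h, occ, trunc in zip(LEVELS, MIN_HEIGHT, MAX_OCCLUSION, MAX_TRUNCATION):
        if bbox_height >= h and occlusion <= occ and truncation <= trunc:
            return name
    return None


def distance_bin(x: float, z: float) -> Optional[str]:
    """Bin an object by horizontal range sqrt(x^2 + z^2) in the camera frame (assumed)."""
    r = math.hypot(x, z)
    for lo, hi in DISTANCE_BINS:
        if lo <= r < hi:
            return f"{lo:.0f}-{hi:.0f}m"
    return None
```

The contrast is that the old levels depend on how an object appears in the image, while the distance bins depend only on where it is, which makes the latter comparable across datasets with different cameras.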

Then run `cd evaluate/` and `python evaluate.py --result_path $predictions --dataset_path $dataset_root --metric [old/new]`.

    @inproceedings{wang2020train,
      title={Train in germany, test in the usa: Making 3d object detectors generalize},
      author={Yan Wang and Xiangyu Chen and Yurong You and Li Erran and Bharath Hariharan and Mark Campbell and Kilian Q. Weinberger and Wei-Lun Chao},
      booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},
      pages={11713-11723},
      year={2020}
    }