tpunicorn (or `pu` for short) is a Python library and command-line program for managing TPUs. For example, if you have a preemptible TPU named `foo`, then `pu babysit foo` will recreate it automatically whenever it preempts.

See examples.

- Twitter: @theshawwn

- HN: sillysaurusx

- Discord: ML Community

- Patreon: thank you!

```sh
# Install pu
sudo pip3 install -U tpunicorn
```

(Use `sudo pip3` at your own risk. It's potentially easier, since `pu` is guaranteed to end up on your `PATH` regardless of your platform, but see installation caveats for a discussion of the tradeoffs.)

```sh
# View your TPUs
pu list

# Recreate a TPU named foo
pu recreate foo

# Watch a TPU named foo. If it preempts, recreate it automatically
pu babysit foo
```

Skip ahead to examples to see what else `pu` can do.

`pu` assumes you can successfully run `gcloud compute tpus list`. If so, then you're done! Otherwise, see the Troubleshooting section.

Rather than `sudo pip3 install -U tpunicorn`, you can install via a more "recommended" approach. (For example, the magic wormhole project lists some reasons you might want to avoid `sudo pip3`.)

Option 1: a local install

```sh
pip3 install --user -U tpunicorn

# add python's user directory to your PATH
echo 'export PATH="$HOME/.local/bin:$PATH"' >> ~/.bashrc

# restart your shell
exec -l "$SHELL"

# does pu work?
pu list
```

Option 2: a virtualenv

```sh
virtualenv venv
source venv/bin/activate
pip3 install tpunicorn
pu list
```

Unfortunately, you may experience a serious slowdown when using a venv. `pu` is implemented by shelling out to `gcloud compute tpus ...`, and `gcloud` seems to be very unhappy when it runs inside a virtualenv. `gcloud compute tpus list` takes ~8 seconds for me, which is a noticeable delay and makes `pu list` quite uncomfortable to use.

I've attempted to debug this, but as far as I can tell, the slowdown is somewhere deep inside `gcloud` internals related to reconfiguring paths. I assume it's detecting the venv and doing some sort of reconfiguration to account for it.
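To check whether this slowdown affects you, you could time `gcloud compute tpus list` once with the venv activated and once after `deactivate`. Below is a minimal bash sketch that uses the shell's built-in `time` keyword. `time_cmd` is a hypothetical helper and is not part of `pu` or `gcloud`.

```bash
#!/usr/bin/env bash
# time_cmd <command...>: run a command, discard its output, and print the
# elapsed wall-clock time in seconds. This relies on bash's `time` keyword,
# whose report format is set by TIMEFORMAT (%R = real time, 3 decimals).
time_cmd() {
  local TIMEFORMAT='%R'
  # The command's own stdout/stderr go to /dev/null. The outer 2>&1
  # captures only the timing report, which `time` writes to stderr.
  { time "$@" >/dev/null 2>&1; } 2>&1
}

# Example (requires gcloud; compare the two numbers):
#   source venv/bin/activate; time_cmd gcloud compute tpus list --zone europe-west4-a
#   deactivate;               time_cmd gcloud compute tpus list --zone europe-west4-a
```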

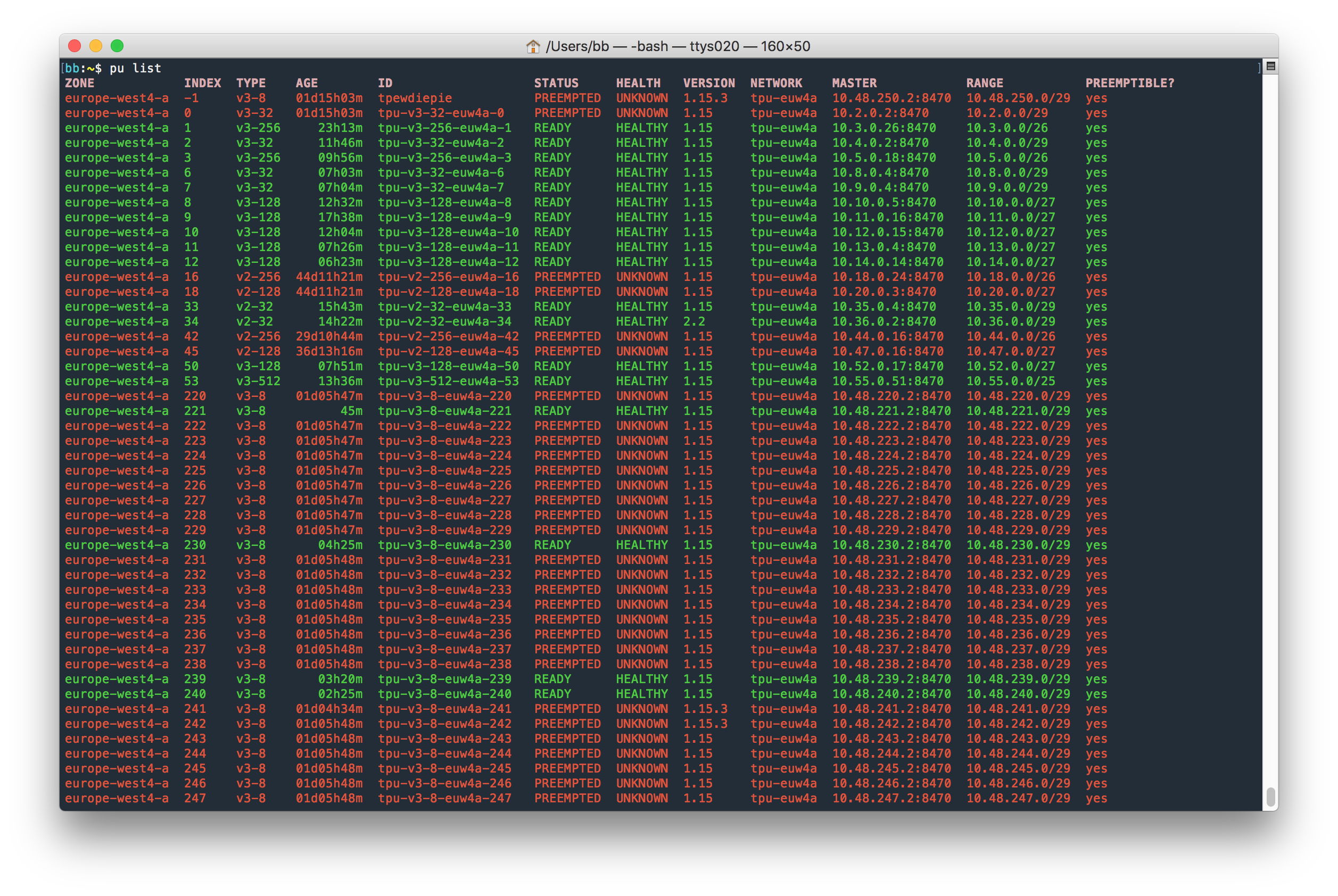

`pu list` shows your TPUs.

The INDEX is determined by checking whether your TPU name ends with a number. It's common to create TPUs with names like `tpu1`, `tpu2`, etc. If you use such a naming scheme, the number becomes the TPU's INDEX, and you can refer to the TPU by number on the command line. This is far easier than typing out the whole name.

(If two TPUs have the same index, referring to either of them by number throws an error, since that would be ambiguous.)
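The INDEX rule above can be sketched in bash as follows. It illustrates the described behavior: a trailing number becomes the INDEX, and an ambiguous number is rejected. It is a hypothetical sketch, not `pu`'s actual implementation. The helper names are made up, and treating `tpu08` as index 8 is an assumption.

```bash
#!/usr/bin/env bash
# Hypothetical sketch of the INDEX rule; not pu's actual implementation.

# tpu_index <name>: print the number a TPU name ends with (tpu12 -> 12),
# or print nothing if the name doesn't end with a digit.
tpu_index() {
  if [[ $1 =~ ([0-9]+)$ ]]; then
    # 10# forces base 10, so tpu08 yields 8 instead of an octal parse
    # error (treating tpu08 as index 8 is an assumption).
    echo $(( 10#${BASH_REMATCH[1]} ))
  fi
}

# resolve_tpu <index> <name>...: print the single name whose INDEX equals
# <index>. Fail with a message if no name, or more than one, matches.
resolve_tpu() {
  local want=$(( 10#$1 ))
  shift
  local matches=() name
  for name in "$@"; do
    if [[ $(tpu_index "$name") == "$want" ]]; then
      matches+=("$name")
    fi
  done
  case ${#matches[@]} in
    1) echo "${matches[0]}" ;;
    0) echo "no TPU with index $want" >&2; return 1 ;;
    *) echo "index $want is ambiguous: ${matches[*]}" >&2; return 1 ;;
  esac
}
```

For example, `resolve_tpu 2 tpu1 tpu2 foo` prints `tpu2`. In contrast, `resolve_tpu 1 tpu1 v3-tpu1` fails because both names end in 1.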

`pu top` is like `htop` for TPUs. Every few seconds, it clears the screen and runs `pu list`, i.e. it shows you the current status of all your TPUs. Use Ctrl-C to quit.
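The refresh loop described for `pu top` can be approximated with a plain bash loop that clears the screen, reruns a command, and sleeps. This is only a sketch of that behavior, not how `pu top` is implemented, and the `poll` helper is hypothetical. On systems with the procps `watch` utility, `watch -n 5 pu list --no-color` should give a similar result.

```bash
#!/usr/bin/env bash
# poll <seconds> <count> <command...>: clear the screen, run the command,
# then wait <seconds>. Repeat <count> times, or forever if <count> is 0.
poll() {
  local interval=$1 count=$2
  shift 2
  local i=0
  while (( count == 0 || i < count )); do
    printf '\033[H\033[2J'   # ANSI escapes: move cursor home, clear screen
    "$@"
    i=$(( i + 1 ))
    # Skip the final sleep once a fixed number of rounds is done.
    if (( count == 0 || i < count )); then
      sleep "$interval"
    fi
  done
}

# Usage (Ctrl-C to quit):  poll 5 0 pu list
```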

`pu recreate <TPU>` recreates an existing TPU, waits for the TPU's health to become `HEALTHY`, then runs the commands specified via `-c <command>`. To run multiple commands, pass multiple `-c <command>` options.

```sh
# Recreate a TPU named foo
pu recreate foo

# Recreate a TPU named foo, but only if it's PREEMPTED. Don't prompt
# for confirmation. After the TPU recreates and is HEALTHY, run a
# command.
pu recreate foo --preempted --yes -c 'echo This only runs after the TPU is HEALTHY'
```

```sh
# `pu babysit foo` is roughly equivalent to the following. (The -c
# options are provided here for illustration purposes; you can pass
# those to `pu babysit` as well.)
while true
do
  pu recreate foo --preempted --yes \
    -c "echo TPU recreated. >> logs.txt" \
    -c "pkill -9 -f my_training_program.py"
  sleep 30
done
```

`pu babysit <TPU>` will watch the specified TPU, recreating it whenever it preempts. You can specify commands to run afterwards via `-c <command>`. For example, you could pass a command that kills your current training session or sends you a message. To run multiple commands, pass multiple `-c <command>` options.

In a terminal, simulate a training session:

```sh
while true
do
  bash -c 'echo My Training Session; sleep 10000'
  echo restarting
  sleep 1
done
```

In a separate terminal, babysit a TPU named `my-tpu`:

```sh
pu babysit my-tpu -c 'pkill -9 -f "My Training Session"'
```

Whenever the TPU preempts, that command will:

- recreate the TPU named `my-tpu`
- wait for the TPU's health to become `HEALTHY`
- kill our simulated training session

The simulated training session will echo "restarting", indicating that it was successfully killed and the training process restarted itself.

In a real-world scenario, be sure that the `pkill` command only kills one specific instance of your training script. For example, suppose you run multiple training sessions with a script named `train.py`, using different `TPU_NAME` environment vars. A naive `pkill` command like `pkill -f train.py` would kill all of your training sessions, rather than the one associated with the TPU. (`pkill -f` matches against each process's command line, not its environment, so the different `TPU_NAME` values don't help it tell the sessions apart.)

(To solve that, I normally pass the TPU name as a command-line argument, then run `pkill -9 -f <TPU>`.)

Also, be sure to pass `pkill -9` rather than plain `pkill`. `-9` sends SIGKILL, which a process can't catch or ignore. That way, your training session will be restarted even if it's frozen.
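One way to apply the "pass the TPU name as a command-line argument" advice is sketched below. It assumes a hypothetical launch convention of `python3 train.py --tpu <TPU>`, so adjust the pattern to match your own command line. `pkill -f` treats its pattern as an extended regular expression and matches it anywhere in the full command line. A bare `pkill -9 -f tpu1` would therefore also kill the sessions for `tpu10`, `tpu11`, and so on. To prevent that, the sketch anchors the end of the name. `tpu_pattern` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# Hypothetical launch convention (one session per TPU):
#   python3 train.py --tpu tpu1
#
# tpu_pattern <TPU>: print an extended regex that matches only that TPU's
# session. The trailing "( |$)" requires the name to end there, so the
# pattern for tpu1 does not also match "--tpu tpu10".
tpu_pattern() {
  printf 'train\\.py --tpu %s( |$)' "$1"
}

# Usage with pu babysit, assuming pu runs -c commands through a shell.
# The pattern is expanded once, when you type this:
#   pu babysit tpu1 -c "pkill -9 -f '$(tpu_pattern tpu1)'"
```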

Lastly, consider running your actual training script like so:

```sh
while true
do
  timeout --signal=SIGKILL 11h <your training command>
  echo restarting
  sleep 30
done
```

This will force-kill your training command after a maximum of 11 hours of training (rather than waiting the theoretical maximum of 24 hours before your TPU preempts). This way, if your training session freezes for some reason (e.g. the TPUEstimator API stops making progress) then you'll lose no more than a few hours of training time.

Without this, we kept running into situations like "wake up the next day and discover that the training session has been frozen for the last 12 hours." We're still not entirely sure why. Suffice it to say, if your training session takes an hour to get into a stable state, you'll lose only ~2 hours in the usual case (no freezes; everything normal). In the worst case (the training loop froze and no one noticed), you'll gain several hours.
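As a rough check of that estimate, make three assumptions. The TPU survives its full 24 hours. The forced restarts land at about hour 11 and hour 22, ignoring the 30-second sleep. Each restart costs the ~1 hour needed to reach a stable state:

$$
\left\lfloor \frac{24\ \text{h}}{11\ \text{h}} \right\rfloor = 2\ \text{forced restarts}, \qquad 2 \times 1\ \text{h} \approx 2\ \text{h lost per day}
$$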

You might feel tempted to put a `pu recreate $TPU_NAME -y` command inside that while loop. After all, if your training session terminates, shouldn't it recreate the TPU? Perhaps; feel free to try it out and see if you like it. In our experience, we've found it's more effective to manage our TPUs separately rather than try to solve both concerns in the same script.

`pu list` shows the current status of all your TPUs. You can use `-t/--tpu <TPU>` to print the status of one specific TPU. To print the status of multiple TPUs, pass multiple `-t <TPU>` options.

```sh
# List TPU named foo. If it doesn't exist, throw an error.
pu list -t foo

# Dump the TPU in json format. If it doesn't exist, throw an error.
pu list -t foo --format json

# List TPUs named foo or bar. If foo or bar don't exist, don't throw an
# error. For each TPU, print a line of JSON. Then use `jq` to extract
# some interesting subfields, and format the result using `column`.
pu list -t foo -t bar -s --format json | \
  jq '.name+" "+.state+" "+(.health//"UNKNOWN")' -c -r | column -t
```

```
Usage: tpunicorn babysit [OPTIONS] TPU

  Checks TPU every INTERVAL seconds. Recreates the TPU if (and only if) the
  tpu has preempted.

Options:
  --zone [asia-east1-c|europe-west4-a|us-central1-a|us-central1-b|us-central1-c|us-central1-f]
  --dry-run
  -i, --interval <seconds>        How often to check the TPU. (default: 30
                                  seconds)
  -c, --command TEXT              After the TPU has been recreated and is
                                  HEALTHY, run this command. (Useful for
                                  killing a training session after the TPU has
                                  been recreated.)
  --help                          Show this message and exit.
```

```
Usage: tpunicorn recreate [OPTIONS] TPU

  Recreates a TPU, optionally switching the system software to the specified
  TF_VERSION.

Options:
  --zone [asia-east1-c|europe-west4-a|us-central1-a|us-central1-b|us-central1-c|us-central1-f]
  --version <TF_VERSION>          By default, the TPU is recreated with the
                                  same system software version. You can set
                                  this to use a specific version, e.g.
                                  `nightly`.
  -y, --yes
  --dry-run
  -p, --preempted                 Only recreate TPU if it has
                                  preempted. (Specifically, if the tpu's STATE
                                  is "PREEMPTED",proceed; otherwise do
                                  nothing.)
  -c, --command TEXT              After the TPU is HEALTHY, run this
                                  command. (Useful for killing a training
                                  session after the TPU has been recreated.)
  --help                          Show this message and exit.
```

```
Usage: tpunicorn list [OPTIONS]

  List TPUs.

Options:
  --zone [asia-east1-c|europe-west4-a|us-central1-a|us-central1-b|us-central1-c|us-central1-f]
  --format [text|json]
  -c, --color / -nc, --no-color
  -t, --tpu TEXT                  List a specific TPU by id.
  -s, --silent                    If listing a specific TPU by ID, and there
                                  is no such TPU, don't throw an error.
  --help                          Show this message and exit.
```

```
Usage: tpunicorn reimage [OPTIONS] TPU

  Reimages the OS on a TPU.

Options:
  --zone [asia-east1-c|europe-west4-a|us-central1-a|us-central1-b|us-central1-c|us-central1-f]
  --version <TF_VERSION>          By default, the TPU is reimaged with the
                                  same system software version. (This is handy
                                  as a quick way to reboot a TPU, freeing up
                                  all memory.) You can set this to use a
                                  specific version, e.g. `nightly`.
  -y, --yes
  --dry-run
  --help                          Show this message and exit.
```

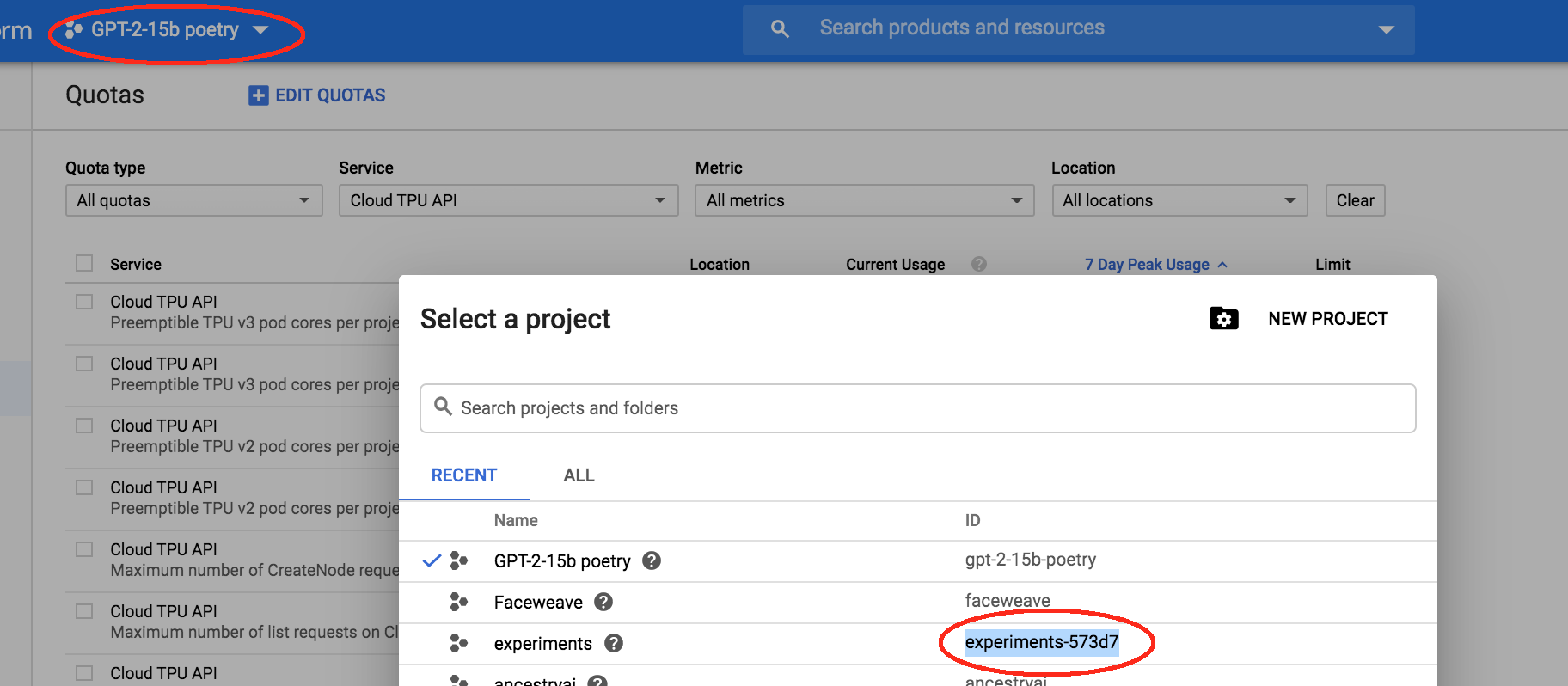

- Ensure your project is set

```sh
gcloud config set project <your-project-id>
```

Note that the project ID isn't necessarily the same as the project name. You can get it via the GCE console:

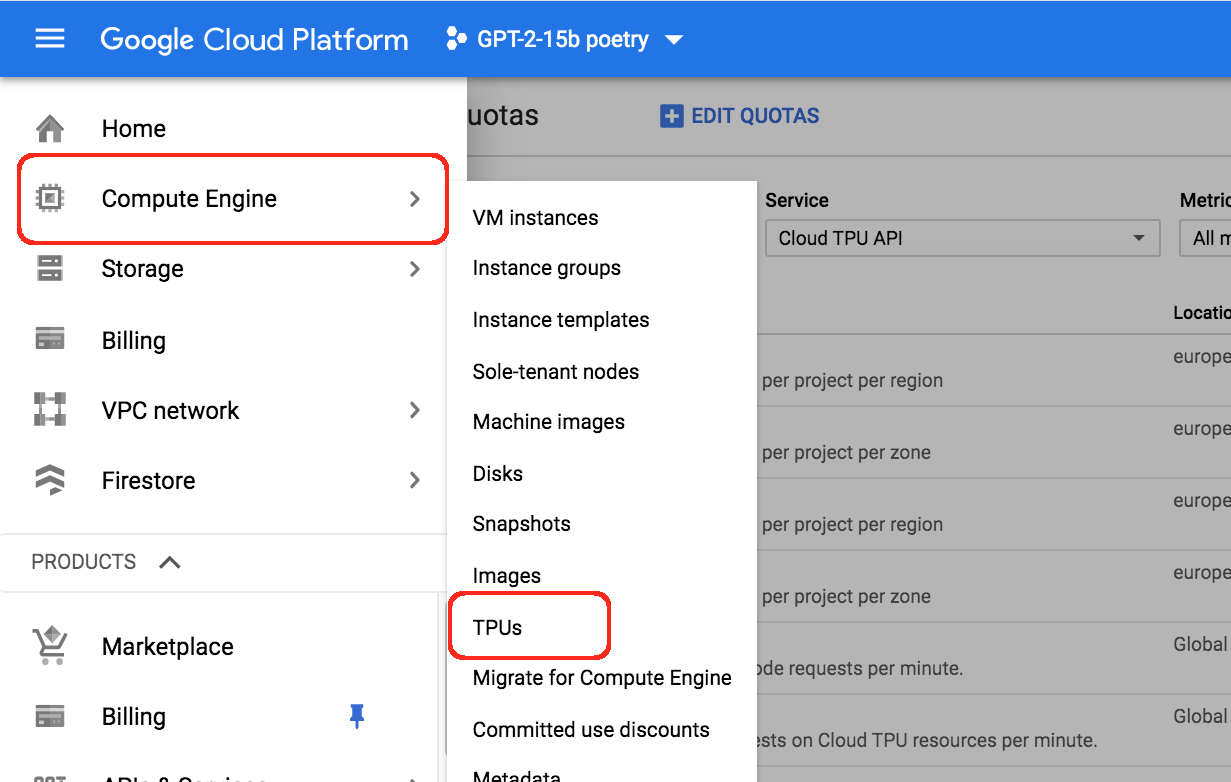

While you're there, go the Cloud TPU page:

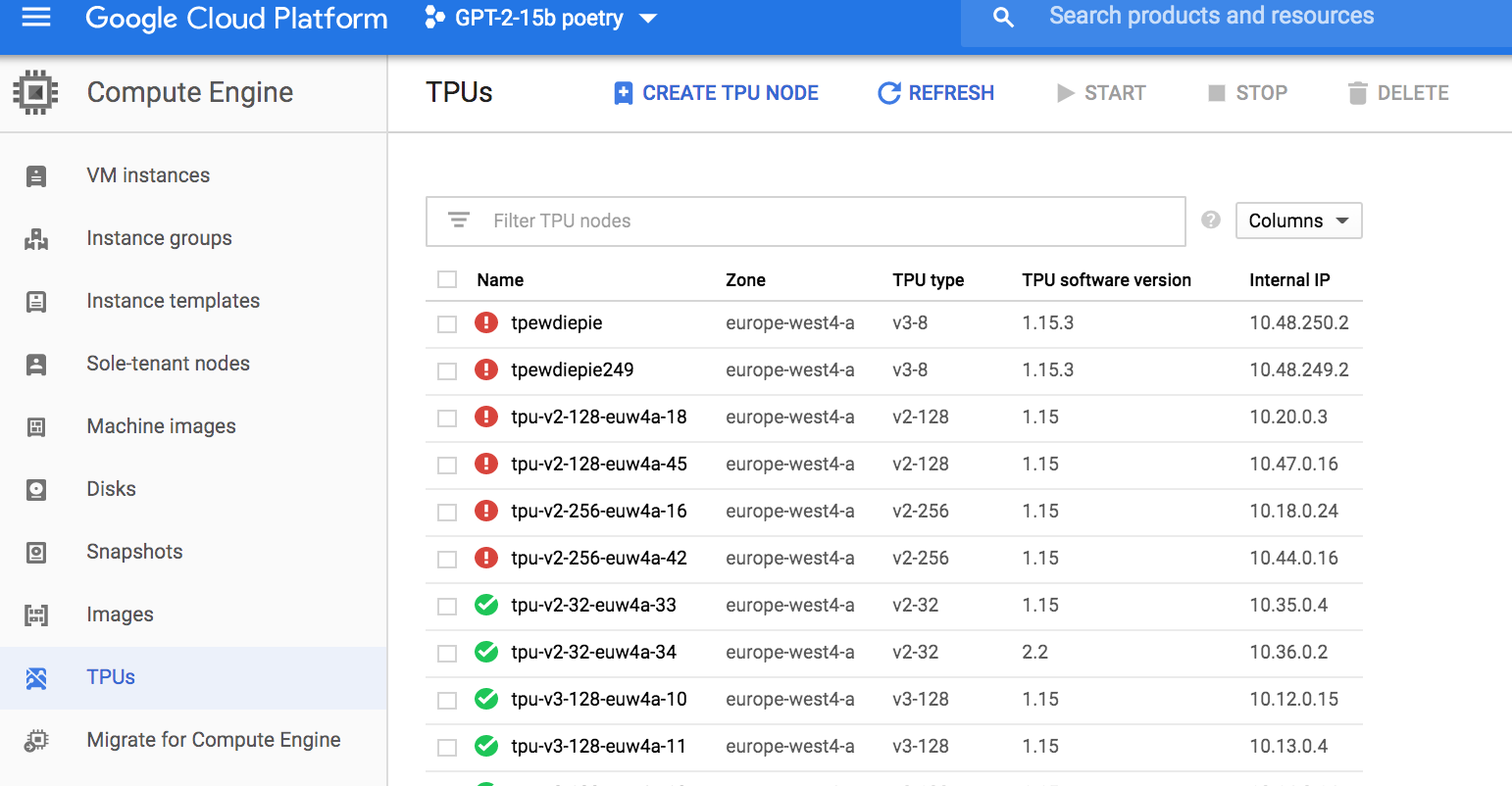

If it asks you to enable the Cloud TPU API, then do so. Afterwards you should see the GCE TPU dashboard:

Create a TPU using "Create TPU node" to verify that your project has TPU quota in the desired region.

- Ensure your command-line tools are properly authenticated

Use `gcloud auth list` to see your current account.

If security isn't a concern, you can use `gcloud auth login` followed by `gcloud auth application-default login` to log in as your primary Google identity. Usually, this means that your terminal now has "root access" to all GCE resources.

If you're on a server, you might want to use a service account instead:

- Create a service account, granting it the "TPU Admin" role for TPU management, or the "TPU Viewer" role for read-only viewing.
- Upload the keyfile to your server. (I use `wormhole send ~/keys.json` for that. You can install it with `pip install magic-wormhole`.)

For example, I created a `tpu-test` service account, then created a `~/tpu_key.json` keyfile:

```
$ gcloud iam service-accounts keys create ~/tpu_key.json --iam-account tpu-test@gpt-2-15b-poetry.iam.gserviceaccount.com
created key [03db745322b4e7c4e9e2036386d1e908eb2e1a52] of type [json] as [/Users/bb/tpu_key.json] for [tpu-test@gpt-2-15b-poetry.iam.gserviceaccount.com]
```

Then I sent that `~/tpu_key.json` file to my server, and activated the service account:

```
$ gcloud auth activate-service-account tpu-test@gpt-2-15b-poetry.iam.gserviceaccount.com --key-file ~/tpu_key.json
Activated service account credentials for: [tpu-test@gpt-2-15b-poetry.iam.gserviceaccount.com]
```

I checked `gcloud auth list` to verify I'm now using that service account:

```
$ gcloud auth list
                  Credentialed Accounts
ACTIVE  ACCOUNT
        shawnpresser@gmail.com
*       tpu-test@gpt-2-15b-poetry.iam.gserviceaccount.com

To set the active account, run:
    $ gcloud config set account `ACCOUNT`
```

At that point, as long as you've run `gcloud config set project <your-project-id>`, `gcloud compute tpus list --zone europe-west4-a` should succeed.

To avoid having to pass `--zone europe-west4-a` to all your `gcloud` commands, you can make it the default zone:

```sh
gcloud config set compute/zone europe-west4-a
```
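If you'd rather script the service-account steps above, the sketch below prints the corresponding `gcloud` commands so you can review them before running anything. It assumes the role IDs `roles/tpu.viewer` and `roles/tpu.admin` for the "TPU Viewer" and "TPU Admin" roles; double-check those against the Cloud IAM documentation. `sa_setup_commands` is a hypothetical helper.

```bash
#!/usr/bin/env bash
# sa_setup_commands <project-id> <account-name> [role]
# Print (without running) the gcloud commands that create a service
# account, grant it a TPU role, and write a key to ~/tpu_key.json.
# Assumed role IDs: roles/tpu.viewer (read-only, the default here)
# or roles/tpu.admin (full TPU management).
sa_setup_commands() {
  local project=$1 name=$2 role=${3:-roles/tpu.viewer}
  local email="$name@$project.iam.gserviceaccount.com"
  echo "gcloud iam service-accounts create $name --project $project"
  echo "gcloud projects add-iam-policy-binding $project --member serviceAccount:$email --role $role"
  echo "gcloud iam service-accounts keys create ~/tpu_key.json --iam-account $email"
}

# Review first, then execute:
#   sa_setup_commands <your-project-id> tpu-test roles/tpu.admin
#   sa_setup_commands <your-project-id> tpu-test roles/tpu.admin | sh
```

Defaulting to the read-only role keeps an accidental run from granting more access than `pu list` needs.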

As far as I know, it's completely safe to make a "TPU Viewer" service account world-readable. For example, if you want to let everyone view your TPUs for some reason, you can simply put the `~/tpu_key.json` file somewhere that anyone can download.

(If this is mistaken, please DM me on Twitter.)

If you're an ML enthusiast, join our TPU Podcast Discord Server. There are now ~400 members, with ~60 online at any given time.

There are a variety of interesting channels:

- #papers for pointing out interesting research papers
- #research for discussing ML research
- #show and #samples for showing off your work
- #hardware for hardware enthusiasts
- #ideas for brainstorming
- #tensorflow and #pytorch
- #cats, #doggos, and of course #memes
- A "bot zone" for interacting with our Discord bots, such as using `!waifu red_hair blue_eyes` to generate an anime character using StyleGAN
- Quite a few more.

If you found this library helpful, consider joining my Patreon.