This project will no longer be maintained by Intel. Intel has ceased development and contributions including, but not limited to, maintenance, bug fixes, new releases, or updates, to this project. Intel no longer accepts patches to this project.

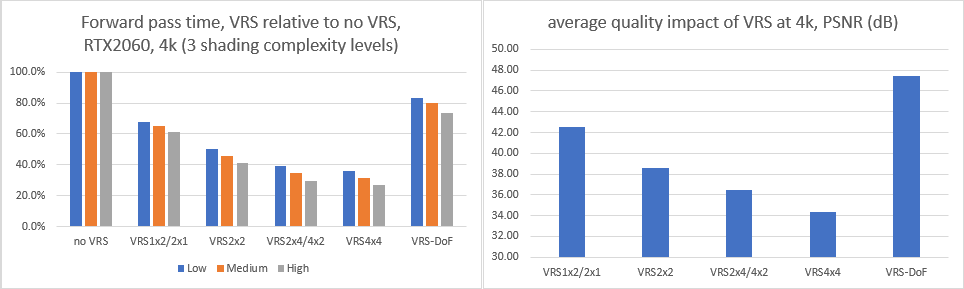

The main motivation for this sample is to explore the quality vs performance trade-offs that are a major part of using Variable Rate Shading, and to demonstrate using VRS in combination with an effect such as Depth of Field in a forward renderer. It is mainly focused on Tier 1 (per-draw call) VRS, but many of the lessons should be useful for Tier 2 or mixed implementations. Data generated by an earlier version of this sample was used for the SIGGRAPH 2019 talk "Use Variable Rate Shading (VRS) to Improve the User Experience in Real-Time Game Engines" (https://software.intel.com/en-us/videos/use-variable-rate-shading-vrs-to-improve-the-user-experience-in-real-time-game-engines), which covers many of the VRS topics related to this sample.

VRS lets one run pixel shaders at a lower frequency (effectively, resolution) while running geometry rasterization, depth testing and blending at full resolution. The 'Tier 1' feature subset allows changing the pixel shading rate on a per draw call basis, while 'Tier 2' extends it to a per primitive (triangle) or per screen space image tile (with an 8x8 or 16x16 pixel granularity) basis. In other words, VRS lets the developer selectively reduce pixel shading cost in areas where it does not significantly contribute to visual quality, while preserving full resolution geometry, depth/stencil testing and blending detail. As a rule of thumb, VRS will be useful if it can be targeted to a specific subset of draw calls or pixels, especially where geometry, depth/stencil testing and blending are significantly more impactful to image quality than pixel shading. Conversely, it is not a good idea to apply VRS unselectively, for example to a whole full screen post-process draw pass, because it will result in both worse performance and worse quality compared to just doing a lower resolution pass. A possible exception would be a full screen pass that performs depth testing or blending, as these will still happen at full resolution with VRS.

For more detail please refer to the API specification: https://microsoft.github.io/DirectX-Specs/d3d/VariableRateShading.html

This sample is using a relatively simple forward renderer with material shading based mostly on Filament (https://github.com/google/filament), with a few post-process effects and a simple GI based on IBL light probes. We use the Bistro dataset with roughly 700 meshes and 130 materials. Meshes are a good mix of small and large triangles, which is useful to evaluate the rendering pipeline behavior with VRS. The panel on the left provides various options for evaluating VRS under different scenarios, as well as a scripted performance and quality benchmark and other tools. Classic FPS camera movement (WSAD+mouse) is enabled by right clicking anywhere in the window area.

For this demo, we settle on a relatively simple 'VRS base rate' approach. We compute a single integer value based on a couple of criteria, which gets converted to the VRS shading rate on a per draw call granularity.

This 'VRS base rate' is a simple sum of individual 'VRS offset' values. In our case we have only two:

- Per-object 'VRS offset' gets computed based on the amount of blur that the object receives from the Depth of Field effect.

- Per-material 'VRS offset' is a fixed setting determined by an artist or automatically.

The offsets are simply added together and clamped to the [0, 4] range. For more granularity, it would make sense to use a float instead of an integer. This number is then mapped to the 1x1, 1x2/2x1, 2x2, 2x4/4x2 and 4x4 shading rates, with the horizontal vs vertical choice based on a per-material preference.
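As a rough illustration, the sum, clamp and mapping described above could be sketched in C++ as follows. This is not the sample's actual code: `ShadingRate`, `AxisPreference`, `ComputeVrsBaseRate` and `ToShadingRate` are hypothetical names, and the sketch assumes that a 'horizontal' preference selects 2x1 and 4x2 while a 'vertical' preference selects 1x2 and 2x4.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical stand-in for the API shading rate values (illustrative names only).
enum class ShadingRate { Rate1x1, Rate1x2, Rate2x1, Rate2x2, Rate2x4, Rate4x2, Rate4x4 };

// Per-material horizontal vs vertical preference.
enum class AxisPreference { Horizontal, Vertical };

// 'VRS base rate' = sum of the individual 'VRS offset' values, clamped to [0, 4].
int ComputeVrsBaseRate(int dofOffset, int materialOffset) {
    return std::max(0, std::min(4, dofOffset + materialOffset));
}

// Maps the base rate to a shading rate; values 1 and 3 consult the preference.
ShadingRate ToShadingRate(int baseRate, AxisPreference preference) {
    assert(baseRate >= 0 && baseRate <= 4);
    const bool horizontal = (preference == AxisPreference::Horizontal);
    switch (baseRate) {
        case 0:  return ShadingRate::Rate1x1;
        case 1:  return horizontal ? ShadingRate::Rate2x1 : ShadingRate::Rate1x2;
        case 2:  return ShadingRate::Rate2x2;
        case 3:  return horizontal ? ShadingRate::Rate4x2 : ShadingRate::Rate2x4;
        default: return ShadingRate::Rate4x4;
    }
}
```

For example, a DoF offset of 1 combined with a material offset of 0 and a vertical preference would select 1x2 under these assumptions.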

Before we go into these individual steps in more detail, it should be noted that this approach is more suitable to Forward (Forward+, etc.) renderers and Tier 1 VRS and is just one of the ways to utilize VRS. VRS Tier 2 enables various other approaches such as detailed in Martin Fuller's excellent GDC 2019 talk: https://www.youtube.com/watch?v=2vKnKba0wxk

It is also interesting to note that the 2x1/1x2 shading rates often provide the biggest performance savings, with quality that is roughly in between no VRS (1x1) and 2x2. Each subsequent VRS shading rate provides diminishing returns in performance savings as the GPU starts hitting various bottlenecks, while the quality loss is always at least proportional to the reduction in the number of pixels being shaded (see the SIGGRAPH 2019 presentation for more detail). In the same way, the 4x2 and 2x4 shading rates provide just slightly lower performance savings compared to 4x4 but a lot higher quality. So it is good not to ignore the rectangular (non-square) shading rates, even though they might appear a bit more obscure than the square ones.

The only effect in this demo that drives the VRS rate is the Depth of Field effect. Other good candidates would be motion blur, fog or similar. To compute the per-object DoF VRS offset, we take the object's oriented bounding box and compute, conservatively, how much blurring it receives from the effect, represented as a [0, 1] normalized value. The code for this is in the 'vaDepthOfField::ComputeConservativeBlurFactor' function. We then convert this [0, 1] value into the 'VRS offset' based on the user settings. Please check the SIGGRAPH 2019 talk "Use Variable Rate Shading (VRS) to Improve the User Experience in Real-Time Game Engines" for more detail.
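The exact conversion from the blur factor to the 'VRS offset' depends on the user settings and is not spelled out here, so the following is only a minimal sketch of one possible mapping. The `DofVrsSettings` struct, its `blurThreshold` and `maxOffset` fields and the `BlurFactorToVrsOffset` function are all assumptions made for illustration, not part of the sample.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical user settings controlling how aggressively DoF blur reduces the shading rate.
struct DofVrsSettings {
    float blurThreshold; // blur factor at or below which no reduction is applied, in [0, 1)
    int   maxOffset;     // largest 'VRS offset' the DoF effect may contribute
};

// Converts a conservative [0, 1] blur factor into an integer 'VRS offset'.
int BlurFactorToVrsOffset(float blurFactor, const DofVrsSettings& settings) {
    assert(settings.blurThreshold >= 0.0f && settings.blurThreshold < 1.0f);
    assert(settings.maxOffset >= 0);
    const float blur = std::min(1.0f, std::max(0.0f, blurFactor));
    if (blur <= settings.blurThreshold)
        return 0;
    // Remap (threshold, 1] to (0, 1], then quantize by rounding down, which keeps
    // the offset from being larger than the amount of blur justifies.
    const float t = (blur - settings.blurThreshold) / (1.0f - settings.blurThreshold);
    const int offset = static_cast<int>(std::floor(t * (settings.maxOffset + 1)));
    return std::min(settings.maxOffset, offset);
}
```

Rounding down is a deliberately conservative choice in this sketch: an object that is only slightly blurred keeps the full shading rate.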

Some materials suffer particularly badly from shading rate reduction while others are a lot less sensitive. We use a per-material 'VRS offset' setting to reduce the VRS quality loss on specific materials. This is initialized automatically using the "Material VRSRateOffset setup" script which simply goes through all the materials and tests various shading rates across 32 scene locations, finding the materials that show the highest MSE (Mean Squared Error) / lowest PSNR metric using non-VRS images as a baseline. This process does not take into consideration the amount of performance savings that we can get from the materials, which varies due to individual shader complexity and the number of pixels rendered, and thus is not fully automatic. We then use these settings as a starting point and manually tweak the per-material offsets for the optimal visual quality loss vs performance gain trade-off. This could be more automated, but we did not investigate further.

We previously implied that we map a 'VRS base rate' value of 1 to the 1x2/2x1 shading rates and a value of 3 to the 2x4/4x2 shading rates, but we didn't explain how we decide whether to use the horizontal or the vertical rate. We do this based on a horizontal/vertical per-material preference setting, which is either set manually or by automatic profiling based on image quality metrics.

In this demo we automate it ("Optimize material horiz/vert VRS preference" button on the left panel) by following these simple steps:

- We use 32 camera locations from the automated flythrough to collect non-VRS reference images.

- For each material and each camera location, we check whether the 2x1 ('horizontal') or 1x2 ('vertical') is closer to the 1x1 reference and increment the horizontal or vertical score.

- We repeat this for every material.
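The voting in the steps above could be sketched roughly as follows for a single material. This is an illustration rather than the sample's implementation: images are reduced to flat arrays of floats, MSE is used as the closeness metric, and the `Capture` struct, the `VotePreference` function and the `minDifference` cutoff for "too little difference" are hypothetical.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

using Image = std::vector<float>; // flattened pixel values, same layout for every image

// Mean Squared Error between two images of identical size.
double MeanSquaredError(const Image& a, const Image& b) {
    assert(a.size() == b.size() && !a.empty());
    double sum = 0.0;
    for (std::size_t i = 0; i < a.size(); ++i) {
        const double d = static_cast<double>(a[i]) - static_cast<double>(b[i]);
        sum += d * d;
    }
    return sum / static_cast<double>(a.size());
}

enum class AxisPreference { Horizontal, Vertical, Undecided };

// One camera location: 1x1 reference plus renders with only this material at 2x1 and 1x2.
struct Capture {
    Image reference;  // no VRS
    Image horizontal; // material shaded at 2x1
    Image vertical;   // material shaded at 1x2
};

// Counts, over all camera locations, which rate is closer to the reference.
AxisPreference VotePreference(const std::vector<Capture>& captures, double minDifference) {
    int horizontalScore = 0;
    int verticalScore = 0;
    for (const Capture& c : captures) {
        const double mseH = MeanSquaredError(c.horizontal, c.reference);
        const double mseV = MeanSquaredError(c.vertical, c.reference);
        if (std::abs(mseH - mseV) < minDifference)
            continue; // material not visible here, or both rates look the same
        if (mseH < mseV) ++horizontalScore; else ++verticalScore;
    }
    if (horizontalScore == verticalScore)
        return AxisPreference::Undecided;
    return horizontalScore > verticalScore ? AxisPreference::Horizontal
                                           : AxisPreference::Vertical;
}
```

Materials that come back as `Undecided` in a scheme like this are the natural candidates for a list handed over for manual tuning.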

In the end, we end up with a per-material preference that tells us whether to translate a 'VRS base rate' value of 1 to 2x1 or 1x2. The visual benefit of this is obvious but also measurable: with our scene at 2560x1600 across 32 images, PSNR (higher is better, logarithmic scale) for 'all horizontal' 2x1 is 39.6dB, for 'all vertical' 1x2 is 40.2dB and for per-material preference is 41.2dB. (For reference, 2x2 is 36.7dB.) There are some considerations though, and reasons why this approach might not work in your use case:

- If we compute per-material preference using 2x4/4x2 (instead of 1x2/2x1) rates, this drops our 1x2/2x1 PSNR from 41.2dB to 41.0dB indicating that what's best for 1x2/2x1 isn't necessarily the best for 2x4/4x2 but it is still a lot better than not having a preference.

- If the automated test does not have coverage of the specific material (for ex., material is used by a poorly lit or obscured object), it will not work. Still, even in this worst-case scenario it will simply not cause any harm. We can have the automated system output the list of these materials for manual, artist tuning.

- In our test case (first/third person game), the camera up vector is relatively constant regardless of location. The horizontal/vertical preference will not hold up in a game with no camera constraints (such as a flight simulator, although even then the orientation of the items in the cockpit interior will be relatively fixed with regards to the screen). This is also an issue in cases where lighting can completely change, as the per-material horizontal/vertical preference setting does not help with effects such as shadows. Still, automated preference search might hold up in most scenarios.

- Results can differ slightly if the starting point is different, such as a different resolution, or if material preferences were updated, saved, and the test was then restarted (but in this case they tend to converge to the same final result).

In this demo we use 131 materials, and out of those the automated system has determined that 67 are best with horizontal preference, 45 with vertical and 19 have too little coverage or too little difference.

We have noticed two separate penalties to frequently changing shading rate through the RSSetShadingRate:

- On some hardware/software configurations there is a noticeable CPU cost to calling RSSetShadingRate (on the DX12 API or driver level). It is therefore advisable to cache the previous value that was set on the command list and only change it when needed. For example, on RTX 2060 in close to CPU bound scenarios (low res, 100+FPS), VRS can actually cause a performance regression.

- On some Intel integrated graphics hardware changing VRS shading rate can cause a partial pipeline flush, introducing both a fixed performance cost and preventing parallelization between draw calls on the GPU.

For this reason, opaque draw calls in this sample are sorted by the VRS shading rate (this behavior can be switched on/off with the "Sort draw calls by VRS shading rate" option). Depth pre-pass draw calls never use VRS (and are sorted front to back), while transparencies always remain sorted back to front for correctness.
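A minimal sketch of both mitigations, caching the last shading rate and sorting opaque draw calls by rate, is shown below. It does not use the real D3D12 interfaces: `FakeCommandList` is a hypothetical stand-in that only counts how often the rate is changed (in a real renderer this would be the RSSetShadingRate call on the command list), and `DrawCall`, `ShadingRateCache` and `SubmitOpaque` are illustrative names.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

enum class ShadingRate { Rate1x1, Rate1x2, Rate2x1, Rate2x2, Rate2x4, Rate4x2, Rate4x4 };

struct DrawCall {
    int         meshId;
    ShadingRate rate;
};

// Hypothetical stand-in for a command list; counts shading rate changes.
struct FakeCommandList {
    int setShadingRateCalls = 0;
    void SetShadingRate(ShadingRate) { ++setShadingRateCalls; }
    void Draw(int /*meshId*/) {}
};

// Remembers the last rate set on the command list and skips redundant calls.
class ShadingRateCache {
public:
    explicit ShadingRateCache(FakeCommandList& commandList) : m_commandList(commandList) {}
    void Set(ShadingRate rate) {
        if (m_valid && rate == m_current)
            return; // same as last time, avoid the API/driver cost
        m_commandList.SetShadingRate(rate);
        m_current = rate;
        m_valid = true;
    }
private:
    FakeCommandList& m_commandList;
    ShadingRate      m_current = ShadingRate::Rate1x1;
    bool             m_valid = false;
};

// Submits opaque draws, optionally grouped by shading rate to minimize rate changes.
void SubmitOpaque(std::vector<DrawCall> draws, FakeCommandList& commandList, bool sortByRate) {
    assert(commandList.setShadingRateCalls >= 0);
    if (sortByRate) {
        // stable_sort keeps the existing relative order within each rate group.
        std::stable_sort(draws.begin(), draws.end(),
                         [](const DrawCall& a, const DrawCall& b) { return a.rate < b.rate; });
    }
    ShadingRateCache cache(commandList);
    for (const DrawCall& draw : draws) {
        cache.Set(draw.rate);
        commandList.Draw(draw.meshId);
    }
}
```

With five draws alternating between 1x1 and 2x2, the unsorted path in this sketch changes the rate four times, while the sorted path changes it only twice.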

Even in a forward renderer, some effects such as SSAO are often precomputed as a fullscreen texture and sampled in a pixel shader, causing VRS-related artifacts. This is because at the lower shading rate only one value is sampled from the fullscreen texture and used to represent the whole VRS block of pixels, which causes the effect to bleed between different surfaces (across geometry boundaries). Worse yet, if point sampling is used, there will be no filtering and, since the sampling location is exactly at the boundary between (full resolution) pixels, the sampling behavior is somewhat undefined. To avoid this worst case, it is enough to sample with a linear filter for VRS rates up to 2x2, while the rates above 2x2 require both a linear sampler and an additional MIP layer for the fullscreen texture. In that case it is also advisable to use a negative MIP bias of -1.0 or similar, to allow for correct filtering with rectangular VRS rates (1x2, 2x1, 2x4, 4x2).

In short, when using VRS and sampling from a fullscreen texture, to avoid the worst of the artifacts (bleeding/haloing, instability), at least enable linear filter. To ensure filtering works for 2x4, 4x2 and 4x4 rates, create one additional mip layer for the input fullscreen texture (a small performance penalty). Use MIP bias of -1.0 or similar to make sure filtering is correct for rectangular VRS rates.
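To see why a bias of about -1.0 fits, the small C++ sketch below estimates which MIP level would be selected for each shading rate. It rests on two assumptions that are ours and not taken from the sample: that texture coordinate derivatives under VRS span one coarse pixel, and that the level of detail is roughly log2 of the larger footprint dimension in texels. `SelectedMipLevel` is a hypothetical helper, not an API call.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Estimates the MIP level used when sampling a fullscreen texture from a
// coarse pixel of rateWidth x rateHeight full resolution pixels.
// Assumption: LOD ~ log2(max footprint dimension) + bias, clamped to the MIP chain.
float SelectedMipLevel(int rateWidth, int rateHeight, float mipBias, int mipCount) {
    assert(rateWidth >= 1 && rateHeight >= 1 && mipCount >= 1);
    const float footprint = static_cast<float>(std::max(rateWidth, rateHeight));
    const float lod = std::log2(footprint) + mipBias;
    return std::min(std::max(lod, 0.0f), static_cast<float>(mipCount - 1));
}
```

Under these assumptions and with two MIP levels, a 1x2 rate with no bias would select MIP 1 and average a 2x2 area, which blurs more than needed horizontally. With a bias of -1.0 it selects MIP 0, where the linear filter at the block center blends exactly the two covered pixels. Likewise 2x4 and 4x4 land on MIP 1, and 2x2 on MIP 0, so one additional MIP layer is sufficient.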

Note: when SSAO is applied only as a post-process fullscreen pass as a simple multiply blend, there are no issues with VRS because that 'apply AO' fullscreen pass will be done using no VRS. The case we describe here is a more complex approach where AO is used as part of the lighting equations (Chan 2018, "Material Advances in Call of Duty: WWII"; W. Brinck and A. Maximov, "The Technical Art of Uncharted 4", SIGGRAPH 2016). Another similar example of this issue is when computing shadows as a fullscreen pre-pass, in which case light bleed artifacts are a lot more pronounced.

A more advanced solution to this problem could be to use depth/normal information to precompute a per-VRS-rate fullscreen UV offset map that is then used to shift sampling locations for fullscreen textures such that they don't bleed across different surfaces (thanks to Gus Romero from Codemasters for the idea).

If we imagine the ability to run pixel shader at the lower rate (VRS) but still output the individual pixels at the higher rate (not currently supported by VRS), we could think of scenarios where it is beneficial to split the computation into coarse (for ex., diffuse lighting) and full resolution (for ex., specular lighting, alpha testing) parts, all within the capabilities of the classic pixel shading pipeline.

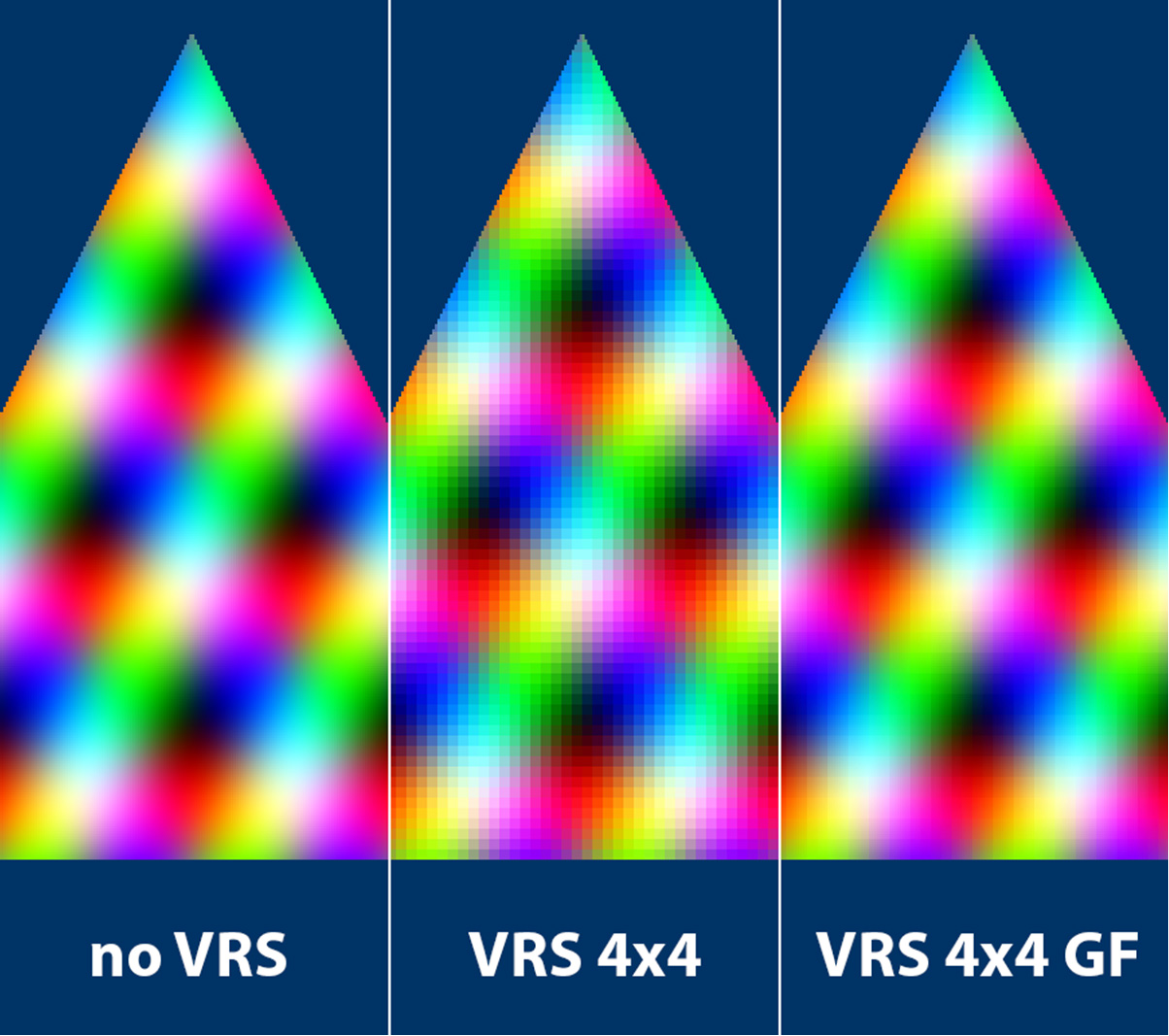

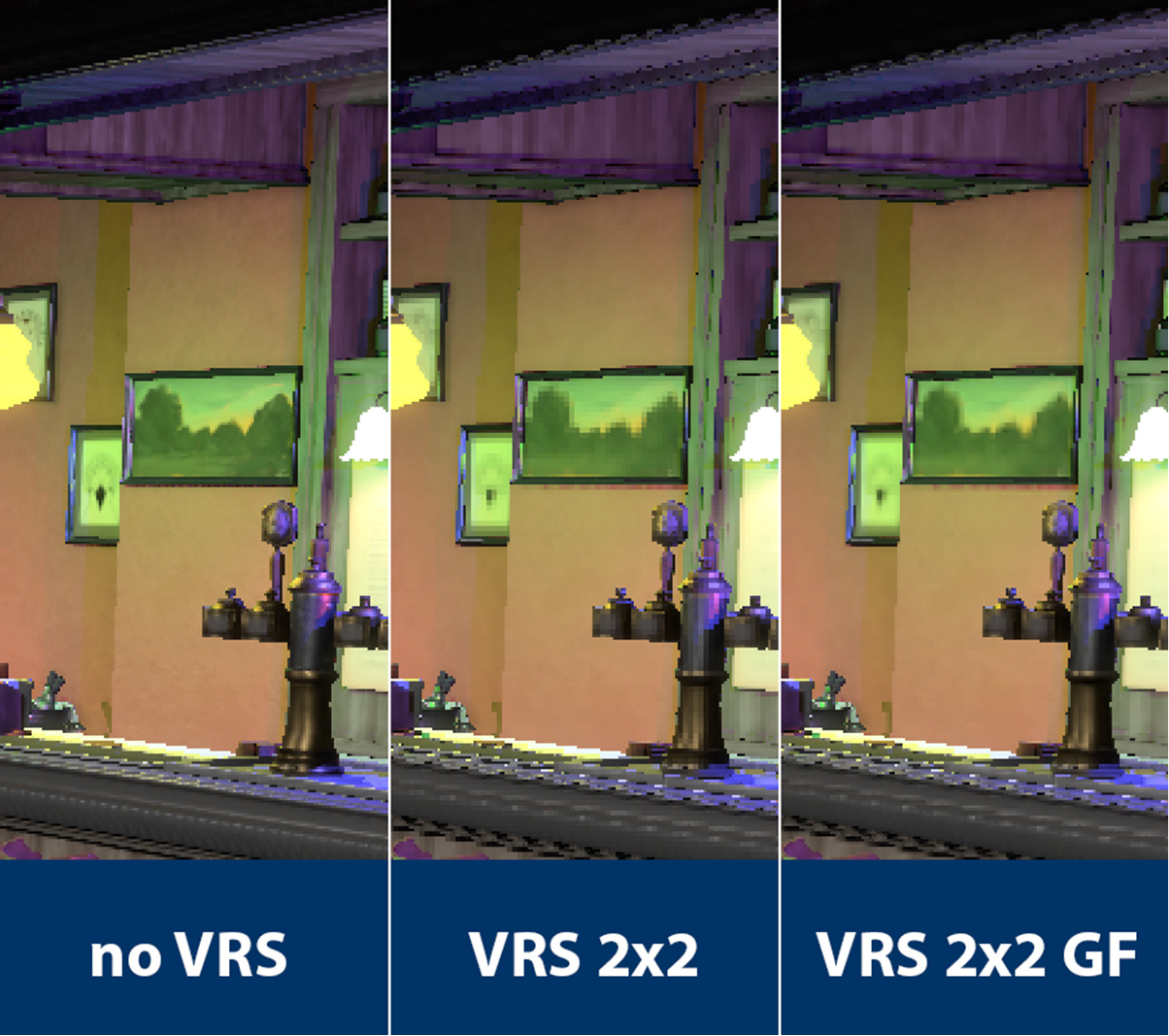

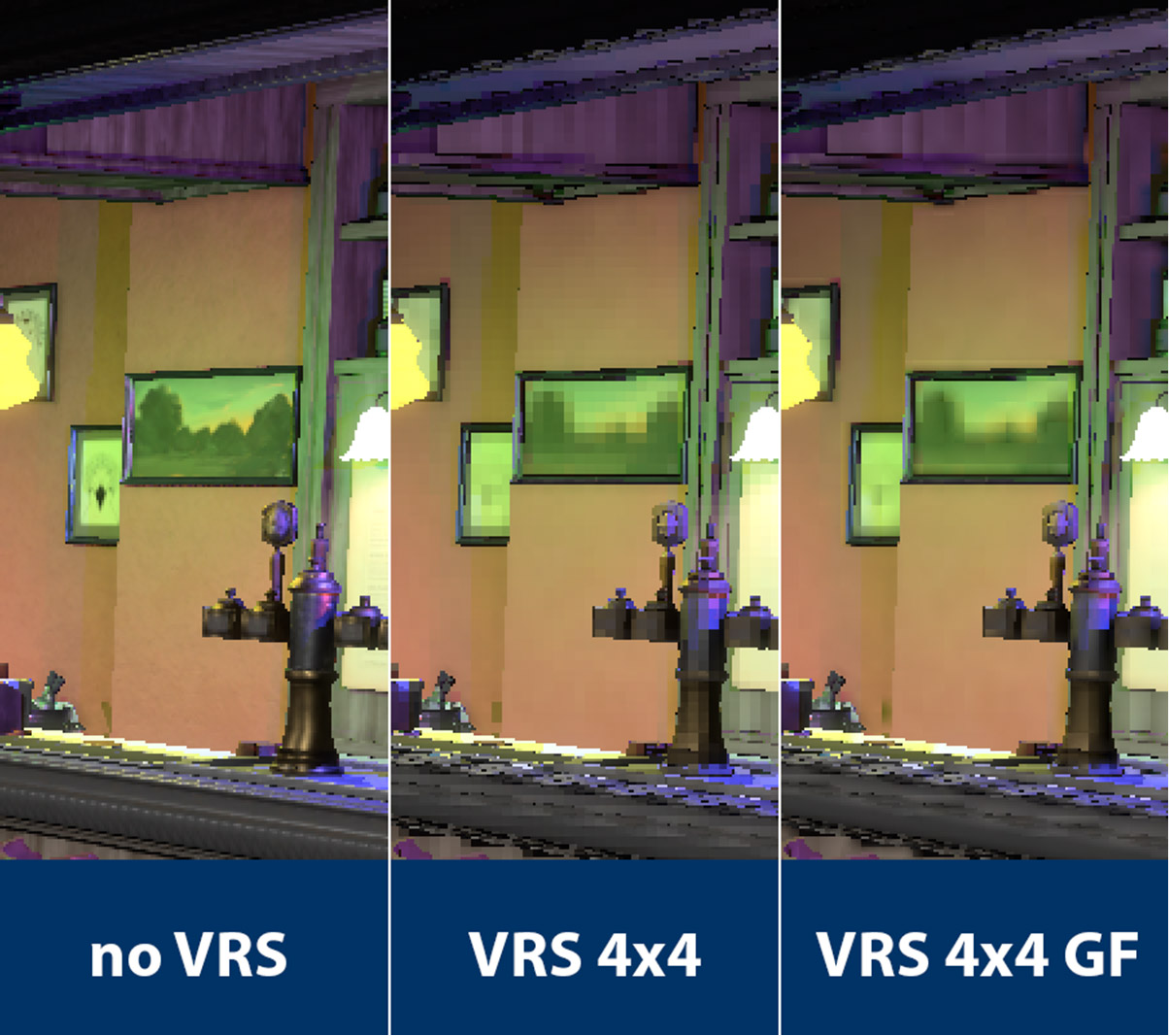

To explore this idea, we created a filter that can be added at the end of a pixel shader in order to mitigate one of the main issues when using VRS - blockiness artifacts. This filter works by taking the final (per-VRS block) color output and, based on the information available within the 2x2 shading quad (obtained simply with ddx/ddy partial derivative instructions), it part-interpolates and part-extrapolates color values over the output full resolution pixels.

The output of this Gradient Filter is visually better than the native output of VRS in almost all cases (including a 0.5-1.0 dB higher overall PSNR), at the expense of a couple of math instructions at the end of the shader and the added cost of outputting per-pixel values instead of broadcasting one color value across the whole block (the latter varies with hardware/driver implementation).
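The general idea can be illustrated on the CPU with the C++ sketch below. It is not the driver-level filter itself, whose details are not given here: it simply assumes a linear reconstruction in which each full resolution pixel receives the block color plus the quad's color gradient scaled by the pixel's offset from the block center. `Color` and `ReconstructPixel` are hypothetical names, and `ddxColor`/`ddyColor` stand for what the ddx/ddy instructions would return for the final color.

```cpp
#include <cassert>

struct Color { float r, g, b; };

// Reconstructs the color of one full resolution pixel inside a VRS block.
//   blockColor         color shaded once for the whole block (at its center)
//   ddxColor, ddyColor color difference to the neighboring block in the 2x2 quad
//   rateWidth/Height   block size in full resolution pixels (e.g. 2x2, 4x2)
//   px, py             pixel index inside the block, starting at 0
Color ReconstructPixel(Color blockColor, Color ddxColor, Color ddyColor,
                       int rateWidth, int rateHeight, int px, int py) {
    assert(rateWidth >= 1 && rateHeight >= 1);
    assert(px >= 0 && px < rateWidth && py >= 0 && py < rateHeight);
    // Offset of the pixel center from the block center, in block units (-0.5..0.5).
    const float ox = (px + 0.5f) / rateWidth - 0.5f;
    const float oy = (py + 0.5f) / rateHeight - 0.5f;
    // Pixels on the side facing the quad neighbor are interpolated towards it,
    // pixels on the opposite side are extrapolated away from it.
    return Color{ blockColor.r + ddxColor.r * ox + ddyColor.r * oy,
                  blockColor.g + ddxColor.g * ox + ddyColor.g * oy,
                  blockColor.b + ddxColor.b * ox + ddyColor.b * oy };
}
```

In this linear form the average over all pixels of a block stays equal to the original block color, so the sketch redistributes color within the block rather than changing its overall brightness.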

In order to be able to output per-pixel colors while in VRS mode, which is not something that is supported by the current VRS API, we had to implement this Gradient Filter proof of concept on the driver level and are working to expose it as an experimental Intel-specific extension. If you are interested in trying it out, please contact us directly for more detail.

Tested with Visual Studio 2019, DirectX 12 GPU with VRS support and Windows version 1909 (master/VisualStudio/VRS-DOF.sln).

Sample created by Filip Strugar, feel free to send any feedback directly at filip.strugar@intel.com. Many thanks to Trapper McFerron for implementing the DoF effect.

Many thanks to Amazon and Nvidia for providing the Amazon Lumberyard Bistro dataset through the Open Research Content Archive (ORCA): https://developer.nvidia.com/orca/amazon-lumberyard-bistro

Many thanks to the developers of the following open-source libraries or projects that made this sample possible:

- dear imgui (https://github.com/ocornut/imgui)

- assimp (https://github.com/assimp/assimp)

- DirectXTex (https://github.com/Microsoft/DirectXTex)

- DirectX Shader Compiler (https://github.com/microsoft/DirectXShaderCompiler)

- Filament (https://github.com/google/filament)

- Game Task Scheduler (https://github.com/GameTechDev/GTS-GamesTaskScheduler)

- Cpp-Taskflow (https://github.com/cpp-taskflow/cpp-taskflow)

- tinyxml2 (https://github.com/leethomason/tinyxml2)

- zlib (https://zlib.net/)

...and any I might have forgotten :)

Sample provided under MIT license, please see LICENSE