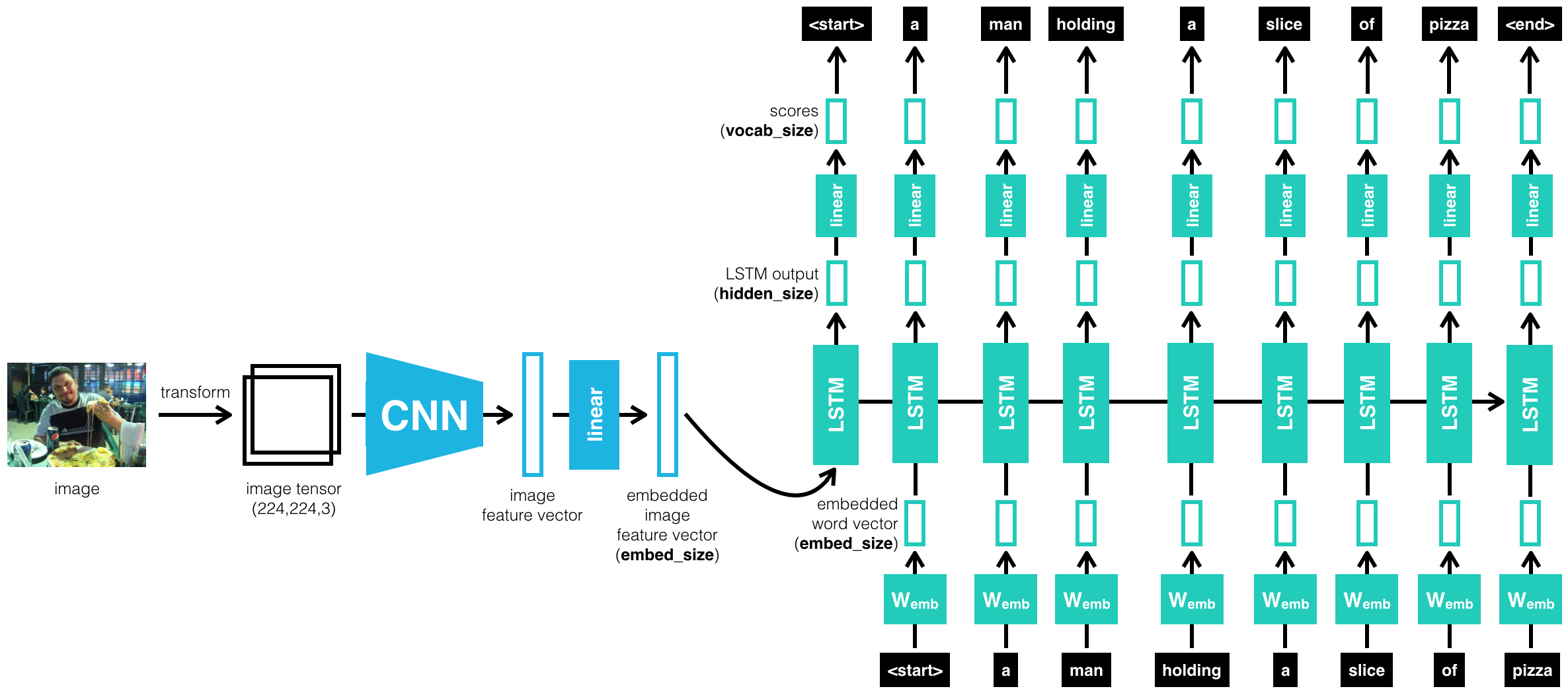

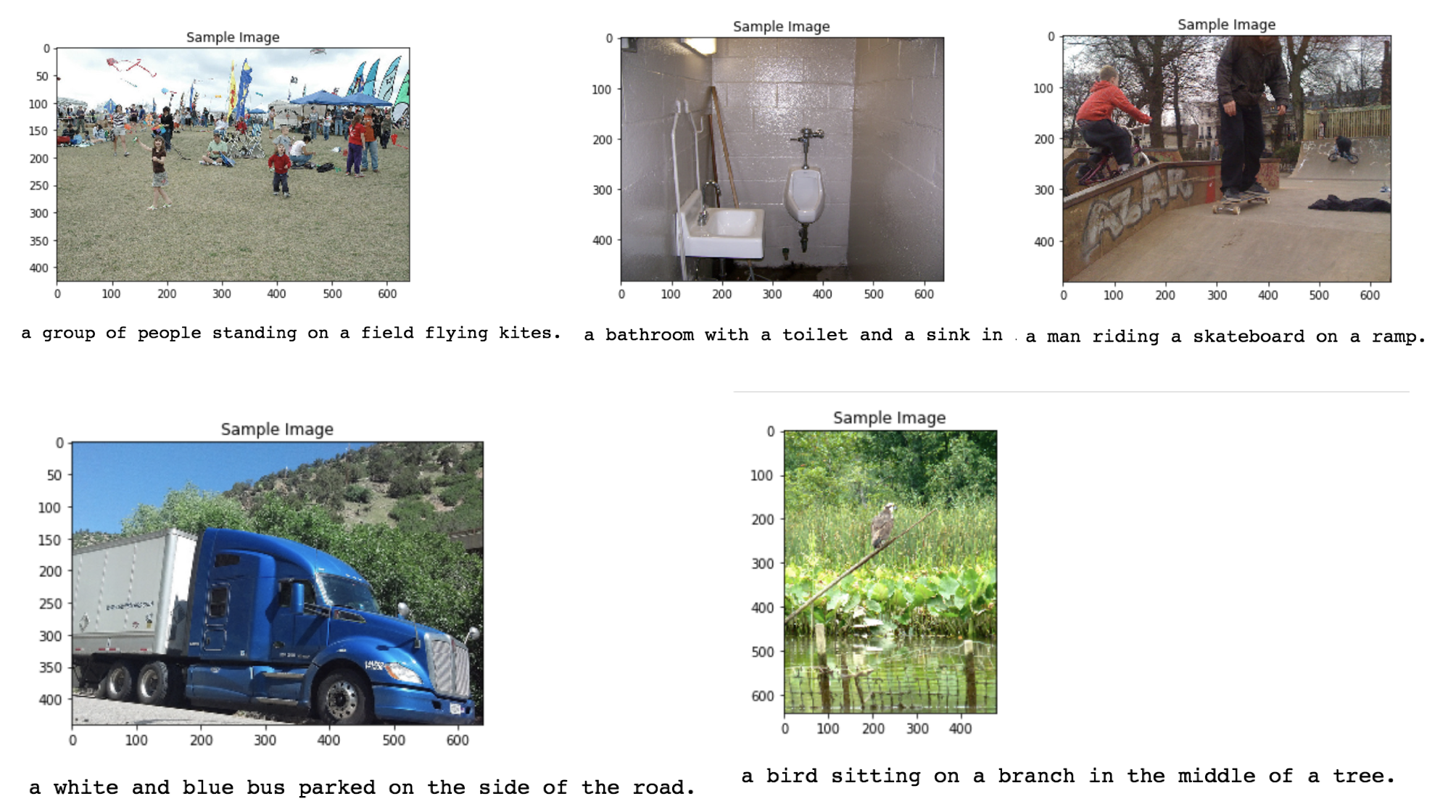

In this project, I'll create a neural network architecture consisting of both CNNs and LSTMs to automatically generate captions from images.

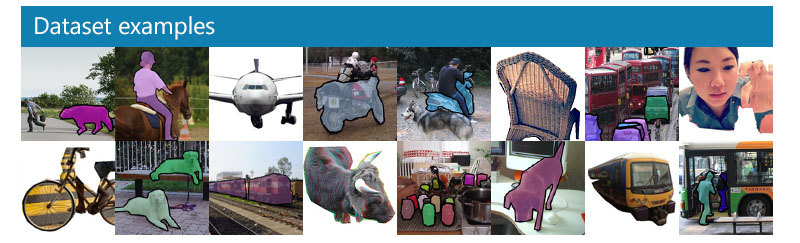

The Microsoft Common Objects in COntext (MS COCO) dataset is a large-scale dataset for scene understanding. The dataset is commonly used to train and benchmark object detection, segmentation, and captioning algorithms.

You can read more about the dataset on the website or in the research paper.

To obtain and explore the dataset, you can either use the COCO API or run the Dataset notebook.
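If you only want a quick look at the captions without installing the COCO API, the annotation file can also be read with the standard library. The sketch below assumes the usual COCO captions JSON layout (an `images` list with `id` and `file_name`, and an `annotations` list with `image_id` and `caption`). The function name `load_captions` is my own and is not part of the COCO API or this repository.

```python
import json
from collections import defaultdict


def load_captions(annotation_path):
    """Group COCO captions by image file name.

    Assumes the standard COCO captions JSON layout; this helper is
    illustrative and not part of the COCO API.
    """
    with open(annotation_path, encoding="utf-8") as f:
        data = json.load(f)

    # Map each image id to its file name.
    file_names = {img["id"]: img["file_name"] for img in data["images"]}

    # Collect every caption under the file name of the image it describes.
    captions = defaultdict(list)
    for ann in data["annotations"]:
        captions[file_names[ann["image_id"]]].append(ann["caption"].strip())
    return dict(captions)
```

Calling `load_captions` on a captions annotation file returns a dictionary that maps each image file name to its list of reference captions.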

The model follows an encoder-decoder architecture: the encoder is a ResNet CNN pretrained on ImageNet, and the decoder is a basic one-layer LSTM.

You can use my pre-trained model for your own experimentation. To use it, download the model archive, unzip it, and place the contained pickle models under the `models` subdirectory.

Feel free to experiment with alternative architectures, such as a bidirectional LSTM with an attention mechanism.


Before you can experiment with the code, make sure you have all the libraries and dependencies this project requires. You will mainly need Python 3, PyTorch with torchvision, OpenCV, and Matplotlib. You can install most of the dependencies with `pip3 install -r requirements.txt`.
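After installing, a quick check like the one below can confirm that the main libraries are importable. This is only a convenience sketch and the helper name `missing_modules` is hypothetical. Note that OpenCV is imported as `cv2`.

```python
import importlib.util


def missing_modules(module_names):
    """Return the top-level module names that cannot be found in this environment."""
    return [name for name in module_names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Import names of the main dependencies listed above.
    required = ["torch", "torchvision", "cv2", "matplotlib"]
    missing = missing_modules(required)
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All main dependencies are importable.")
```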