Group Members: Bojun Liu, Xinmin Feng, Bowei Kang, Ganlin Yang, Yuqi Li, Yiming Wang

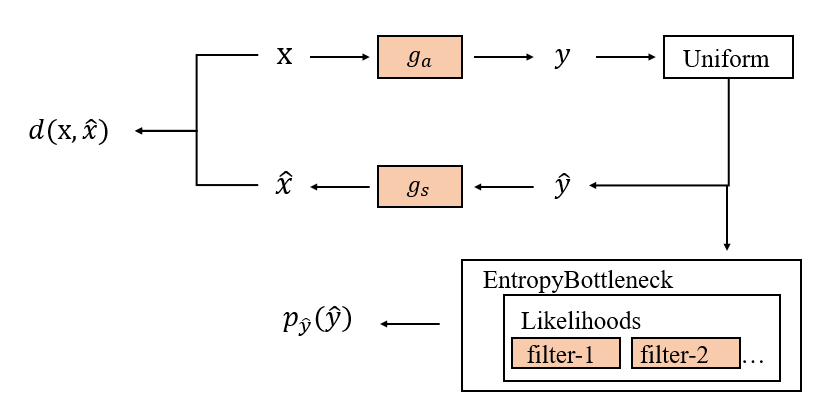

This subsection reproduces "End-to-End Optimized Image Compression". That work proposes an end-to-end image compression framework made of three parts: an analysis transform, a uniform quantizer and a synthesis transform. Non-differentiable steps such as quantization and discrete entropy coding are relaxed into differentiable ones through proxy functions. The paper also shows that, under certain conditions, the relaxed loss function has the same form as the likelihood of a variational autoencoder.
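Here is a minimal sketch of the quantization relaxation. It assumes the additive-uniform-noise proxy from the paper. During training, rounding is replaced by adding noise drawn from U(-1/2, 1/2), so gradients can flow. At test time the latents are actually rounded. The function name `quantize` is ours, and plain Python lists stand in for tensors.

```python
import random


def quantize(y, training, rng=None):
    """Quantize a list of latent values (sketch; lists stand in for tensors).

    training=True: add uniform noise U(-0.5, 0.5), a differentiable proxy for rounding.
    training=False: round to the nearest integer, as done at test time.
    """
    if training:
        rng = rng or random.Random()
        return [v + rng.uniform(-0.5, 0.5) for v in y]
    return [float(round(v)) for v in y]


print(quantize([0.2, 1.7, -2.4], training=False))  # [0.0, 2.0, -2.0]
```

Rounding has zero gradient almost everywhere. The noisy version matches the rounded one in expected error magnitude while staying differentiable with respect to `y`.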

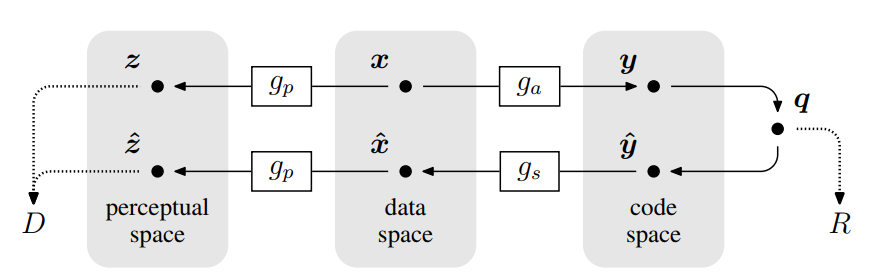

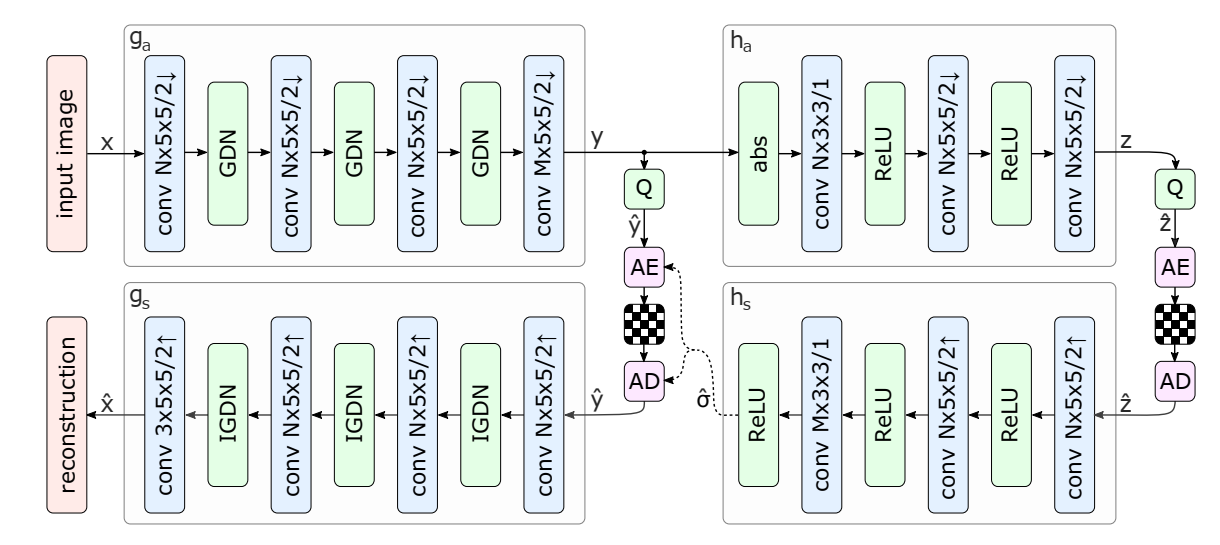

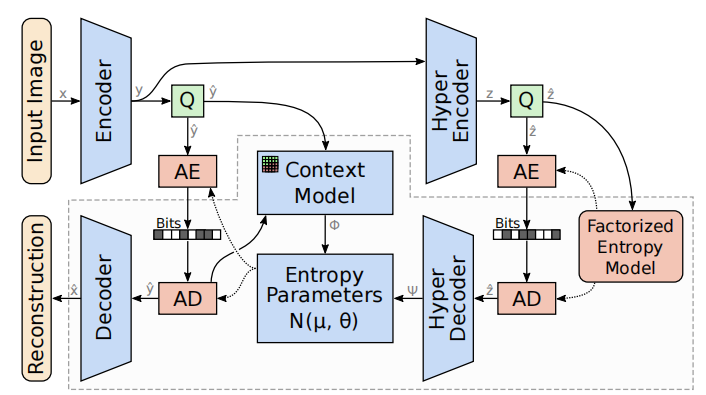

The data flow of the end-to-end image compression framework is shown below:

where $x$ and $\hat{x}$ are the original image and the reconstructed image, respectively, and

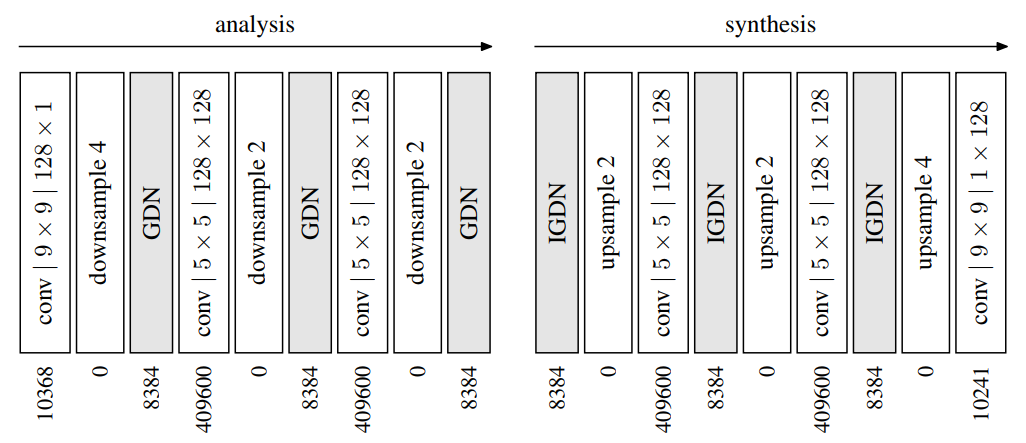

The figure above shows the transformation steps of $G_A$ and

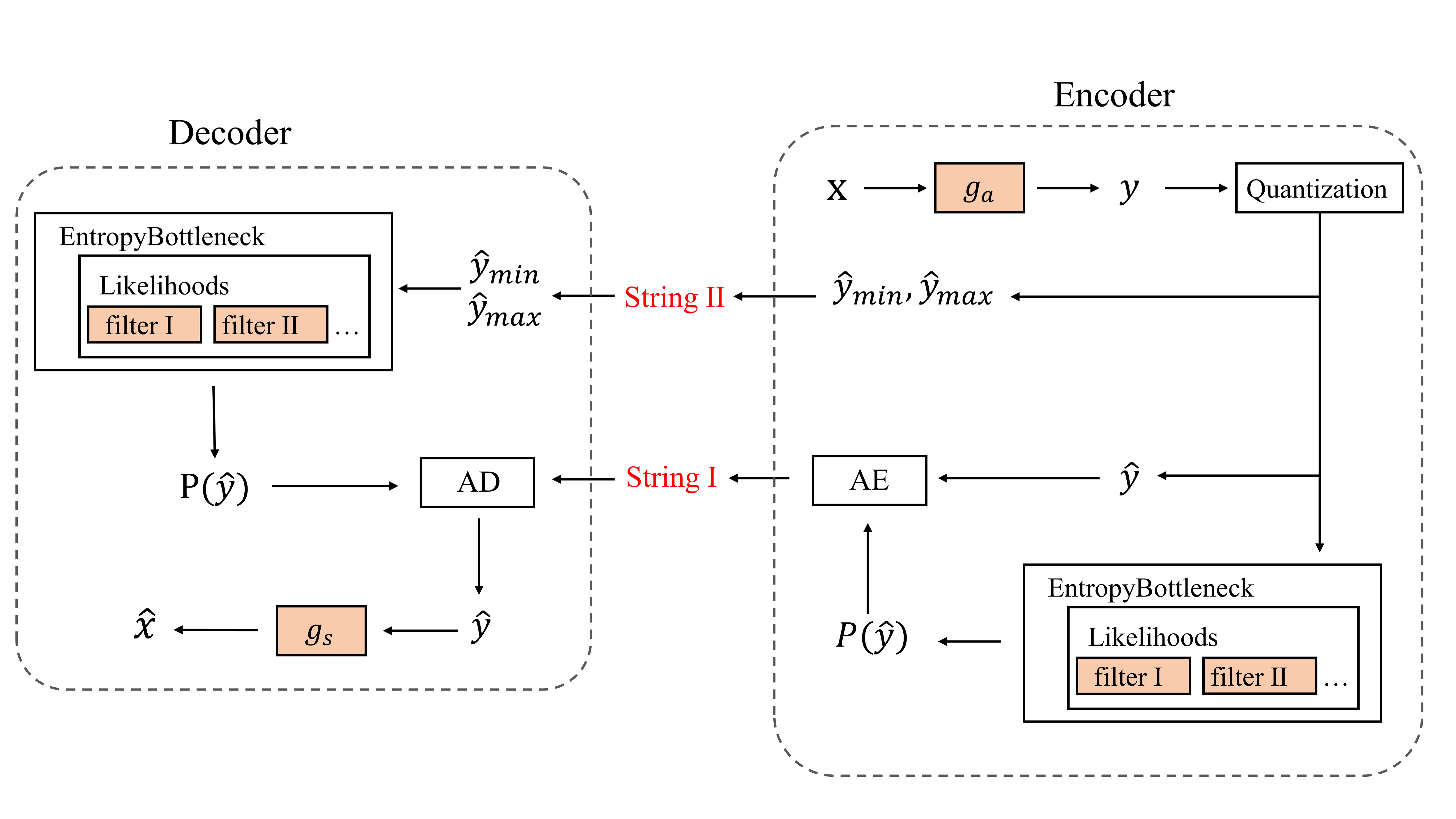

In our reproduction, we removed the perceptual transform, drawing on progress in subsequent work and on the requirements of the current task. Our training pipeline largely follows CompressAI. Our test code goes further, simplifying and improving on CompressAI, especially in the arithmetic entropy coder and the bitstream design.

The arithmetic entropy codec is torchac, an open-source lossless arithmetic coder from ETH Zurich. It takes two inputs: the CDF of the symbols to be encoded and the symbols themselves. Accordingly, the bitstream has two parts. The first is the output of the arithmetic coder, and the second holds the minimum and maximum values of the symbols to be encoded. This layout is more concise than the CompressAI implementation.
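The sketch below shows the two-part bitstream under stated assumptions. The coded payload (for example, the bytes returned by torchac) is treated as opaque. The symbol range is appended as two little-endian int16 values. Symbols are shifted by the minimum so they start at 0, since torchac, as far as we know, expects non-negative symbol indices. The header format and all function names here are hypothetical.

```python
import struct

HEADER = struct.Struct("<hh")  # assumed layout: (sym_min, sym_max) as int16, little-endian


def pack_stream(payload: bytes, sym_min: int, sym_max: int) -> bytes:
    """Part 1: arithmetic-coded payload; part 2: the symbol range (fixed size, at the end)."""
    return payload + HEADER.pack(sym_min, sym_max)


def unpack_stream(stream: bytes):
    """Split the stream back into payload and symbol range; the header size is fixed."""
    payload, header = stream[:-HEADER.size], stream[-HEADER.size:]
    sym_min, sym_max = HEADER.unpack(header)
    return payload, sym_min, sym_max


def shift_symbols(symbols, sym_min):
    # map symbols from [sym_min, sym_max] to [0, sym_max - sym_min]
    return [s - sym_min for s in symbols]


def num_cdf_entries(sym_min, sym_max):
    # a CDF over L symbols has L + 1 entries
    return sym_max - sym_min + 2


stream = pack_stream(b"\x01\x02\x03", -7, 12)
print(unpack_stream(stream))  # (b'\x01\x02\x03', -7, 12)
```

Because the header has a fixed size and sits at the end, the payload length does not need to be stored separately.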

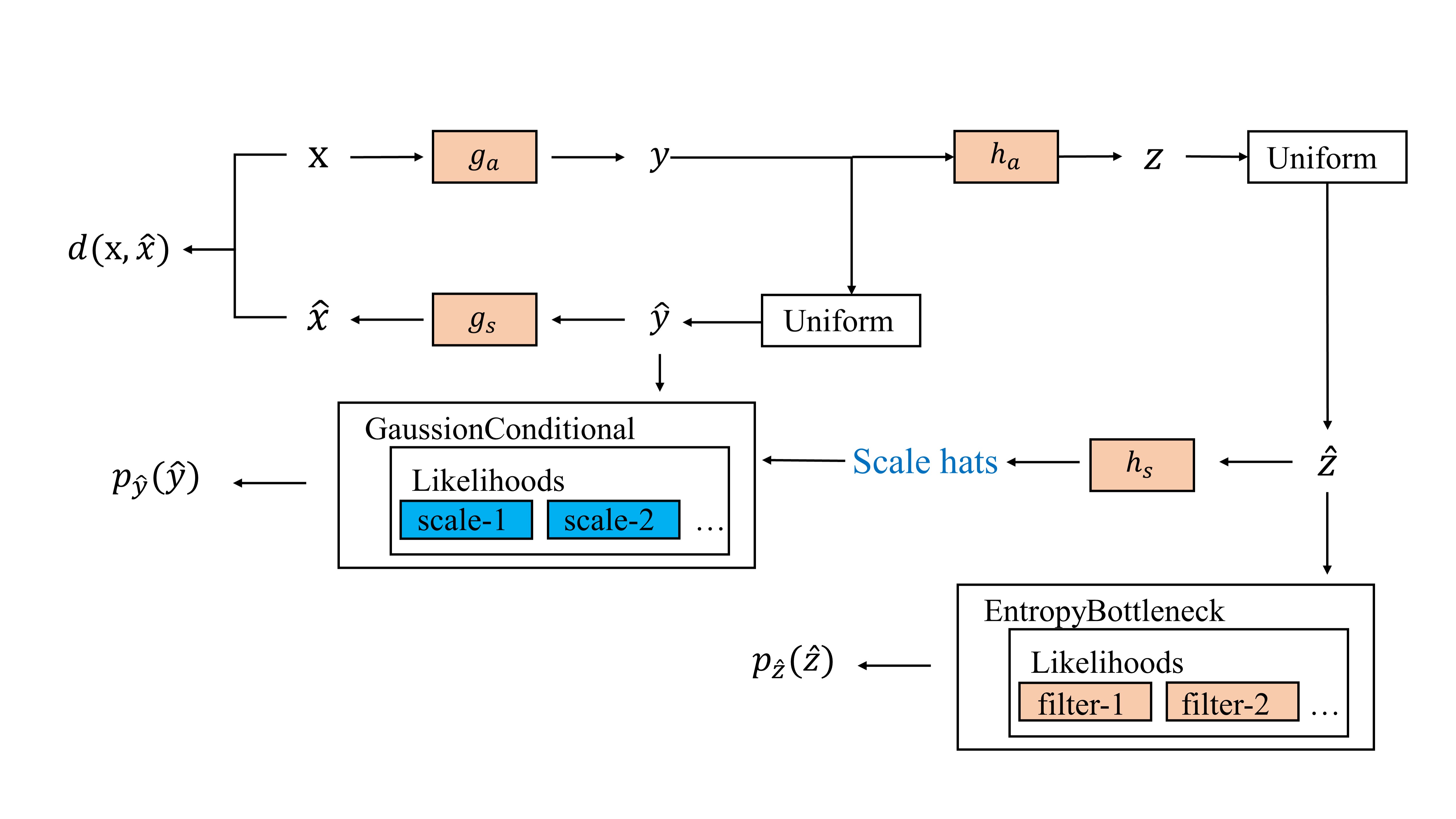

The training and testing flow chart of the Factorized Prior model designed in this project is shown below:

In this work, we use a multivariate nonparametric probability model for the latent variables. Here we illustrate its basic principle in the simple univariate case.

We first define the probability density $P$ and the cumulative distribution function

Each $F_k$ is designed as follows

where $g_k$ takes the following form

Where

The overall operation is as follows

Here, to constrain the Jacobian matrix to be nonnegative and

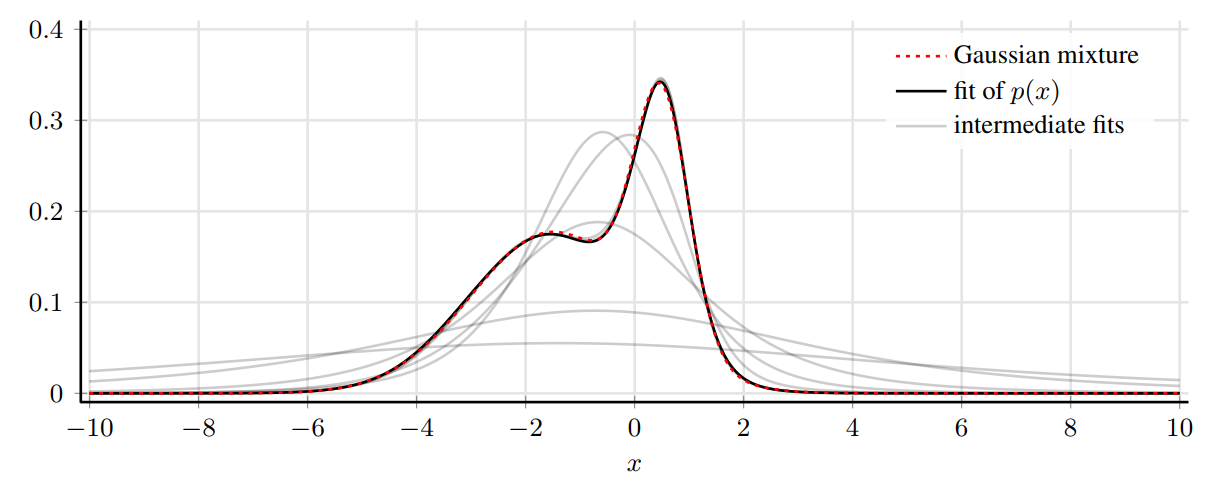

The following figure shows the three-layer nonparametric probability density model $P$ used in this work fitted to a mixture of Gaussians; the fit is very close. The gray lines show how the fit converges during training.

In this work we fix $K=4$. This performs as well as the piecewise linear density model while being easier to implement with automatic differentiation.
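Below is a sketch of the univariate density model for one scalar channel. It assumes the construction given in the appendix of "Variational Image Compression with a Scale Hyperprior":

- The CDF is the composition $c = F_K \circ \cdots \circ F_1$.
- For $k < K$, $F_k(x) = g_k(H^{(k)}x + b^{(k)})$, and the last layer applies a sigmoid instead of $g_K$.
- The nonlinearity is $g_k(x) = x + a^{(k)} \odot \tanh(x)$.
- The reparameterizations $H^{(k)} = \mathrm{softplus}(\hat{H}^{(k)})$ and $a^{(k)} = \tanh(\hat{a}^{(k)})$ keep the Jacobian nonnegative and $a^{(k)} > -1$, so $c$ is monotone.

Parameter names, the random initialization and the pure-Python evaluation are our own choices. Training the parameters by gradient descent is not shown.

```python
import math
import random


def softplus(x):
    # numerically stable log(1 + exp(x)); always > 0
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))


def sigmoid(z):
    # numerically stable logistic function
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


class UnivariateDensity:
    """Monotone CDF c = F_K o ... o F_1 for one scalar channel (sketch)."""

    def __init__(self, hidden=(3, 3, 3), seed=0):
        rng = random.Random(seed)
        dims = (1,) + tuple(hidden) + (1,)
        self.K = len(dims) - 1  # hidden=(3, 3, 3) gives K = 4 layers
        self.H_raw, self.b, self.a_raw = [], [], []
        for k in range(self.K):
            d_in, d_out = dims[k], dims[k + 1]
            self.H_raw.append([[rng.gauss(0.0, 0.1) for _ in range(d_in)] for _ in range(d_out)])
            self.b.append([rng.gauss(0.0, 0.1) for _ in range(d_out)])
            if k < self.K - 1:  # the last layer uses a sigmoid instead of g_k
                self.a_raw.append([rng.gauss(0.0, 0.1) for _ in range(d_out)])

    def cdf(self, x):
        h = [x]
        for k in range(self.K):
            # H^(k) = softplus(raw) keeps every weight positive, so each layer is increasing
            H = [[softplus(w) for w in row] for row in self.H_raw[k]]
            h = [sum(w * v for w, v in zip(row, h)) + bias for row, bias in zip(H, self.b[k])]
            if k < self.K - 1:
                # g_k(x) = x + a * tanh(x) with a = tanh(raw) in (-1, 1) stays increasing
                a = [math.tanh(r) for r in self.a_raw[k]]
                h = [v + ak * math.tanh(v) for v, ak in zip(h, a)]
        return sigmoid(h[0])

    def likelihood(self, y_hat):
        # probability of an integer symbol: c(y + 1/2) - c(y - 1/2)
        return self.cdf(y_hat + 0.5) - self.cdf(y_hat - 0.5)
```

The density $P$ itself is the derivative of `cdf`. For entropy coding, only the discretized `likelihood` is needed.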

This subsection reproduces "Variational Image Compression with a Scale Hyperprior". That work builds on the Factorized Prior model and introduces a hyperprior to capture side information about the image. With the hyperprior, the latent variable $y$ is modeled as a Gaussian distribution with zero mean and variance

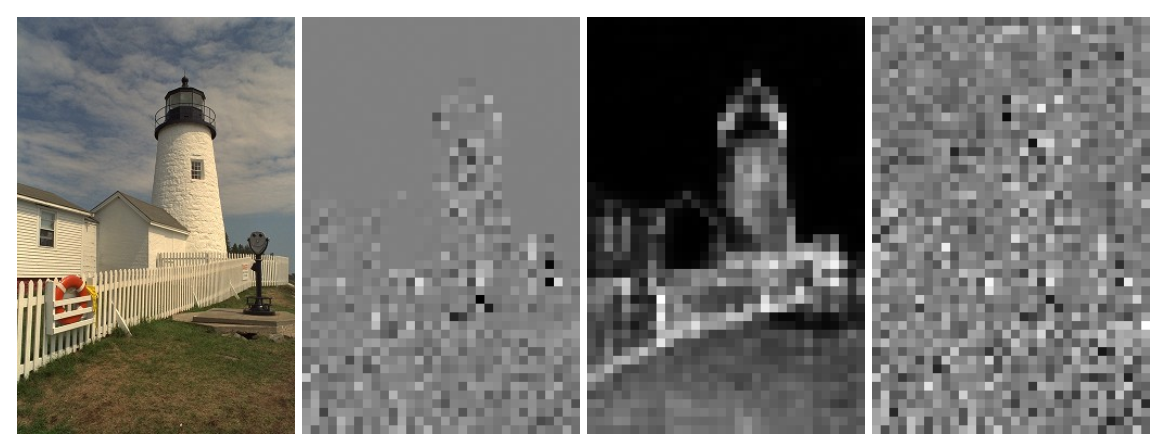

The authors first ran a preliminary experiment, visualizing the latent variable of the Factorized Prior model

It can be seen that 1) the Factorized Prior model does not fully capture some texture and edge information, so the spatial dependencies in the quantized latents cannot be completely removed; and 2) after the spatial dependencies are removed, $\hat{y}$ approximately follows

Based on this observation, the authors proposed an end-to-end image compression framework with a hyperprior.
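With the hyperprior, each element of $\hat{y}$ is coded with a Gaussian whose scale $\sigma$ is predicted from $\hat{z}$. Here is a minimal sketch of the resulting discrete likelihood. It assumes the Gaussian is convolved with a unit-width uniform, so that $P(\hat{y}) = \Phi((\hat{y}-\mu+\tfrac12)/\sigma) - \Phi((\hat{y}-\mu-\tfrac12)/\sigma)$, with $\mu = 0$ here. The lower bound of 0.11 on $\sigma$ and the 1e-9 floor on the likelihood are assumptions chosen to resemble CompressAI's defaults. The function names are ours.

```python
import math


def std_normal_cdf(x):
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def gaussian_likelihood(y_hat, scale, mean=0.0, scale_bound=0.11):
    """P(y_hat) for N(mean, scale^2) convolved with U(-0.5, 0.5)."""
    s = max(scale, scale_bound)  # avoid degenerate, near-zero scales
    v = abs(y_hat - mean)  # by symmetry, evaluate in the lower tail for better precision
    upper = std_normal_cdf((0.5 - v) / s)
    lower = std_normal_cdf((-0.5 - v) / s)
    return upper - lower


def bits(y_hat, scale, mean=0.0, likelihood_bound=1e-9):
    # ideal code length in bits; the floor keeps log2 finite for extreme outliers
    return -math.log2(max(gaussian_likelihood(y_hat, scale, mean), likelihood_bound))
```

A small predicted scale makes the zero symbol nearly free to code. A large scale spreads the probability mass and costs more bits, which is how the hyperprior adapts the rate to local image content.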

The training objective, formulated as a variational model on the latent representations, is:

$$
\begin{aligned}
\mathbb{E}_{\boldsymbol{x} \sim p_{\boldsymbol{x}}} D_{\mathrm{KL}}\left[q \,\|\, p_{\tilde{\boldsymbol{y}}, \tilde{\boldsymbol{z}} \mid \boldsymbol{x}}\right]
= \mathbb{E}_{\boldsymbol{x} \sim p_{\boldsymbol{x}}} \mathbb{E}_{\tilde{\boldsymbol{y}}, \tilde{\boldsymbol{z}} \sim q}\Big[ & \log q(\tilde{\boldsymbol{y}}, \tilde{\boldsymbol{z}} \mid \boldsymbol{x}) - \log p_{\boldsymbol{x} \mid \tilde{\boldsymbol{y}}}(\boldsymbol{x} \mid \tilde{\boldsymbol{y}}) \\
& - \log p_{\tilde{\boldsymbol{y}} \mid \tilde{\boldsymbol{z}}}(\tilde{\boldsymbol{y}} \mid \tilde{\boldsymbol{z}}) - \log p_{\tilde{\boldsymbol{z}}}(\tilde{\boldsymbol{z}}) \Big] + \text{const.}
\end{aligned}
$$

Note that the number of channels must be set manually according to the complexity of the task. The paper recommends $N=128$, $M=192$ for the five lower $\lambda$ values and $N=192$, $M=320$ for the three higher $\lambda$ values.

As with the Factorized Prior model, we use torchac as the entropy coder. In the same way, we write both the arithmetic-coded bits and the symbol range (minimum and maximum values) into the bitstream for each latent variable.
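torchac takes a tabulated CDF per element rather than a parametric distribution, so each predicted Gaussian has to be tabulated over the stored symbol range. Here is a sketch under stated assumptions:

- Symbols span [sym_min, sym_max].
- Tail mass outside that range is folded into the two extreme symbols by pinning the CDF endpoints to 0 and 1. This is our choice and not necessarily what the project does.
- The table has one more entry than there are symbols, which is the float-CDF layout torchac expects, as far as we know.

```python
import math


def gaussian_cdf_table(mean, scale, sym_min, sym_max):
    """Tabulate a discretized Gaussian as a CDF over integer symbols sym_min..sym_max.

    Entry k is P(symbol < sym_min + k) = Phi((sym_min + k - 0.5 - mean) / scale);
    the endpoints are pinned to 0 and 1 so the tail mass goes to the extreme symbols.
    """
    n_entries = sym_max - sym_min + 2
    table = []
    for k in range(n_entries):
        edge = sym_min + k - 0.5
        table.append(0.5 * (1.0 + math.erf((edge - mean) / (scale * math.sqrt(2.0)))))
    table[0], table[-1] = 0.0, 1.0
    return table
```

In a real encoder, one such row would be built per element of $\hat{y}$, using that element's predicted scale, and the rows stacked before being passed to the coder.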

The training framework designed in this project is shown in the figure below

The loss function is designed as

$$Loss=-\sum_i \log_2 p_{\hat{y}_i}(\hat{y}_i)-\sum_j \log_2 p_{\hat{z}_j}(\hat{z}_j)+\lambda\, d(x,\hat{x})$$
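Here is a minimal sketch of this loss on plain Python lists. It assumes the rate is measured in bits per pixel (the summed $-\log_2$ likelihoods divided by the number of pixels) and that $d$ is the mean squared error. The `distortion_scale` parameter is an assumption. As far as we know, CompressAI's reference rate-distortion loss multiplies the MSE of [0, 1]-valued images by 255². Setting it to 1 uses the formula above literally.

```python
import math


def rate_bits(likelihoods):
    # R = -sum(log2 p): the ideal code length in bits
    return -sum(math.log2(p) for p in likelihoods)


def rd_loss(y_likelihoods, z_likelihoods, x, x_hat, lmbda, num_pixels, distortion_scale=1.0):
    """Loss = bpp(y) + bpp(z) + lmbda * distortion_scale * MSE(x, x_hat)."""
    bpp = (rate_bits(y_likelihoods) + rate_bits(z_likelihoods)) / num_pixels
    mse = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
    return bpp + lmbda * distortion_scale * mse, bpp, mse


loss, bpp, mse = rd_loss([0.5] * 8, [0.25] * 2, [0.1, 0.2], [0.1, 0.2], 0.01, 4)
print(bpp)  # 3.0: (8 * 1 bit + 2 * 2 bits) / 4 pixels
```

Larger $\lambda$ values weight distortion more heavily, which produces the higher-rate models listed in the results tables.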

- Features

Building on the Hyperprior model, this model introduces a context module and an entropy module. The hyperprior network produces the parameter $\psi$, and the context module produces the parameter $\Phi$. The two are concatenated and fed into the entropy module, which outputs the probability parameters of each element of $\hat{y}$. Specifically, when the elements of $\hat{y}$ are modeled as mutually independent Gaussian distributions, these parameters are the mean and the scale.

The context module makes the elements depend on one another during decoding: an element can only be decoded after all elements before it have been decoded. Decoding is therefore strictly serial, which greatly increases decoding time.

The context module also improves compression performance by further reducing spatial redundancy in $\hat{y}$. As reported in the original paper, the resulting model surpasses BPG in both PSNR and MS-SSIM.

- compress & decompress

We use torchac to encode and decode $\hat{y}$. For encoding we implemented two methods, parallel and serial compression; serial compression offered no advantage, so we abandoned it. Decoding supports only serial decompression. To encode, we permute the $\hat{y}$ tensor and then encode it with torchac. To decode, we recover $\hat{y}$ step by step using an iteratively updated cdf_list.

According to the CompressAI documentation, the model should run on the CPU during testing. In our tests, however, CPU decoding was unstable and occasionally produced garbled output. Decoding on the GPU was stable once torch.use_deterministic_algorithms(True) was set. With this setting, the current training and test results are normal.

For training, we used the Vimeo-90K dataset, randomly cropping images to 256×256. Models were trained with a batch size of 64 using the Adam optimizer. The loss function is set as:

Because we had little experience with training deep learning models, we tried several ways of adjusting the learning rate and the number of epochs. For the Hyperprior models, we used lr_scheduler.MultiStepLR from the torch.optim package with milestones=[40, 90, 140] (in epochs). Under this schedule, the learning rate starts at 1e-4 and is halved at each milestone. For the Factorized models, we used lr_scheduler.ReduceLROnPlateau, which halves the learning rate whenever the loss stops decreasing. Both the Hyperprior and the Factorized models were trained for 200 epochs.
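The two schedules can be sketched in plain Python to make the learning-rate trajectories concrete. In PyTorch they correspond to torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[40, 90, 140], gamma=0.5) and torch.optim.lr_scheduler.ReduceLROnPlateau(optimizer, mode="min", factor=0.5). The plateau version here is simplified: it ignores PyTorch's relative improvement threshold and cooldown. Its default patience of 10 epochs is PyTorch's default, not a value stated in this report.

```python
from bisect import bisect_right


def multistep_lr(epoch, base_lr=1e-4, milestones=(40, 90, 140), gamma=0.5):
    """Closed form of a MultiStepLR schedule: multiply by gamma at each milestone reached."""
    return base_lr * gamma ** bisect_right(milestones, epoch)


class PlateauHalver:
    """Simplified ReduceLROnPlateau: halve the LR once the loss has not improved
    for more than `patience` consecutive epochs (no threshold, no cooldown)."""

    def __init__(self, lr=1e-4, factor=0.5, patience=10):
        self.lr, self.factor, self.patience = lr, factor, patience
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, loss):
        if loss < self.best:
            self.best, self.bad_epochs = loss, 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs > self.patience:
                self.lr *= self.factor
                self.bad_epochs = 0
        return self.lr


print([multistep_lr(e) for e in (0, 40, 90, 140)])
```

MultiStepLR fixes the decay points in advance. The plateau rule reacts to the observed loss, so its decay points depend on how training actually progresses.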

Having found lr_scheduler.ReduceLROnPlateau to be more effective, we used it to adjust the learning rate for both the JointAutoregressive and the CheckerboardAutogressive models. These models were trained for 250 epochs.

The command for training is as below:

python train.py

--dataset # path to training dataset

--test_dataset # path to testing dataset

--lmbda # weight for distortion loss

--lr # initial learning rate

--batch_size # training batch size

--test_batch_size # testing batch size

--epoch # training epoches

--model_name # arguments to distinguish different mode, selected from['FactorizedPrior','Hyperprior', 'JointAutoregressiveHierarchicalPriors', 'CheckerboardAutogressive']

--exp_version # experiment version ID, assign a different value each training time to aviod overwrite

--gpu_id # pass '0 1 2' for 3 gpus as example, pass '0' for single gpu

--code_rate # choose from 'low' or 'high'To test Factorized models:

python test.py --model_name Factorized --epoch_num 199To test Hyperprior models:

python test.py --model_name Hyperprior --epoch_num 199To test JointAutoregressive models:

python test.py --model_name JointAutoregressiveHierarchicalPriors --epoch_num 249To test CheckerboardAutogressive models:

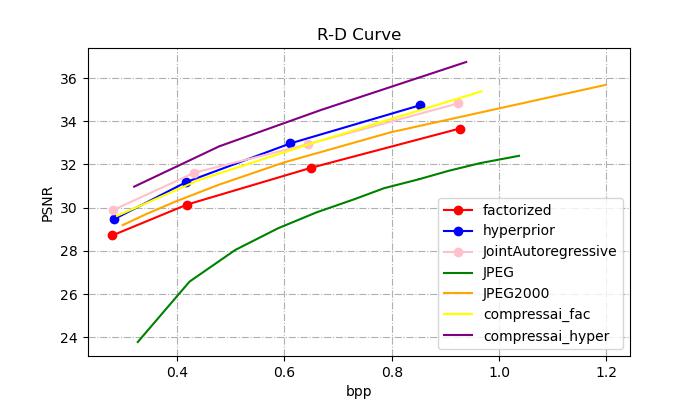

python test.py --model_name CheckerboardAutogressive --epoch_num 249The R-D Curve is plotted below:

All of our models achieve better rate-distortion performance than JPEG, and our Hyperprior model also outperforms JPEG2000. However, our JointAutoregressive model does not reach the expected performance, possibly because of the training method or other factors. Compared with the official CompressAI results, our models are 1 dB lower in PSNR at the same bit rate.

Specifically, the Factorized models' detailed results are recorded as:

| λ | num_pixels | bits | bpp | PSNR (dB) | time (enc) | time (dec) |
|---|---|---|---|---|---|---|
| 0.0067 | 393216 | 109462 | 0.278833 | 28.71093 | 0.305292 | 0.32475 |
| 0.013 | 393216 | 164219 | 0.418083 | 30.14009 | 0.377417 | 0.386792 |
| 0.025 | 393216 | 255458 | 0.650125 | 31.84871 | 0.383417 | 0.357583 |
| 0.0483 | 393216 | 364205.3 | 0.92675 | 33.6627 | 0.230708 | 0.234292 |

The Hyperprior models' detailed results are recorded as:

| λ | num_pixels | bits | bpp | PSNR (dB) | time (enc) | time (dec) |
|---|---|---|---|---|---|---|
| 0.0067 | 393216 | 111120.7 | 0.282583 | 29.46871 | 1.035042 | 0.832708 |
| 0.013 | 393216 | 163870 | 0.416667 | 31.18252 | 1.100542 | 0.912625 |
| 0.025 | 393216 | 240186.3 | 0.610958 | 32.97386 | 1.117917 | 0.772125 |
| 0.0483 | 393216 | 335234.7 | 0.852583 | 34.73847 | 1.202125 | 1.087917 |

The JointAutoregressive models' detailed results are recorded as:

| λ | num_pixels | bits | bpp | PSNR (dB) | time (enc) | time (dec) |
|---|---|---|---|---|---|---|
| 0.0067 | 393216 | 109899.7 | 0.279542 | 29.87457 | 1.137833 | 753.0708 |
| 0.013 | 393216 | 169756 | 0.431875 | 31.61642 | 0.619042 | 727.0031 |
| 0.025 | 393216 | 252778.3 | 0.643 | 32.9335 | 1.272083 | 1245.762 |
| 0.0483 | 393216 | 363390 | 0.924333 | 34.83142 | 1.421083 | 1603.472 |
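The bpp and PSNR columns in the tables above presumably follow the standard definitions sketched below: bits divided by the pixel count, and PSNR = 10·log10(MAX²/MSE) for 8-bit images. Kodak images are 768×512, which gives the num_pixels value of 393216 in the tables. The function names are ours.

```python
import math


def bits_per_pixel(total_bits, height, width):
    return total_bits / (height * width)


def psnr(x, x_hat, max_val=255.0):
    """PSNR in dB between two equally sized lists of pixel values."""
    mse = sum((a - b) ** 2 for a, b in zip(x, x_hat)) / len(x)
    return float("inf") if mse == 0 else 10.0 * math.log10(max_val ** 2 / mse)


print(bits_per_pixel(393216, 512, 768))  # 1.0
```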

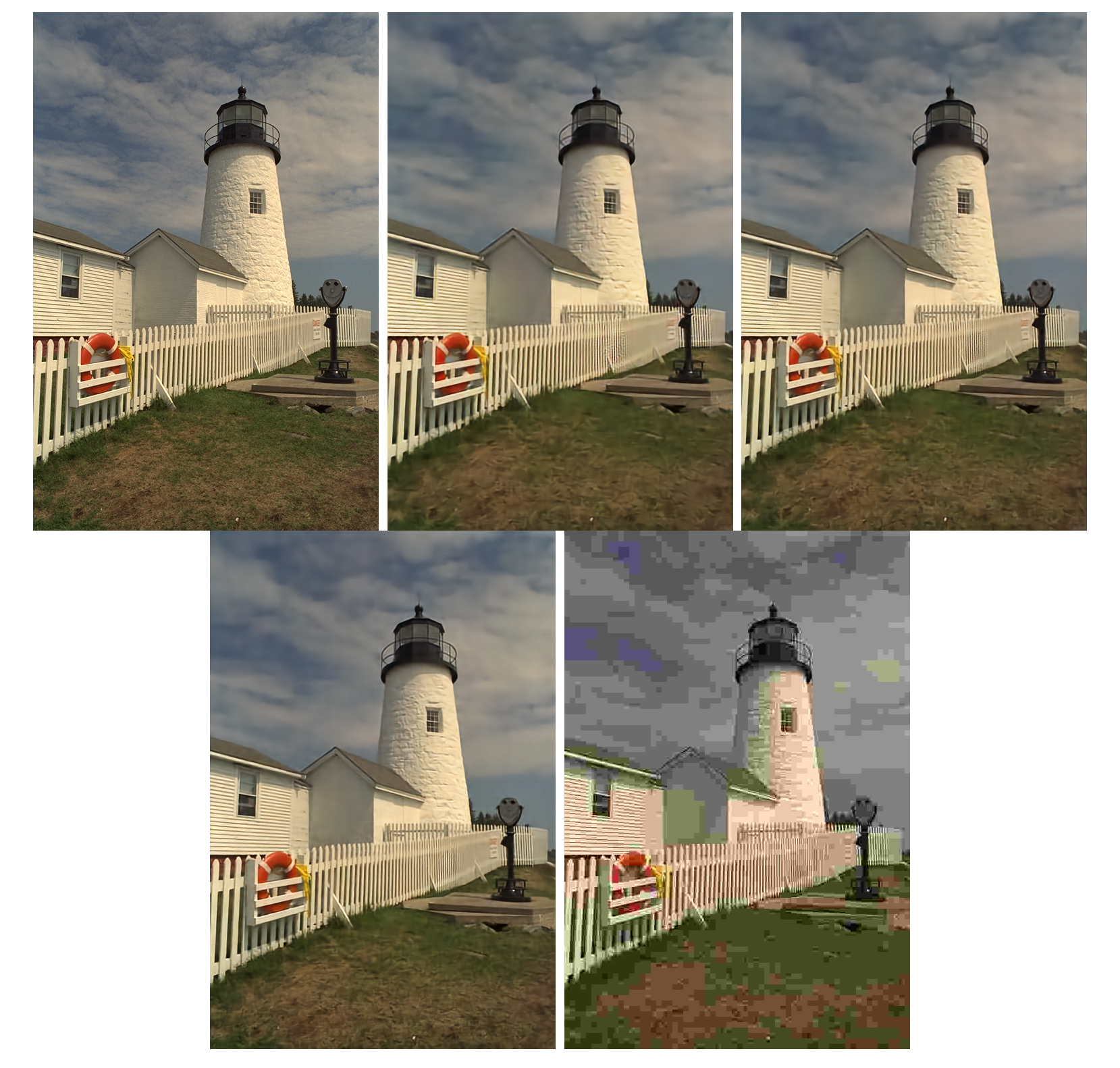

As an example, we show the kodak-19 image compressed with our methods optimized for a low value of λ (and thus a low bit rate), compared with a JPEG image compressed at a similar bit rate.

In the images above, the first row shows, from left to right, the original kodak-19 image and the images compressed with our Factorized and Hyperprior models. The second row shows, from left to right, the image compressed with our JointAutoregressive model and the JPEG-compressed image. The detailed bpp and PSNR values are as follows:

| Methods | bpp | PSNR (dB) |
|---|---|---|
| Factorized | 0.250 | 28.496 |
| Hyperprior | 0.255 | 29.357 |
| JointAutoregressive | 0.256 | 29.757 |
| JPEG | 0.221 | 23.890 |

At similar bit rates, our methods give higher visual quality than JPEG on the kodak-19 image. Some detailed regions of the original image, such as the textures of the sky and the fence, still show blur. The reconstruction from our Factorized model is noticeably blurry there, while the Hyperprior and JointAutoregressive models retain some texture, though slight blur remains. JPEG shows severe blocking artifacts at this bit rate.

We used the thop package to count model parameters (Params) and multiply-accumulate operations (MACs):

| Methods | Params | MACs |
|---|---|---|
| Factorized | 2.887M | 72.352G |
| Hyperprior | 4.969M | 74.014G |
| Joint Autoregressive Hierarchical Priors | 12.053M | 162.232G |
| Checkerboard Autoregressive | 12.053M | 163.792G |
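The figures in the table come from thop. As a rough illustration of what such counters tally, the sketch below counts parameters and MACs for a single convolution layer. This is not thop's actual code, and thop's handling of bias terms and other layers (such as GDN) may differ. The 3→128, 5×5, stride-2 layer in the example is hypothetical. MACs scale with input resolution, so the table's totals depend on the image size used for profiling.

```python
def conv2d_out(size, k, stride=1, padding=0):
    # standard output-size formula for a convolution (no dilation)
    return (size + 2 * padding - k) // stride + 1


def conv2d_cost(c_in, c_out, k, h_in, w_in, stride=1, padding=0, bias=True):
    """Parameter count and multiply-accumulate count of one Conv2d layer."""
    h_out = conv2d_out(h_in, k, stride, padding)
    w_out = conv2d_out(w_in, k, stride, padding)
    params = c_out * c_in * k * k + (c_out if bias else 0)
    # one MAC per kernel weight per output element
    macs = c_out * h_out * w_out * c_in * k * k
    return params, macs, (h_out, w_out)


print(conv2d_cost(3, 128, 5, 256, 256, stride=2, padding=2))  # (9728, 157286400, (128, 128))
```

The parameter count is independent of image size, but MACs grow with the number of output positions.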