RobustBench: a standardized adversarial robustness benchmark

Francesco Croce* (University of Tübingen), Maksym Andriushchenko* (EPFL), Vikash Sehwag* (Princeton University), Nicolas Flammarion (EPFL), Mung Chiang (Purdue University), Prateek Mittal (Princeton University), Matthias Hein (University of Tübingen)

Leaderboard: https://robustbench.github.io/

Paper: https://arxiv.org/abs/2010.09670

Main idea

The goal of RobustBench is to systematically track the real progress in adversarial robustness.

There are already more than 2,000 papers on this topic, but it is still unclear which approaches really work and which only lead to overestimated robustness.

We start by benchmarking Linf-robustness since it is the most studied setting in the literature.

We plan to extend the benchmark to other threat models in the future: first to other Lp-norms and then to more general perturbation sets (Wasserstein perturbations, common corruptions, etc.).
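To make the Linf threat model concrete, here is a minimal pure-Python sketch of what it means for a perturbed input to lie inside an Linf-ball. It is an illustration only and not part of RobustBench: the helper names (`linf_norm`, `l2_norm`, `project_linf`) and the toy four-pixel "image" are assumptions, and the radius 8/255 is the one used in the examples later in this README.

```python
import math

EPS_LINF = 8 / 255  # Linf radius used in the examples of this README


def linf_norm(delta):
    """Largest absolute change of any single coordinate."""
    return max(abs(d) for d in delta)


def l2_norm(delta):
    """Euclidean length of the perturbation."""
    return math.sqrt(sum(d * d for d in delta))


def project_linf(x_adv, x, eps=EPS_LINF):
    """Clip x_adv coordinate-wise into the Linf-ball around x, then into [0, 1]."""
    projected = [min(max(a, c - eps), c + eps) for a, c in zip(x_adv, x)]
    return [min(max(p, 0.0), 1.0) for p in projected]


x = [0.20, 0.50, 0.90, 1.00]      # clean toy "image" with pixel values in [0, 1]
x_adv = [0.30, 0.49, 0.80, 1.05]  # candidate perturbed point
delta = [a - c for a, c in zip(x_adv, x)]
print('inside the ball before projection:', linf_norm(delta) <= EPS_LINF)

x_proj = project_linf(x_adv, x)
delta_proj = [a - c for a, c in zip(x_proj, x)]
print('Linf norm after projection: {:.5f}'.format(linf_norm(delta_proj)))
print('L2 norm after projection:   {:.5f}'.format(l2_norm(delta_proj)))
```

Gradient-based attacks typically apply a projection of this kind after every step so that the final adversarial example respects the threat model.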

Robustness evaluation in general is not straightforward and requires adaptive attacks (Tramer et al., 2020). Thus, in order to establish a reliable standardized benchmark, we need to impose some restrictions on the defenses we consider. In particular, we accept only defenses that (1) have, in general, non-zero gradients with respect to the inputs, (2) have a fully deterministic forward pass (i.e., no randomness), and (3) do not have an optimization loop. Defenses that violate these 3 principles often only make gradient-based attacks harder without substantially improving robustness (Carlini et al., 2019), except those that can present concrete provable guarantees (e.g., Cohen et al., 2019).
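The first two restrictions can be probed with simple sanity checks. The sketch below is illustrative only and is not how RobustBench screens submissions: the helpers `is_deterministic` and `has_nonzero_input_gradient` and the three toy models are hypothetical, and the gradient is approximated by finite differences on plain Python lists rather than by backpropagation.

```python
import random


def is_deterministic(forward, x, n_trials=5):
    """Return True if repeated forward passes on the same input agree exactly."""
    reference = forward(x)
    return all(forward(x) == reference for _ in range(n_trials))


def has_nonzero_input_gradient(forward, x, h=1e-4, tol=1e-8):
    """Finite-difference probe: does any output react to a small input change?"""
    base = forward(x)
    for i in range(len(x)):
        shifted = list(x)
        shifted[i] += h
        out = forward(shifted)
        if any(abs(o - b) / h > tol for o, b in zip(out, base)):
            return True
    return False


# Hypothetical toy "models" that map a list of inputs to a list of two logits.
def smooth_model(x):
    return [sum(x), -sum(x)]


def quantized_model(x):
    # Rounding the input makes the output piecewise constant (zero gradient).
    q = [round(v, 1) for v in x]
    return [sum(q), -sum(q)]


def randomized_model(x):
    # Fresh Gaussian noise on every call makes the forward pass stochastic.
    noisy = [v + random.gauss(0.0, 0.1) for v in x]
    return [sum(noisy), -sum(noisy)]


x = [0.52, 0.13, 0.87]
for model in (smooth_model, quantized_model, randomized_model):
    print(model.__name__,
          'deterministic:', is_deterministic(model, x),
          'non-zero gradient:', has_nonzero_input_gradient(model, x))
```

Only `smooth_model` passes both checks. The quantized model has zero gradients at the probed point and the randomized model is not deterministic, which are exactly the properties that tend to mislead gradient-based attacks.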

RobustBench consists of two parts:

- a website https://robustbench.github.io/ with the leaderboard based on many recent papers (plots below 👇)

- a collection of the most robust models, Model Zoo, which are easy to use for any downstream application (see the tutorial below after FAQ 👇)

FAQ

Q: Wait, how does this leaderboard differ from the AutoAttack leaderboard? 🤔

A: The AutoAttack leaderboard is maintained simultaneously with the RobustBench L2 / Linf leaderboards by Francesco Croce, and all the changes to either of them will be synchronized (given that the 3 restrictions on the models are met for the RobustBench leaderboard). One can see the current L2 / Linf RobustBench leaderboard as a continuously updated fork of the AutoAttack leaderboard extended by adaptive evaluations, Model Zoo, and clear restrictions on the models we accept. And in the future, we will extend RobustBench with other threat models and potentially with a different standardized attack if it's shown to perform better than AutoAttack.

Q: Wait, how is it different from robust-ml.org? 🤔

A: robust-ml.org focuses on adaptive evaluations, but we provide a standardized benchmark. Adaptive evaluations are great (e.g., see Tramer et al., 2020) but very time-consuming and not standardized. Instead, we argue that one can estimate robustness accurately without adaptive attacks, but for this one has to introduce some restrictions on the considered models.

Q: How is it related to libraries like foolbox / cleverhans / advertorch? 🤔

A: These libraries provide implementations of different attacks. Besides the standardized benchmark, RobustBench additionally provides a repository of the most robust models. So you can start using the robust models in one line of code (see the tutorial below 👇).

Q: Why is Lp-robustness still interesting in 2020? 🤔

A: There are numerous interesting applications of Lp-robustness, spanning:

- transfer learning (Salman et al. (2020), Utrera et al. (2020)),

- interpretability (Tsipras et al. (2018), Kaur et al. (2019), Engstrom et al. (2019)),

- security (Tramèr et al. (2018), Saadatpanah et al. (2019)),

- generalization (Xie et al. (2019), Zhu et al. (2019), Bochkovskiy et al. (2020)),

- robustness to unseen perturbations (Xie et al. (2019), Kang et al. (2019)),

- stabilization of GAN training (Zhong et al. (2020)).

Q: Does this benchmark only focus on Lp-robustness? 🤔

A: Lp-robustness is the most well-studied area, so we focus on it first. However, in the future, we plan to extend the benchmark to other perturbation sets beyond Lp-balls.

Q: What about verified adversarial robustness? 🤔

A: We specifically focus on defenses which improve empirical robustness, given the lack of clarity regarding which approaches really improve robustness and which only make some particular attacks unsuccessful. For methods targeting verified robustness, we encourage the readers to check out Salman et al. (2019) and Li et al. (2020).

Q: What if I have a better attack than the one used in this benchmark? 🤔

A: We will be happy to add a better attack or any adaptive evaluation that would complement our default standardized attacks.

Model Zoo: quick tour

The goal of our Model Zoo is to simplify the usage of robust models as much as possible. Check out our Colab notebook here 👉 RobustBench: quick start for a quick introduction. It is also summarized below 👇.

First, install RobustBench:

pip install git+https://github.com/RobustBench/robustbench

Now let's try to load CIFAR-10 and one of the most robust CIFAR-10 models, Carmon2019Unlabeled, which achieves 59.53% robust accuracy evaluated with AutoAttack (AA) under eps=8/255:

from robustbench.data import load_cifar10

x_test, y_test = load_cifar10(n_examples=50)

from robustbench.utils import load_model

model = load_model(model_name='Carmon2019Unlabeled', norm='Linf')

Let's try to evaluate the robustness of this model. We can use any library for this. For example, Foolbox implements many different attacks. We can start with a simple PGD attack:

!pip install -q foolbox

import foolbox as fb

fmodel = fb.PyTorchModel(model, bounds=(0, 1))

_, advs, success = fb.attacks.LinfPGD()(fmodel, x_test.to('cuda:0'), y_test.to('cuda:0'), epsilons=[8/255])

print('Robust accuracy: {:.1%}'.format(1 - success.float().mean()))

>>> Robust accuracy: 58.0%

Wonderful! Can we do better with a more accurate attack?

Let's try to evaluate its robustness with a cheap version of AutoAttack from ICML 2020 that runs only 2 of the 4 attacks (APGD-CE and APGD-DLR):

!pip install -q git+https://github.com/fra31/auto-attack

from autoattack import AutoAttack

adversary = AutoAttack(model, norm='Linf', eps=8/255, version='custom', attacks_to_run=['apgd-ce', 'apgd-dlr'])

adversary.apgd.n_restarts = 1

x_adv = adversary.run_standard_evaluation(x_test, y_test)

>>> initial accuracy: 92.00%

>>> apgd-ce - 1/1 - 19 out of 46 successfully perturbed

>>> robust accuracy after APGD-CE: 54.00% (total time 10.3 s)

>>> apgd-dlr - 1/1 - 1 out of 27 successfully perturbed

>>> robust accuracy after APGD-DLR: 52.00% (total time 17.0 s)

>>> max Linf perturbation: 0.03137, nan in tensor: 0, max: 1.00000, min: 0.00000

>>> robust accuracy: 52.00%

Note that for our standardized evaluation of Linf-robustness we use the full version of AutoAttack, which is slower but more accurate (for that just use adversary = AutoAttack(model, norm='Linf', eps=8/255)).
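The AutoAttack log above can be checked by hand. With n_examples=50, an initial accuracy of 92% corresponds to 46 correctly classified points, and each attack is run only on the points that are still robust. A small sketch of this bookkeeping (the variable names are ours, not AutoAttack's):

```python
n_examples = 50
n_correct = 46            # initial accuracy: 46 / 50 = 92%
broken_by_apgd_ce = 19    # "19 out of 46 successfully perturbed"
broken_by_apgd_dlr = 1    # "1 out of 27 successfully perturbed"

robust_after_ce = n_correct - broken_by_apgd_ce          # 27 points remain robust
robust_after_dlr = robust_after_ce - broken_by_apgd_dlr  # 26 points remain robust

print('initial accuracy: {:.2%}'.format(n_correct / n_examples))
print('after APGD-CE:    {:.2%}'.format(robust_after_ce / n_examples))
print('after APGD-DLR:   {:.2%}'.format(robust_after_dlr / n_examples))
# The reported maximum perturbation equals the budget eps = 8/255.
print('eps = 8/255 =     {:.5f}'.format(8 / 255))
```

The printed values reproduce the 92.00%, 54.00%, 52.00% and 0.03137 figures from the log.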

What about other types of perturbations? Is Lp-robustness useful there? We can evaluate the available models on more general perturbations. For example, let's take images corrupted by fog perturbations from CIFAR-10-C with the highest level of severity (5). Do different Linf-robust models perform better on them?

from robustbench.data import load_cifar10c

from robustbench.utils import clean_accuracy

corruptions = ['fog']

x_test, y_test = load_cifar10c(n_examples=1000, corruptions=corruptions, severity=5)

for model_name in ['Standard', 'Engstrom2019Robustness', 'Rice2020Overfitting', 'Carmon2019Unlabeled']:
    model = load_model(model_name)
    acc = clean_accuracy(model, x_test, y_test)
    print('Model: {}, CIFAR-10-C accuracy: {:.1%}'.format(model_name, acc))

>>> Model: Standard, CIFAR-10-C accuracy: 74.4%

>>> Model: Engstrom2019Robustness, CIFAR-10-C accuracy: 38.8%

>>> Model: Rice2020Overfitting, CIFAR-10-C accuracy: 22.0%

>>> Model: Carmon2019Unlabeled, CIFAR-10-C accuracy: 31.1%

As we can see, all these Linf-robust models perform considerably worse than the standard model on this type of corruption. This curious phenomenon was first noticed in "Adversarial Examples Are a Natural Consequence of Test Error in Noise" and explained from the frequency perspective in "A Fourier Perspective on Model Robustness in Computer Vision".

However, on average adversarial training does help on CIFAR-10-C. One can check this easily by loading all types of corruptions via load_cifar10c(n_examples=1000, severity=5) and repeating the evaluation on them.
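The check described above could be scripted roughly as follows. This is a sketch under assumptions: `average_cifar10c_accuracy` and `format_results` are hypothetical helper names, and only `load_cifar10c`, `load_model` and `clean_accuracy` come from RobustBench, called in the same way as in the fog example. The RobustBench imports are deferred into the function so that defining it triggers no download.

```python
def average_cifar10c_accuracy(model_names, n_examples=1000, severity=5):
    """Return {model_name: accuracy} measured on all CIFAR-10-C corruption types."""
    # Imported lazily: data and checkpoints are only fetched when this is called.
    from robustbench.data import load_cifar10c
    from robustbench.utils import clean_accuracy, load_model

    # Omitting `corruptions` loads all corruption types, as described above.
    x_test, y_test = load_cifar10c(n_examples=n_examples, severity=severity)
    results = {}
    for model_name in model_names:
        model = load_model(model_name)
        results[model_name] = clean_accuracy(model, x_test, y_test)
    return results


def format_results(results):
    """Render one report line per model, best accuracy first."""
    ranked = sorted(results.items(), key=lambda item: item[1], reverse=True)
    return ['Model: {}, CIFAR-10-C accuracy: {:.1%}'.format(name, acc)
            for name, acc in ranked]
```

Calling `format_results(average_cifar10c_accuracy(['Standard', 'Carmon2019Unlabeled']))` then gives a ranking over the full corruption set instead of fog alone.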

Model Zoo

In order to use a model, you just need to know its ID, e.g. Carmon2019Unlabeled, and to run:

model = load_model(model_name='Carmon2019Unlabeled', norm='Linf')

which automatically downloads the model (all models are defined in model_zoo/models.py).

You can find all available model IDs in the table below (note that the full leaderboard contains more models):

Linf

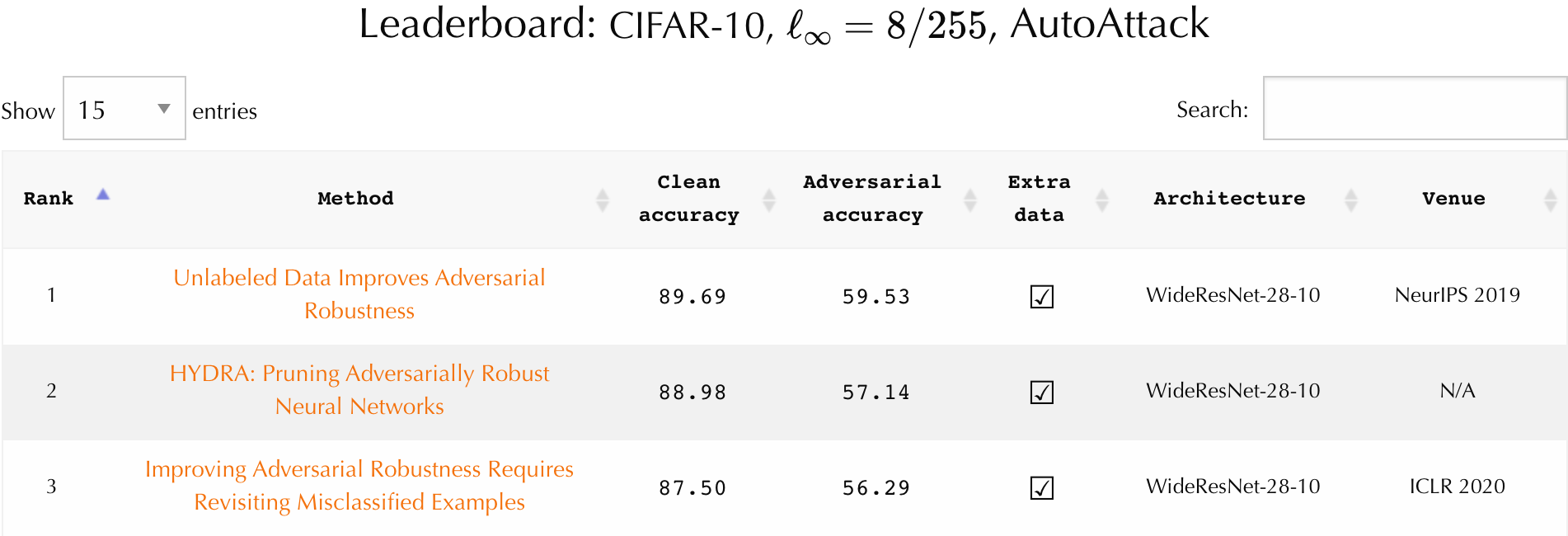

| # | Model ID | Paper | Clean accuracy | Robust accuracy | Architecture | Venue |

|---|---|---|---|---|---|---|

| 1 | Wu2020Adversarial_extra | Adversarial Weight Perturbation Helps Robust Generalization | 88.25% | 60.04% | WideResNet-28-10 | NeurIPS 2020 |

| 2 | Carmon2019Unlabeled | Unlabeled Data Improves Adversarial Robustness | 89.69% | 59.53% | WideResNet-28-10 | NeurIPS 2019 |

| 3 | Sehwag2020Hydra | HYDRA: Pruning Adversarially Robust Neural Networks | 88.98% | 57.14% | WideResNet-28-10 | NeurIPS 2020 |

| 4 | Wang2020Improving | Improving Adversarial Robustness Requires Revisiting Misclassified Examples | 87.50% | 56.29% | WideResNet-28-10 | ICLR 2020 |

| 5 | Wu2020Adversarial | Adversarial Weight Perturbation Helps Robust Generalization | 85.36% | 56.17% | WideResNet-34-10 | NeurIPS 2020 |

| 6 | Hendrycks2019Using | Using Pre-Training Can Improve Model Robustness and Uncertainty | 87.11% | 54.92% | WideResNet-28-10 | ICML 2019 |

| 7 | Pang2020Boosting | Boosting Adversarial Training with Hypersphere Embedding | 85.14% | 53.74% | WideResNet-34-20 | NeurIPS 2020 |

| 8 | Zhang2020Attacks | Attacks Which Do Not Kill Training Make Adversarial Learning Stronger | 84.52% | 53.51% | WideResNet-34-10 | ICML 2020 |

| 9 | Rice2020Overfitting | Overfitting in adversarially robust deep learning | 85.34% | 53.42% | WideResNet-34-20 | ICML 2020 |

| 10 | Huang2020Self | Self-Adaptive Training: beyond Empirical Risk Minimization | 83.48% | 53.34% | WideResNet-34-10 | NeurIPS 2020 |

| 11 | Zhang2019Theoretically | Theoretically Principled Trade-off between Robustness and Accuracy | 84.92% | 53.08% | WideResNet-34-10 | ICML 2019 |

| 12 | Chen2020Adversarial | Adversarial Robustness: From Self-Supervised Pre-Training to Fine-Tuning | 86.04% | 51.56% | ResNet-50 (3x ensemble) | CVPR 2020 |

| 13 | Engstrom2019Robustness | Robustness library | 87.03% | 49.25% | ResNet-50 | Unpublished |

| 14 | Zhang2019You | You Only Propagate Once: Accelerating Adversarial Training via Maximal Principle | 87.20% | 44.83% | WideResNet-34-10 | NeurIPS 2019 |

| 15 | Wong2020Fast | Fast is better than free: Revisiting adversarial training | 83.34% | 43.21% | ResNet-18 | ICLR 2020 |

| 16 | Ding2020MMA | MMA Training: Direct Input Space Margin Maximization through Adversarial Training | 84.36% | 41.44% | WideResNet-28-4 | ICLR 2020 |

| 17 | Standard | Standardly trained model | 94.78% | 0.00% | WideResNet-28-10 | Unpublished |

L2

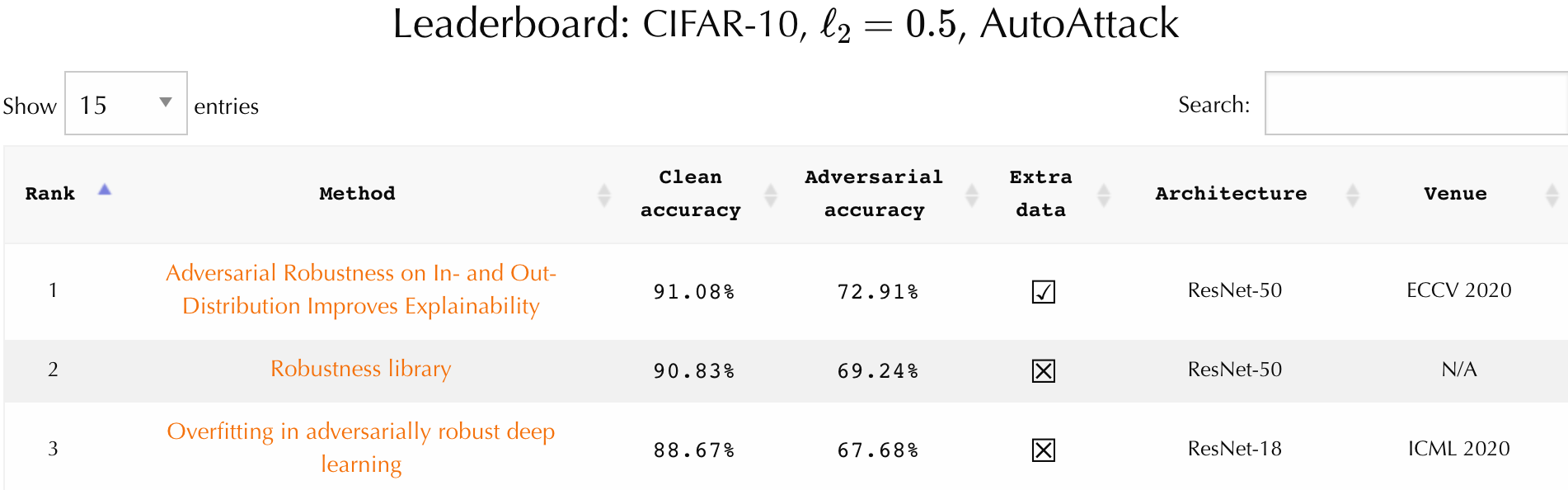

| # | Model ID | Paper | Clean accuracy | Robust accuracy | Architecture | Venue |

|---|---|---|---|---|---|---|

| 1 | Wu2020Adversarial | Adversarial Weight Perturbation Helps Robust Generalization | 88.51% | 73.66% | WideResNet-34-10 | NeurIPS 2020 |

| 2 | Augustin2020Adversarial | Adversarial Robustness on In- and Out-Distribution Improves Explainability | 91.08% | 72.91% | ResNet-50 | ECCV 2020 |

| 3 | Engstrom2019Robustness | Robustness library | 90.83% | 69.24% | ResNet-50 | Unpublished |

| 4 | Rice2020Overfitting | Overfitting in adversarially robust deep learning | 88.67% | 67.68% | ResNet-18 | ICML 2020 |

| 5 | Rony2019Decoupling | Decoupling Direction and Norm for Efficient Gradient-Based L2 Adversarial Attacks and Defenses | 89.05% | 66.44% | WideResNet-28-10 | CVPR 2019 |

| 6 | Ding2020MMA | MMA Training: Direct Input Space Margin Maximization through Adversarial Training | 88.02% | 66.09% | WideResNet-28-4 | ICLR 2020 |

| 7 | Standard | Standardly trained model | 94.78% | 0.00% | WideResNet-28-10 | Unpublished |

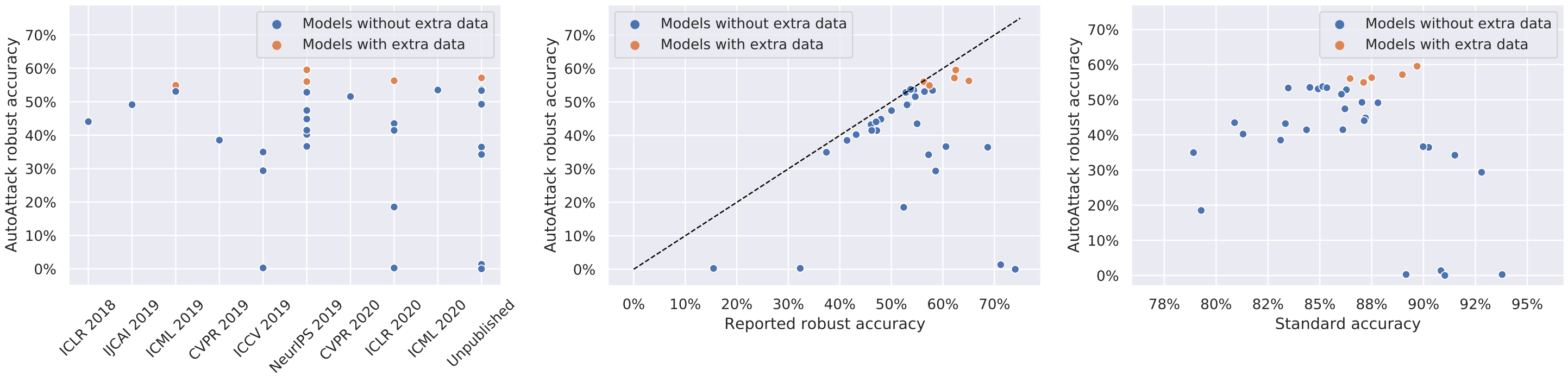

Notebooks

We host all the notebooks at Google Colab:

- RobustBench: quick start: a quick tutorial to get started that illustrates the main features of RobustBench.

- RobustBench: json stats: various plots based on the jsons from model_info (robustness over venues, robustness vs accuracy, etc.).

Feel free to suggest a new notebook based on the Model Zoo or the jsons from model_info. We are very interested in collecting new insights about benefits and tradeoffs between different perturbation types.
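As a starting point for such a notebook, the sketch below ranks models by robust accuracy from a directory of json files. It assumes one `<ModelID>.json` per model with the string-valued keys `clean_acc` and `AA`, as in the Rice2020Overfitting example in the "Adding a new model" section. The function `load_leaderboard` is a hypothetical helper, and the demo writes three sample files (numbers copied from the Linf table above) into a temporary directory instead of reading the real model_info folder.

```python
import json
import tempfile
from pathlib import Path


def load_leaderboard(model_info_dir):
    """Read every <ModelID>.json in a directory and rank the models by 'AA'."""
    rows = []
    for path in sorted(Path(model_info_dir).glob('*.json')):
        with open(path) as f:
            info = json.load(f)
        # Accuracies are stored as strings in the json files, e.g. "53.42".
        rows.append((path.stem, float(info['clean_acc']), float(info['AA'])))
    return sorted(rows, key=lambda row: row[2], reverse=True)


# Demo on a temporary directory with three sample entries.
with tempfile.TemporaryDirectory() as tmp_dir:
    samples = {
        'Standard': {'clean_acc': '94.78', 'AA': '0.00'},
        'Rice2020Overfitting': {'clean_acc': '85.34', 'AA': '53.42'},
        'Carmon2019Unlabeled': {'clean_acc': '89.69', 'AA': '59.53'},
    }
    for model_id, info in samples.items():
        (Path(tmp_dir) / (model_id + '.json')).write_text(json.dumps(info))
    leaderboard = load_leaderboard(tmp_dir)

for rank, (model_id, clean_acc, robust_acc) in enumerate(leaderboard, start=1):
    print('{} {:<22} clean {:.2f}%  robust {:.2f}%'.format(rank, model_id, clean_acc, robust_acc))
```

The same rows can be passed to a plotting library to reproduce a robustness vs accuracy scatter plot.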

How to contribute

Contributions to RobustBench are very welcome! You can help to improve RobustBench:

- Are you an author of a recent paper focusing on improving adversarial robustness? Consider adding new models (see the instructions below 👇).

- Do you have in mind some better standardized attack or an adaptive evaluation? Do you want to extend RobustBench to other threat models? We'll be glad to discuss that!

- Do you have an idea how to make the existing codebase better? Just open a pull request or create an issue and we'll be happy to discuss potential changes.

Adding a new model

Public model submission (leaderboard + Model Zoo)

In order to add a new model, submit a pull request where you specify the claim, model definition, and model checkpoint:

- Claim: model_info/<Name><Year><FirstWordOfTheTitle>.json: follow the convention of the existing json files to specify the information to be displayed on the website. Here is an example from model_info/Rice2020Overfitting.json:

{
  "link": "https://arxiv.org/abs/2002.11569",
  "name": "Overfitting in adversarially robust deep learning",
  "authors": "Leslie Rice, Eric Wong, J. Zico Kolter",
  "additional_data": false,
  "number_forward_passes": 1,
  "dataset": "cifar10",
  "venue": "ICML 2020",
  "architecture": "WideResNet-34-20",
  "eps": "8/255",
  "clean_acc": "85.34",
  "reported": "58",
  "AA": "53.42"
}

- Model definition: robustbench/model_zoo/models.py: add your model definition as a new class. For standard architectures (e.g., WideResNet) consider inheriting the class defined in wide_resnet.py or resnet.py. For example:

class Rice2020OverfittingNet(WideResNet):
    def __init__(self, depth, widen_factor):
        super(Rice2020OverfittingNet, self).__init__(depth=depth, widen_factor=widen_factor, sub_block1=False)
        # Per-channel CIFAR-10 mean and standard deviation used to normalize the input.
        self.mu = torch.Tensor([0.4914, 0.4822, 0.4465]).float().view(3, 1, 1).cuda()
        self.sigma = torch.Tensor([0.2471, 0.2435, 0.2616]).float().view(3, 1, 1).cuda()

    def forward(self, x):
        # Normalize inside the model so that it accepts inputs in [0, 1].
        x = (x - self.mu) / self.sigma
        return super(Rice2020OverfittingNet, self).forward(x)

- Model checkpoint: robustbench/model_zoo/models.py: also add your model entry in model_dicts, which should contain the Google Drive ID of your PyTorch model so that it can be downloaded automatically from Google Drive:

('Rice2020Overfitting', {
    'model': Rice2020OverfittingNet(34, 20),
    'gdrive_id': '1vC_Twazji7lBjeMQvAD9uEQxi9Nx2oG-',
})
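Before opening a pull request, a claim file can be sanity-checked locally. The sketch below is not part of RobustBench: `make_model_id` and `missing_keys` are hypothetical helpers, and the set of required keys is an assumption inferred from the single Rice2020Overfitting example above. Note also that existing IDs are chosen by hand and do not always follow the naming rule mechanically (e.g. Sehwag2020Hydra for a title that starts with "HYDRA:").

```python
import json

# Assumption: the keys of the Rice2020Overfitting example are all required.
REQUIRED_KEYS = {
    'link', 'name', 'authors', 'additional_data', 'number_forward_passes',
    'dataset', 'venue', 'architecture', 'eps', 'clean_acc', 'reported', 'AA',
}


def make_model_id(first_author_surname, year, title):
    """Build <Name><Year><FirstWordOfTheTitle> from the paper metadata."""
    first_word = title.split()[0]
    # Drop punctuation such as a trailing colon.
    first_word = ''.join(ch for ch in first_word if ch.isalnum())
    return '{}{}{}'.format(first_author_surname, year, first_word)


def missing_keys(claim_json):
    """Return the sorted list of required keys that a claim does not define."""
    claim = json.loads(claim_json)
    return sorted(REQUIRED_KEYS - set(claim))


claim_json = json.dumps({
    'link': 'https://arxiv.org/abs/2002.11569',
    'name': 'Overfitting in adversarially robust deep learning',
    'authors': 'Leslie Rice, Eric Wong, J. Zico Kolter',
    'additional_data': False,
    'number_forward_passes': 1,
    'dataset': 'cifar10',
    'venue': 'ICML 2020',
    'architecture': 'WideResNet-34-20',
    'eps': '8/255',
    'clean_acc': '85.34',
    'reported': '58',
    'AA': '53.42',
})

model_id = make_model_id('Rice', 2020, 'Overfitting in adversarially robust deep learning')
print(model_id + '.json', 'missing keys:', missing_keys(claim_json))
```

An empty list of missing keys means the claim has the same fields as the existing example.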

Private model submission (leaderboard only)

If you want to keep your checkpoints private for some reason, you can also submit your claim, model definition, and model checkpoint directly by email to adversarial.benchmark@gmail.com. In this case, we will add your model to the leaderboard but not to the Model Zoo, and we will not share your checkpoints publicly.

Automatic tests

In order to run the tests, run:

- python -m unittest discover tests -t . -v for fast testing

- RUN_SLOW=true python -m unittest discover tests -t . -v for slower testing

For example, one can test whether the clean accuracy on 200 examples exceeds some threshold (70%) or whether the clean accuracy on 10,000 examples for each model matches the values from the jsons located at robustbench/model_info.

Note that one can specify some configurations like batch_size, data_dir, model_dir in tests/config.py for running the tests.
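The split between fast and slow tests can be sketched with the standard unittest module. This is an illustration and not the repository's actual test code: the `RUN_SLOW` handling is an assumption about how such a switch could be read, `stub_predictions` is a hypothetical stand-in for a real model, and the reported value 0.80 is a placeholder for a number read from a json file.

```python
import os
import unittest

# Assumption: slow tests are enabled when RUN_SLOW is set to "true".
RUN_SLOW = os.environ.get('RUN_SLOW', 'false').lower() == 'true'


def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


def stub_predictions(labels, error_every):
    """Stand-in for a model: exactly one wrong prediction in every `error_every`."""
    return [(y + 1) % 10 if i % error_every == 0 else y for i, y in enumerate(labels)]


class CleanAccuracyTest(unittest.TestCase):
    def test_fast_threshold(self):
        # Fast check: clean accuracy on 200 examples must exceed 70%.
        labels = [i % 10 for i in range(200)]
        self.assertGreater(accuracy(stub_predictions(labels, 5), labels), 0.70)

    @unittest.skipUnless(RUN_SLOW, 'set RUN_SLOW=true to run slow tests')
    def test_slow_matches_reported(self):
        # Slow check: clean accuracy on 10,000 examples must match the recorded value.
        labels = [i % 10 for i in range(10000)]
        reported = 0.80  # placeholder for a value read from a json file
        self.assertAlmostEqual(accuracy(stub_predictions(labels, 5), labels), reported, places=4)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(CleanAccuracyTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

With this layout the expensive check is skipped by default and only runs when the environment variable is set.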

Citation

Would you like to reference the RobustBench leaderboard, or are you using models from the Model Zoo? Then consider citing our whitepaper:

@article{croce2020robustbench,
  title={RobustBench: a standardized adversarial robustness benchmark},
  author={Croce, Francesco and Andriushchenko, Maksym and Sehwag, Vikash and Flammarion, Nicolas and Chiang, Mung and Mittal, Prateek and Hein, Matthias},
  journal={arXiv preprint arXiv:2010.09670},
  year={2020}
}

Contact

Feel free to contact us about anything related to RobustBench by creating an issue, opening a pull request, or sending an email to adversarial.benchmark@gmail.com.